APPLIES TO: Azure Data Factory, Azure Synapse Analytics

In a data integration solution, loading data incrementally (delta loading) after an initial full data load is a widely used scenario. The tutorials in this section show different ways to load data incrementally by using Azure Data Factory.

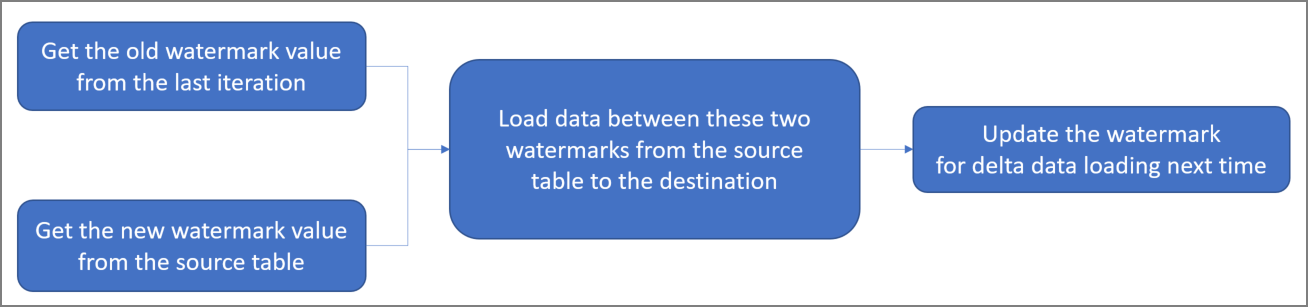

Delta data loading from database by using a watermark

In this case, you define a watermark in your source database. A watermark is a column that holds the last-updated timestamp or an incrementing key. The delta loading solution loads the data that changed between an old watermark and a new watermark. The workflow for this approach is depicted in the following diagram:

For step-by-step instructions, see the following tutorials:

- Incrementally copy data from one table in Azure SQL Database to Azure Blob storage

- Incrementally copy data from multiple tables in a SQL Server instance to Azure SQL Database
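The watermark approach described above can be sketched in a few lines. This is a minimal illustration, not the pipeline the tutorials build: it uses Python's built-in `sqlite3` module as a stand-in source database, and the table name `source_table`, the column `LastModifyTime`, and the helper `load_delta` are all assumptions made for the example.

```python
import sqlite3


def load_delta(conn, old_watermark):
    """Return (rows changed after old_watermark, new watermark value)."""
    cur = conn.cursor()
    # New watermark: the highest LastModifyTime currently in the source table.
    new_watermark = cur.execute(
        "SELECT MAX(LastModifyTime) FROM source_table"
    ).fetchone()[0]
    if new_watermark is None:
        # Empty source table: nothing to load, keep the old watermark.
        return [], old_watermark
    # Delta: rows after the old watermark, up to and including the new one.
    rows = cur.execute(
        "SELECT Id, Name, LastModifyTime FROM source_table "
        "WHERE LastModifyTime > ? AND LastModifyTime <= ? ORDER BY Id",
        (old_watermark, new_watermark),
    ).fetchall()
    return rows, new_watermark


# Hypothetical sample data; ISO 8601 strings sort in chronological order.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE source_table "
    "(Id INTEGER PRIMARY KEY, Name TEXT, LastModifyTime TEXT)"
)
conn.executemany(
    "INSERT INTO source_table VALUES (?, ?, ?)",
    [
        (1, "aaaa", "2024-01-01T00:00:00"),
        (2, "bbbb", "2024-01-02T00:00:00"),
        (3, "cccc", "2024-01-03T00:00:00"),
    ],
)

rows, watermark = load_delta(conn, "2024-01-01T00:00:00")
print(rows)       # rows with Id 2 and 3
print(watermark)  # value to store as the old watermark for the next run
```

After each run, the returned watermark would be persisted and passed in as `old_watermark` for the next run, so that a row is picked up only once per change.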

For templates, see the following:

Delta data loading from SQL DB by using the Change Tracking technology

Change Tracking technology is a lightweight solution in SQL Server and Azure SQL Database that provides an efficient change tracking mechanism for applications. It enables an application to easily identify data that was inserted, updated, or deleted.

The workflow for this approach is depicted in the following diagram:

![]()
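The idea behind change tracking, identifying which rows were inserted, updated, or deleted since the last synchronization, can be modeled with a toy in-memory table. This sketch is only a conceptual model and does not call or reproduce the SQL Server Change Tracking API; the class `TrackedTable` and its methods are hypothetical, and it simplifies by remembering only the most recent operation per key.

```python
from dataclasses import dataclass, field


@dataclass
class TrackedTable:
    """Toy table that records the version and type of each key's last change."""

    rows: dict = field(default_factory=dict)
    changes: dict = field(default_factory=dict)  # key -> (version, operation)
    version: int = 0

    def _record(self, key, operation):
        # Every change bumps a table-wide version counter.
        self.version += 1
        self.changes[key] = (self.version, operation)

    def insert(self, key, value):
        self.rows[key] = value
        self._record(key, "I")

    def update(self, key, value):
        self.rows[key] = value
        self._record(key, "U")

    def delete(self, key):
        del self.rows[key]
        self._record(key, "D")

    def changes_since(self, last_sync_version):
        """Return (key, operation, current value or None) for later changes."""
        return sorted(
            (key, op, self.rows.get(key))
            for key, (ver, op) in self.changes.items()
            if ver > last_sync_version
        )


table = TrackedTable()
table.insert(1, "alice")
table.insert(2, "bob")
last_sync = table.version  # pretend the initial full load happened here

table.update(1, "alice2")
table.delete(2)
table.insert(3, "carol")

# Only the keys touched after last_sync are reported.
print(table.changes_since(last_sync))
```

A delta load would apply the reported changes to the destination and then store the current version as the new synchronization point.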

For step-by-step instructions, see the following tutorial:

Loading new and changed files only by using LastModifiedDate

You can copy only the new and changed files to the destination store by using LastModifiedDate. ADF scans all the files in the source store, filters them by their LastModifiedDate, and copies only the files that are new or updated since the last run. If ADF has to scan a large number of files but copies only a few of them to the destination, the run still takes a long time because of the file scanning process.
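The filtering step can be illustrated on a local file system. This is a rough sketch, not ADF's implementation: the function `files_modified_between`, the half-open time window, and the sample file names are assumptions for the example. It also returns how many files were scanned, which shows why the scan cost grows with the size of the source store even when few files are selected.

```python
import os
import tempfile
from datetime import datetime, timezone


def files_modified_between(root, window_start, window_end):
    """Scan every file under root; keep those with start <= mtime < end."""
    selected = []
    scanned = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            scanned += 1  # every file is inspected, selected or not
            modified = datetime.fromtimestamp(
                os.path.getmtime(path), tz=timezone.utc
            )
            if window_start <= modified < window_end:
                selected.append(path)
    return sorted(selected), scanned


with tempfile.TemporaryDirectory() as root:
    # Hypothetical files with explicitly set modification times.
    stamps = {
        "old.csv": datetime(2024, 1, 1, tzinfo=timezone.utc),
        "new.csv": datetime(2024, 1, 2, 12, tzinfo=timezone.utc),
        "newer.csv": datetime(2024, 1, 3, tzinfo=timezone.utc),
    }
    for name, stamp in stamps.items():
        path = os.path.join(root, name)
        with open(path, "w") as handle:
            handle.write("data\n")
        os.utime(path, (stamp.timestamp(), stamp.timestamp()))

    selected, scanned = files_modified_between(
        root,
        datetime(2024, 1, 2, tzinfo=timezone.utc),
        datetime(2024, 1, 3, tzinfo=timezone.utc),
    )
    print([os.path.basename(p) for p in selected], scanned)
```

In this sample, three files are scanned but only one falls inside the window, which mirrors the cost described above.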

For step-by-step instructions, see the following tutorial:

For templates, see the following:

Loading new files only by using time partitioned folder or file name

You can copy only new files when the files or folders have already been time partitioned, with timeslice information as part of the file or folder name (for example, /yyyy/mm/dd/file.csv). It's the most performant approach for incrementally loading new files.
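With time-partitioned folders, the folders to load can be computed directly from the time slice instead of being discovered by a scan. The sketch below assumes a daily slice and the `/yyyy/mm/dd/` layout from the example above; the helper names `partition_folder` and `folders_to_load` are hypothetical.

```python
from datetime import date, timedelta


def partition_folder(slice_day):
    """Build the folder path for one daily time slice, such as /2024/03/01/."""
    return slice_day.strftime("/%Y/%m/%d/")


def folders_to_load(last_loaded_day, today):
    """Folders for each day after last_loaded_day, up to and including today."""
    days = (today - last_loaded_day).days
    return [
        partition_folder(last_loaded_day + timedelta(days=offset))
        for offset in range(1, days + 1)
    ]


# Hypothetical dates: the last successful load covered 2024-02-27.
for folder in folders_to_load(date(2024, 2, 27), date(2024, 3, 1)):
    print(folder)
```

Because each run addresses only the folders named for its time slice, no file outside those folders needs to be listed or inspected.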

For step-by-step instructions, see the following tutorial:

Related content

Advance to the following tutorial: