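Living in a massively interconnected society means that, at some point, you become part of a social network. You use social networks to keep in touch with friends, colleagues, and family, and sometimes to share your passions with people who have common interests.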

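As an engineer or developer, you might have wondered how these networks store and interconnect data. Or you might even have been tasked with creating or architecting a new social network for a specific niche market. That's when the big question arises: how is all this data stored?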

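Suppose you're creating a new and stylish social network where users can post articles with related media, such as pictures, videos, or even music. Users can comment on posts and give points as ratings. The main website landing page will show a feed of posts that users can see and interact with. This doesn't sound complex, and for the sake of simplicity, let's stop there. (You could go further into custom user feeds shaped by relationships, but that goes beyond the goal of this article.)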

So, how do you decide how and where to store this data?

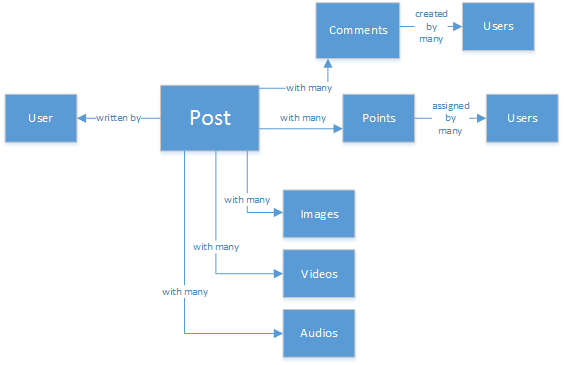

你可能有使用 SQL 数据库的经验,或者了解数据的关系模型。 可开始绘制如下内容:

A perfectly standard and pretty data structure, but one that doesn't scale.

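Don't get me wrong, I've worked with SQL databases all my life. They're great, but like every pattern, practice, and software platform, they aren't right for every scenario.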

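Why isn't SQL the best choice in this scenario? Let's look at the structure of a single post. If I wanted to show a post on a website or in an application, I'd have to run a query that joins eight tables(!) just to display one post. Now picture a stream of posts that load dynamically and appear on the screen, and you can see where I'm going.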

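You could use an enormous SQL instance with enough compute power to resolve thousands of queries with many joins to serve your content. But why would you, when a simpler solution exists?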

The NoSQL road

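This article guides you through modeling your social platform's data cost-effectively with Azure Cosmos DB, Azure's NoSQL database. It also shows how to use other Azure Cosmos DB features, such as the API for Gremlin. With a NoSQL approach that stores data in JSON format and applies denormalization, the previously complicated post can be transformed into a single document: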

```json
{
    "id":"ew12-res2-234e-544f",
    "title":"post title",
    "date":"2016-01-01",
    "body":"this is an awesome post stored on NoSQL",
    "createdBy":User,
    "images":["https://myfirstimage.png","https://mysecondimage.png"],
    "videos":[
        {"url":"https://myfirstvideo.mp4", "title":"The first video"},
        {"url":"https://mysecondvideo.mp4", "title":"The second video"}
    ],
    "audios":[
        {"url":"https://myfirstaudio.mp3", "title":"The first audio"},
        {"url":"https://mysecondaudio.mp3", "title":"The second audio"}
    ]
}
```

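It can be retrieved with a single query and no joins. This query is simple and straightforward, and, budget-wise, it needs fewer resources to achieve a better result.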

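Azure Cosmos DB's automatic indexing ensures that all properties are indexed, and the automatic indexing can even be customized. This schema-free approach lets you store documents with different and dynamic structures. Maybe tomorrow you'll want posts to have a list of categories or hashtags associated with them? Azure Cosmos DB handles new documents with the added attributes without any extra work on our part.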

Comments on a post can be treated as other posts with a parent property. (This practice simplifies object mapping.)

```json
{
    "id":"1234-asd3-54ts-199a",
    "title":"Awesome post!",
    "date":"2016-01-02",
    "createdBy":User2,
    "parent":"ew12-res2-234e-544f"
}

{
    "id":"asd2-fee4-23gc-jh67",
    "title":"Ditto!",
    "date":"2016-01-03",
    "createdBy":User3,
    "parent":"ew12-res2-234e-544f"
}
```
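Because a comment is just a post with a `parent` property, fetching a thread becomes a filter on that property. Below is a minimal in-memory sketch in Python, assuming a container aliased as `posts`. `comments_for`, `comments_query`, and the sample documents are hypothetical, and the returned parameter list only mirrors the name/value shape the azure-cosmos Python SDK accepts for query parameters; nothing here calls Azure.

```python
# Hypothetical sample documents: a post plus two comments that point at it through "parent".
documents = [
    {"id": "ew12-res2-234e-544f", "title": "post title", "date": "2016-01-01"},
    {"id": "1234-asd3-54ts-199a", "title": "Awesome post!", "date": "2016-01-02",
     "parent": "ew12-res2-234e-544f"},
    {"id": "asd2-fee4-23gc-jh67", "title": "Ditto!", "date": "2016-01-03",
     "parent": "ew12-res2-234e-544f"},
]


def comments_for(post_id, docs):
    """In-memory equivalent of filtering on the parent property, oldest comment first."""
    comments = [d for d in docs if d.get("parent") == post_id]
    # ISO 8601 dates ("2016-01-02") sort chronologically as plain strings.
    return sorted(comments, key=lambda d: d["date"])


def comments_query(post_id):
    """Return query text plus parameters in the name/value shape used by the azure-cosmos SDK."""
    query = "SELECT * FROM posts p WHERE p.parent = @parentId"
    parameters = [{"name": "@parentId", "value": post_id}]
    return query, parameters
```

Passing the ID as a parameter rather than splicing it into the query text avoids quoting and injection problems.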

And all social interactions can be stored as counters on a single, separate object:

```json
{
    "id":"dfe3-thf5-232s-dse4",
    "post":"ew12-res2-234e-544f",
    "comments":2,
    "likes":10,
    "points":200
}
```

Creating feeds is just a matter of creating documents that hold a list of post IDs in a given relevance order:

```json
[
    {"relevance":9, "post":"ew12-res2-234e-544f"},
    {"relevance":8, "post":"fer7-mnb6-fgh9-2344"},
    {"relevance":7, "post":"w34r-qeg6-ref6-8565"}
]
```

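You could have a "latest" stream with posts ordered by creation date, or a "hottest" stream with the posts that got the most likes in the last 24 hours. You could even implement a custom stream for each user based on logic such as followers and interests. Each one is still a list of posts. How to build these lists is a separate question, but read performance stays unhindered: once you have one of these lists, you issue a single query to Azure Cosmos DB using the IN keyword to fetch a page of posts at a time.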
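The read path described above can be sketched as follows: sort the feed document by relevance, slice one page of IDs, and build one parameterized `IN` query for that page. This is a hypothetical illustration in plain Python (the helper names and the `posts` alias are assumptions), not Azure Cosmos DB SDK code.

```python
# Hypothetical feed document: post IDs with a relevance score, like the feed shown above.
feed = [
    {"relevance": 7, "post": "w34r-qeg6-ref6-8565"},
    {"relevance": 9, "post": "ew12-res2-234e-544f"},
    {"relevance": 8, "post": "fer7-mnb6-fgh9-2344"},
]


def feed_page(entries, page, page_size):
    """Return the post IDs for one page of the feed, most relevant first."""
    ordered = sorted(entries, key=lambda e: e["relevance"], reverse=True)
    start = page * page_size
    return [e["post"] for e in ordered[start:start + page_size]]


def page_query(post_ids):
    """Build a single parameterized query that fetches every post on the page with IN."""
    if not post_ids:
        raise ValueError("an IN list needs at least one ID")
    names = [f"@id{i}" for i in range(len(post_ids))]
    query = f"SELECT * FROM posts p WHERE p.id IN ({', '.join(names)})"
    parameters = [{"name": n, "value": v} for n, v in zip(names, post_ids)]
    return query, parameters


def order_like_feed(posts, post_ids):
    """IN does not guarantee result order, so restore the feed order on the client."""
    position = {pid: i for i, pid in enumerate(post_ids)}
    return sorted(posts, key=lambda p: position[p["id"]])
```

Re-sorting on the client is a deliberate choice: the feed document, not the query, owns the ordering, so the same query shape serves the "latest", "hottest", and per-user streams.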

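The feed streams can be built with background processes in Azure App Service. Once a post is created, you can trigger background processing through Azure Storage queues and propagate the post into streams according to your own custom logic.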
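A minimal sketch of that fan-out, assuming Python's in-process `queue.Queue` as a stand-in for an Azure Storage queue and in-memory dictionaries as stand-ins for the follower and feed documents. All names are hypothetical; a real worker would run as a background process and write feed documents back to Azure Cosmos DB.

```python
import queue
from collections import defaultdict

# Stand-in for an Azure Storage queue (assumption for this sketch).
post_created = queue.Queue()

# Hypothetical follower lists and per-user feeds, kept in memory.
followers = {"dse4-qwe2-ert4-aad2": ["ewr5-232d-tyrg-iuo2", "qejh-2345-sdf1-ytg5"]}
feeds = defaultdict(list)


def publish_post(post_id, author_id):
    """Web tier: after saving the post, enqueue a small message and return immediately."""
    post_created.put({"post": post_id, "author": author_id})


def drain_queue():
    """Background worker: push each new post to the front of every follower's feed."""
    while not post_created.empty():
        message = post_created.get()
        for follower_id in followers.get(message["author"], []):
            feeds[follower_id].insert(0, message["post"])
        post_created.task_done()
```

Publishing only enqueues a small message, so the request that creates the post stays fast however many followers the author has; the expensive propagation happens later in the worker.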

Points and likes on a post can be processed in a deferred manner with this same technique, creating an eventually consistent environment.
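The same deferred idea applied to the counters document shown earlier: a batch of queued like and point events is folded into one update. This is a sketch in plain Python with hypothetical event tuples. Until the batch is applied, readers see the old totals, which is exactly the eventual consistency being traded for cheaper writes.

```python
from collections import Counter

# Counters document with the same shape as the social-interactions example.
counters = {"id": "dfe3-thf5-232s-dse4", "post": "ew12-res2-234e-544f",
            "comments": 2, "likes": 10, "points": 200}

# Hypothetical events recorded by the front end and processed later in a batch.
pending_events = [("likes", 1), ("points", 5), ("likes", 1)]


def apply_events(doc, events):
    """Fold a batch of queued events into a new copy of the counters document."""
    totals = Counter()
    for field, amount in events:
        totals[field] += amount
    updated = dict(doc)  # leave the original untouched; the caller writes `updated` back once
    for field, amount in totals.items():
        updated[field] = updated.get(field, 0) + amount
    return updated
```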

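Followers are trickier. Azure Cosmos DB has a document size limit, and reading or writing large documents can hurt your application's scalability. So you might consider storing followers as a document with this structure: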

```json
{
    "id":"234d-sd23-rrf2-552d",
    "followersOf": "dse4-qwe2-ert4-aad2",
    "followers":[
        "ewr5-232d-tyrg-iuo2",
        "qejh-2345-sdf1-ytg5",
        //...
        "uie0-4tyg-3456-rwjh"
    ]
}
```

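This structure might work for a user with a few thousand followers. If a celebrity joins the ranks, however, this approach leads to a large document that might eventually hit the document size cap.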
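To see roughly where the cap bites, this sketch measures the serialized size of a followers document as it grows. It assumes the 2 MB maximum item size listed in the Azure Cosmos DB service quotas (treated here as 2 * 1024 * 1024 bytes; check the current limits) and synthetic 19-character IDs like the ones in the sample. The helper name is hypothetical.

```python
import json

# Assumed item size limit: 2 MB, measured as the UTF-8 length of the JSON document.
MAX_ITEM_BYTES = 2 * 1024 * 1024


def followers_doc_size(follower_count):
    """Serialized size in bytes of a followers document holding follower_count IDs."""
    doc = {
        "id": "234d-sd23-rrf2-552d",
        "followersOf": "dse4-qwe2-ert4-aad2",
        # Synthetic 19-character IDs, the same length as the sample IDs above.
        "followers": [f"{i:019d}" for i in range(follower_count)],
    }
    # Compact separators: no spaces, as a size-conscious client would serialize it.
    return len(json.dumps(doc, separators=(",", ":")).encode("utf-8"))
```

With compact JSON, each additional ID of this length adds 22 bytes, so a single document tops out at roughly 95,000 followers, far short of a celebrity's audience.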

To solve this problem, you can use a mixed approach. Store the number of followers as part of a user statistics document:

```json
{
    "id":"234d-sd23-rrf2-552d",
    "user": "dse4-qwe2-ert4-aad2",
    "followers":55230,
    "totalPosts":452,
    "totalPoints":11342
}
```

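You can store the actual follower graph with the Azure Cosmos DB API for Gremlin, creating a vertex for each user and edges that maintain the "A follows B" relationships. With the API for Gremlin, you can not only get the followers of a given user but also create more complex queries that suggest people in common. If you add to the graph the content categories that people like or enjoy, you can start weaving experiences such as smart content discovery, suggesting content that the people you follow like, and finding people you have much in common with.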
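The "people in common" idea boils down to a two-hop traversal over "A follows B" edges. The API for Gremlin would run that traversal server-side; the sketch below only illustrates the logic with a hypothetical in-memory adjacency map in Python and is not Gremlin syntax.

```python
from collections import Counter

# Hypothetical "A follows B" edges as an adjacency map: vertex -> vertices it follows.
follows = {
    "ana": {"ben", "cai"},
    "ben": {"dev", "eli"},
    "cai": {"dev", "ana"},
    "dev": {"eli"},
    "eli": set(),
}


def followers_of(user):
    """Walk the edges backwards: everyone with an edge pointing at user."""
    return {u for u, targets in follows.items() if user in targets}


def suggest(user, limit=3):
    """Suggest accounts followed by the people `user` follows, ranked by how many of them do."""
    already = follows.get(user, set())
    counts = Counter(
        candidate
        for friend in already
        for candidate in follows.get(friend, set())
        if candidate != user and candidate not in already
    )
    return [name for name, _ in counts.most_common(limit)]
```

For example, `suggest("ana")` ranks `dev` first because both people Ana follows also follow Dev.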

The user statistics document can still be used to create cards in the UI or quick profile previews.

The "Ladder" pattern and data duplication

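As you might have noticed in the JSON document that references a post, a user appears several times. And you'd have guessed right: because of this denormalization, the information that describes a user might be present in more than one place.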

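To allow faster queries, you accept data duplication. The problem with this side effect is that if some action changes a user's data, you need to find all the activities the user ever did and update them all. That doesn't sound practical, does it?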

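You solve this by identifying the key attributes of a user that your application shows for each activity. If you visually show a post in your application with just the creator's name and picture, why store all of the user's data in the "createdBy" attribute? If each comment shows only the user's picture, you don't need the rest of the user's information. That's where something I call the "Ladder pattern" comes in.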

Let's take user information as an example:

```json
{
    "id":"dse4-qwe2-ert4-aad2",
    "name":"John",
    "surname":"Doe",
    "address":"742 Evergreen Terrace",
    "birthday":"1983-05-07",
    "email":"john@doe.com",
    "twitterHandle":"@john",
    "username":"johndoe",
    "password":"some_encrypted_phrase",
    "totalPoints":100,
    "totalPosts":24
}
```

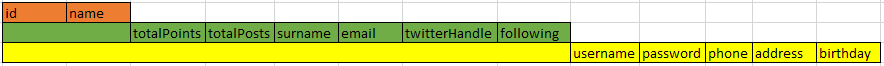

通过查看此信息,可以快速检测出哪些是重要的信息,哪些不是,从而就会创建一个“阶梯”:

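The smallest step is called a UserChunk: the minimal piece of information that identifies a user, used for data duplication. By reducing the duplicated data to only the information you'll "show", you reduce the chance of massive updates.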

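The middle step is called the User. It's the full data used by most performance-dependent queries on Azure Cosmos DB, the most accessed and most critical. It includes the information represented by a UserChunk.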

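The largest step is the Extended User. It includes the critical user information plus other data that doesn't need to be read quickly or is only used occasionally, such as during the sign-in process. This data can be stored outside Azure Cosmos DB, in Azure SQL Database or Azure Storage tables.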
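One way to express the three steps of the ladder in code is as nested types, where each step extends the one below it. This is a Python sketch with hypothetical classes: field names copy the JSON attributes of the user example (hence `twitterHandle` rather than snake_case), and exactly which fields sit on which step is a judgment call based on the split described above.

```python
from dataclasses import asdict, dataclass


@dataclass
class UserChunk:
    """Smallest step: just enough to identify and display a user; duplicated into posts."""
    id: str
    username: str


@dataclass
class User(UserChunk):
    """Middle step: stored in Azure Cosmos DB for performance-dependent queries."""
    name: str
    surname: str
    email: str
    twitterHandle: str


@dataclass
class ExtendedUser(User):
    """Largest step: the rest, e.g. kept in Azure SQL Database or Azure Storage tables."""
    address: str
    birthday: str
    password: str


def to_chunk(user: UserChunk) -> dict:
    """Project any step of the ladder down to the UserChunk embedded in createdBy."""
    return asdict(UserChunk(id=user.id, username=user.username))
```

Because every step inherits from `UserChunk`, any of them can be passed to `to_chunk`, which keeps the duplicated data to two fields.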

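Why split the user and even store this information in different places? Because, from a performance point of view, the bigger the documents, the costlier the queries. Keep documents slim, with just the information needed for all of your social network's performance-dependent queries. Store the extra information for occasional scenarios such as full profile edits, logins, and data mining for usage analytics and big data initiatives. You don't mind if data gathering for data mining is slower, because it runs on Azure SQL Database. What you do care about is that your users get a fast and slim experience. A user stored in Azure Cosmos DB looks like this: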

```json
{
    "id":"dse4-qwe2-ert4-aad2",
    "name":"John",
    "surname":"Doe",
    "username":"johndoe",
    "email":"john@doe.com",
    "twitterHandle":"@john"
}
```

And a post looks like this:

```json
{
    "id":"1234-asd3-54ts-199a",
    "title":"Awesome post!",
    "date":"2016-01-02",
    "createdBy":{
        "id":"dse4-qwe2-ert4-aad2",
        "username":"johndoe"
    }
}
```

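When an edit affects a chunk attribute, you can easily find the affected documents. Just use queries that point to the indexed attributes, such as `SELECT * FROM posts p WHERE p.createdBy.id = "edited_user_id"`, and then update the chunks.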
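A sketch of that update loop, with an in-memory list standing in for the query results. The query text uses `=`, the equality operator in the Azure Cosmos DB query language, with a `@userId` parameter; the function name and sample documents are hypothetical.

```python
# Hypothetical posts that embed a UserChunk in createdBy.
posts = [
    {"id": "1234-asd3-54ts-199a", "title": "Awesome post!",
     "createdBy": {"id": "dse4-qwe2-ert4-aad2", "username": "johndoe"}},
    {"id": "asd2-fee4-23gc-jh67", "title": "Ditto!",
     "createdBy": {"id": "aaaa-bbbb-cccc-dddd", "username": "someoneelse"}},
]

# The lookup as a parameterized Azure Cosmos DB query, shown for reference only.
FIND_BY_AUTHOR = "SELECT * FROM posts p WHERE p.createdBy.id = @userId"


def propagate_chunk(docs, new_chunk):
    """Rewrite createdBy on every document authored by new_chunk['id']; return how many changed."""
    changed = 0
    for doc in docs:  # in-memory stand-in for running FIND_BY_AUTHOR and iterating the results
        if doc.get("createdBy", {}).get("id") == new_chunk["id"]:
            doc["createdBy"] = dict(new_chunk)
            changed += 1
    return changed
```

Because only the small chunk is copied, a rename rewrites two short fields per document rather than a full user profile.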

The search box

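Luckily, users will generate a lot of content. You should be able to let them search for and find content that might not appear directly in their content streams, maybe because they don't follow the creators, or because they're trying to find an old post from six months ago.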

Because you're using Azure Cosmos DB, you can implement a search engine with Azure AI Search in a few minutes, without writing any code other than the search process and UI.

Why is this process so easy?

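Azure AI Search implements what it calls indexers: background processes that hook into your data repositories and automatically add, update, or remove your objects in the indexes. There are indexers for Azure SQL Database, Azure Blob Storage, and Azure Cosmos DB. Moving information from Azure Cosmos DB to Azure AI Search is straightforward. Both technologies store information in JSON format, so you just need to create your index and map the attributes from the documents you want indexed. That's it! Depending on the size of your data, all your content becomes searchable within minutes through a fully managed search-as-a-service solution in the cloud.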
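Conceptually, the index holds a flattened projection of each document. In Azure AI Search this mapping is configured on the index and indexer rather than written by hand; the Python below only illustrates the projection, and `FIELD_MAP` with its `author` field is hypothetical.

```python
# Hypothetical mapping from document paths (dotted) to search-index field names.
FIELD_MAP = {
    "id": "id",
    "title": "title",
    "body": "body",
    "createdBy.username": "author",
}


def get_path(doc, dotted):
    """Read a nested attribute such as 'createdBy.username'; return None when it's missing."""
    value = doc
    for key in dotted.split("."):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def to_index_document(doc):
    """Project a post into the flat shape a search index would hold."""
    return {field: get_path(doc, path) for path, field in FIELD_MAP.items()}
```

Flattening the embedded UserChunk into an `author` field is what lets a search for "johndoe" match posts without a join.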

For more information about Azure AI Search, see the Hitchhiker's Guide to Search.

The underlying knowledge

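After storing all this ever-growing content, you might find yourself thinking: what can I do with this whole stream of information from my users?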

The answer is straightforward: put it to work and learn from it.

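But what can you learn? A few simple examples include sentiment analysis, content recommendations based on a user's preferences, or even an automated content moderator that makes sure the content published on your social network is family-safe.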

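Now that I've got you hooked, you'll probably think you need a PhD in mathematics to extract these patterns and insights from simple databases and files. You'd be wrong.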

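One available option is to use Azure AI services to analyze your users' content. You can understand it better by analyzing what users write with the Text Analytics API, and you can detect unwanted or mature content with the Computer Vision API and act accordingly. Azure AI services include many out-of-the-box solutions that don't require any machine learning knowledge to use.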

A multi-region social experience at scale

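There's one last, but not least, important topic I must address: scalability. When you design an architecture, each component should be able to scale on its own. You'll eventually need to process more data, or you'll want wider geographic coverage. Thankfully, both are turnkey experiences with Azure Cosmos DB.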

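Azure Cosmos DB supports dynamic partitioning out of the box. It automatically creates partitions based on a given partition key, which is defined as an attribute in your documents. Choosing the right partition key must be done at design time. For more information, see Partitioning in Azure Cosmos DB.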

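For a social experience, you must align your partitioning strategy with the way you query and write. (For example, reads within the same partition are desirable, and you avoid "hot spots" by spreading writes across multiple partitions.) Some options are partitioning on a temporal key (day/week/month), by content category, by geographic region, or by user. It all depends on how you query the data and display it in your social experience.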
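The "hot spot" warning can be made concrete by counting where writes land under two partition-key choices. This sketch assumes a fixed set of four physical partitions and uses SHA-256 as a stand-in hash; Azure Cosmos DB uses its own hashing and splits partitions automatically, so only the shape of the result matters here. The workload and names are hypothetical.

```python
import hashlib
from collections import Counter

PHYSICAL_PARTITIONS = 4  # assumption: a fixed number of physical partitions, for illustration


def bucket(partition_key):
    """Map a logical partition key to a physical partition with a stable stand-in hash."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % PHYSICAL_PARTITIONS


def write_distribution(writes, key_of):
    """Count how many writes land on each physical partition for a given key choice."""
    return Counter(bucket(key_of(w)) for w in writes)


# Hypothetical workload: 1,000 posts written on one day by 100 different users.
writes = [{"user": f"user-{i % 100}", "day": "2016-01-01"} for i in range(1000)]

by_day = write_distribution(writes, lambda w: w["day"])
by_user = write_distribution(writes, lambda w: w["user"])
```

Keying on the day sends every write of that day to a single partition, while keying on the user spreads the same 1,000 writes across partitions. The trade-off is on the read side: a "latest posts across all users" query then fans out over every partition.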

Azure Cosmos DB runs your queries (including aggregates) across all your partitions transparently, so you don't need to add any logic as your data grows.

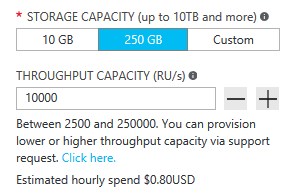

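Over time, your traffic will grow, and your resource consumption (measured in RUs, or Request Units) will increase. As your user base grows, it reads and writes more often and starts creating and reading more content. So the ability to scale your throughput is vital. Increasing your RUs is easy: you can do it with a few selections in the Azure portal or by issuing commands through the API.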
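A back-of-the-envelope sketch for sizing throughput. The only documented figure used here is that a point read of a roughly 1 KB item costs 1 RU; the 5 RU per write and 20% headroom are placeholder assumptions, so measure the request charge of your own operations. The result is rounded up to a multiple of 100 because manual throughput is provisioned in 100 RU/s increments. The function name is hypothetical.

```python
import math


def required_rus(reads_per_sec, writes_per_sec, ru_per_read=1, ru_per_write=5, headroom_pct=20):
    """Estimate provisioned throughput (RU/s), rounded up to a multiple of 100.

    ru_per_read=1 matches a point read of a ~1 KB item; ru_per_write and headroom_pct
    are placeholder assumptions for this sketch.
    """
    base = reads_per_sec * ru_per_read + writes_per_sec * ru_per_write
    with_headroom = base * (100 + headroom_pct) / 100
    return math.ceil(with_headroom / 100) * 100
```

For example, `required_rus(1000, 100)` gives 1800, and doubling both rates gives 3600: throughput scales linearly with traffic, which is why raising RUs as the user base grows is routine.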

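What happens if things keep getting better? Suppose users from another country, region, or continent notice your platform and start using it. What a great surprise!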

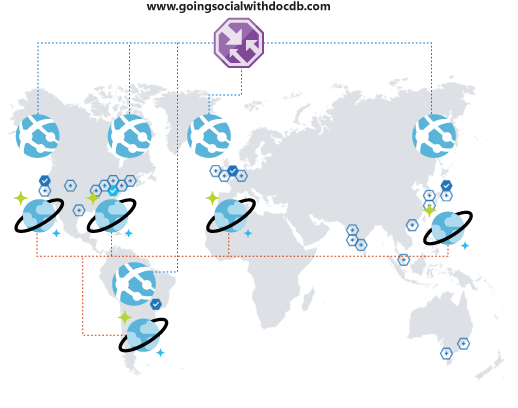

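But wait! You soon realize their experience with your platform isn't optimal. They're so far from your operational region that latency is terrible. You obviously don't want them to quit. If only there were an easy way to extend your multi-region reach. There is!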

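Azure Cosmos DB lets you replicate your data to multiple regions transparently, and your client code can dynamically choose among the available regions. This also means you can have multiple failover regions.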

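When you replicate your data to multiple regions, you need to make sure your clients can take advantage of it. If you're using a web front end or accessing APIs from mobile clients, you can deploy Azure Traffic Manager and clone your Azure App Service in all the desired regions, using a performance configuration to support your extended multi-region coverage. When clients access your front end or APIs, they're routed to the closest App Service instance, which in turn connects to the local Azure Cosmos DB replica.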
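The routing decision itself is simple: prefer the closest deployed region and fall back to the next one when it's unhealthy. Traffic Manager's performance routing and the preferred-regions settings in the Azure Cosmos DB SDKs make this choice for you; the Python below only illustrates the logic, with hypothetical latency figures, region lists, and function names.

```python
# Hypothetical round-trip latencies (ms) measured from one client to each deployed region.
measured_latency_ms = {"West US": 180, "West Europe": 25, "Southeast Asia": 240}


def preferred_regions(latencies, available):
    """Order the regions where the app is deployed from closest to farthest."""
    return sorted((r for r in available if r in latencies), key=lambda r: latencies[r])


def pick_region(latencies, available, unhealthy=frozenset()):
    """Route to the closest healthy region, falling back down the list as a failover."""
    for region in preferred_regions(latencies, available):
        if region not in unhealthy:
            return region
    raise RuntimeError("no healthy region available")
```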

Conclusion

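This article sheds light on alternatives for creating social networks entirely on Azure with low-cost services. It delivers results by encouraging the use of a multilayered storage solution and data distribution called the "Ladder".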

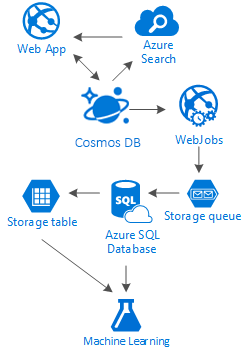

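The truth is that there's no silver bullet for this kind of scenario. Great experiences come from combining great services: the speed and freedom of Azure Cosmos DB to deliver a great social application; the intelligence behind a first-class search solution like Azure AI Search; the flexibility of Azure App Service to host not only language-agnostic applications but also powerful background processes; the scalability of Azure Storage and Azure SQL Database for storing massive amounts of data; and the analytic power of Azure Machine Learning to create knowledge and intelligence that feeds back into your processes and helps deliver the right content to the right users.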

Next steps

To learn more about Azure Cosmos DB use cases, see Common Azure Cosmos DB use cases.