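This article describes how to track and debug Apache Spark jobs running on HDInsight clusters, using the Apache Hadoop YARN UI, the Spark UI, and the Spark History Server. The walkthrough starts a Spark job from a notebook available with the Spark cluster; see "Machine learning: Predictive analysis on food inspection data using MLLib". The same steps also apply to applications submitted by any other method, such as spark-submit.

If you don't have an Azure subscription, create a trial account before you begin.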

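Prerequisites

An Apache Spark cluster on HDInsight. For instructions, see Create Apache Spark clusters in Azure HDInsight.

You should have started running the notebook described in Machine learning: Predictive analysis on food inspection data using MLLib. For instructions on how to run this notebook, follow that link.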

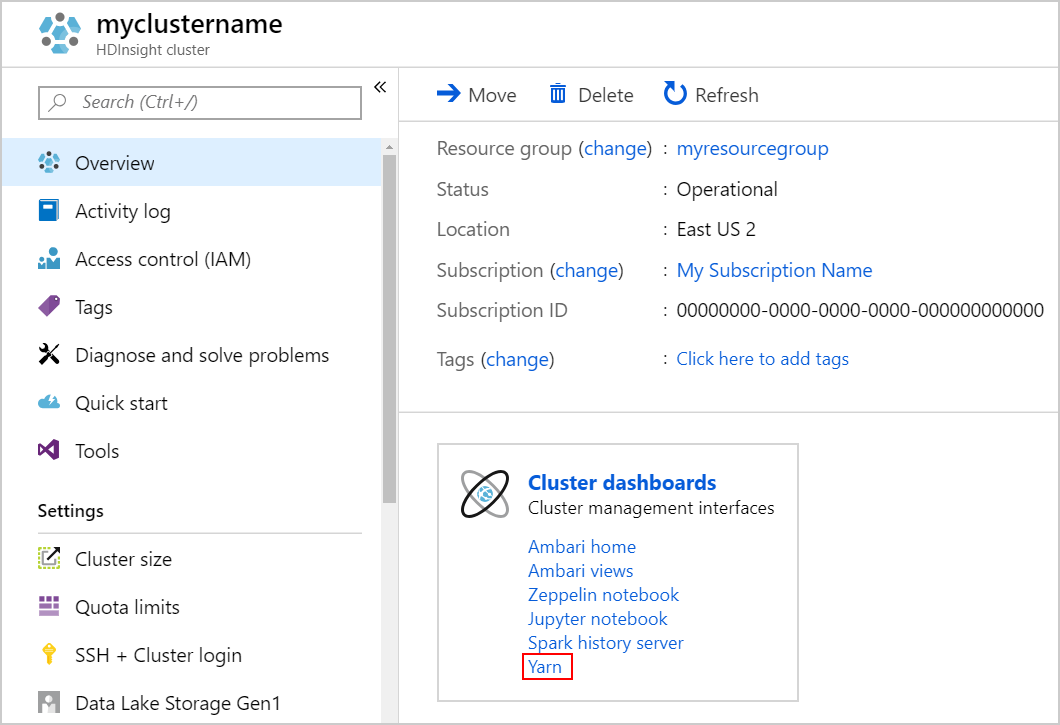

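Track an application in the YARN UI

Launch the YARN UI. Under "Cluster dashboards", select "Yarn".

Tip

Alternatively, you can launch the YARN UI from the Ambari UI. To launch the Ambari UI, select "Ambari home" under "Cluster dashboards". In the Ambari UI, navigate to "YARN" > "Quick Links" > the active Resource Manager > "Resource Manager UI".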

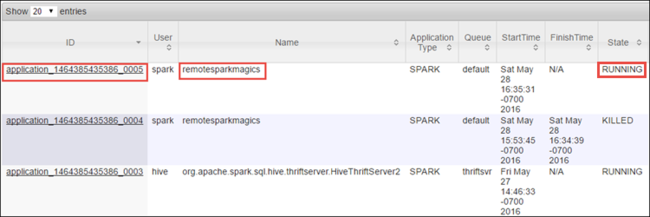

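Because the Spark job was started from a Jupyter Notebook, the application is named "remotesparkmagics" (the name given to all applications started from notebooks). Select the application ID next to the application name to get more information about the job. This action launches the application view.

For applications launched from Jupyter Notebooks, the status is always "RUNNING" until you exit the notebook.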
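The same lookup can be scripted. The YARN ResourceManager exposes a REST API whose `/ws/v1/cluster/apps` endpoint returns the application list as JSON. The following is a minimal sketch that filters an already-fetched response by application name. The sample payload and the helper name `find_apps_by_name` are invented for illustration, and how you reach the endpoint on your cluster (gateway URL, credentials) is not covered here.

```python
import json


def find_apps_by_name(payload, name):
    """Return (id, state, trackingUrl) for each YARN app with the given name."""
    # The ResourceManager wraps the list as {"apps": {"app": [...]}};
    # "apps" can be null when no application matches the query.
    apps = (payload.get("apps") or {}).get("app", [])
    return [
        (app["id"], app["state"], app.get("trackingUrl"))
        for app in apps
        if app.get("name") == name
    ]


# Hypothetical sample response, trimmed to the fields used above.
SAMPLE_RESPONSE = json.loads("""
{
  "apps": {
    "app": [
      {"id": "application_0000000000001_0001", "name": "remotesparkmagics",
       "state": "RUNNING", "trackingUrl": "http://example-host:8088/proxy/application_0000000000001_0001/"},
      {"id": "application_0000000000001_0002", "name": "other-job",
       "state": "FINISHED", "trackingUrl": null}
    ]
  }
}
""")

for app_id, state, tracking_url in find_apps_by_name(SAMPLE_RESPONSE, "remotesparkmagics"):
    print(app_id, state, tracking_url)
```

The `trackingUrl` field corresponds to the "Tracking URL" link in the application view.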

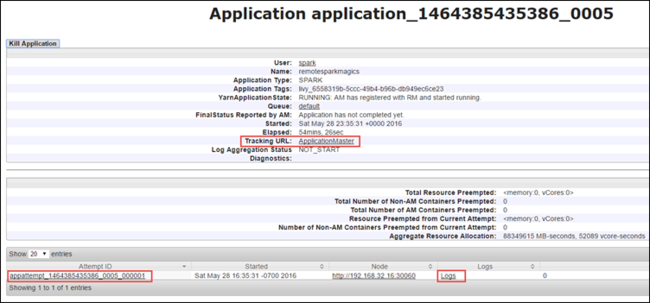

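From the application view, you can drill down further to find the containers associated with the application and their logs (stdout/stderr). You can also launch the Spark UI by clicking the link next to "Tracking URL", as shown below.

Track an application in the Spark UI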

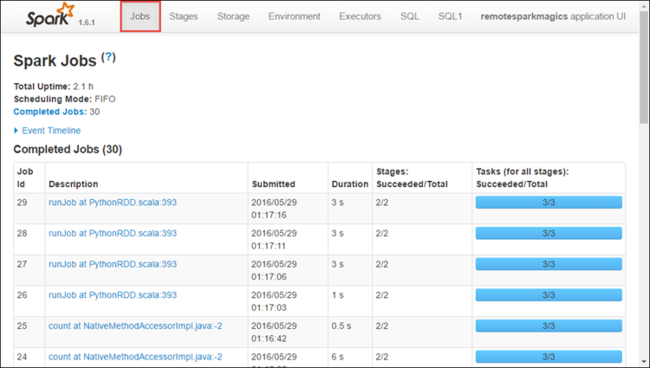

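In the Spark UI, you can drill down into the Spark jobs spawned by the application you started earlier.

To launch the Spark UI, select the link next to "Tracking URL" in the application view, as shown in the screenshot above. The Spark UI lists all the Spark jobs launched by the application running in the Jupyter Notebook.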

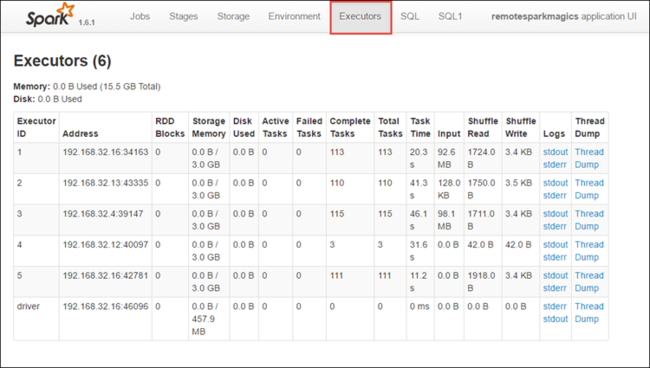

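Select the "Executors" tab to see processing and storage information for each executor. You can also retrieve the call stack by selecting the "Thread Dump" link.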

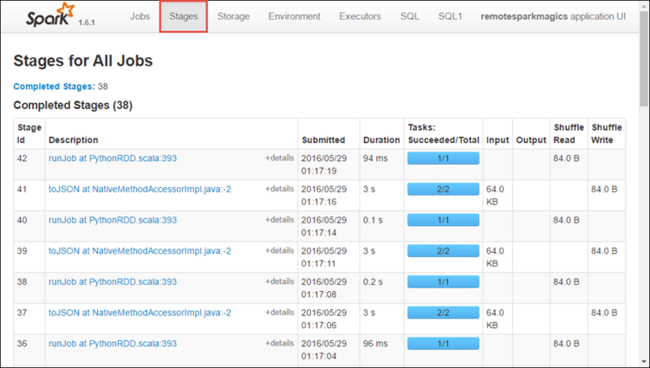
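Spark's monitoring REST API also serves per-executor figures as JSON, at `/api/v1/applications/[app-id]/executors`. As a rough sketch, assuming that response has already been fetched and parsed, the storage memory use of each executor can be computed as follows. The helper name `executor_memory_usage` and the sample values are made up for illustration.

```python
def executor_memory_usage(executors):
    """Map executor id -> fraction of storage memory in use (0.0 to 1.0)."""
    usage = {}
    for ex in executors:
        max_memory = ex.get("maxMemory", 0)
        # Guard against division by zero for executors that report no memory.
        usage[ex["id"]] = ex.get("memoryUsed", 0) / max_memory if max_memory else 0.0
    return usage


# Hypothetical sample, trimmed to a few of the fields the endpoint returns.
SAMPLE_EXECUTORS = [
    {"id": "driver", "memoryUsed": 0, "maxMemory": 400, "totalTasks": 0, "failedTasks": 0},
    {"id": "1", "memoryUsed": 100, "maxMemory": 400, "totalTasks": 12, "failedTasks": 1},
]

for executor_id, fraction in executor_memory_usage(SAMPLE_EXECUTORS).items():
    print(f"executor {executor_id}: {fraction:.0%} of storage memory used")
```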

Select the "Stages" tab to see the stages associated with the application.

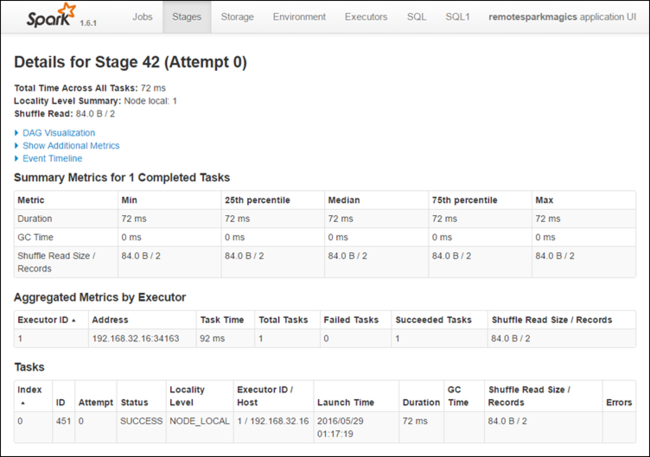

Each stage can have multiple tasks, and you can view execution statistics for them, as shown below.

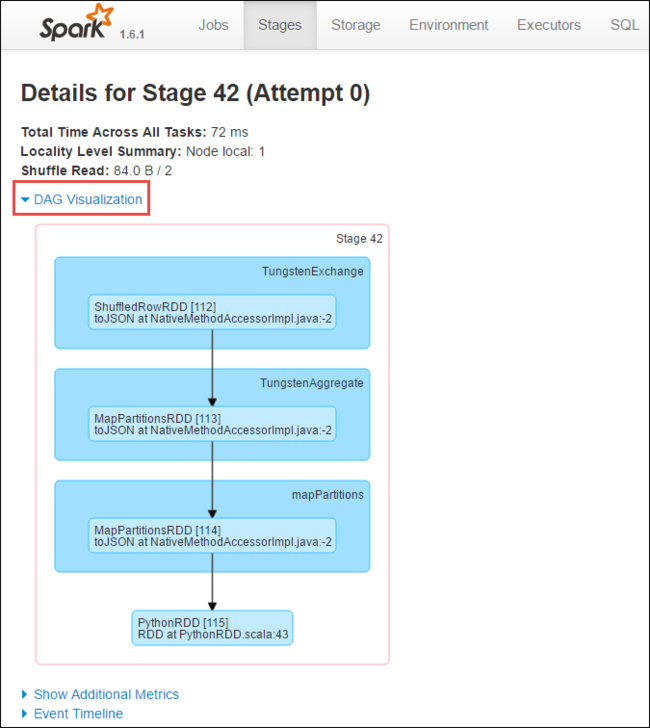
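Stage statistics are also available from Spark's monitoring REST API at `/api/v1/applications/[app-id]/stages`. The sketch below, which assumes the stage list has already been fetched and parsed, ranks stages by total executor run time to point at likely bottlenecks. The helper name `slowest_stages` and the sample data are hypothetical.

```python
def slowest_stages(stages, limit=3):
    """Return (stageId, name, executorRunTime in ms, numFailedTasks), slowest first."""
    ranked = sorted(stages, key=lambda s: s.get("executorRunTime", 0), reverse=True)
    return [
        (s["stageId"], s.get("name", ""), s.get("executorRunTime", 0), s.get("numFailedTasks", 0))
        for s in ranked[:limit]
    ]


# Hypothetical sample, trimmed to the fields used above.
SAMPLE_STAGES = [
    {"stageId": 0, "name": "csv at <stdin>:1", "status": "COMPLETE",
     "executorRunTime": 1200, "numCompleteTasks": 4, "numFailedTasks": 0},
    {"stageId": 1, "name": "count at <stdin>:1", "status": "COMPLETE",
     "executorRunTime": 5300, "numCompleteTasks": 8, "numFailedTasks": 1},
    {"stageId": 2, "name": "collect at <stdin>:1", "status": "ACTIVE",
     "executorRunTime": 300, "numCompleteTasks": 1, "numFailedTasks": 0},
]

for stage_id, name, run_time_ms, failed in slowest_stages(SAMPLE_STAGES, limit=2):
    print(f"stage {stage_id} ({name}): {run_time_ms} ms, {failed} failed task(s)")
```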

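From the stage details page, you can launch the DAG visualization. Expand the "DAG Visualization" link at the top of the page, as shown below.

The DAG (directed acyclic graph) represents the different stages in the application. Each blue box in the graph represents a Spark operation invoked from the application.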

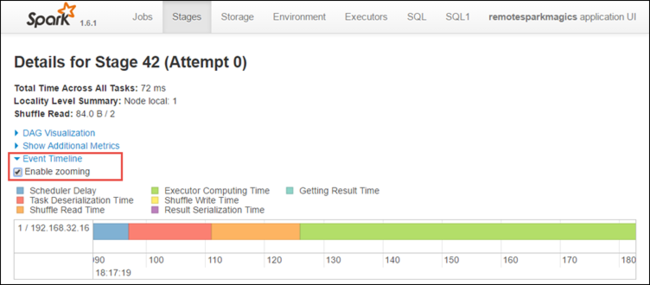

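From the stage details page, you can also launch the application timeline view. Expand the "Event Timeline" link at the top of the page, as shown below.

This image displays the Spark events in the form of a timeline. The timeline view is available at three levels: across jobs, within a job, and within a stage. The image above captures the timeline view for a given stage.

Tip

If you select the "Enable zooming" check box, you can scroll left and right across the timeline view.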

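Other tabs in the Spark UI also provide useful information about the Spark instance.

- "Storage" tab - If your application creates RDDs, you can find information about them in the "Storage" tab.

- "Environment" tab - This tab provides useful information about the Spark instance, such as:

    - The Scala version

    - The event log directory associated with the cluster

    - The number of executor cores for the application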

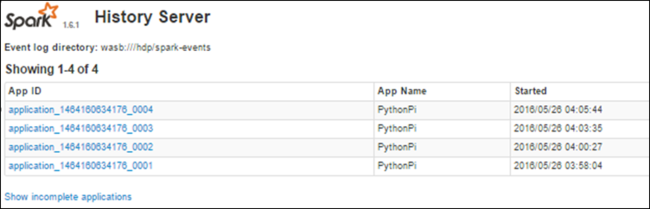
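The values shown on the "Environment" tab can also be read from Spark's monitoring REST API at `/api/v1/applications/[app-id]/environment`. The sketch below assumes that response has already been fetched and parsed, and picks out the three items listed above. It assumes the event log directory and executor core count are set through the standard `spark.eventLog.dir` and `spark.executor.cores` properties; the helper name and all sample values are invented for illustration.

```python
def summarize_environment(env):
    """Extract the Scala version, event log directory, and executor core count."""
    # "sparkProperties" is a list of [key, value] pairs; turn it into a dict.
    props = dict(env.get("sparkProperties", []))
    return {
        "scalaVersion": env.get("runtime", {}).get("scalaVersion"),
        "eventLogDir": props.get("spark.eventLog.dir"),
        "executorCores": props.get("spark.executor.cores"),
    }


# Hypothetical sample, trimmed to the fields used above.
SAMPLE_ENVIRONMENT = {
    "runtime": {"javaVersion": "1.8.0", "javaHome": "/example/java", "scalaVersion": "version 2.11.8"},
    "sparkProperties": [
        ["spark.app.name", "remotesparkmagics"],
        ["spark.eventLog.dir", "/example/spark-events"],
        ["spark.executor.cores", "2"],
    ],
}

print(summarize_environment(SAMPLE_ENVIRONMENT))
```

Property values come back as strings, so convert `executorCores` with `int()` before doing arithmetic on it.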

Find information about completed jobs using the Spark History Server

Once a job is completed, information about the job is persisted in the Spark History Server.

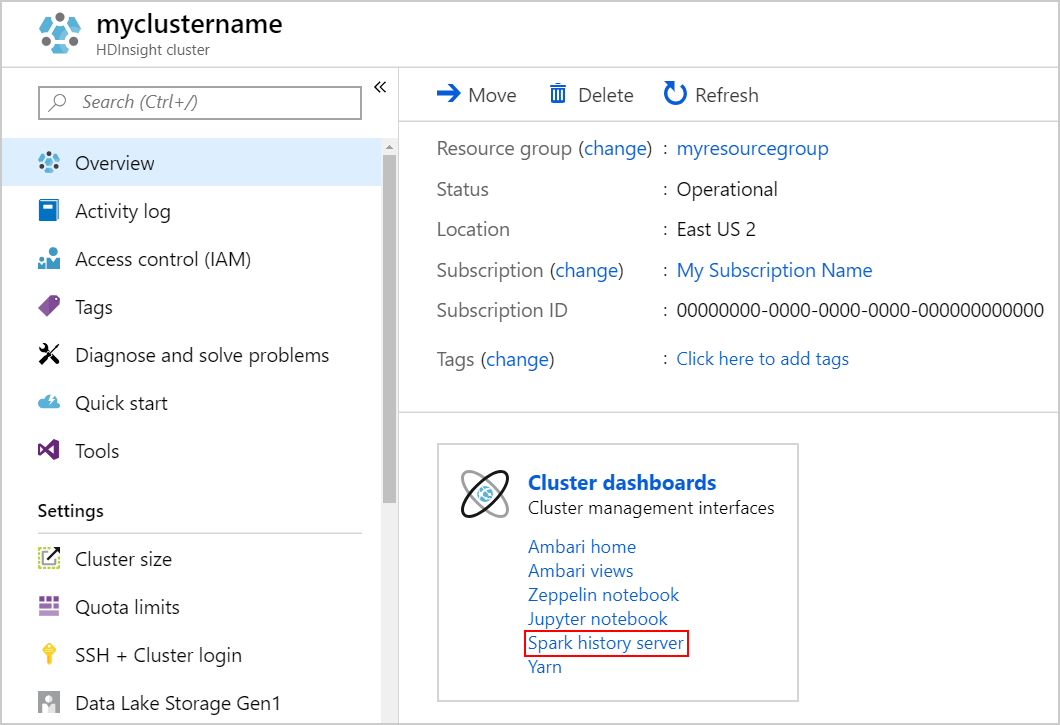

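To launch the Spark History Server, on the "Overview" page, select "Spark history server" under "Cluster dashboards".

Tip

Alternatively, you can launch the Spark History Server UI from the Ambari UI. To launch the Ambari UI, on the "Overview" blade, select "Ambari home" under "Cluster dashboards". In the Ambari UI, navigate to "Spark2" > "Quick Links" > "Spark2 History Server UI".

All completed applications are listed. Select an application ID to drill down into that application for more information.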
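The Spark History Server serves the same list through its REST API at `/api/v1/applications`, where each application carries a list of attempts. As a minimal sketch, assuming the response has already been fetched and parsed, the function below keeps only applications whose attempts have all completed and reports their total duration. The helper name `completed_applications` and the sample data are hypothetical.

```python
def completed_applications(apps):
    """Return (id, name, total duration in ms) for apps whose attempts all completed."""
    result = []
    for app in apps:
        attempts = app.get("attempts", [])
        # Skip apps with no attempts or with any attempt still in progress.
        if not attempts or not all(a.get("completed", False) for a in attempts):
            continue
        total_ms = sum(a.get("duration", 0) for a in attempts)
        result.append((app["id"], app.get("name", ""), total_ms))
    return result


# Hypothetical sample, trimmed to the fields used above.
SAMPLE_APPLICATIONS = [
    {"id": "application_0000000000001_0001", "name": "remotesparkmagics",
     "attempts": [{"completed": True, "duration": 90000, "sparkUser": "livy"}]},
    {"id": "application_0000000000001_0003", "name": "remotesparkmagics",
     "attempts": [{"completed": False, "duration": 0, "sparkUser": "livy"}]},
]

for app_id, name, total_ms in completed_applications(SAMPLE_APPLICATIONS):
    print(f"{app_id} ({name}) ran for {total_ms / 1000:.0f} s")
```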