APPLIES TO: Azure Data Factory, Azure Synapse Analytics

When you use the copy activity in Azure Data Factory or Synapse Analytics pipelines to copy data from source to sink, you can also preserve the metadata and ACLs in the following scenarios.

Preserve metadata for lake migration

When you migrate data from one data lake to another, including Amazon S3, Azure Blob storage, Azure Data Lake Storage Gen2, and Azure Files, you can choose to preserve the file metadata along with the data.

Copy activity supports preserving the following attributes during data copy:

- All customer-specified metadata

- The following five data store built-in system properties: contentType, contentLanguage (except for Amazon S3), contentEncoding, contentDisposition, cacheControl.

Handle differences in metadata: Amazon S3 and Azure Storage allow different sets of characters in the keys of customer-specified metadata. When you choose to preserve metadata using copy activity, the service automatically replaces any invalid characters with '_'.
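The character replacement described above can be pictured with a small sketch. This is only an illustration, not the service's actual implementation: the function name `sanitize_metadata_keys` is hypothetical, and the rule that only ASCII letters, digits, and underscores are valid in a key is a simplifying assumption made for this example.

```python
import re

# Assumption for illustration only: a key character is "valid" if it is an
# ASCII letter, digit, or underscore. The service's real rule may differ.
INVALID_KEY_CHARS = re.compile(r"[^A-Za-z0-9_]")


def sanitize_metadata_keys(metadata):
    """Return a copy of `metadata` with invalid key characters replaced by '_'.

    Hypothetical helper; values are left untouched. If two source keys map to
    the same sanitized key, the later one overwrites the earlier one.
    """
    sanitized = {}
    for key, value in metadata.items():
        sanitized[INVALID_KEY_CHARS.sub("_", key)] = value
    return sanitized


if __name__ == "__main__":
    source_metadata = {"owner-team": "analytics", "retention.days": "30"}
    print(sanitize_metadata_keys(source_metadata))
```

Because different source keys can collapse to the same sanitized key under a rule like this, it may be worth checking source metadata for near-duplicate keys before a migration.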

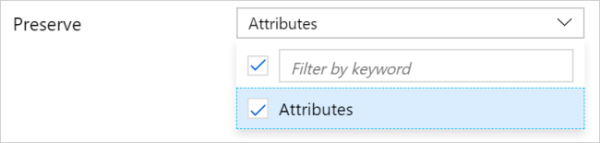

When you copy files as-is from Amazon S3/Azure Data Lake Storage Gen2/Azure Blob storage/Azure Files to Azure Data Lake Storage Gen2/Azure Blob storage/Azure Files with binary format, you can find the Preserve option on the Copy Activity > Settings tab for activity authoring or the Settings page in Copy Data Tool.

Here's an example of copy activity JSON configuration (see the preserve property):

"activities":[
    {
        "name": "CopyAndPreserveMetadata",
        "type": "Copy",
        "typeProperties": {
            "source": {
                "type": "BinarySource",
                "storeSettings": {
                    "type": "AmazonS3ReadSettings",
                    "recursive": true
                }
            },
            "sink": {
                "type": "BinarySink",
                "storeSettings": {
                    "type": "AzureBlobFSWriteSettings"
                }
            },
            "preserve": [
                "Attributes"
            ]
        },
        "inputs": [
            {
                "referenceName": "<Binary dataset Amazon S3/Azure Blob/ADLS Gen2 source>",
                "type": "DatasetReference"
            }
        ],
        "outputs": [
            {
                "referenceName": "<Binary dataset for Azure Blob/ADLS Gen2 sink>",
                "type": "DatasetReference"
            }
        ]
    }
]
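As a rough way to check a pipeline definition before deploying it, the following sketch inspects a copy activity (loaded as a Python dictionary) and reports whether it copies in binary format with "Attributes" listed under preserve. The helper name `preserves_attributes` is hypothetical and not part of any SDK; it only reads the property names shown in the JSON above.

```python
def preserves_attributes(activity):
    """Return True if a copy activity dict is a binary copy that preserves attributes.

    Hypothetical helper for illustration; it checks only the fields used in
    the sample configuration (source/sink types and the preserve list).
    """
    props = activity.get("typeProperties", {})
    is_binary_copy = (
        props.get("source", {}).get("type") == "BinarySource"
        and props.get("sink", {}).get("type") == "BinarySink"
    )
    return is_binary_copy and "Attributes" in props.get("preserve", [])


# Mirrors the sample activity above, trimmed to the fields the helper reads.
example_activity = {
    "name": "CopyAndPreserveMetadata",
    "type": "Copy",
    "typeProperties": {
        "source": {"type": "BinarySource"},
        "sink": {"type": "BinarySink"},
        "preserve": ["Attributes"],
    },
}

if __name__ == "__main__":
    print(preserves_attributes(example_activity))
```

A check like this could run in a CI step over exported pipeline JSON, so that a migration pipeline missing the preserve setting is caught before any data is copied.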

Related content

See the other Copy Activity articles: