Azure Machine Learning requires access to servers and services on the public internet. When implementing network isolation, you need to understand what access is required and how to enable it.

Note

The information in this article applies to an Azure Machine Learning workspace configured to use an Azure Virtual Network. When using a managed virtual network, the required inbound and outbound configuration for the workspace is automatically applied. For more information, see Azure Machine Learning managed virtual network.

Common terms and information

The following terms and information are used throughout this article:

Azure service tags: A service tag is an easy way to specify the IP ranges used by an Azure service. For example, the AzureMachineLearning tag represents the IP addresses used by the Azure Machine Learning service.

Important

Azure service tags are only supported by some Azure services. For a list of service tags supported with network security groups and Azure Firewall, see the Virtual network service tags article.

If you're using a non-Azure solution such as a third-party firewall, download a list of Azure IP Ranges and Service Tags. Extract the file and search for the service tag within the file. The IP addresses might change periodically.

Region: Some service tags allow you to specify an Azure region. This limits access to the service IP addresses in a specific region, usually the one that your service is in. In this article, when you see <region>, substitute your Azure region instead. For example, BatchNodeManagement.<region> would be BatchNodeManagement.chinaeast2 if your Azure Machine Learning workspace is in the China East 2 region.

Azure Batch: Azure Machine Learning compute clusters and compute instances rely on a back-end Azure Batch instance. This back-end service is hosted in an Azure-managed subscription.

Ports: The following ports are used in this article. If a port range isn't listed in this table, it's specific to the service and might not have any published information on what it's used for:

| Port | Description |
|---|---|
| 80 | Unsecured web traffic (HTTP) |
| 443 | Secured web traffic (HTTPS) |
| 445 | SMB traffic used to access file shares in Azure File storage |
| 8787 | Used when connecting to RStudio on a compute instance |
| 18881 | Used to connect to the language server to enable IntelliSense for notebooks on a compute instance. |

Protocol: Unless noted otherwise, all network traffic mentioned in this article uses TCP.

Basic configuration

This configuration makes the following assumptions:

- You're using Docker images provided by a container registry that you provide, and don't use images provided by Microsoft.

- You're using a private Python package repository, and don't access public package repositories such as pypi.org, *.anaconda.com, or *.anaconda.org.

- The private endpoints can communicate directly with each other within the VNet. For example, all services have a private endpoint in the same VNet:

  - Azure Machine Learning workspace

  - Azure Storage Account (blob, file, table, queue)

Inbound traffic

| Source | Source ports | Destination | Destination ports | Purpose |
|---|---|---|---|---|
| AzureMachineLearning | Any | VirtualNetwork | 44224 | Inbound to compute instance/cluster. Only needed if the instance/cluster is configured to use a public IP address. |

Tip

A network security group (NSG) is created by default for this traffic. For more information, see Default security rules.

Outbound traffic

| Service tags | Ports | Purpose |
|---|---|---|
| AzureActiveDirectory | 80, 443 | Authentication using Microsoft Entra ID. |
| AzureMachineLearning | 443, 8787, 18881; UDP: 5831 | Using Azure Machine Learning services. |
| BatchNodeManagement.<region> | 443 | Communication with Azure Batch. |
| AzureResourceManager | 443 | Creation of Azure resources with Azure Machine Learning. |
| Storage.<region> | 443 | Access data stored in the Azure Storage Account for compute cluster and compute instance. This outbound can be used to exfiltrate data. For more information, see Data exfiltration protection. |
| AzureFrontDoor.FrontEnd (not needed in Azure operated by 21Vianet) | 443 | Global entry point for Azure Machine Learning studio. Store images and environments for AutoML. |
| MicrosoftContainerRegistry | 443 | Access Docker images provided by Azure. |
| AzureFrontDoor.FirstParty | 443 | Access Docker images provided by Azure. |
| AzureMonitor | 443 | Used to log monitoring and metrics to Azure Monitor. |
| VirtualNetwork | 443 | Required when private endpoints are present in the virtual network or peered virtual networks. |
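As an illustration of how the outbound table can be turned into NSG rules, the following Python sketch generates Azure CLI commands for a subset of its rows. The resource group name, NSG name, rule names, and priorities are hypothetical placeholders, and the sketch only builds command strings; it doesn't run them. Review the generated commands against your own network design before using anything like this.

```python
import shlex

# (service tag, protocol, ports): a subset of the rows in the outbound table.
OUTBOUND_RULES = [
    ("AzureActiveDirectory", "Tcp", [80, 443]),
    ("AzureMachineLearning", "Tcp", [443, 8787, 18881]),
    ("AzureMachineLearning", "Udp", [5831]),
    ("BatchNodeManagement.<region>", "Tcp", [443]),
    ("AzureResourceManager", "Tcp", [443]),
    ("Storage.<region>", "Tcp", [443]),
    ("MicrosoftContainerRegistry", "Tcp", [443]),
    ("AzureMonitor", "Tcp", [443]),
]


def nsg_rule_commands(resource_group, nsg_name, region, start_priority=100):
    """Return one `az network nsg rule create` command string per rule."""
    commands = []
    for offset, (tag, protocol, ports) in enumerate(OUTBOUND_RULES):
        # Substitute the workspace region into regional service tags.
        destination = tag.replace("<region>", region)
        # Hypothetical naming scheme for the rules.
        rule_name = "Allow-" + destination.replace(".", "-") + "-" + protocol
        args = [
            "az", "network", "nsg", "rule", "create",
            "--resource-group", resource_group,
            "--nsg-name", nsg_name,
            "--name", rule_name,
            "--priority", str(start_priority + offset),
            "--direction", "Outbound",
            "--access", "Allow",
            "--protocol", protocol,
            "--source-address-prefixes", "VirtualNetwork",
            "--destination-address-prefixes", destination,
            "--destination-port-ranges", *[str(p) for p in ports],
        ]
        commands.append(shlex.join(args))
    return commands


if __name__ == "__main__":
    # "my-rg" and "my-nsg" are placeholders, not names from this article.
    for command in nsg_rule_commands("my-rg", "my-nsg", "chinaeast2"):
        print(command)
```

Generating the commands from a single data structure keeps the rule list reviewable in one place, which can help when the required service tags change.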

Important

If a compute instance or compute cluster is configured for no public IP, by default it can't access the internet. If it can still send outbound traffic to the internet, that's because Azure default outbound access is in effect and an NSG allows outbound traffic to the internet. We don't recommend using the default outbound access. If you need outbound access to the internet, we recommend using one of the following options instead of the default outbound access:

- Azure Virtual Network NAT with a public IP: For more information on using Virtual Network NAT, see the Virtual Network NAT documentation.

- User-defined route and firewall: Create a user-defined route in the subnet that contains the compute. The Next hop for the route should reference the private IP address of the firewall, with an address prefix of 0.0.0.0/0.

For more information, see the Default outbound access in Azure article.
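The user-defined route option can be sketched as follows. This Python example only builds an Azure CLI command string for a 0.0.0.0/0 route whose next hop is the firewall's private IP address. The resource group, route table, and route names are hypothetical, and using the VirtualAppliance next hop type for a firewall is an assumption based on common Azure practice rather than something this article states.

```python
import ipaddress
import shlex


def firewall_route_command(resource_group, route_table, firewall_private_ip):
    """Build an `az network route-table route create` command for a default route."""
    ip = ipaddress.ip_address(firewall_private_ip)
    # The next hop should be the firewall's private address, not a public one.
    if not ip.is_private:
        raise ValueError(f"{firewall_private_ip} is not a private IP address")
    return shlex.join([
        "az", "network", "route-table", "route", "create",
        "--resource-group", resource_group,
        "--route-table-name", route_table,
        "--name", "default-via-firewall",  # hypothetical route name
        "--address-prefix", "0.0.0.0/0",
        "--next-hop-type", "VirtualAppliance",
        "--next-hop-ip-address", str(ip),
    ])


if __name__ == "__main__":
    # Placeholder names and address for illustration only.
    print(firewall_route_command("my-rg", "my-route-table", "10.0.1.4"))
```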

Recommended configuration for training and deploying models

Outbound traffic

| Service tag(s) | Ports | Purpose |
|---|---|---|
| MicrosoftContainerRegistry and AzureFrontDoor.FirstParty | 443 | Allows use of Docker images that Azure provides for training and inference. Also sets up the Azure Machine Learning router for Azure Kubernetes Service. |

To allow installation of Python packages for training and deployment, allow outbound traffic to the following host names:

Note

The following list doesn't contain all of the hosts required for all Python resources on the internet, only the most commonly used. For example, if you need access to a GitHub repository or other host, you must identify and add the required hosts for that scenario.

| Host name | Purpose |
|---|---|
| anaconda.com, *.anaconda.com | Used to install default packages. |
| *.anaconda.org | Used to get repo data. |
| pypi.org | Used to list dependencies from the default index, if any, and the index isn't overwritten by user settings. If the index is overwritten, you must also allow *.pythonhosted.org. |
| pytorch.org, *.pytorch.org | Used by some examples based on PyTorch. |
| *.tensorflow.org | Used by some examples based on TensorFlow. |
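To see why the table lists both a bare domain and a wildcard for some entries, the following Python sketch checks host names against the patterns above using shell-style matching from the standard library. It's an approximation: real firewalls may interpret wildcards differently (for example, matching only a single label), so treat the matching rule here as an assumption.

```python
from fnmatch import fnmatch

# Host name patterns copied from the table above.
ALLOWED_HOSTS = [
    "anaconda.com", "*.anaconda.com",
    "*.anaconda.org",
    "pypi.org",
    "pytorch.org", "*.pytorch.org",
    "*.tensorflow.org",
]


def is_allowed(host, patterns=ALLOWED_HOSTS):
    """Return True if the host matches any allowed pattern (case-insensitive)."""
    host = host.lower().rstrip(".")
    return any(fnmatch(host, pattern) for pattern in patterns)


if __name__ == "__main__":
    for name in ["repo.anaconda.com", "anaconda.com", "files.pythonhosted.org"]:
        print(name, is_allowed(name))
```

In this sketch, *.anaconda.com doesn't match anaconda.com itself because the pattern requires a dot before the domain, which is why the bare domain is listed separately. A host such as files.pythonhosted.org is rejected until *.pythonhosted.org is added to the list.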

Scenario: Install RStudio on compute instance

To allow installation of RStudio on a compute instance, the firewall needs to allow outbound access to the sites to pull the Docker image from. Add the following Application rule to your Azure Firewall policy:

- Name: AllowRStudioInstall

- Source Type: IP Address

- Source IP Addresses: The IP address range of the subnet where you create the compute instance. For example, 172.16.0.0/24.

- Destination Type: FQDN

- Target FQDN: ghcr.io, pkg-containers.githubusercontent.com

- Protocol: Https:443

To allow the installation of R packages, allow outbound traffic to cloud.r-project.org. This host is used for installing CRAN packages.

Note

If you need access to a GitHub repository or other host, you must identify and add the required hosts for that scenario.

Scenario: Using compute cluster or compute instance with a public IP

Important

A compute instance or compute cluster without a public IP doesn't need inbound traffic from Azure Batch management and Azure Machine Learning services. However, if you have multiple computes and some of them use a public IP address, you need to allow this traffic.

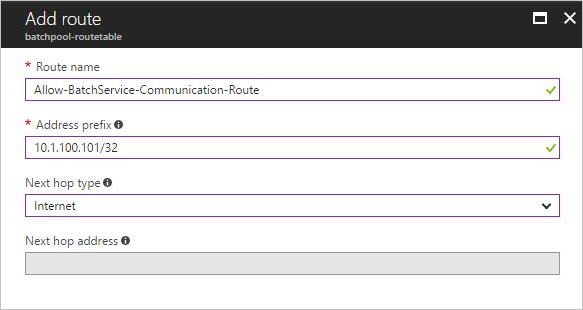

When using an Azure Machine Learning compute instance or compute cluster (with a public IP address), allow inbound traffic from the Azure Machine Learning service. A compute instance or compute cluster with no public IP doesn't require this inbound communication. A Network Security Group allowing this traffic is dynamically created for you. However, you might also need to create user-defined routes (UDRs) if you have a firewall. When creating a UDR for this traffic, you can use either IP addresses or service tags to route the traffic.

For the Azure Machine Learning service, you must add the IP addresses of both the primary and secondary regions. To find the secondary region, see Cross-region replication in Azure. For example, if your Azure Machine Learning service is in East US 2, the secondary region is Central US.

To get a list of IP addresses of the Azure Machine Learning service, download the Azure IP Ranges and Service Tags and search the file for AzureMachineLearning.<region>, where <region> is your Azure region.

Important

The IP addresses may change over time.
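Searching the downloaded file by hand is error prone, so a small script can help. The following Python sketch extracts the address prefixes for a service tag, assuming the file is a JSON document with a top-level values array whose entries have name and properties.addressPrefixes fields. The embedded sample uses reserved documentation address ranges as placeholders, not real Azure prefixes.

```python
import json

# Tiny stand-in for the downloaded file; the prefixes are placeholders.
SAMPLE = """
{
  "values": [
    {"name": "AzureMachineLearning",
     "properties": {"addressPrefixes": ["192.0.2.0/28"]}},
    {"name": "AzureMachineLearning.chinaeast2",
     "properties": {"addressPrefixes": ["198.51.100.0/27", "203.0.113.8/29"]}}
  ]
}
"""


def prefixes_for_tag(document, tag):
    """Return the address prefixes for a service tag, or an empty list."""
    wanted = tag.lower()
    for entry in document.get("values", []):
        # Compare case-insensitively in case the file capitalizes regions.
        if entry.get("name", "").lower() == wanted:
            return list(entry["properties"]["addressPrefixes"])
    return []


if __name__ == "__main__":
    # For a real file, load it with json.load(open(path)) instead of SAMPLE.
    document = json.loads(SAMPLE)
    for prefix in prefixes_for_tag(document, "AzureMachineLearning.chinaeast2"):
        print(prefix)
```

Because the addresses can change, rerun a script like this whenever a new version of the file is published rather than hard-coding the results.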

When creating the UDR, set the Next hop type to Internet. This means the inbound communication from Azure skips your firewall to access the load balancers with the public IPs of the compute instance and compute cluster. A UDR is required because the compute instance and compute cluster get random public IPs at creation, so you can't know the public IPs in advance and register them on your firewall to allow inbound traffic from Azure to those specific IPs. The following image shows an example IP address based UDR in the Azure portal:

For information on configuring UDR, see Route network traffic with a routing table.

Scenario: Firewall between Azure Machine Learning and Azure Storage endpoints

You must also allow outbound access to Storage.<region> on port 445.

Scenario: Workspace created with the hbi_workspace flag enabled

You must also allow outbound access to Keyvault.<region>. This outbound traffic is used to access the key vault instance for the back-end Azure Batch service.

For more information on the hbi_workspace flag, see the data encryption article.

Scenario: Use Kubernetes compute

A Kubernetes cluster running behind an outbound proxy server or firewall needs extra egress network configuration.

- For an AKS cluster, configure the AKS extension network requirements.

In addition to the preceding requirements, the following outbound URLs are also required for Azure Machine Learning:

| Outbound Endpoint | Port | Description | Training | Inference |
|---|---|---|---|---|
| *.kusto.chinacloudapi.cn, *.table.core.chinacloudapi.cn, *.queue.core.chinacloudapi.cn | 443 | Required to upload system logs to Kusto. | ✓ | ✓ |
| <your ACR name>.azurecr.cn, <your ACR name>.<region>.data.azurecr.cn | 443 | Azure container registry, required to pull docker images used for machine learning workloads. | ✓ | ✓ |
| <your storage account name>.blob.core.chinacloudapi.cn | 443 | Azure blob storage, required to fetch machine learning project scripts, data or models, and upload job logs/outputs. | ✓ | ✓ |
| <your workspace ID>.workspace.<region>.api.ml.azure.cn, <region>.experiments.ml.azure.cn, <region>.api.ml.azure.cn | 443 | Azure Machine Learning service API. | ✓ | ✓ |
| pypi.org | 443 | Python package index, to install pip packages used for training job environment initialization. | ✓ | N/A |
| archive.ubuntu.com, security.ubuntu.com, ppa.launchpad.net | 80 | Required to download the necessary security patches. | ✓ | N/A |

Note

- Replace <your workspace ID> with your workspace ID. You can find the ID in the Azure portal, on your Machine Learning resource page, under Properties > Workspace ID.

- Replace <your storage account name> with the storage account name.

- Replace <your ACR name> with the name of the Azure Container Registry for your workspace.

- Replace <region> with the region of your workspace.

In-cluster communication requirements

When you install the Azure Machine Learning extension on Kubernetes compute, all Azure Machine Learning related components are deployed in an azureml namespace. The following in-cluster communication is needed to ensure the ML workloads work well in the AKS cluster.

- The components in the azureml namespace should be able to communicate with the Kubernetes API server.

- The components in the azureml namespace should be able to communicate with each other.

- The components in the azureml namespace should be able to communicate with kube-dns and konnectivity-agent in the kube-system namespace.

- If the cluster is used for real-time inferencing, azureml-fe-xxx pods should be able to communicate with the deployed model pods on port 5001 in other namespaces. azureml-fe-xxx pods should open ports 11001, 12001, 12101, 12201, 20000, 8000, 8001, and 9001 for internal communication.

- If the cluster is used for real-time inferencing, the deployed model pods should be able to communicate with amlarc-identity-proxy-xxx pods on port 9999.

Scenario: Visual Studio Code

Visual Studio Code relies on specific hosts and ports to establish a remote connection.

Hosts

The hosts in this section are used to install Visual Studio Code packages to establish a remote connection between Visual Studio Code and compute instances in your Azure Machine Learning workspace.

Note

This is not a complete list of the hosts required for all Visual Studio Code resources on the internet, only the most commonly used. For example, if you need access to a GitHub repository or other host, you must identify and add the required hosts for that scenario. For a complete list of host names, see Network Connections in Visual Studio Code.

| Host name | Purpose |
|---|---|
| *.vscode.dev, *.vscode-unpkg.net, *.vscode-cdn.net, *.vscodeexperiments.azureedge.net, default.exp-tas.com | Required to access vscode.dev (Visual Studio Code for the Web) |
| code.visualstudio.com | Required to download and install VS Code desktop. This host isn't required for VS Code Web. |
| update.code.visualstudio.com, *.vo.msecnd.net | Used to retrieve VS Code server bits that are installed on the compute instance through a setup script. |
| marketplace.visualstudio.com, vscode.blob.core.chinacloudapi.cn, *.gallerycdn.vsassets.io | Required to download and install VS Code extensions. These hosts enable the remote connection to compute instances using the Azure Machine Learning extension for VS Code. For more information, see Connect to an Azure Machine Learning compute instance in Visual Studio Code. |
| https://github.com/microsoft/vscode-tools-for-ai/tree/master/azureml_remote_websocket_server/* | Used to retrieve websocket server bits that are installed on the compute instance. The websocket server is used to transmit requests from the Visual Studio Code client (desktop application) to the Visual Studio Code server running on the compute instance. The azureml_websocket_server is required only when connecting to an Interactive Job. For more information, see Interact with your jobs (debug and monitor). |
| vscode.download.prss.microsoft.com | Used for Visual Studio Code download CDN |

Ports

You must allow network traffic to ports 8704 to 8710. The VS Code server dynamically selects the first available port within this range.
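The phrase "first available port within this range" can be illustrated with a short Python sketch. This is not the VS Code server's actual implementation, only a generic way to probe a port range by trying to bind each port in order. It shows why the whole range has to be allowed: which port ends up in use depends on what is already running.

```python
import socket


def first_available_port(start=8704, end=8710, host="127.0.0.1"):
    """Return the first port in [start, end] that can be bound, or None."""
    for port in range(start, end + 1):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            try:
                sock.bind((host, port))
            except OSError:
                # Port is in use or not permitted; try the next one.
                continue
            return port
    return None


if __name__ == "__main__":
    print(first_available_port())
```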

Scenario: Third party firewall or Azure Firewall without service tags

The guidance in this section is generic, as each firewall has its own terminology and specific configurations. If you have questions, check the documentation for the firewall you're using.

Tip

If you're using Azure Firewall, and want to use the FQDNs listed in this section instead of using service tags, use the following guidance:

- FQDNs that use HTTP/S ports (80 and 443) should be configured as application rules.

- FQDNs that use other ports should be configured as network rules.

For more information, see Differences in application rules vs. network rules.

If not configured correctly, the firewall can cause problems using your workspace. Various host names are used by the Azure Machine Learning workspace. The following sections list hosts that are required for Azure Machine Learning.
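One way to spot a misconfigured firewall is a basic TCP reachability check run from a machine inside the virtual network. The following Python sketch assumes plain TCP connections are a useful first signal; the two example hosts come from the tables in this article. A successful connection doesn't prove that an FQDN-based application rule will pass the full TLS request, and the check doesn't cover UDP ports.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


if __name__ == "__main__":
    # Example targets taken from the host tables in this article.
    targets = [
        ("login.chinacloudapi.cn", 443),
        ("management.chinacloudapi.cn", 443),
    ]
    for host, port in targets:
        status = "reachable" if can_connect(host, port) else "blocked or unreachable"
        print(f"{host}:{port} {status}")
```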

Dependencies API

You can also use the Azure Machine Learning REST API to get a list of hosts and ports that you must allow outbound traffic to. To use this API, use the following steps:

Get an authentication token. The following command demonstrates using the Azure CLI to get an authentication token and subscription ID:

    TOKEN=$(az account get-access-token --query accessToken -o tsv)
    SUBSCRIPTION=$(az account show --query id -o tsv)

Call the API. In the following command, replace the following values:

- Replace <region> with the Azure region your workspace is in. For example, chinaeast2.

- Replace <resource-group> with the resource group that contains your workspace.

- Replace <workspace-name> with the name of your workspace.

    az rest --method GET \
        --url "https://<region>.api.ml.azure.cn/rp/workspaces/subscriptions/$SUBSCRIPTION/resourceGroups/<resource-group>/providers/Microsoft.MachineLearningServices/workspaces/<workspace-name>/outboundNetworkDependenciesEndpoints?api-version=2018-03-01-preview" \
        --header Authorization="Bearer $TOKEN"

The result of the API call is a JSON document. The following snippet is an excerpt of this document:

    {
      "value": [
        {
          "properties": {
            "category": "Azure Active Directory",
            "endpoints": [
              {
                "domainName": "login.chinacloudapi.cn",
                "endpointDetails": [
                  {
                    "port": 80
                  },
                  {
                    "port": 443
                  }
                ]
              }
            ]
          }
        },
        {
          "properties": {
            "category": "Azure portal",
            "endpoints": [
              {
                "domainName": "management.chinacloudapi.cn",
                "endpointDetails": [
                  {
                    "port": 443
                  }
                ]
              }
            ]
          }
        },
        ...
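To turn a response like this into firewall rules, it can help to flatten it into one row per host and port. The following Python sketch does that for the two entries shown in the excerpt, with the elided remainder left out and the document closed so that it parses. The field names are taken from the excerpt; the function name is hypothetical.

```python
import json

# The two entries from the excerpt above, closed so the JSON is valid.
RESPONSE_EXCERPT = """
{
  "value": [
    {"properties": {
        "category": "Azure Active Directory",
        "endpoints": [
          {"domainName": "login.chinacloudapi.cn",
           "endpointDetails": [{"port": 80}, {"port": 443}]}
        ]}},
    {"properties": {
        "category": "Azure portal",
        "endpoints": [
          {"domainName": "management.chinacloudapi.cn",
           "endpointDetails": [{"port": 443}]}
        ]}}
  ]
}
"""


def flatten_endpoints(document):
    """Return (category, domain name, port) tuples from the API response."""
    rows = []
    for item in document.get("value", []):
        properties = item.get("properties", {})
        for endpoint in properties.get("endpoints", []):
            for detail in endpoint.get("endpointDetails", []):
                rows.append((
                    properties.get("category"),
                    endpoint.get("domainName"),
                    detail.get("port"),
                ))
    return rows


if __name__ == "__main__":
    for category, domain, port in flatten_endpoints(json.loads(RESPONSE_EXCERPT)):
        print(f"{category}: {domain}:{port}")
```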

Azure hosts

The hosts in the following tables are owned by Microsoft, and provide services required for the proper functioning of your workspace. The tables list hosts for Azure operated by 21Vianet regions.

Important

Azure Machine Learning uses Azure Storage Accounts in your subscription and in Azure-managed subscriptions. Where applicable, the following terms are used to differentiate between them in this section:

- Your storage: The Azure Storage Account(s) in your subscription, used to store your data and artifacts such as models, training data, training logs, and Python scripts.

- Microsoft storage: The Azure Machine Learning compute instance and compute clusters rely on Azure Batch, and must access storage located in an Azure-managed subscription. This storage is used only for the management of the compute instances. None of your data is stored here.

General Azure hosts

| Required for | Hosts | Protocol | Ports |
|---|---|---|---|
| Microsoft Entra ID | login.chinacloudapi.cn | TCP | 80, 443 |
| Azure portal | management.azure.cn | TCP | 443 |
| Azure Resource Manager | management.chinacloudapi.cn | TCP | 443 |

Azure Machine Learning hosts

Important

In the following table, replace <storage> with the name of the default storage account for your Azure Machine Learning workspace. Replace <region> with the region of your workspace.

| Required for | Hosts | Protocol | Ports |
|---|---|---|---|
| Azure Machine Learning studio | studio.ml.azure.cn | TCP | 443 |
| API | *.ml.azure.cn | TCP | 443 |
| API | *.azureml.cn | TCP | 443 |
| Model management | *.modelmanagement.ml.azure.cn | TCP | 443 |
| Integrated notebook | *.notebooks.chinacloudapi.cn | TCP | 443 |
| Integrated notebook | <storage>.file.core.chinacloudapi.cn | TCP | 443, 445 |
| Integrated notebook | <storage>.dfs.core.chinacloudapi.cn | TCP | 443 |
| Integrated notebook | <storage>.blob.core.chinacloudapi.cn | TCP | 443 |
| Integrated notebook | microsoftgraph.chinacloudapi.cn | TCP | 443 |
| Integrated notebook | *.aznbcontent.net | TCP | 443 |

Azure Machine Learning compute instance and compute cluster hosts

Tip

- The host for Azure Key Vault is only needed if your workspace was created with the hbi_workspace flag enabled.

- Ports 8787 and 18881 for compute instance are only needed when your Azure Machine Learning workspace has a private endpoint.

- In the following table, replace <storage> with the name of the default storage account for your Azure Machine Learning workspace.

- In the following table, replace <region> with the Azure region that contains your Azure Machine Learning workspace.

- WebSocket communication must be allowed to the compute instance. If you block websocket traffic, Jupyter notebooks won't work correctly.

| Required for | Hosts | Protocol | Ports |
|---|---|---|---|
| Compute cluster/instance | microsoftgraph.chinacloudapi.cn | TCP | 443 |
| Compute instance | *.instances.azureml.cn | TCP | 443 |
| Compute instance | *.instances.ml.azure.cn | TCP | 443, 8787, 18881 |
| Compute instance | <region>.tundra.azureml.cn | UDP | 5831 |
| Azure storage access | *.blob.core.chinacloudapi.cn | TCP | 443 |
| Azure storage access | *.table.core.chinacloudapi.cn | TCP | 443 |
| Azure storage access | *.queue.core.chinacloudapi.cn | TCP | 443 |
| Your storage account | <storage>.file.core.chinacloudapi.cn | TCP | 443, 445 |
| Your storage account | <storage>.blob.core.chinacloudapi.cn | TCP | 443 |
| Azure Key Vault | *.vault.azure.cn | TCP | 443 |

Docker images maintained by Azure Machine Learning

| Required for | Hosts | Protocol | Ports |
|---|---|---|---|
| Azure Container Registry | mcr.microsoft.com, *.data.mcr.microsoft.com | TCP | 443 |

Tip

- Azure Container Registry is required for any custom Docker image. This includes small modifications (such as additional packages) to base images provided by Microsoft. It is also required by the internal training job submission process of Azure Machine Learning. Furthermore, Microsoft Container Registry is always needed regardless of the scenario.

- If you plan on using federated identity, follow the Best practices for securing Active Directory Federation Services article.

Also, use the information in the compute with public IP section to add IP addresses for BatchNodeManagement and AzureMachineLearning.

For information on restricting access to models deployed to AKS, see Restrict egress traffic in Azure Kubernetes Service.

Monitoring, metrics, and diagnostics

If you haven't secured Azure Monitor for the workspace, you must allow outbound traffic to the following hosts:

Note

The information logged to these hosts is also used by Azure Support to diagnose any problems you run into with your workspace.

- dc.applicationinsights.azure.com

- dc.applicationinsights.microsoft.com

- dc.services.visualstudio.com

- *.in.applicationinsights.azure.com

For a list of IP addresses for these hosts, see IP addresses used by Azure Monitor.

Next steps

This article is part of a series on securing an Azure Machine Learning workflow. See the other articles in this series:

For more information on configuring Azure Firewall, see Tutorial: Deploy and configure Azure Firewall using the Azure portal.