APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

Tip

You can use Azure Machine Learning managed virtual networks instead of the steps in this article. With a managed virtual network, Azure Machine Learning handles the job of network isolation for your workspace and managed computes. You can also add private endpoints for resources needed by the workspace, such as Azure Storage Account. For more information, see Workspace managed network isolation.

In this article, you learn how to secure an Azure Machine Learning workspace and its associated resources in an Azure Virtual Network.

This article is part of a series on securing an Azure Machine Learning workflow. See the other articles in this series:

- Virtual network overview

- Secure the training environment

- Secure the inference environment

- Enable studio functionality

- Use custom DNS

- Use a firewall

- API platform network isolation

For a tutorial on creating a secure workspace, see Tutorial: Create a secure workspace, Bicep template, or Terraform template.

In this article, you learn how to enable the following workspace resources in a virtual network:

- Azure Machine Learning workspace

- Azure Storage accounts

- Azure Key Vault

- Azure Container Registry

Prerequisites

Read the Network security overview article to understand common virtual network scenarios and overall virtual network architecture.

Read the Azure Machine Learning best practices for enterprise security article to learn about best practices.

An existing virtual network and subnet to use with your compute resources.

Warning

Do not use the 172.17.0.0/16 IP address range for your VNet. This is the default subnet range used by the Docker bridge network, and using it for your VNet results in errors. Other ranges may also conflict, depending on what you want to connect to the virtual network. For example, a conflict occurs if you plan to connect your on-premises network to the VNet and your on-premises network also uses the 172.16.0.0/16 range. Ultimately, it is up to you to plan your network infrastructure.
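One way to check an address plan against the warning above is Python's standard `ipaddress` module. The following is a minimal sketch, not an official validation tool. The function name `find_conflicts` and the sample ranges are illustrative assumptions.

```python
import ipaddress

# Default Docker bridge range named in the warning above.
DOCKER_BRIDGE = ipaddress.ip_network("172.17.0.0/16")


def find_conflicts(vnet_cidr, other_cidrs=()):
    """Return every range (Docker bridge first) that overlaps the proposed VNet range."""
    vnet = ipaddress.ip_network(vnet_cidr)
    candidates = [DOCKER_BRIDGE] + [ipaddress.ip_network(c) for c in other_cidrs]
    return [str(net) for net in candidates if vnet.overlaps(net)]


# Hypothetical plan: the on-premises network already uses 172.16.0.0/16.
print(find_conflicts("172.16.0.0/12", ["172.16.0.0/16"]))  # overlaps both ranges
print(find_conflicts("10.0.0.0/16", ["172.16.0.0/16"]))    # no overlap
```

An empty list means the proposed range doesn't overlap the Docker bridge range or any other range you passed in. It doesn't prove the plan is free of every possible conflict.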

To deploy resources into a virtual network or subnet, your user account must have permissions to the following actions in Azure role-based access control (Azure RBAC):

- "Microsoft.Network/*/read" on the virtual network resource. This permission isn't needed for Azure Resource Manager (ARM) template deployments.

- "Microsoft.Network/virtualNetworks/join/action" on the virtual network resource.

- "Microsoft.Network/virtualNetworks/subnets/join/action" on the subnet resource.

For more information on Azure RBAC with networking, see Networking built-in roles.
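To reason about whether a role covers the actions listed above, you can compare them against the role's action patterns. The following sketch is a simplified model only: it assumes wildcard matching on action strings and ignores `NotActions`, data actions, and assignment scope, all of which Azure RBAC also evaluates. The function name and the sample pattern lists are hypothetical.

```python
from fnmatch import fnmatchcase

# Actions listed above. The first one contains a wildcard and is treated as literal text here.
REQUIRED_ACTIONS = [
    "Microsoft.Network/*/read",
    "Microsoft.Network/virtualNetworks/join/action",
    "Microsoft.Network/virtualNetworks/subnets/join/action",
]


def missing_actions(granted_patterns, required=REQUIRED_ACTIONS):
    """Return the required actions that no granted pattern covers (case-insensitive)."""
    granted = [pattern.lower() for pattern in granted_patterns]
    return [
        action
        for action in required
        if not any(fnmatchcase(action.lower(), pattern) for pattern in granted)
    ]


# Hypothetical action lists taken from two role definitions.
print(missing_actions(["Microsoft.Network/*"]))  # nothing missing
print(missing_actions(["*/read"]))               # both join actions missing
```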

Azure Container Registry

Your Azure Container Registry must be Premium version. For more information on upgrading, see Changing SKUs.

If your Azure Container Registry uses a private endpoint, we recommend that it be in the same virtual network as the storage account and compute targets used for training or inference. However, it can also be in a peered virtual network.

If it uses a service endpoint, it must be in the same virtual network and subnet as the storage account and compute targets.

Your Azure Machine Learning workspace must contain an Azure Machine Learning compute cluster.

Limitations

Azure storage account

If you plan to use Azure Machine Learning studio and the storage account is also in the virtual network, there are extra validation requirements:

- If the storage account uses a service endpoint, the workspace private endpoint and storage service endpoint must be in the same subnet of the virtual network.

- If the storage account uses a private endpoint, the workspace private endpoint and storage private endpoint must be in the same virtual network. In this case, they can be in different subnets.
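The two placement rules above can be expressed as a small check. This is a sketch for planning purposes, not something the service exposes. The `Endpoint` type, the function name, and the network names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    kind: str    # "private" or "service"
    vnet: str    # virtual network name
    subnet: str  # subnet name


def studio_storage_placement_ok(workspace_pe: Endpoint, storage: Endpoint) -> bool:
    """Apply the studio validation rules described above."""
    if storage.kind == "service":
        # Service endpoint: same virtual network and same subnet.
        return (workspace_pe.vnet, workspace_pe.subnet) == (storage.vnet, storage.subnet)
    if storage.kind == "private":
        # Private endpoint: same virtual network, subnets may differ.
        return workspace_pe.vnet == storage.vnet
    raise ValueError(f"unknown endpoint kind: {storage.kind!r}")


workspace = Endpoint("private", "ml-vnet", "workspace-subnet")
print(studio_storage_placement_ok(workspace, Endpoint("private", "ml-vnet", "storage-subnet")))
print(studio_storage_placement_ok(workspace, Endpoint("service", "ml-vnet", "storage-subnet")))
```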

Azure Container Instances

When your Azure Machine Learning workspace is configured with a private endpoint, deploying to Azure Container Instances in a virtual network isn't supported. Instead, consider using a Managed online endpoint with network isolation.

Azure Container Registry

When your Azure Machine Learning workspace or any associated resource is configured with a private endpoint, you might need to set up a user-managed compute cluster for Azure Machine Learning environment image builds. By default, image builds use serverless compute, which is currently intended for scenarios with no network restrictions on the resources associated with the Azure Machine Learning workspace.

Important

The compute cluster used to build Docker images needs to be able to access the package repositories that are used to train and deploy your models. You might need to add network security rules that allow access to public repos, use private Python packages, or use custom Docker images (SDK v1) that already include the packages.

Azure Monitor

Warning

Azure Monitor supports using Azure Private Link to connect to a VNet. However, you must use the open Private Link mode in Azure Monitor. For more information, see Private Link access modes: Private only vs. Open.

Required public internet access

Azure Machine Learning requires both inbound and outbound access to the public internet. The following tables provide an overview of the required access and what purpose it serves. For service tags that end in .region, replace region with the Azure region that contains your workspace. For example, Storage.chinanorth:

Tip

The required tab lists the required inbound and outbound configuration. The situational tab lists optional inbound and outbound configurations required by specific configurations you might want to enable.

| Direction | Protocol & ports | Service tag | Purpose |
|---|---|---|---|
| Outbound | TCP: 80, 443 | AzureActiveDirectory | Authentication using Microsoft Entra ID. |
| Outbound | TCP: 443, 18881; UDP: 5831 | AzureMachineLearning | Using Azure Machine Learning services. Python intellisense in notebooks uses port 18881. Creating, updating, and deleting an Azure Machine Learning compute instance uses port 5831. |
| Outbound | ANY: 443 | BatchNodeManagement.region | Communication with Azure Batch back-end for Azure Machine Learning compute instances/clusters. |
| Outbound | TCP: 443 | AzureResourceManager | Creation of Azure resources with Azure Machine Learning, Azure CLI, and Azure Machine Learning SDK. |
| Outbound | TCP: 443 | Storage.region | Access data stored in the Azure Storage Account for compute cluster and compute instance. For information on preventing data exfiltration over this outbound, see Data exfiltration protection. |
| Outbound | TCP: 443 | AzureFrontDoor.FrontEnd (not needed in Azure operated by 21Vianet) | Global entry point for Azure Machine Learning studio. Store images and environments for AutoML. For information on preventing data exfiltration over this outbound, see Data exfiltration protection. |
| Outbound | TCP: 443 | MicrosoftContainerRegistry.region (this tag has a dependency on the AzureFrontDoor.FirstParty tag) | Access docker images provided by Microsoft. Setup of the Azure Machine Learning router for Azure Kubernetes Service. |
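The region substitution described for service tags can be sketched as follows. The rule list mirrors the required outbound table above. The function name, the dictionary layout, and the `westus2` region are illustrative assumptions, and the sketch doesn't handle the 21Vianet exception for AzureFrontDoor.FrontEnd.

```python
# (service tag, protocol, ports) taken from the required outbound table above.
REQUIRED_OUTBOUND = [
    ("AzureActiveDirectory", "TCP", [80, 443]),
    ("AzureMachineLearning", "TCP", [443, 18881]),
    ("AzureMachineLearning", "UDP", [5831]),
    ("BatchNodeManagement.region", "ANY", [443]),
    ("AzureResourceManager", "TCP", [443]),
    ("Storage.region", "TCP", [443]),
    ("AzureFrontDoor.FrontEnd", "TCP", [443]),
    ("MicrosoftContainerRegistry.region", "TCP", [443]),
]


def expand_rules(region):
    """Replace the '.region' placeholder in each tag with the workspace region."""
    rules = []
    for tag, protocol, ports in REQUIRED_OUTBOUND:
        if tag.endswith(".region"):
            tag = tag[: -len("region")] + region
        rules.append(
            {"direction": "Outbound", "service_tag": tag, "protocol": protocol, "ports": ports}
        )
    return rules


# Hypothetical workspace region.
for rule in expand_rules("westus2"):
    print(rule["service_tag"], rule["protocol"], rule["ports"])
```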

Tip

If you need the IP addresses instead of service tags, use one of the following options:

- Download a list from Azure IP Ranges and Service Tags.

- Use the Azure CLI az network list-service-tags command.

- Use the Azure PowerShell Get-AzNetworkServiceTag command.

The IP addresses may change periodically.
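If you download the service tag list, you can look up the address prefixes for one tag with a few lines of Python. This sketch assumes the file has a top-level `values` list whose entries carry `name` and `properties.addressPrefixes`; verify that layout against the file you download. The sample data is made up and uses reserved documentation ranges, not real Azure addresses.

```python
import json


def prefixes_for(service_tags, tag_name):
    """Return the address prefixes for a tag, or an empty list if the tag is absent."""
    for entry in service_tags.get("values", []):
        if entry["name"].lower() == tag_name.lower():
            return entry["properties"]["addressPrefixes"]
    return []


# Trimmed, made-up sample in the assumed shape of the downloaded file.
SAMPLE = json.loads("""
{
  "values": [
    {"name": "AzureMachineLearning",
     "properties": {"addressPrefixes": ["203.0.113.0/24"]}},
    {"name": "Storage.WestUS2",
     "properties": {"addressPrefixes": ["198.51.100.0/24", "192.0.2.0/24"]}}
  ]
}
""")

print(prefixes_for(SAMPLE, "Storage.westus2"))
```

Because the addresses can change, rerun the lookup against a fresh download rather than storing the prefixes long term.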

You may also need to allow outbound traffic to Visual Studio Code and non-Microsoft sites for the installation of packages required by your machine learning project. The following table lists commonly used repositories for machine learning:

| Host name | Purpose |
|---|---|
| anaconda.com, *.anaconda.com | Used to install default packages. |
| *.anaconda.org | Used to get repo data. |
| pypi.org | Used to list dependencies from the default index, if any, and the index isn't overwritten by user settings. If the index is overwritten, you must also allow *.pythonhosted.org. |
| cloud.r-project.org | Used when installing CRAN packages for R development. |
| *.pytorch.org | Used by some examples based on PyTorch. |
| *.tensorflow.org | Used by some examples based on TensorFlow. |
| code.visualstudio.com | Required to download and install Visual Studio Code desktop. This isn't required for Visual Studio Code Web. |
| update.code.visualstudio.com, *.vo.msecnd.net | Used to retrieve Visual Studio Code server bits that are installed on the compute instance through a setup script. |
| marketplace.visualstudio.com, vscode.blob.core.chinacloudapi.cn, *.gallerycdn.vsassets.io | Required to download and install Visual Studio Code extensions. These hosts enable the remote connection to Compute Instances provided by the Azure ML extension for Visual Studio Code. For more information, see Connect to an Azure Machine Learning compute instance in Visual Studio Code. |
| raw.githubusercontent.com/microsoft/vscode-tools-for-ai/master/azureml_remote_websocket_server/* | Used to retrieve websocket server bits, which are installed on the compute instance. The websocket server is used to transmit requests from Visual Studio Code client (desktop application) to Visual Studio Code server running on the compute instance. |
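Before you write firewall rules, it can help to test which host names the table above covers. The following sketch treats each entry as a shell-style wildcard pattern, which is an assumption: your firewall may interpret wildcards differently. It compares host names only, so the path on the raw.githubusercontent.com entry is dropped, and *.pythonhosted.org is left out because it's only needed when the index is overwritten.

```python
from fnmatch import fnmatchcase

# Host patterns from the table above (host part only).
ALLOWED_HOSTS = [
    "anaconda.com", "*.anaconda.com", "*.anaconda.org", "pypi.org",
    "cloud.r-project.org", "*.pytorch.org", "*.tensorflow.org",
    "code.visualstudio.com", "update.code.visualstudio.com", "*.vo.msecnd.net",
    "marketplace.visualstudio.com", "vscode.blob.core.chinacloudapi.cn",
    "*.gallerycdn.vsassets.io", "raw.githubusercontent.com",
]


def is_allowed(host, patterns=ALLOWED_HOSTS):
    """Return True if the host name matches any allowed pattern (case-insensitive)."""
    host = host.lower()
    return any(fnmatchcase(host, pattern) for pattern in patterns)


print(is_allowed("download.pytorch.org"))    # matches *.pytorch.org
print(is_allowed("files.pythonhosted.org"))  # not in the list
```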

Note

When you use the Azure Machine Learning VS Code extension, the remote compute instance requires access to public repositories to install the packages required by the extension. If the compute instance requires a proxy to access these public repositories or the internet, you need to set and export the HTTP_PROXY and HTTPS_PROXY environment variables in the ~/.bashrc file of the compute instance. This process can be automated at provisioning time by using a custom script.
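As a rough illustration of the proxy settings described in the note above, the following sketch generates the two `export` lines that a setup script could append to `~/.bashrc`. The function name and the proxy URL are hypothetical, and the sketch assumes the same proxy serves both HTTP and HTTPS traffic.

```python
import shlex


def bashrc_proxy_lines(proxy_url):
    """Build shell lines that set and export HTTP_PROXY and HTTPS_PROXY."""
    quoted = shlex.quote(proxy_url)  # quote only if the URL needs it
    return [f"export HTTP_PROXY={quoted}", f"export HTTPS_PROXY={quoted}"]


# Hypothetical proxy address; replace it with your own.
for line in bashrc_proxy_lines("http://proxy.example.internal:3128"):
    print(line)
```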

When using Azure Kubernetes Service (AKS) with Azure Machine Learning, allow the following traffic to the AKS VNet:

- General inbound/outbound requirements for AKS as described in the Restrict egress traffic in Azure Kubernetes Service article.

- Outbound to mcr.microsoft.com.

- When deploying a model to an AKS cluster, use the guidance in the Deploy ML models to Azure Kubernetes Service article.

For information on using a firewall solution, see Configure required input and output communication.

Secure the workspace with private endpoint

Azure Private Link lets you connect to your workspace using a private endpoint. The private endpoint is a set of private IP addresses within your virtual network. You can then limit access to your workspace to only occur over the private IP addresses. A private endpoint helps reduce the risk of data exfiltration.

For more information on configuring a private endpoint for your workspace, see How to configure a private endpoint.

Warning

Securing a workspace with private endpoints does not ensure end-to-end security by itself. You must follow the steps in the rest of this article, and the VNet series, to secure individual components of your solution. For example, if you use a private endpoint for the workspace, but your Azure Storage Account is not behind the VNet, traffic between the workspace and storage does not use the VNet for security.

Secure Azure storage accounts

Azure Machine Learning supports storage accounts configured to use either a private endpoint or service endpoint.

In the Azure portal, select the Azure Storage Account.

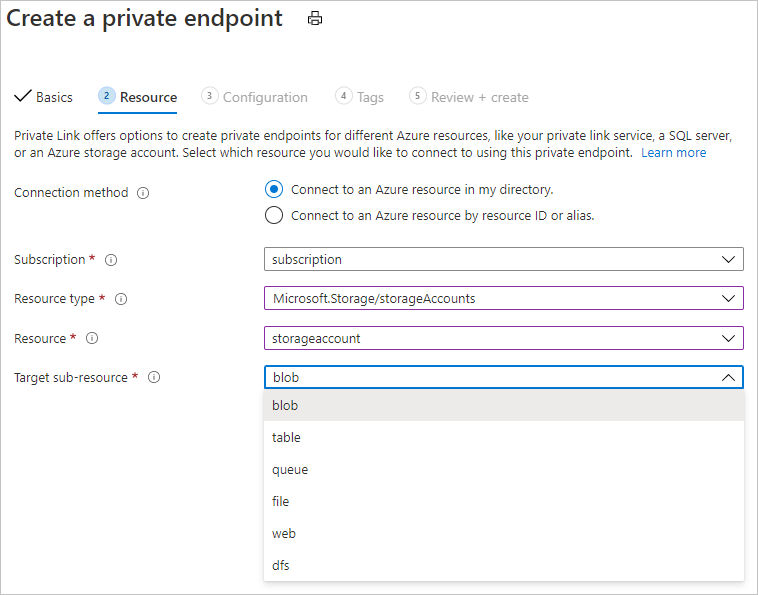

Use the information in Use private endpoints for Azure Storage to add private endpoints for the following storage resources:

- Blob

- File

- Queue - Only needed if you plan to use Batch endpoints or the ParallelRunStep in an Azure Machine Learning pipeline.

- Table - Only needed if you plan to use Batch endpoints or the ParallelRunStep in an Azure Machine Learning pipeline.
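The list above reduces to a simple rule, sketched here for planning scripts. The function and parameter names are hypothetical. The lowercase values stand for the Blob, File, Queue, and Table storage resources.

```python
def required_storage_subresources(uses_batch_or_parallel_run):
    """Return the storage resources that need a private endpoint."""
    subresources = ["blob", "file"]
    if uses_batch_or_parallel_run:
        # Queue and Table are only needed for Batch endpoints or ParallelRunStep.
        subresources += ["queue", "table"]
    return subresources


print(required_storage_subresources(False))
print(required_storage_subresources(True))
```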

Tip

When configuring a storage account that is not the default storage, select the Target subresource type that corresponds to the storage account you want to add.

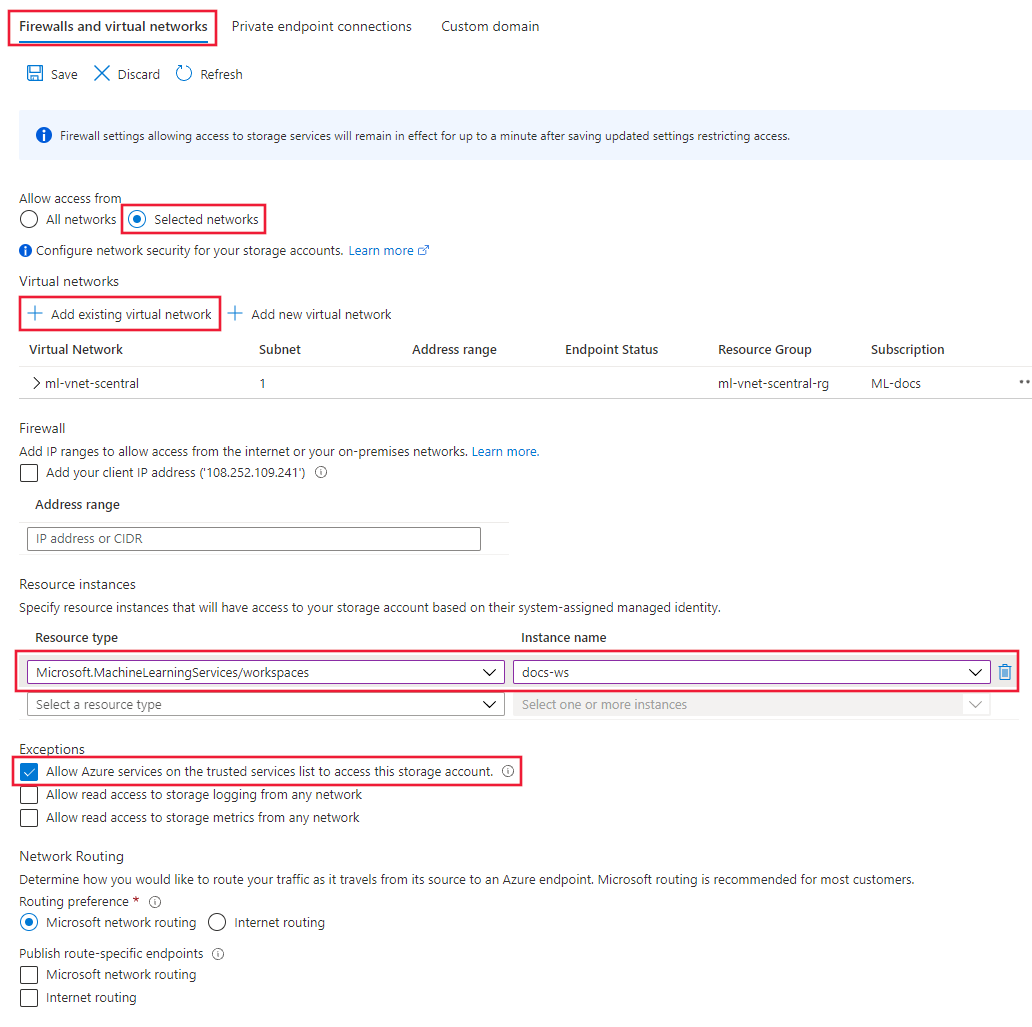

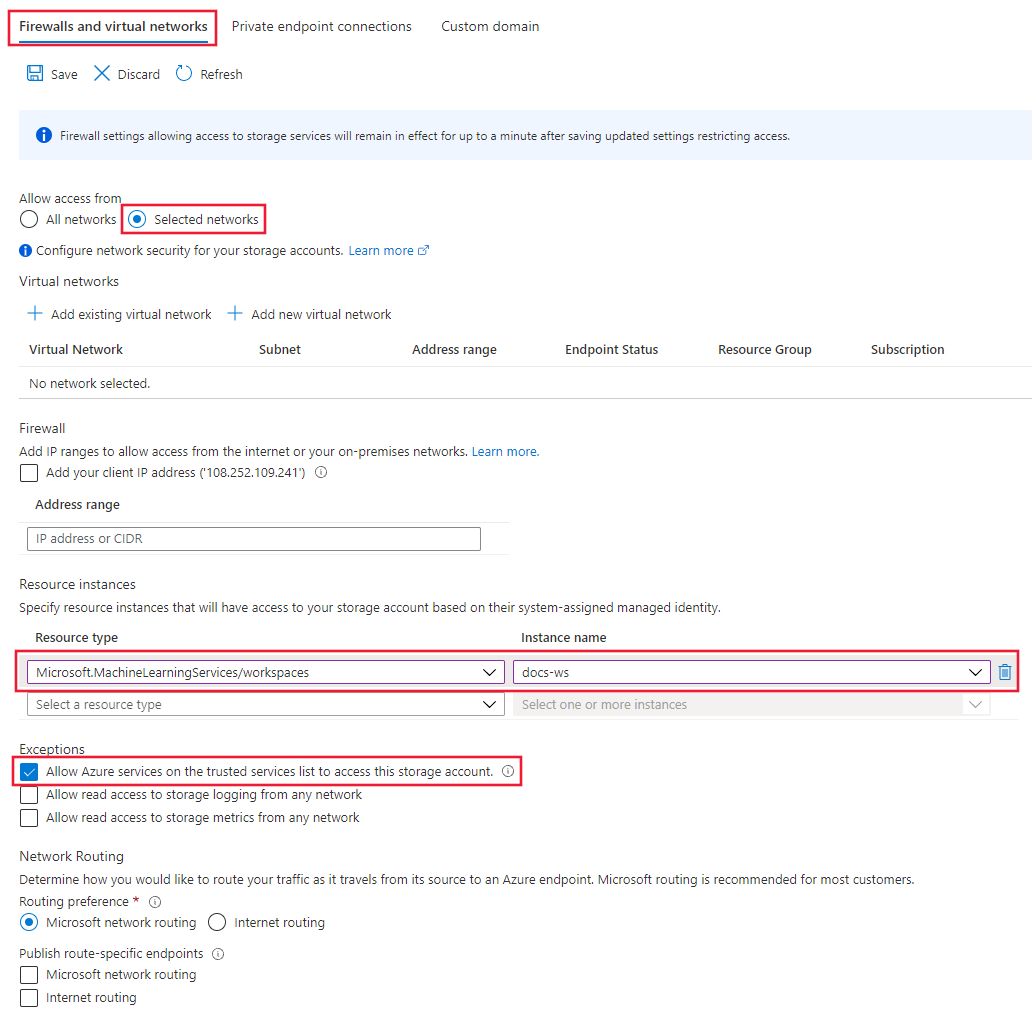

After creating the private endpoints for the storage resources, select the Firewalls and virtual networks tab under Networking for the storage account.

Select Selected networks, and then under Resource instances, select Microsoft.MachineLearningServices/Workspace as the Resource type. Select your workspace using Instance name. For more information, see Trusted access based on system-assigned managed identity.

Tip

Alternatively, you can select Allow Azure services on the trusted services list to access this storage account to more broadly allow access from trusted services. For more information, see Configure Azure Storage firewalls and virtual networks.

Select Save to save the configuration.

Tip

When using a private endpoint, you can also disable anonymous access. For more information, see disallow anonymous access.

Secure Azure Key Vault

Azure Machine Learning uses an associated Key Vault instance to store the following credentials:

- The associated storage account connection string

- Passwords to Azure Container Registry instances

- Connection strings to data stores

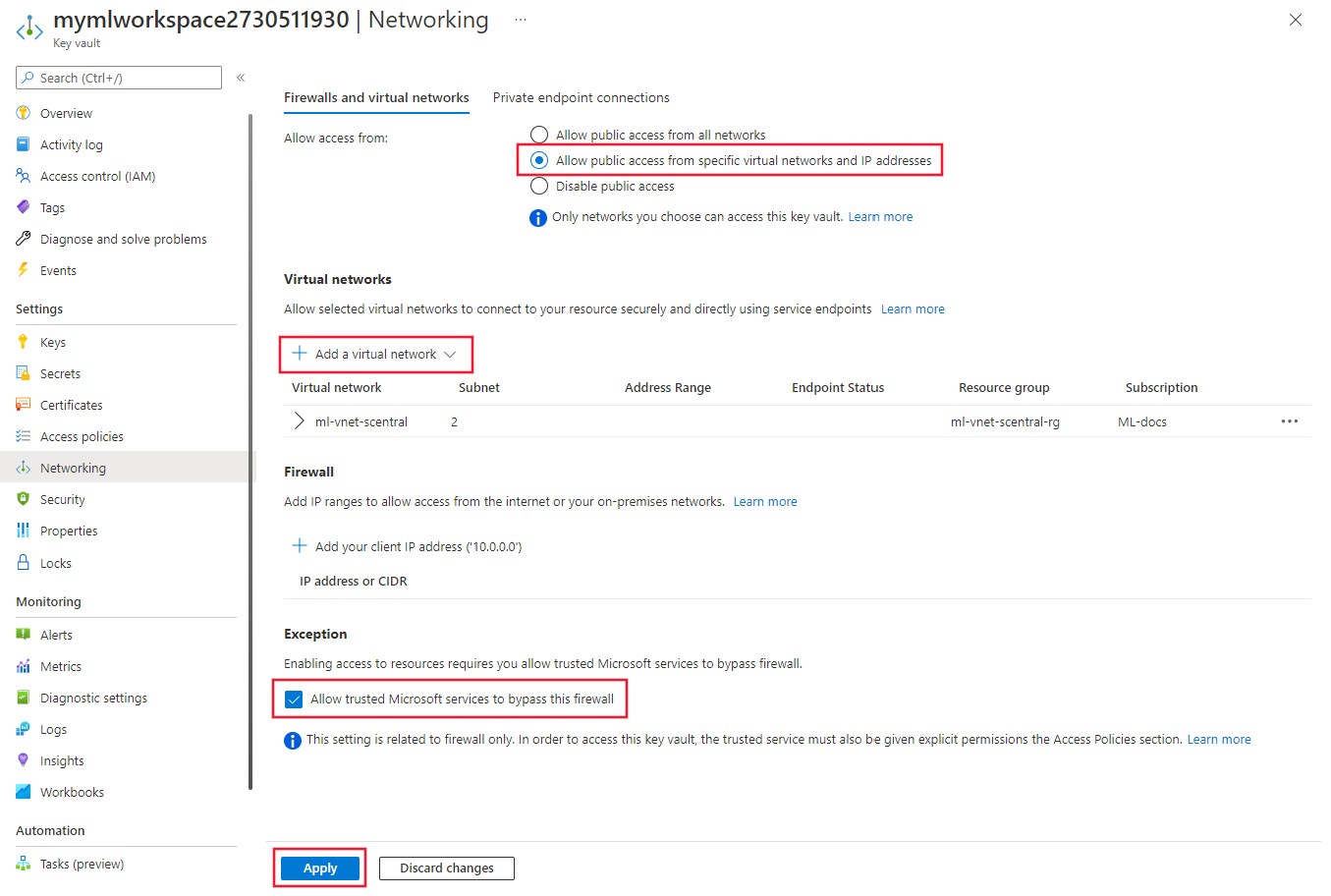

Azure Key Vault can be configured to use either a private endpoint or service endpoint. To use Azure Machine Learning experimentation capabilities with Azure Key Vault behind a virtual network, use the following steps:

Tip

We recommend that the key vault be in the same VNet as the workspace. However, it can be in a peered VNet.

For information on using a private endpoint with Azure Key Vault, see Integrate Key Vault with Azure Private Link.

Enable Azure Container Registry (ACR)

Tip

If you did not use an existing Azure Container Registry when creating the workspace, one may not exist. By default, the workspace will not create an ACR instance until it needs one. To force the creation of one, train or deploy a model using your workspace before using the steps in this section.

Azure Container Registry can be configured to use a private endpoint. Use the following steps to configure your workspace to use ACR when it is in the virtual network:

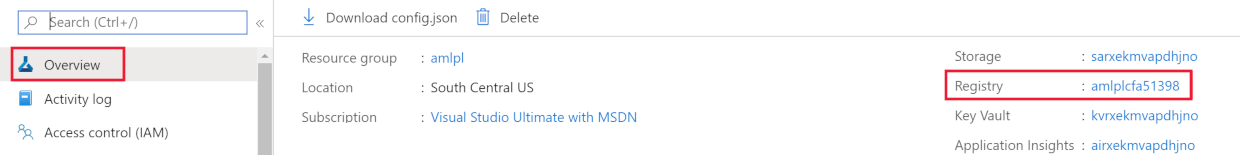

Find the name of the Azure Container Registry for your workspace, using one of the following methods:

APPLIES TO:

Azure CLI ml extension v2 (current)

If you've installed the Machine Learning extension v2 for Azure CLI, you can use the az ml workspace show command to show the workspace information. The v1 extension doesn't return this information.

az ml workspace show -n yourworkspacename -g resourcegroupname --query 'container_registry'

This command returns a value similar to "/subscriptions/{GUID}/resourceGroups/{resourcegroupname}/providers/Microsoft.ContainerRegistry/registries/{ACRname}". The last part of the string is the name of the Azure Container Registry for the workspace.

Limit access to your virtual network using the steps in Connect privately to an Azure Container Registry. When adding the virtual network, select the virtual network and subnet for your Azure Machine Learning resources.

Configure the ACR for the workspace to Allow access by trusted services.
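The registry name is the last segment of the resource ID that az ml workspace show returns, as described earlier in these steps. The following sketch extracts it. The function name and the sample ID, including the all-zero GUID, are hypothetical.

```python
def acr_name_from_resource_id(resource_id):
    """Return the registry name from an Azure Container Registry resource ID."""
    # Tolerate the surrounding quotes that the CLI prints around the value.
    parts = resource_id.strip().strip('"').strip("/").split("/")
    if len(parts) < 2 or parts[-2].lower() != "registries":
        raise ValueError(f"not a container registry resource ID: {resource_id!r}")
    return parts[-1]


# Hypothetical value in the shape shown above.
sample_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/my-rg"
    "/providers/Microsoft.ContainerRegistry/registries/myacr"
)
print(acr_name_from_resource_id(sample_id))
```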

By default, Azure Machine Learning will try to use a serverless compute to build the image. This works only when the workspace-dependent resources such as Storage Account or Container Registry are not under any network restriction (private endpoints). If your workspace-dependent resources are network restricted, use an image-build-compute instead.

To set up an image-build compute, create an Azure Machine Learning CPU SKU compute cluster in the same VNet as your workspace-dependent resources. This cluster can then be set as the default image-build compute and will be used to build every image in your workspace from that point onwards. Use one of the following methods to configure the workspace to build Docker images using the compute cluster.

Important

The following limitations apply when using a compute cluster for image builds:

- Only a CPU SKU is supported.

- If you use a compute cluster configured for no public IP address, you must provide some way for the cluster to access the public internet. Internet access is required when accessing images stored on the Microsoft Container Registry and packages installed from PyPI, Conda, and so on. You need to configure User Defined Routing (UDR) to reach a public IP to access the internet. For example, you can use the public IP of your firewall, or you can use Virtual Network NAT with a public IP. For more information, see How to securely train in a VNet.

You can use the az ml workspace update command to set a build compute. The command is the same for both the v1 and v2 Azure CLI extensions for machine learning. In the following command, replace myworkspace with your workspace name, myresourcegroup with the resource group that contains the workspace, and mycomputecluster with the compute cluster name:

az ml workspace update --name myworkspace --resource-group myresourcegroup --image-build-compute mycomputecluster

You can switch back to serverless compute by executing the same command and passing an empty string as the compute name: --image-build-compute ''.
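If you script this step, building the command as an argument list avoids quoting mistakes with the empty value. The following sketch only constructs and prints the command shown above; it doesn't run it. The function name is hypothetical.

```python
import shlex


def image_build_command(workspace, resource_group, compute=""):
    """Build the az ml workspace update command; an empty compute switches back to serverless."""
    return [
        "az", "ml", "workspace", "update",
        "--name", workspace,
        "--resource-group", resource_group,
        "--image-build-compute", compute,
    ]


# Set a build cluster, then switch back to serverless compute.
print(shlex.join(image_build_command("myworkspace", "myresourcegroup", "mycomputecluster")))
print(shlex.join(image_build_command("myworkspace", "myresourcegroup")))
```

To run the command from Python, you could pass the list to `subprocess.run`, assuming the Azure CLI and the ml extension are installed and you're signed in.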

Tip

When ACR is behind a VNet, you can also disable public access to it.

Secure Azure Monitor and Application Insights

To enable network isolation for Azure Monitor and the Application Insights instance for the workspace, use the following steps:

1. Open your Application Insights resource in the Azure portal. The Overview tab may or may not have a Workspace property. If it doesn't have the property, perform step 2. If it does, then you can proceed directly to step 3.

Tip

New workspaces create a workspace-based Application Insights resource by default. If your workspace was recently created, then you would not need to perform step 2.

2. Upgrade the Application Insights instance for your workspace. For steps on how to upgrade, see Migrate to workspace-based Application Insights resources.

3. Create an Azure Monitor Private Link Scope and add the Application Insights instance from step 1 to the scope. For more information, see Configure your Azure Monitor private link.

Securely connect to your workspace

To connect to a workspace that's secured behind a VNet, use one of the following methods:

Azure VPN gateway - Connects on-premises networks to the VNet over a private connection. Connection is made over the public internet. There are two types of VPN gateways that you might use:

- Point-to-site: Each client computer uses a VPN client to connect to the VNet.

- Site-to-site: A VPN device connects the VNet to your on-premises network.

ExpressRoute - Connects on-premises networks into the cloud over a private connection. Connection is made using a connectivity provider.

Azure Bastion - In this scenario, you create an Azure Virtual Machine (sometimes called a jump box) inside the VNet. You then connect to the VM using Azure Bastion. Bastion allows you to connect to the VM using either an RDP or SSH session from your local web browser. You then use the jump box as your development environment. Since it is inside the VNet, it can directly access the workspace. For an example of using a jump box, see Tutorial: Create a secure workspace.

Important

When using a VPN gateway or ExpressRoute, you will need to plan how name resolution works between your on-premises resources and those in the VNet. For more information, see Use a custom DNS server.

If you have problems connecting to the workspace, see Troubleshoot secure workspace connectivity.

Workspace diagnostics

You can run diagnostics on your workspace from Azure Machine Learning studio or the Python SDK. After diagnostics run, a list of any detected problems is returned. This list includes links to possible solutions. For more information, see How to use workspace diagnostics.

Public access to workspace

Important

While this is a supported configuration for Azure Machine Learning, Azure doesn't recommend it. You should verify this configuration with your security team before using it in production.

In some cases, you might need to allow access to the workspace from the public network (without connecting through the virtual network using the methods detailed in the Securely connect to your workspace section). Access over the public internet is secured using TLS.

To enable public network access to the workspace, use the following steps:

- Enable public access to the workspace after configuring the workspace's private endpoint.

- Configure the Azure Storage firewall to allow communication with the IP address of clients that connect over the public internet. You might need to change the allowed IP address if the clients don't have a static IP, for example, if one of your data scientists is working from home and can't establish a VPN connection to the VNet.

Next steps

This article is part of a series on securing an Azure Machine Learning workflow. See the other articles in this series: