
An Azure Machine Learning managed feature store lets you discover, create, and operationalize features. Features serve as the connective tissue in the machine learning lifecycle, starting from the prototyping phase, where you experiment with various features. That lifecycle continues to the operationalization phase, where you deploy your models, and inference steps look up the feature data. For more information about feature stores, read the feature store concepts document.

This tutorial describes how to configure secure ingress through a private endpoint, and secure egress through a managed virtual network.

Part 1 of this tutorial series showed how to create a feature set specification with custom transformations, and use that feature set to generate training data. Part 2 showed how to enable materialization and perform a backfill, and how to experiment with features as a way to improve model performance. Part 3 showed how a feature store increases agility in the experimentation and training flows, and described how to run batch inference. Tutorial 4 explained how to use a feature store for online/real-time inference use cases. Tutorial 5 demonstrated how to develop a feature set with a custom data source. Tutorial 6 shows how to:

- Set up the necessary resources for network isolation of a managed feature store.

- Create a new feature store resource.

- Set up your feature store to support network isolation scenarios.

- Update your project workspace (current workspace) to support network isolation scenarios.

Prerequisites

Note

This tutorial uses an Azure Machine Learning notebook with Serverless Spark Compute.

An Azure Machine Learning workspace, enabled with a managed virtual network for serverless Spark jobs.

To configure your project workspace:

Create a YAML file named network.yml:

managed_network:
  isolation_mode: allow_internet_outbound

Execute these commands to update the workspace and provision the managed virtual network for serverless Spark jobs:

az ml workspace update --file network.yml --resource-group my_resource_group --name my_workspace_name
az ml workspace provision-network --resource-group my_resource_group --name my_workspace_name --include-spark

For more information, visit Configure for serverless spark job.

Your user account must have the Owner or Contributor role assigned to the resource group where you create the feature store. Your user account also needs the User Access Administrator role.

Important

For your Azure Machine Learning workspace, set the isolation_mode to allow_internet_outbound. This is the only supported network isolation mode. This tutorial shows how to connect securely to the source data, the materialization store, and the observation data through private endpoints.

Set up

This tutorial uses the Python feature store core SDK (azureml-featurestore). The Python SDK is used only for feature set development and testing. The CLI is used for create, read, update, and delete (CRUD) operations on feature stores, feature sets, and feature store entities. This is useful in continuous integration and continuous delivery (CI/CD) or GitOps scenarios, where CLI/YAML is preferred.

You don't need to explicitly install these resources for this tutorial, because the conda.yml file used in the setup instructions shown here covers them.

To prepare the notebook environment for development:

Clone the azureml-examples repository to your local machine with this command:

git clone --depth 1 https://github.com/Azure/azureml-examples

You can also download a zip file from the azureml-examples repository. On that page, first select the Code dropdown, and then select Download ZIP. Then, unzip the contents into a folder on your local device.

Upload the feature store samples directory to the project workspace:

- In the Azure Machine Learning workspace, open the Azure Machine Learning studio UI

- Select Notebooks in the left panel

- Select your user name in the directory listing

- Select the ellipsis (...), and then select Upload folder

- Select the feature store samples folder from the cloned directory path:

azureml-examples/sdk/python/featurestore_sample

Run the tutorial

Option 1: Create a new notebook, and execute the instructions in this document, step by step.

Option 2: Open the existing notebook featurestore_sample/notebooks/sdk_and_cli/network_isolation/Network-isolation-feature-store.ipynb. You can keep this document open and refer to it for more explanation and documentation links.

- Select Serverless Spark Compute in the top navigation Compute dropdown. This operation might take one to two minutes. Wait for the status bar at the top to display Configure session.

- Select Configure session in the top status bar

- Select Python packages

- Select Upload conda file

- Select the file azureml-examples/sdk/python/featurestore_sample/project/env/conda.yml located on your local device

- (Optional) Increase the session time-out (idle time in minutes) to reduce the serverless Spark cluster startup time

This code cell starts the Spark session. It needs about 10 minutes to install all dependencies and start the Spark session.

# Run this cell to start the Spark session (any code block starts the session). This can take around 10 minutes.
print("start spark session")

Set up the root directory for the samples:

import os

# Please update your alias below (or any custom directory you have uploaded the samples to).
# You can find the name from the directory structure in the left navigation.
root_dir = "./Users/<your user alias>/featurestore_sample"

if os.path.isdir(root_dir):
    print("The folder exists.")
else:
    print("The folder does not exist. Please create or fix the path")

Set up the Azure Machine Learning CLI:

Install the Azure Machine Learning CLI extension

# Install the Azure Machine Learning CLI extension
!az extension add --name ml

Authenticate:

# Authenticate
!az login

Set the default subscription:

# Set the default subscription
import os

subscription_id = os.environ["AZUREML_ARM_SUBSCRIPTION"]
!az account set -s $subscription_id

Note

A feature store workspace supports feature reuse across projects. A project workspace (the current workspace in use) uses features from a specific feature store to train models and run inference. Many project workspaces can share and reuse the same feature store workspace.

Provision the necessary resources

You can create a new Azure Data Lake Storage (ADLS) Gen2 storage account and containers, or reuse existing storage account and container resources for the feature store. In a real-world situation, different storage accounts can host the ADLS Gen2 containers. Both options work, depending on your specific requirements.

For this tutorial, you create three separate storage containers in the same ADLS Gen2 storage account:

- Source data

- Offline store

- Observation data

Create an ADLS Gen2 storage account for source data, offline store, and observation data.

Provide the name of an Azure Data Lake Storage Gen2 storage account in the following code sample. You can execute the following code cell with the provided default settings. Optionally, you can override the default settings.
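Storage account and container creation fails if a name breaks Azure's naming rules. It can help to check `storage_account_name` and the three container names before you run the next cells. The following minimal sketch checks format only, not global uniqueness. It assumes the documented rules: account names are 3 to 24 lowercase letters or digits, and container (file system) names are 3 to 63 characters of lowercase letters, digits, and single hyphens that start and end with a letter or digit. The helper names are hypothetical.

```python
import re

# Assumed rule: storage account names are 3-24 characters, lowercase letters and digits only.
ACCOUNT_NAME_PATTERN = re.compile(r"[a-z0-9]{3,24}")

# Assumed rule: container (file system) names are 3-63 characters of lowercase letters,
# digits, and hyphens. The first character is a letter or digit, and every hyphen must be
# followed by a letter or digit, which rules out consecutive and trailing hyphens.
CONTAINER_NAME_PATTERN = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}")


def is_valid_storage_account_name(name: str) -> bool:
    """Return True if name matches the storage account naming format."""
    return ACCOUNT_NAME_PATTERN.fullmatch(name) is not None


def is_valid_container_name(name: str) -> bool:
    """Return True if name matches the container naming format."""
    return CONTAINER_NAME_PATTERN.fullmatch(name) is not None


# The placeholder value fails the check until you replace it.
for account in ["<STORAGE_ACCOUNT_NAME>", "fsnetisolationdata01"]:
    print(account, is_valid_storage_account_name(account))
for container in ["offline-store", "source-data", "observation-data"]:
    print(container, is_valid_container_name(container))
```

A name that passes this check can still be taken, because storage account names must be globally unique.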

## Default setting
# We use the subscription, resource group, and region of this active project workspace.
# We hard-coded default resource names for creating new resources.
## Overwrite
# You can replace them if you want to create the resources in a different subscription/resource group, or use existing resources.
# At a minimum, provide an ADLS Gen2 storage account name for `storage_account_name`.
storage_subscription_id = os.environ["AZUREML_ARM_SUBSCRIPTION"]
storage_resource_group_name = os.environ["AZUREML_ARM_RESOURCEGROUP"]
storage_account_name = "<STORAGE_ACCOUNT_NAME>"
storage_location = "eastus"
storage_file_system_name_offline_store = "offline-store"
storage_file_system_name_source_data = "source-data"
storage_file_system_name_observation_data = "observation-data"

This code cell creates the ADLS Gen2 storage account defined in the above code cell.

# Create a new storage account
!az storage account create --name $storage_account_name --enable-hierarchical-namespace true --resource-group $storage_resource_group_name --location $storage_location --subscription $storage_subscription_id

This code cell creates a new storage container for the offline store.

# Create a new storage container for the offline store
!az storage fs create --name $storage_file_system_name_offline_store --account-name $storage_account_name --subscription $storage_subscription_id

This code cell creates a new storage container for source data.

# Create a new storage container for source data
!az storage fs create --name $storage_file_system_name_source_data --account-name $storage_account_name --subscription $storage_subscription_id

This code cell creates a new storage container for observation data.

# Create a new storage container for observation data
!az storage fs create --name $storage_file_system_name_observation_data --account-name $storage_account_name --subscription $storage_subscription_id

Copy the sample data required for this tutorial series into the newly created storage containers.

To write data to the storage containers, ensure that Contributor and Storage Blob Data Contributor roles are assigned to the user identity on the created ADLS Gen2 storage account in the Azure portal following these steps.

Important

After you confirm that the Contributor and Storage Blob Data Contributor roles are assigned to the user identity, wait a few minutes for the permissions to propagate before you proceed with the next steps. To learn more about access control, visit role-based access control (RBAC) for Azure storage accounts.

The next code cells copy sample source data for transactions feature set used in this tutorial from a public storage account to the newly created storage account.

# Copy sample source data for the transactions feature set used in this tutorial series from the public storage account to the newly created storage account
transactions_source_data_path = "wasbs://data@azuremlexampledata.blob.core.windows.net/feature-store-prp/datasources/transactions-source/*.parquet"
transactions_src_df = spark.read.parquet(transactions_source_data_path)
transactions_src_df.write.parquet(
    f"abfss://{storage_file_system_name_source_data}@{storage_account_name}.dfs.core.windows.net/transactions-source/"
)

Copy the sample source data for the account feature set used in this tutorial to the newly created storage account.

# Copy sample source data for the account feature set used in this tutorial series from the public storage account to the newly created storage account
accounts_data_path = "wasbs://data@azuremlexampledata.blob.core.windows.net/feature-store-prp/datasources/accounts-precalculated/*.parquet"
accounts_data_df = spark.read.parquet(accounts_data_path)
accounts_data_df.write.parquet(
    f"abfss://{storage_file_system_name_source_data}@{storage_account_name}.dfs.core.windows.net/accounts-precalculated/"
)

Copy the sample observation data used for training from a public storage account to the newly created storage account.

# Copy sample observation data used for training from the public storage account to the newly created storage account
observation_data_train_path = "wasbs://data@azuremlexampledata.blob.core.windows.net/feature-store-prp/observation_data/train/*.parquet"
observation_data_train_df = spark.read.parquet(observation_data_train_path)
observation_data_train_df.write.parquet(
    f"abfss://{storage_file_system_name_observation_data}@{storage_account_name}.dfs.core.windows.net/train/"
)

Copy the sample observation data used for batch inference from a public storage account to the newly created storage account.

# Copy sample observation data used for batch inference from the public storage account to the newly created storage account
observation_data_inference_path = "wasbs://data@azuremlexampledata.blob.core.windows.net/feature-store-prp/observation_data/batch_inference/*.parquet"
observation_data_inference_df = spark.read.parquet(observation_data_inference_path)
observation_data_inference_df.write.parquet(
    f"abfss://{storage_file_system_name_observation_data}@{storage_account_name}.dfs.core.windows.net/batch_inference/"
)
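Each copy step above builds an `abfss://` URI of the form `abfss://<container>@<account>.dfs.core.windows.net/<path>`, and the later cells use the same pattern to read the data back. The following minimal sketch centralizes that pattern in one helper. `abfss_uri` is a hypothetical name and is not part of the tutorial or the azureml-featurestore SDK. The account name in the usage is a placeholder.

```python
def abfss_uri(container: str, account: str, path: str = "") -> str:
    """Build an ADLS Gen2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    # Strip a leading slash so callers can pass either "train/" or "/train/".
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


# Placeholder account name; in the notebook, use the value of storage_account_name.
account = "fsnetisolationdata01"
print(abfss_uri("source-data", account, "transactions-source/"))
print(abfss_uri("observation-data", account, "/batch_inference/"))
```

In the notebook, the first write could then read `transactions_src_df.write.parquet(abfss_uri(storage_file_system_name_source_data, storage_account_name, "transactions-source/"))`.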

Disable the public network access on the newly created storage account.

This code cell disables public network access for the ADLS Gen2 storage account created earlier.

# Disable the public network access for the above created ADLS Gen2 storage account
!az storage account update --name $storage_account_name --resource-group $storage_resource_group_name --subscription $storage_subscription_id --public-network-access disabled

Set ARM IDs for the offline store, source data, and observation data containers.

# Set the container ARM IDs
offline_store_gen2_container_arm_id = "/subscriptions/{sub_id}/resourceGroups/{rg}/providers/Microsoft.Storage/storageAccounts/{account}/blobServices/default/containers/{container}".format(
    sub_id=storage_subscription_id,
    rg=storage_resource_group_name,
    account=storage_account_name,
    container=storage_file_system_name_offline_store,
)
print(offline_store_gen2_container_arm_id)

source_data_gen2_container_arm_id = "/subscriptions/{sub_id}/resourceGroups/{rg}/providers/Microsoft.Storage/storageAccounts/{account}/blobServices/default/containers/{container}".format(
    sub_id=storage_subscription_id,
    rg=storage_resource_group_name,
    account=storage_account_name,
    container=storage_file_system_name_source_data,
)
print(source_data_gen2_container_arm_id)

observation_data_gen2_container_arm_id = "/subscriptions/{sub_id}/resourceGroups/{rg}/providers/Microsoft.Storage/storageAccounts/{account}/blobServices/default/containers/{container}".format(
    sub_id=storage_subscription_id,
    rg=storage_resource_group_name,
    account=storage_account_name,
    container=storage_file_system_name_observation_data,
)
print(observation_data_gen2_container_arm_id)
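The three ARM IDs above differ only in the container name. The following minimal sketch builds them from one hypothetical helper, `container_arm_id`, using the same `/blobServices/default/containers/` layout as the cell. The subscription, resource group, and account values are placeholders.

```python
def container_arm_id(subscription_id: str, resource_group: str, account: str, container: str) -> str:
    """Return the ARM ID of a blob container (ADLS Gen2 file system) in a storage account."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
        f"/blobServices/default/containers/{container}"
    )


# Placeholder values; in the notebook, use storage_subscription_id,
# storage_resource_group_name, storage_account_name, and the container names.
sub_id = "00000000-0000-0000-0000-000000000000"
rg = "my-resource-group"
account = "fsnetisolationdata01"

container_ids = {
    name: container_arm_id(sub_id, rg, account, name)
    for name in ["offline-store", "source-data", "observation-data"]
}
for name, arm_id in container_ids.items():
    print(name, arm_id)
```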

Provision the user-assigned managed identity (UAI)

Create a new user-assigned managed identity.

In the following code cell, provide a name for the user-assigned managed identity that you would like to create.

# User-assigned managed identity values. Optionally, you can change the values.
uai_subscription_id = os.environ["AZUREML_ARM_SUBSCRIPTION"]
uai_resource_group_name = os.environ["AZUREML_ARM_RESOURCEGROUP"]
uai_name = "<UAI_NAME>"
# The storage location (also used for the feature store) is the default. You can change it.
uai_location = storage_location

This code cell creates the UAI.

!az identity create --subscription $uai_subscription_id --resource-group $uai_resource_group_name --location $uai_location --name $uai_name

This code cell retrieves the principal ID, client ID, and ARM ID property values for the created UAI.

from azure.mgmt.msi import ManagedServiceIdentityClient
from azure.mgmt.msi.models import Identity
from azure.ai.ml.identity import AzureMLOnBehalfOfCredential

msi_client = ManagedServiceIdentityClient(
    AzureMLOnBehalfOfCredential(), uai_subscription_id
)
managed_identity = msi_client.user_assigned_identities.get(
    resource_name=uai_name, resource_group_name=uai_resource_group_name
)

uai_principal_id = managed_identity.principal_id
uai_client_id = managed_identity.client_id
uai_arm_id = managed_identity.id

Grant RBAC permission to the user-assigned managed identity (UAI)

The UAI is assigned to the feature store, and requires the following permissions:

- Feature store: Azure Machine Learning Data Scientist role

- Storage account of the feature store offline store: Storage Blob Data Contributor role

- Storage accounts of source data: Storage Blob Data Contributor role

The next CLI commands assign the Storage Blob Data Contributor role to the UAI on the offline store, source data, and observation data containers. This tutorial copies the sample data into your own storage account, so the source data container is included. To use your own data sources, you must assign the required roles to the UAI. To learn more about access control, see role-based access control for Azure storage accounts and Azure Machine Learning workspace.

!az role assignment create --role "Storage Blob Data Contributor" --assignee-object-id $uai_principal_id --assignee-principal-type ServicePrincipal --scope $offline_store_gen2_container_arm_id
!az role assignment create --role "Storage Blob Data Contributor" --assignee-object-id $uai_principal_id --assignee-principal-type ServicePrincipal --scope $source_data_gen2_container_arm_id
!az role assignment create --role "Storage Blob Data Contributor" --assignee-object-id $uai_principal_id --assignee-principal-type ServicePrincipal --scope $observation_data_gen2_container_arm_id
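The three commands above differ only in `--scope`. The following minimal sketch builds the same `az role assignment create` invocations as argument lists. This avoids quoting mistakes around the space-containing role name if you script these assignments outside the notebook. `role_assignment_args` is a hypothetical helper, and the IDs are placeholders. The sketch only prints the commands. In an environment where the Azure CLI is installed and logged in, you could pass each list to `subprocess.run(args, check=True)`.

```python
import shlex
from typing import List


def role_assignment_args(role: str, assignee_object_id: str, principal_type: str, scope: str) -> List[str]:
    """Build the argument list for one `az role assignment create` call."""
    return [
        "az", "role", "assignment", "create",
        "--role", role,
        "--assignee-object-id", assignee_object_id,
        "--assignee-principal-type", principal_type,
        "--scope", scope,
    ]


# Placeholder values; in the notebook these are uai_principal_id and the
# three container ARM IDs computed earlier.
principal_id = "11111111-1111-1111-1111-111111111111"
base = (
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/my-resource-group"
    "/providers/Microsoft.Storage/storageAccounts/fsnetisolationdata01/blobServices/default/containers/"
)
scopes = [base + c for c in ("offline-store", "source-data", "observation-data")]

commands = [
    role_assignment_args("Storage Blob Data Contributor", principal_id, "ServicePrincipal", scope)
    for scope in scopes
]
for args in commands:
    # shlex.join quotes the role name because it contains spaces.
    print(shlex.join(args))
```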

Create a feature store with materialization enabled

Set the feature store parameters

Set the feature store name, location, subscription ID, resource group name, and ARM ID values, as shown in this code cell sample:

# We use the subscription, resource group, region of this active project workspace.

# Optionally, you can replace them to create the resources in a different subscription/resource group, or use existing resources

import os

# At the minimum, define a name for the feature store

featurestore_name = "<YOUR_FEATURE_STORE_NAME>"

# It is recommended to create featurestore in the same location as the storage

featurestore_location = storage_location

featurestore_subscription_id = os.environ["AZUREML_ARM_SUBSCRIPTION"]

featurestore_resource_group_name = os.environ["AZUREML_ARM_RESOURCEGROUP"]

feature_store_arm_id = "/subscriptions/{sub_id}/resourceGroups/{rg}/providers/Microsoft.MachineLearningServices/workspaces/{ws_name}".format(

sub_id=featurestore_subscription_id,

rg=featurestore_resource_group_name,

ws_name=featurestore_name,

)

This code cell generates a YAML specification file for a feature store, with materialization enabled.

# The below code creates a feature store with enabled materialization

import yaml

config = {

"$schema": "http://azureml/sdk-2-0/FeatureStore.json",

"name": featurestore_name,

"location": featurestore_location,

"compute_runtime": {"spark_runtime_version": "3.2"},

"offline_store": {

"type": "azure_data_lake_gen2",

"target": offline_store_gen2_container_arm_id,

},

"materialization_identity": {"client_id": uai_client_id, "resource_id": uai_arm_id},

}

feature_store_yaml = root_dir + "/featurestore/featurestore_with_offline_setting.yaml"

with open(feature_store_yaml, "w") as outfile:
    yaml.dump(config, outfile, default_flow_style=False)

Create the feature store

This code cell uses the YAML specification file generated in the previous step to create a feature store with materialization enabled.

!az ml feature-store create --file $feature_store_yaml --subscription $featurestore_subscription_id --resource-group $featurestore_resource_group_name

Initialize the Azure Machine Learning feature store core SDK client

The SDK client initialized in this cell facilitates development and consumption of features:

# feature store client

from azureml.featurestore import FeatureStoreClient

from azure.ai.ml.identity import AzureMLOnBehalfOfCredential

featurestore = FeatureStoreClient(

credential=AzureMLOnBehalfOfCredential(),

subscription_id=featurestore_subscription_id,

resource_group_name=featurestore_resource_group_name,

name=featurestore_name,

)

Assign roles to user identity on the feature store

This code cell assigns the AzureML Data Scientist role to the UAI on the created feature store. To learn more about access control, see role-based access control for Azure storage accounts and Azure Machine Learning workspace.

!az role assignment create --role "AzureML Data Scientist" --assignee-object-id $uai_principal_id --assignee-principal-type ServicePrincipal --scope $feature_store_arm_id

Follow these instructions to get the Microsoft Entra Object ID for your user identity. Then, use your Microsoft Entra Object ID in the next command to assign the AzureML Data Scientist role to your user identity on the created feature store.

your_aad_objectid = "<YOUR_AAD_OBJECT_ID>"

!az role assignment create --role "AzureML Data Scientist" --assignee-object-id $your_aad_objectid --assignee-principal-type User --scope $feature_store_arm_id

Obtain the default storage account and key vault for the feature store, and disable public network access to the corresponding resources

The next code cell returns the feature store object for the following steps.

fs = featurestore.feature_stores.get()

This code cell returns the names of the default storage account and key vault for the feature store.

# Extract the resource names (the last path segment) from the storage_account and key_vault ARM IDs of the feature store

default_fs_storage_account_name = fs.storage_account.rsplit("/", 1)[-1]

default_key_vault_name = fs.key_vault.rsplit("/", 1)[-1]
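The cell above keeps only the last segment of each ARM ID. That is enough when `fs.storage_account` and `fs.key_vault` are IDs of top-level resources, as the code assumes. The disable-public-access command that follows also assumes that the default storage account lives in the feature store's resource group. If you'd rather read the subscription and resource group from the ID itself, a minimal parser like the following can help. `parse_arm_id` is a hypothetical helper. It assumes the standard `/subscriptions/<id>/resourceGroups/<rg>/providers/<namespace>/<type>/<name>` layout, and for nested IDs (such as a container) it returns the top-level resource.

```python
def parse_arm_id(arm_id: str) -> dict:
    """Split an ARM resource ID into subscription, resource group, namespace, type, and name."""
    parts = [p for p in arm_id.split("/") if p]
    # Segment keys such as "resourceGroups" are matched case-insensitively.
    lowered = [p.lower() for p in parts]
    providers = lowered.index("providers")
    return {
        "subscription_id": parts[lowered.index("subscriptions") + 1],
        "resource_group": parts[lowered.index("resourcegroups") + 1],
        "namespace": parts[providers + 1],
        "resource_type": parts[providers + 2],
        "name": parts[providers + 3],
    }


# Placeholder IDs shaped like fs.storage_account and fs.key_vault.
storage_id = "/subscriptions/0000/resourceGroups/my-rg/providers/Microsoft.Storage/storageAccounts/fsdefaultstore"
vault_id = "/subscriptions/0000/resourcegroups/my-rg/providers/Microsoft.KeyVault/vaults/fsdefaultkv"
print(parse_arm_id(storage_id))
print(parse_arm_id(vault_id))
```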

This code cell disables public network access to the default storage account for the feature store.

# Disable the public network access for the above created default ADLS Gen2 storage account for the feature store

!az storage account update --name $default_fs_storage_account_name --resource-group $featurestore_resource_group_name --subscription $featurestore_subscription_id --public-network-access disabled

The next cell prints the name of the default key vault for the feature store.

print(default_key_vault_name)

Disable the public network access for the default feature store key vault created earlier

- In the Azure portal, open the default key vault whose name the previous cell printed.

- Select the Networking tab.

- Select Disable public access, and then select Apply on the bottom left of the page.

Enable the managed virtual network for the feature store workspace

Update the feature store with the necessary outbound rules

The next code cell creates a YAML specification file for the outbound rules defined for the feature store.

# The below code creates a configuration for managed virtual network for the feature store

import yaml

config = {

"public_network_access": "disabled",

"managed_network": {

"isolation_mode": "allow_internet_outbound",

"outbound_rules": [

# You need to add multiple rules here if you have separate storage account for source, observation data and offline store.

{

"name": "sourcerulefs",

"destination": {

"spark_enabled": "true",

"subresource_target": "dfs",

"service_resource_id": f"/subscriptions/{storage_subscription_id}/resourcegroups/{storage_resource_group_name}/providers/Microsoft.Storage/storageAccounts/{storage_account_name}",

},

"type": "private_endpoint",

},

# This rule is currently required because serverless Spark doesn't automatically create a private endpoint to the default key vault.

{

"name": "defaultkeyvault",

"destination": {

"spark_enabled": "true",

"subresource_target": "vault",

"service_resource_id": f"/subscriptions/{featurestore_subscription_id}/resourcegroups/{featurestore_resource_group_name}/providers/Microsoft.Keyvault/vaults/{default_key_vault_name}",

},

"type": "private_endpoint",

},

],

},

}

feature_store_managed_vnet_yaml = (

root_dir + "/featurestore/feature_store_managed_vnet_config.yaml"

)

with open(feature_store_managed_vnet_yaml, "w") as outfile:
    yaml.dump(config, outfile, default_flow_style=False)
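Both outbound rules in the configuration above share one shape. Each has a name, the type `private_endpoint`, and a destination that holds the target resource ID, the subresource (`dfs` for ADLS Gen2, `vault` for Key Vault, and so on), and `spark_enabled`. The following minimal sketch uses a hypothetical helper that produces that same dictionary shape. It can reduce copy-paste errors when you add one rule per storage account. The resource IDs are placeholders, and the sketch only builds and prints the configuration.

```python
import json


def private_endpoint_rule(name: str, service_resource_id: str, subresource_target: str, spark_enabled: bool = True) -> dict:
    """Return one private-endpoint outbound rule in the dictionary shape used by this tutorial."""
    return {
        "name": name,
        "type": "private_endpoint",
        "destination": {
            "service_resource_id": service_resource_id,
            "subresource_target": subresource_target,
            # The tutorial's configuration uses the string "true", so keep that form.
            "spark_enabled": "true" if spark_enabled else "false",
        },
    }


sub, rg = "0000", "my-rg"  # placeholders
rules = [
    private_endpoint_rule(
        "sourcerulefs",
        f"/subscriptions/{sub}/resourcegroups/{rg}/providers/Microsoft.Storage/storageAccounts/fsnetisolationdata01",
        "dfs",
    ),
    private_endpoint_rule(
        "defaultkeyvault",
        f"/subscriptions/{sub}/resourcegroups/{rg}/providers/Microsoft.Keyvault/vaults/fsdefaultkv",
        "vault",
    ),
]

# Guard against accidentally reusing a rule name.
names = [r["name"] for r in rules]
assert len(names) == len(set(names)), "duplicate outbound rule name"

config = {
    "public_network_access": "disabled",
    "managed_network": {"isolation_mode": "allow_internet_outbound", "outbound_rules": rules},
}
print(json.dumps(config, indent=2))
```

In the notebook, you could pass this `config` to `yaml.dump` exactly as the original cell does.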

This code cell uses the generated YAML specification file to update the feature store.

# This command will change to `az ml featurestore update` in future for parity.

!az ml workspace update --file $feature_store_managed_vnet_yaml --name $featurestore_name --resource-group $featurestore_resource_group_name

Create private endpoints for the defined outbound rules

The provision-network command creates private endpoints from the managed virtual network, where the materialization job runs, to the source data, offline store, observation data, default storage account, and default key vault of the feature store. This command might need about 20 minutes to complete.

#### Provision network to create necessary private endpoints (it may take approximately 20 minutes)

!az ml workspace provision-network --name $featurestore_name --resource-group $featurestore_resource_group_name --include-spark

This code cell confirms the creation of private endpoints defined by the outbound rules.

### Check that managed virtual network is correctly enabled

### After provisioning the network, all the outbound rules should become active

### For this tutorial, you will see 5 outbound rules

!az ml workspace show --name $featurestore_name --resource-group $featurestore_resource_group_name

Update the managed virtual network for the project workspace

Next, update the managed virtual network for the project workspace. First, obtain the subscription ID, resource group, and workspace name for the project workspace.

# lookup the subscription id, resource group and workspace name of the current workspace

project_ws_sub_id = os.environ["AZUREML_ARM_SUBSCRIPTION"]

project_ws_rg = os.environ["AZUREML_ARM_RESOURCEGROUP"]

project_ws_name = os.environ["AZUREML_ARM_WORKSPACE_NAME"]

Update the project workspace with the necessary outbound rules

The project workspace needs access to these resources:

- Source data

- Offline store

- Observation data

- Feature store

- Default storage account of feature store

This code cell generates a YAML specification file with the required outbound rules for the project workspace.

# The below code creates a configuration for managed virtual network for the project workspace

import yaml

config = {

"managed_network": {

"isolation_mode": "allow_internet_outbound",

"outbound_rules": [

# In case you have separate storage accounts for source, observation data, and offline store, you need to add multiple rules here. No action is needed otherwise.

{

"name": "projectsourcerule",

"destination": {

"spark_enabled": "true",

"subresource_target": "dfs",

"service_resource_id": f"/subscriptions/{storage_subscription_id}/resourcegroups/{storage_resource_group_name}/providers/Microsoft.Storage/storageAccounts/{storage_account_name}",

},

"type": "private_endpoint",

},

# Rule to create private endpoint to default storage of feature store

{

"name": "defaultfsstoragerule",

"destination": {

"spark_enabled": "true",

"subresource_target": "blob",

"service_resource_id": f"/subscriptions/{featurestore_subscription_id}/resourcegroups/{featurestore_resource_group_name}/providers/Microsoft.Storage/storageAccounts/{default_fs_storage_account_name}",

},

"type": "private_endpoint",

},

# Rule to create private endpoint to default key vault of feature store

{

"name": "defaultfskeyvaultrule",

"destination": {

"spark_enabled": "true",

"subresource_target": "vault",

"service_resource_id": f"/subscriptions/{featurestore_subscription_id}/resourcegroups/{featurestore_resource_group_name}/providers/Microsoft.Keyvault/vaults/{default_key_vault_name}",

},

"type": "private_endpoint",

},

# Rule to create private endpoint to feature store

{

"name": "featurestorerule",

"destination": {

"spark_enabled": "true",

"subresource_target": "amlworkspace",

"service_resource_id": f"/subscriptions/{featurestore_subscription_id}/resourcegroups/{featurestore_resource_group_name}/providers/Microsoft.MachineLearningServices/workspaces/{featurestore_name}",

},

"type": "private_endpoint",

},

],

}

}

project_ws_managed_vnet_yaml = (

root_dir + "/featurestore/project_ws_managed_vnet_config.yaml"

)

with open(project_ws_managed_vnet_yaml, "w") as outfile:
    yaml.dump(config, outfile, default_flow_style=False)
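The project workspace needs private endpoints to the source data, offline store, observation data, feature store, and default storage account of the feature store, and the configuration above also adds the feature store's default key vault. The following minimal sketch checks a list of outbound rules against those requirements before you run the update. `missing_private_endpoints` and the labels are hypothetical. The sketch compares resource IDs case-insensitively, because ARM resource IDs aren't case-sensitive; the tutorial itself mixes `resourceGroups` and `resourcegroups`. All three data containers live in one storage account in this tutorial, so a single `dfs` rule covers them.

```python
from typing import Dict, List, Tuple


def missing_private_endpoints(outbound_rules: List[dict], required: Dict[str, Tuple[str, str]]) -> List[str]:
    """Return the labels of required (resource_id, subresource) pairs that no private-endpoint rule covers."""
    covered = {
        (rule["destination"]["service_resource_id"].lower(), rule["destination"]["subresource_target"])
        for rule in outbound_rules
        if rule.get("type") == "private_endpoint"
    }
    return [
        label
        for label, (resource_id, subresource) in required.items()
        if (resource_id.lower(), subresource) not in covered
    ]


# Placeholder resource IDs.
prefix = "/subscriptions/0000/resourceGroups/my-rg/providers"
data_account = f"{prefix}/Microsoft.Storage/storageAccounts/fsnetisolationdata01"
default_storage = f"{prefix}/Microsoft.Storage/storageAccounts/fsdefaultstore"
required = {
    "source, offline store, and observation data": (data_account, "dfs"),
    "feature store default storage": (default_storage, "blob"),
    "feature store default key vault": (f"{prefix}/Microsoft.KeyVault/vaults/fsdefaultkv", "vault"),
    "feature store": (f"{prefix}/Microsoft.MachineLearningServices/workspaces/my-feature-store", "amlworkspace"),
}

# Example rule list that forgets the feature store endpoint; some IDs use different casing on purpose.
rules = [
    {"name": "projectsourcerule", "type": "private_endpoint",
     "destination": {"service_resource_id": data_account.replace("resourceGroups", "resourcegroups"), "subresource_target": "dfs"}},
    {"name": "defaultfsstoragerule", "type": "private_endpoint",
     "destination": {"service_resource_id": default_storage, "subresource_target": "blob"}},
    {"name": "defaultfskeyvaultrule", "type": "private_endpoint",
     "destination": {"service_resource_id": f"{prefix}/Microsoft.Keyvault/vaults/fsdefaultkv", "subresource_target": "vault"}},
]

print(missing_private_endpoints(rules, required))
```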

This code cell updates the project workspace using the generated YAML specification file with the outbound rules.

#### Update project workspace to create private endpoints for the defined outbound rules (it may take approximately 15 minutes)

!az ml workspace update --file $project_ws_managed_vnet_yaml --name $project_ws_name --resource-group $project_ws_rg

This code cell confirms the creation of private endpoints defined by the outbound rules.

!az ml workspace show --name $project_ws_name --resource-group $project_ws_rg

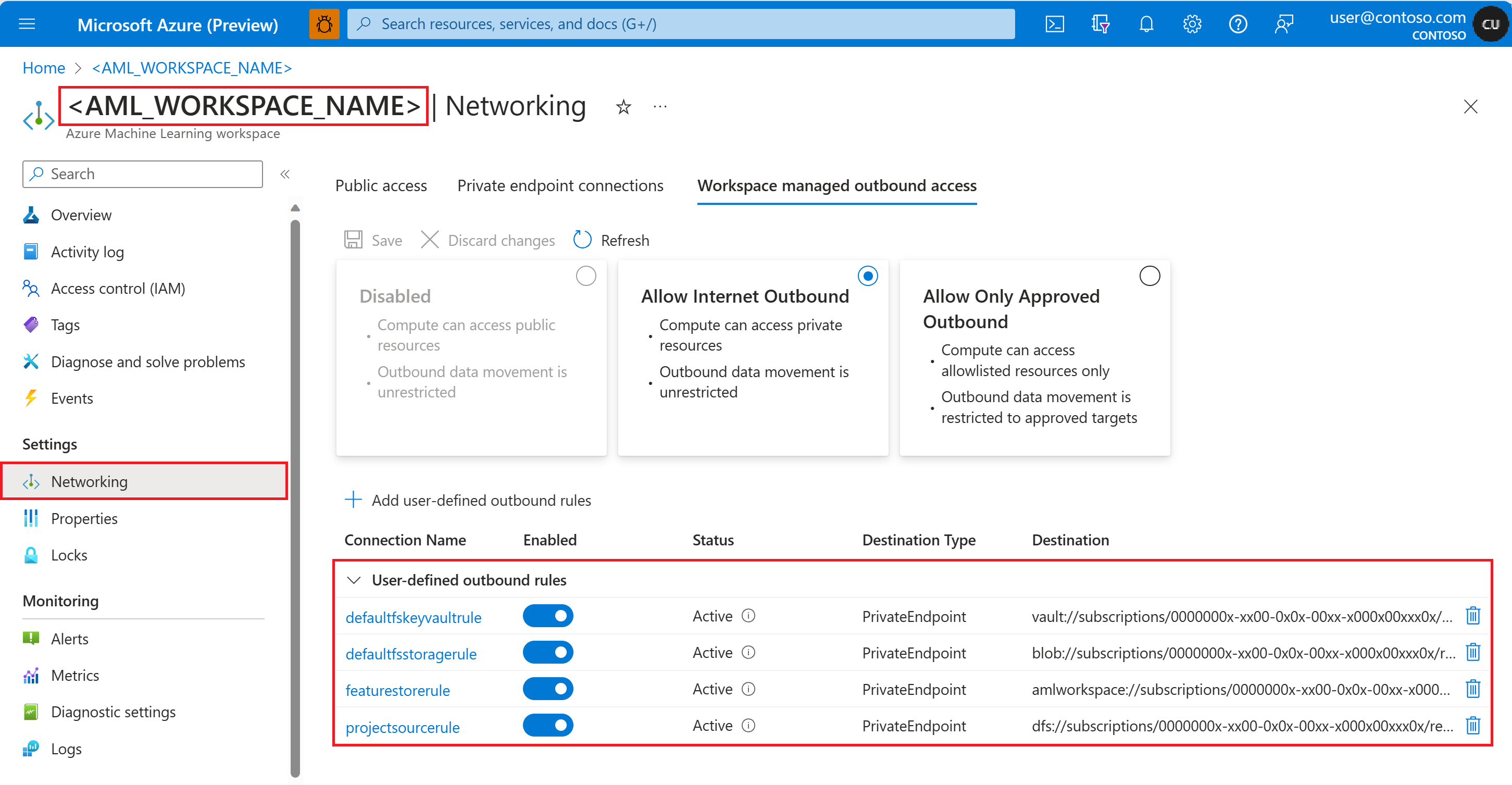

You can also verify the outbound rules from the Azure portal. In the project workspace, select Networking in the left panel, and then open the Workspace managed outbound access tab.

Prototype and develop a transaction rolling aggregation feature set

Explore the transactions source data

Note

A publicly accessible blob container hosts the sample data used in this tutorial. It can only be read in Spark through the wasbs driver. When you create feature sets using your own source data, host them in an ADLS Gen2 account, and use an abfss driver in the data path.

# Read the transactions source data from the ADLS Gen2 container created earlier, using an absolute abfss path that Spark can read

transactions_source_data_path = f"abfss://{storage_file_system_name_source_data}@{storage_account_name}.dfs.core.windows.net/transactions-source/*.parquet"

transactions_src_df = spark.read.parquet(transactions_source_data_path)

display(transactions_src_df.head(5))

# Note: display(transactions_src_df.head(5)) displays the timestamp column in a different format. You can call transactions_src_df.show() to see the correctly formatted value.

Locally develop a transactions feature set

A feature set specification is a self-contained feature set definition that can be developed and tested locally.

Create the following rolling window aggregate features:

- transactions three-day count

- transactions amount three-day sum

- transactions amount three-day avg

- transactions seven-day count

- transactions amount seven-day sum

- transactions amount seven-day avg

Inspect the feature transformation code file featurestore/featuresets/transactions/transformation_code/transaction_transform.py. This Spark transformer performs the rolling aggregation defined for the features.

For more information about feature sets and transformations, visit feature store concepts.
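To make the six features concrete, here is a plain-Python sketch of the same idea. For each transaction, it counts, sums, and averages the amounts of that account's transactions in a trailing 3-day and 7-day window. This is not the tutorial's transformer, which is Spark code in transaction_transform.py. The window boundaries (start exclusive, current transaction inclusive) and the output column names are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Tuple


def rolling_aggregates(
    transactions: Iterable[Tuple[str, datetime, float]],
    window_days: Tuple[int, ...] = (3, 7),
) -> List[Dict]:
    """For each (account_id, timestamp, amount), aggregate that account's amounts
    in the window (timestamp - N days, timestamp] for each N in window_days."""
    by_account = defaultdict(list)
    for account_id, ts, amount in transactions:
        by_account[account_id].append((ts, amount))

    rows = []
    for account_id, events in by_account.items():
        events.sort()
        for ts, _ in events:
            row = {"accountID": account_id, "timestamp": ts}
            for n in window_days:
                start = ts - timedelta(days=n)
                amounts = [a for t, a in events if start < t <= ts]
                # The current transaction is always in its own window, so len(amounts) >= 1.
                row[f"transaction_{n}day_count"] = len(amounts)
                row[f"transaction_amount_{n}day_sum"] = sum(amounts)
                row[f"transaction_amount_{n}day_avg"] = sum(amounts) / len(amounts)
            rows.append(row)
    return rows


sample = [
    ("A1", datetime(2023, 1, 1), 10.0),
    ("A1", datetime(2023, 1, 2), 20.0),
    ("A1", datetime(2023, 1, 5), 30.0),
    ("A1", datetime(2023, 1, 9), 40.0),
    ("B2", datetime(2023, 1, 3), 5.0),
]
for row in rolling_aggregates(sample):
    print(row)
```

The Spark transformer computes the same kind of windowed aggregates at scale. This version rescans each account's events per row, which is fine for a handful of rows but not for real data.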

from azureml.featurestore import create_feature_set_spec, FeatureSetSpec

from azureml.featurestore.contracts import (

DateTimeOffset,

FeatureSource,

TransformationCode,

Column,

ColumnType,

SourceType,

TimestampColumn,

)

transactions_featureset_code_path = (

root_dir + "/featurestore/featuresets/transactions/transformation_code"

)

transactions_featureset_spec = create_feature_set_spec(

source=FeatureSource(

type=SourceType.parquet,

path=f"abfss://{storage_file_system_name_source_data}@{storage_account_name}.dfs.core.windows.net/transactions-source/*.parquet",

timestamp_column=TimestampColumn(name="timestamp"),

source_delay=DateTimeOffset(days=0, hours=0, minutes=20),

),

transformation_code=TransformationCode(

path=transactions_featureset_code_path,

transformer_class="transaction_transform.TransactionFeatureTransformer",

),

index_columns=[Column(name="accountID", type=ColumnType.string)],

source_lookback=DateTimeOffset(days=7, hours=0, minutes=0),

temporal_join_lookback=DateTimeOffset(days=1, hours=0, minutes=0),

infer_schema=True,

)

# Generate a spark dataframe from the feature set specification

transactions_fset_df = transactions_featureset_spec.to_spark_dataframe()

# display few records

display(transactions_fset_df.head(5))
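Two settings in the specification above control how far back in time data is used. In this tutorial's usage, `source_lookback` (7 days) is how much source history the transformation reads, which the 7-day rolling window needs. `temporal_join_lookback` (1 day) bounds how old a feature value can be when it is joined to an observation. The following plain-Python sketch illustrates that point-in-time lookup. For each observation, it takes the latest feature row for the same account whose timestamp is at or before the observation time and no older than the lookback. This is an illustration under assumed inclusive boundaries, not the SDK's implementation. `point_in_time_lookup` is a hypothetical name.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple


def point_in_time_lookup(
    observations: List[Tuple[str, datetime]],
    feature_rows: List[Tuple[str, datetime, Dict]],
    temporal_join_lookback: timedelta,
) -> List[Tuple[str, datetime, Optional[Dict]]]:
    """Attach to each (account_id, obs_time) the latest feature values with
    obs_time - lookback <= feature_time <= obs_time, or None if there are none."""
    results = []
    for account_id, obs_time in observations:
        # Only rows at or before the observation time (never future values) and within the lookback qualify.
        candidates = [
            (feature_time, values)
            for acc, feature_time, values in feature_rows
            if acc == account_id and obs_time - temporal_join_lookback <= feature_time <= obs_time
        ]
        latest = max(candidates, key=lambda c: c[0])[1] if candidates else None
        results.append((account_id, obs_time, latest))
    return results


features = [
    ("A1", datetime(2023, 1, 1), {"transaction_7day_count": 1}),
    ("A1", datetime(2023, 1, 3), {"transaction_7day_count": 2}),
]
observations = [
    ("A1", datetime(2023, 1, 3, 12)),  # the Jan 3 row is within 1 day
    ("A1", datetime(2023, 1, 2, 12)),  # the Jan 1 row is 36 hours old, outside the lookback
    ("B2", datetime(2023, 1, 3)),      # no features for this account
]
for row in point_in_time_lookup(observations, features, timedelta(days=1)):
    print(row)
```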

Export a feature set specification

To register a feature set specification with the feature store, that specification must be saved in a specific format.

To inspect the generated transactions feature set specification, run the next code cell, and then open this file from the file tree:

featurestore/featuresets/transactions/spec/FeaturesetSpec.yaml

The specification contains these elements:

- source: a reference to a storage resource; in this case, parquet files in an ADLS Gen2 storage container.

- features: a list of features and their datatypes. If you provide transformation code, the code must return a DataFrame that maps to the features and datatypes.

- index_columns: the join keys required to access values from the feature set.

Another benefit of persisting a feature set specification as a YAML file is that the specification can be version controlled. Learn more about feature set specifications in the top-level feature store entities document and the feature set specification YAML reference.

import os

# Create a new folder to dump the feature set spec
transactions_featureset_spec_folder = (
    root_dir + "/featurestore/featuresets/transactions/spec"
)

# Check whether the folder exists, and create it if it doesn't
if not os.path.exists(transactions_featureset_spec_folder):
    os.makedirs(transactions_featureset_spec_folder)

transactions_featureset_spec.dump(transactions_featureset_spec_folder)

Register a feature-store entity

Entities help enforce the use of the same join key definitions across feature sets that share the same logical entities. Examples include account entities and customer entities. Entities are typically created once and then reused across feature sets. For more information, visit the top-level feature store entities document.
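To make the idea of shared join keys concrete, here is a minimal sketch. It assumes an entity is a name, a version, and a list of (column, type) index columns, and that a feature set keyed on that entity must declare exactly those columns. The `Entity`, `FeatureSetDef`, and `check_join_keys` names are hypothetical stand-ins, not the feature store SDK, and they don't describe how the service enforces this.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# (column name, column type) pairs, for example ("accountID", "string")
IndexColumns = Tuple[Tuple[str, str], ...]


@dataclass(frozen=True)
class Entity:
    """Hypothetical stand-in for a feature store entity: a name, a version, and join keys."""
    name: str
    version: str
    index_columns: IndexColumns


@dataclass(frozen=True)
class FeatureSetDef:
    """Hypothetical stand-in for a feature set that declares the entity it's keyed on."""
    name: str
    entity: Entity
    index_columns: IndexColumns


def check_join_keys(feature_set: FeatureSetDef) -> None:
    """Raise ValueError if a feature set's index columns differ from its entity's."""
    if feature_set.index_columns != feature_set.entity.index_columns:
        raise ValueError(
            f"{feature_set.name}: index columns {feature_set.index_columns} "
            f"don't match entity {feature_set.entity.name}:{feature_set.entity.version} "
            f"{feature_set.entity.index_columns}"
        )


account = Entity("account", "1", (("accountID", "string"),))
transactions_def = FeatureSetDef("transactions", account, (("accountID", "string"),))
check_join_keys(transactions_def)  # same join key as the entity, so no error

# A feature set that renamed the key would be rejected
mismatched = FeatureSetDef("account_profile", account, (("account_id", "string"),))
mismatch_error: Optional[str] = None
try:
    check_join_keys(mismatched)
except ValueError as err:
    mismatch_error = str(err)
print(mismatch_error)
```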

This code cell creates an account entity for the feature store.

account_entity_path = root_dir + "/featurestore/entities/account.yaml"

!az ml feature-store-entity create --file $account_entity_path --resource-group $featurestore_resource_group_name --workspace-name $featurestore_name

Register the transaction feature set with the feature store, and submit a materialization job

To share and reuse a feature set asset, you must first register that asset with the feature store. Feature set asset registration offers managed capabilities including versioning and materialization. This tutorial series covers these topics.

The feature set asset references both the feature set spec that you created earlier, and other properties - for example, version and materialization settings.

Create a feature set

The next code cell uses a predefined YAML specification file to create a feature set.

transactions_featureset_path = (
    root_dir
    + "/featurestore/featuresets/transactions/featureset_asset_offline_enabled.yaml"
)

!az ml feature-set create --file $transactions_featureset_path --resource-group $featurestore_resource_group_name --workspace-name $featurestore_name

This code cell previews the newly created feature set.

# Preview the newly created feature set

!az ml feature-set show --resource-group $featurestore_resource_group_name --workspace-name $featurestore_name -n transactions -v 1

Submit a backfill materialization job

The next code cell defines start and end time values for the feature materialization window, and submits a backfill materialization job.

The next code cell checks the status of the backfill materialization job. Replace <JOB_ID_FROM_PREVIOUS_COMMAND> with the job ID returned by the previous command.

This code cell lists all the materialization jobs for the current feature set.

Attach Azure Cache for Redis as an online store

Create an Azure Cache for Redis

In the next code cell, define the name of the Azure Cache for Redis that you want to create or reuse. Optionally, you can override other default settings.

redis_subscription_id = os.environ["AZUREML_ARM_SUBSCRIPTION"]

redis_resource_group_name = os.environ["AZUREML_ARM_RESOURCEGROUP"]

redis_name = "my-redis"

redis_location = storage_location

You can select the Redis cache tier (Basic, Standard, or Premium). Choose a SKU family that is available for the selected tier: the Basic and Standard tiers use SKU family C, and the Premium tier uses SKU family P. Visit this documentation resource for more information about how the choice of tier can affect cache performance. Visit this documentation resource for more information about pricing for the different SKU tiers and families of Azure Cache for Redis.
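Before you call `begin_create`, a small client-side check can catch a tier and family mismatch early. This sketch assumes the standard Azure Cache for Redis sizes: C0 to C6 for Basic and Standard, and P1 to P5 for Premium. Enterprise tiers are out of scope. `VALID_SKUS` and `validate_redis_sku` are hypothetical helpers. The service performs its own validation, and the notebook itself passes `SkuName.PREMIUM` and `SkuFamily.P`.

```python
# Assumed valid (family, capacity) combinations per tier for Azure Cache for Redis
VALID_SKUS = {
    "Basic": ("C", range(0, 7)),     # C0-C6
    "Standard": ("C", range(0, 7)),  # C0-C6
    "Premium": ("P", range(1, 6)),   # P1-P5
}


def validate_redis_sku(tier: str, family: str, capacity: int) -> str:
    """Return the SKU label (for example 'P2'), or raise ValueError for an invalid combination."""
    if tier not in VALID_SKUS:
        raise ValueError(f"unknown tier {tier!r}; expected one of {sorted(VALID_SKUS)}")
    expected_family, capacities = VALID_SKUS[tier]
    if family != expected_family:
        raise ValueError(f"tier {tier} requires SKU family {expected_family}, got {family}")
    if capacity not in capacities:
        raise ValueError(f"capacity {capacity} isn't valid for {tier} (allowed: {list(capacities)})")
    return f"{family}{capacity}"


print(validate_redis_sku("Premium", "P", 2))  # P2, the size this tutorial creates
```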

Execute the following code cell to create an Azure Cache for Redis with premium tier, SKU family P and cache capacity 2. It may take approximately 5-10 minutes to provision the Redis instance.

# Create a new Azure Cache for Redis
from azure.ai.ml.identity import AzureMLOnBehalfOfCredential
from azure.mgmt.redis import RedisManagementClient
from azure.mgmt.redis.models import RedisCreateParameters, Sku, SkuFamily, SkuName

management_client = RedisManagementClient(
    AzureMLOnBehalfOfCredential(), redis_subscription_id
)

# Provisioning the Redis instance usually takes about 5-10 minutes.
# If the begin_create() call below still hangs after that, check the status of the
# Redis instance in the Azure portal, and cancel the cell if provisioning has completed.
# This sample uses a PREMIUM tier Redis SKU from family P, which can cost more than a
# STANDARD tier SKU from family C. Choose the SKU tier and family according to your
# performance and pricing requirements.
redis_arm_id = (
    management_client.redis.begin_create(
        resource_group_name=redis_resource_group_name,
        name=redis_name,
        parameters=RedisCreateParameters(
            location=redis_location,
            sku=Sku(name=SkuName.PREMIUM, family=SkuFamily.P, capacity=2),
            # Public network access can only be disabled when the cache is created
            public_network_access="Disabled",
        ),
    )
    .result()
    .id
)

print(redis_arm_id)
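`redis_arm_id` holds the cache's full Azure Resource Manager (ARM) resource ID, and the outbound rules later in this tutorial need that ID. The sketch below splits such an ID into its parts. It assumes the common top-level shape `/subscriptions/{sub}/resourceGroups/{rg}/providers/{namespace}/{type}/{name}` with no child resources. `parse_arm_id` is a hypothetical helper, and the ID in the example is a placeholder.

```python
from typing import Dict


def parse_arm_id(resource_id: str) -> Dict[str, str]:
    """Split a top-level ARM resource ID into its parts.

    Assumes /subscriptions/{sub}/resourceGroups/{rg}/providers/{namespace}/{type}/{name}.
    Fixed segment names are compared case-insensitively, because ARM resource IDs
    are case-insensitive.
    """
    parts = resource_id.strip("/").split("/")
    if (
        len(parts) != 8
        or parts[0].lower() != "subscriptions"
        or parts[2].lower() != "resourcegroups"
        or parts[4].lower() != "providers"
    ):
        raise ValueError(f"unexpected resource ID shape: {resource_id!r}")
    return {
        "subscription": parts[1],
        "resource_group": parts[3],
        "namespace": parts[5],
        "type": parts[6],
        "name": parts[7],
    }


# Placeholder ID with the same shape as the value printed by the cell above
example_id = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/my-rg/providers/Microsoft.Cache/Redis/my-redis"
)
parsed = parse_arm_id(example_id)
print(parsed["resource_group"], parsed["name"])  # my-rg my-redis
```

If you build an outbound rule's `service_resource_id` by hand, you can compare it with `parse_arm_id(redis_arm_id)` to catch a subscription or resource group mismatch.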

Update feature store with the online store

Attach the Azure Cache for Redis to the feature store to use it as the online materialization store. The next code cell creates a YAML specification file that defines the feature store's outbound rule to the online store, sets the online store target, and disables public network access.

# Create a YAML specification file with the outbound rules defined for the feature store.
# Rule 1: private endpoint (PE) to the online store (Azure Cache for Redis).
# This rule is optional if you don't use an online store.
import yaml

config = {
    "public_network_access": "disabled",
    "managed_network": {
        "isolation_mode": "allow_internet_outbound",
        "outbound_rules": [
            {
                "name": "sourceruleredis",
                "destination": {
                    "spark_enabled": "true",
                    "subresource_target": "redisCache",
                    # Full ARM ID of the Redis cache created above
                    "service_resource_id": redis_arm_id,
                },
                "type": "private_endpoint",
            },
        ],
    },
    "online_store": {"target": redis_arm_id, "type": "redis"},
}

feature_store_managed_vnet_yaml = (
    root_dir + "/featurestore/feature_store_managed_vnet_config.yaml"
)

with open(feature_store_managed_vnet_yaml, "w") as outfile:
    yaml.dump(config, outfile, default_flow_style=False)

The next code cell updates the feature store with the generated YAML specification file, which contains the outbound rules for the online store.

!az ml feature-store update --file $feature_store_managed_vnet_yaml --name $featurestore_name --resource-group $featurestore_resource_group_name

Update project workspace outbound rules

The project workspace needs access to the online store. The following code cell creates a YAML specification file with required outbound rules for the project workspace.

import yaml

config = {
    "managed_network": {
        "isolation_mode": "allow_internet_outbound",
        "outbound_rules": [
            {
                "name": "onlineruleredis",
                "destination": {
                    "spark_enabled": "true",
                    "subresource_target": "redisCache",
                    # Full ARM ID of the Redis cache created earlier
                    "service_resource_id": redis_arm_id,
                },
                "type": "private_endpoint",
            },
        ],
    }
}

project_ws_managed_vnet_yaml = (
    root_dir + "/featurestore/project_ws_managed_vnet_config.yaml"
)

with open(project_ws_managed_vnet_yaml, "w") as outfile:
    yaml.dump(config, outfile, default_flow_style=False)
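The feature store and project workspace configurations above build nearly identical private-endpoint rules for the same Redis cache. One way to keep them in sync is to generate both from a helper. This is a minimal sketch. `redis_private_endpoint_rule` and `managed_network_config` are hypothetical helpers whose keys mirror the dictionaries in the cells above. The ID is a placeholder, named so that it doesn't overwrite the notebook's `redis_arm_id`.

```python
import json
from typing import Any, Dict, List


def redis_private_endpoint_rule(rule_name: str, redis_id: str) -> Dict[str, Any]:
    """Build one private-endpoint outbound rule that targets an Azure Cache for Redis."""
    return {
        "name": rule_name,
        "type": "private_endpoint",
        "destination": {
            "service_resource_id": redis_id,
            "subresource_target": "redisCache",
            "spark_enabled": "true",  # same string value the cells above use
        },
    }


def managed_network_config(rules: List[Dict[str, Any]], **top_level: Any) -> Dict[str, Any]:
    """Wrap outbound rules in a managed_network section; extra keys go at the top level."""
    config = {
        "managed_network": {
            "isolation_mode": "allow_internet_outbound",
            "outbound_rules": rules,
        }
    }
    config.update(top_level)
    return config


# Placeholder; in the notebook, you'd pass the redis_arm_id value printed earlier
example_redis_id = "/subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Cache/Redis/my-redis"

feature_store_config = managed_network_config(
    [redis_private_endpoint_rule("sourceruleredis", example_redis_id)],
    public_network_access="disabled",
    online_store={"target": example_redis_id, "type": "redis"},
)
project_ws_config = managed_network_config(
    [redis_private_endpoint_rule("onlineruleredis", example_redis_id)]
)
print(json.dumps(project_ws_config, indent=2))
```

You can write either dictionary to its YAML file with `yaml.dump(...)`, as the original cells do.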

Execute the next code cell to update the project workspace with the generated YAML specification file, which contains the outbound rules for the online store.

# Update the project workspace to create private endpoints for the defined outbound rules (this can take approximately 15 minutes)

!az ml workspace update --file $project_ws_managed_vnet_yaml --name $project_ws_name --resource-group $project_ws_rg

Materialize transactions feature set to online store

The next code cell enables online materialization for the transactions feature set.

# Update the feature set to enable online materialization
transactions_featureset_path = (
    root_dir
    + "/featurestore/featuresets/transactions/featureset_asset_online_enabled.yaml"
)

!az ml feature-set update --file $transactions_featureset_path --resource-group $featurestore_resource_group_name --workspace-name $featurestore_name

The next code cell defines the start and end times for the feature materialization window, and submits a backfill materialization job.

feature_window_start_time = "2024-01-24T00:00:00.000Z"

feature_window_end_time = "2024-01-25T00:00:00.000Z"

!az ml feature-set backfill --name transactions --version 1 --by-data-status "['None']" --feature-window-start-time $feature_window_start_time --feature-window-end-time $feature_window_end_time --feature-store-name $featurestore_name --resource-group $featurestore_resource_group_name
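With `--by-data-status "['None']"`, the backfill targets only the parts of the feature window that have no materialized data yet. The sketch below models that selection under stated assumptions. The window is split into hypothetical one-day intervals, each interval carries a status string, and intervals with no recorded status count as "None". The "Complete" label used for an already-materialized interval is an assumed value. All helper names are hypothetical, and this is not how the service tracks data status.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterator, List, Set, Tuple

Interval = Tuple[datetime, datetime]


def parse_window_time(value: str) -> datetime:
    """Parse an ISO 8601 UTC timestamp such as "2024-01-24T00:00:00.000Z"."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def daily_intervals(start: datetime, end: datetime) -> Iterator[Interval]:
    """Split [start, end) into one-day intervals (the last one can be shorter)."""
    current = start
    while current < end:
        next_start = min(current + timedelta(days=1), end)
        yield current, next_start
        current = next_start


def select_for_backfill(
    intervals: List[Interval], status_by_start: Dict[datetime, str], wanted: Set[str]
) -> List[Interval]:
    """Keep intervals whose status is in `wanted`; intervals without a status count as "None"."""
    return [iv for iv in intervals if status_by_start.get(iv[0], "None") in wanted]


# Hypothetical three-day window in which only the first day is already materialized
window_start = parse_window_time("2024-01-22T00:00:00.000Z")
window_end = parse_window_time("2024-01-25T00:00:00.000Z")
statuses = {parse_window_time("2024-01-22T00:00:00.000Z"): "Complete"}

to_backfill = select_for_backfill(
    list(daily_intervals(window_start, window_end)), statuses, {"None"}
)
for interval_start, interval_end in to_backfill:
    print(interval_start.isoformat(), "->", interval_end.isoformat())
```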

Use the registered features to generate training data

Load observation data

First, explore the observation data. Observation data is the data captured at the time of an event, and it typically forms the core data for training and inference. It's joined with feature data to create the full training dataset. In this case, it contains core transaction data, including transaction ID, account ID, and transaction amount values. Because this observation data is used for training, it also has the target variable (is_fraud) appended.

observation_data_path = f"abfss://{storage_file_system_name_observation_data}@{storage_account_name}.dfs.core.windows.net/train/*.parquet"

observation_data_df = spark.read.parquet(observation_data_path)

obs_data_timestamp_column = "timestamp"

display(observation_data_df)

# Note: the timestamp column is displayed in a different format. Optionally, you can call observation_data_df.show() to see correctly formatted values

Get the registered feature set, and list its features

Next, get a feature set by providing its name and version, and then list features in this feature set. Also, print some sample feature values.

# look up the featureset by providing name and version

transactions_featureset = featurestore.feature_sets.get("transactions", "1")

# list its features

transactions_featureset.features

# print sample values

display(transactions_featureset.to_spark_dataframe().head(5))

Select features, and generate training data

Select features for the training data, and use the feature store SDK to generate the training data.

from azureml.featurestore import get_offline_features

# You can select features in a Pythonic way
features = [
    transactions_featureset.get_feature("transaction_amount_7d_sum"),
    transactions_featureset.get_feature("transaction_amount_7d_avg"),
]

# You can also specify features in string form: featureset:version:feature
more_features = [
    "transactions:1:transaction_3d_count",
    "transactions:1:transaction_amount_3d_avg",
]

more_features = featurestore.resolve_feature_uri(more_features)
features.extend(more_features)

# Generate a training dataframe by joining feature data with observation data
training_df = get_offline_features(
    features=features,
    observation_data=observation_data_df,
    timestamp_column=obs_data_timestamp_column,
)

# If you see a message that the feature set isn't materialized, you can ignore it:
# materialization is optional for generating training data.
display(training_df)

# Note: the timestamp column is displayed in a different format. Optionally, you can call training_df.show() to see correctly formatted values
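The string form used for `more_features` packs three parts into one value: feature set name, version, and feature name. The sketch below shows how such strings decompose. It also shows how you could list which feature set versions a selection depends on. `FeatureRef`, `parse_feature_ref`, and `required_feature_sets` are hypothetical helpers for illustration only. In the notebook, `featurestore.resolve_feature_uri()` resolves the strings against the feature store.

```python
from typing import List, NamedTuple, Tuple


class FeatureRef(NamedTuple):
    feature_set: str
    version: str
    feature: str


def parse_feature_ref(ref: str) -> FeatureRef:
    """Parse a "featureset:version:feature" string into its three parts."""
    parts = ref.split(":")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected 'featureset:version:feature', got {ref!r}")
    return FeatureRef(*parts)


def required_feature_sets(refs: List[str]) -> List[Tuple[str, str]]:
    """Return the distinct (feature set, version) pairs that a list of feature strings needs."""
    return sorted({(r.feature_set, r.version) for r in map(parse_feature_ref, refs)})


refs = ["transactions:1:transaction_3d_count", "transactions:1:transaction_amount_3d_avg"]
print([parse_feature_ref(r).feature for r in refs])
print(required_feature_sets(refs))  # [('transactions', '1')]
```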

A point-in-time join appended the features to the training data.
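A point-in-time join attaches, to each observation row, the latest feature value for the same key that was known at the observation's timestamp, so the training data never sees future values. The sketch below is a simplified, stdlib-only model of that idea. It uses the `temporal_join_lookback` of 1 day from the spec as the maximum feature age, handles a single feature column, and ignores `source_delay`. `point_in_time_join` and the sample data are hypothetical, and this is not the SDK's `get_offline_features` implementation.

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from typing import Any, Dict, List, Optional, Tuple

FeatureRow = Tuple[str, datetime, float]  # (accountID, feature timestamp, value)


def point_in_time_join(
    observations: List[Dict[str, Any]],
    feature_rows: List[FeatureRow],
    lookback: timedelta,
) -> List[Dict[str, Any]]:
    """For each observation, attach the latest feature value for the same accountID whose
    timestamp is at or before the observation time and no older than `lookback`."""
    # Index feature rows per key, sorted by timestamp, for binary search
    times: Dict[str, List[datetime]] = {}
    values: Dict[str, List[float]] = {}
    for key, ts, value in sorted(feature_rows, key=lambda row: row[1]):
        times.setdefault(key, []).append(ts)
        values.setdefault(key, []).append(value)

    joined = []
    for obs in observations:
        key_times = times.get(obs["accountID"], [])
        i = bisect_right(key_times, obs["timestamp"]) - 1  # last row at or before the event
        match: Optional[float] = None
        if i >= 0 and obs["timestamp"] - key_times[i] <= lookback:
            match = values[obs["accountID"]][i]
        joined.append({**obs, "transaction_amount_7d_sum": match})
    return joined


feature_rows = [
    ("A1", datetime(2024, 1, 20), 100.0),
    ("A1", datetime(2024, 1, 23), 150.0),
    ("A2", datetime(2024, 1, 10), 40.0),
]
observations = [
    {"accountID": "A1", "timestamp": datetime(2024, 1, 23, 6), "is_fraud": 0},
    {"accountID": "A1", "timestamp": datetime(2024, 1, 20, 12), "is_fraud": 0},
    {"accountID": "A2", "timestamp": datetime(2024, 1, 23), "is_fraud": 1},
]
joined = point_in_time_join(observations, feature_rows, lookback=timedelta(days=1))
print([row["transaction_amount_7d_sum"] for row in joined])  # [150.0, 100.0, None]
```

The second observation gets 100.0 rather than the later 150.0, which avoids leaking future data. The third gets no value because the only A2 feature row is older than the 1-day lookback.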

Optional next steps

Now that you've created a secure feature store and submitted a materialization run, you can continue through the tutorial series to build a deeper understanding of the feature store.

This tutorial combines steps from tutorials 1 and 2 of this series. If you run the other tutorial notebooks, replace the public storage containers they use with the storage containers created in this notebook, to preserve network isolation.

This concludes the tutorial. Your training data uses features from a feature store. You can either save it to storage for later use, or directly run model training on it.