Applies to: Azure Data Factory, Azure Synapse Analytics

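This article shows you how to use Data Factory to load data from Microsoft 365 (Office 365) into Azure Blob storage. You can follow similar steps to copy data to Azure Data Lake Gen2. For general information about copying data from Microsoft 365 (Office 365), see the Microsoft 365 (Office 365) connector article.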

Create a data factory

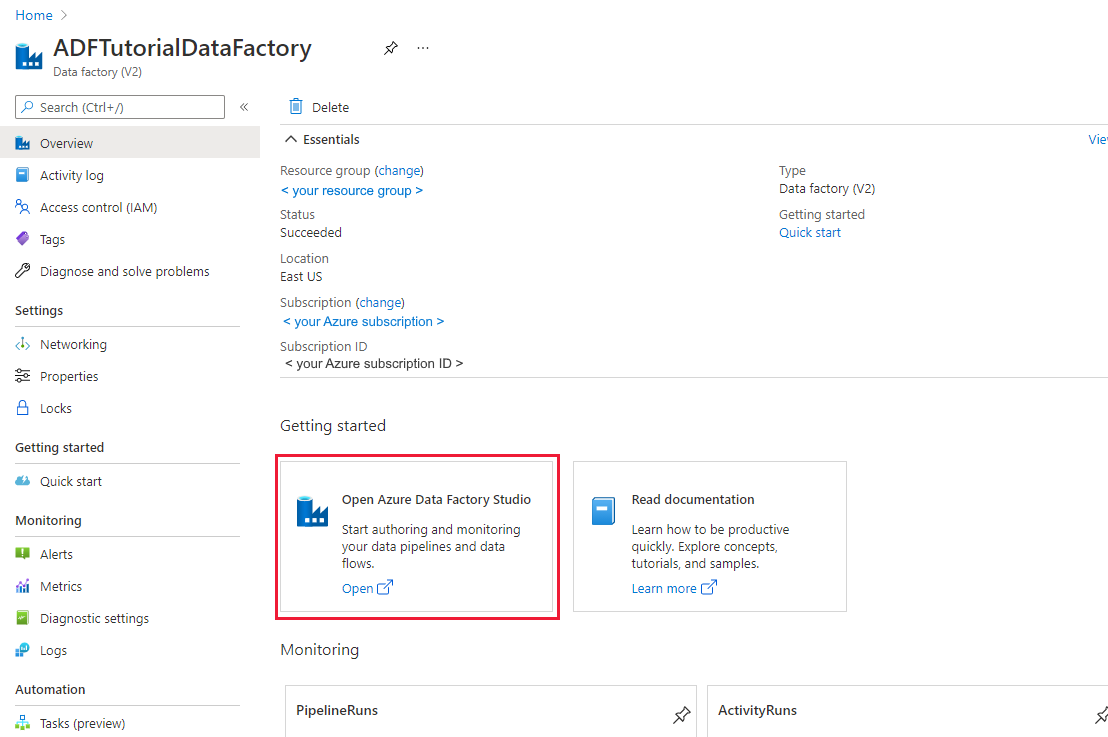

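If you have not created a data factory yet, follow the steps in Quickstart: Create a data factory by using the Azure portal and Azure Data Factory Studio. After it is created, browse to the data factory in the Azure portal.

On the "Open Azure Data Factory Studio" tile, select "Open" to launch the Data Integration application in a separate tab.

Create a pipeline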

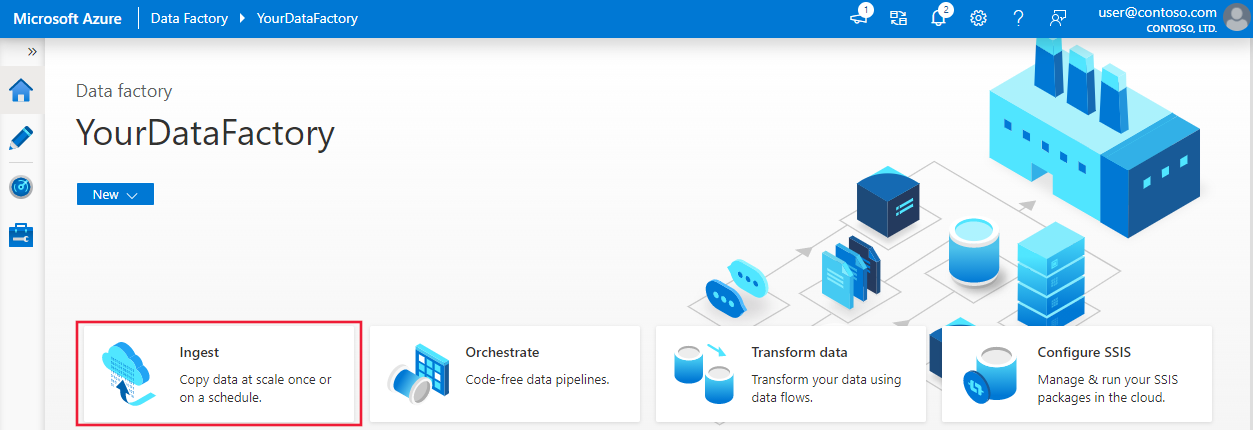

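On the home page, select "Orchestrate".

In the "General" tab for the pipeline, enter "CopyPipeline" as the name of the pipeline.

In the "Activities" toolbox, under the "Move & Transform" category, drag the Copy activity from the toolbox onto the pipeline designer surface. Specify "CopyFromOffice365ToBlob" as the activity name.

Note

Use the Azure integration runtime in both the source and sink linked services. The self-hosted integration runtime and the managed virtual network integration runtime are not supported.

Configure source

Go to the pipeline > "Source" tab, and select "+ New" to create a source dataset.

In the "New Dataset" window, select "Microsoft 365 (Office 365)", and then select "Continue".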

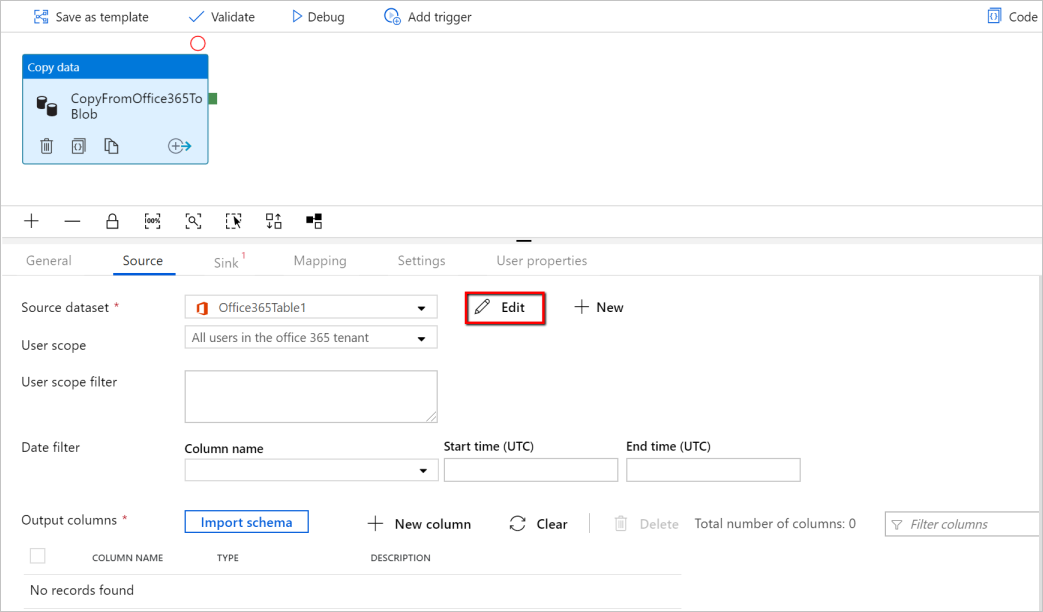

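You are now in the copy activity configuration tab. Select the "Edit" button next to the Microsoft 365 (Office 365) dataset to continue configuring the data.

A new tab opens for the Microsoft 365 (Office 365) dataset. In the "General" tab at the bottom of the Properties window, enter "SourceOffice365Dataset" as the name.

Go to the "Connection" tab of the Properties window. Next to the "Linked service" text box, select "+ New".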

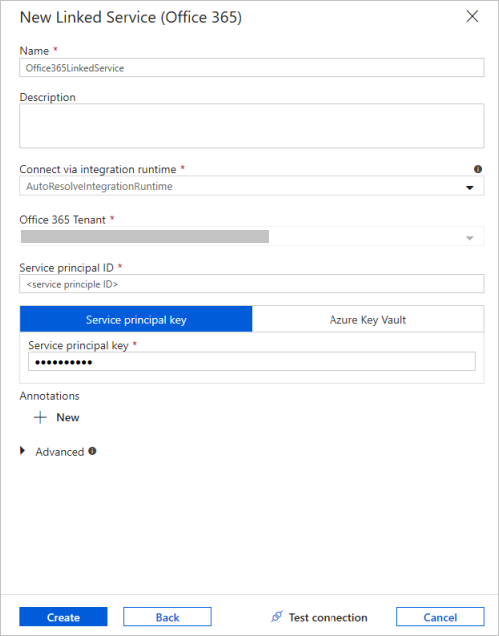

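In the "New Linked Service" window, enter "Office365LinkedService" as the name, enter the service principal ID and the service principal key, then test the connection and select "Create" to deploy the linked service.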

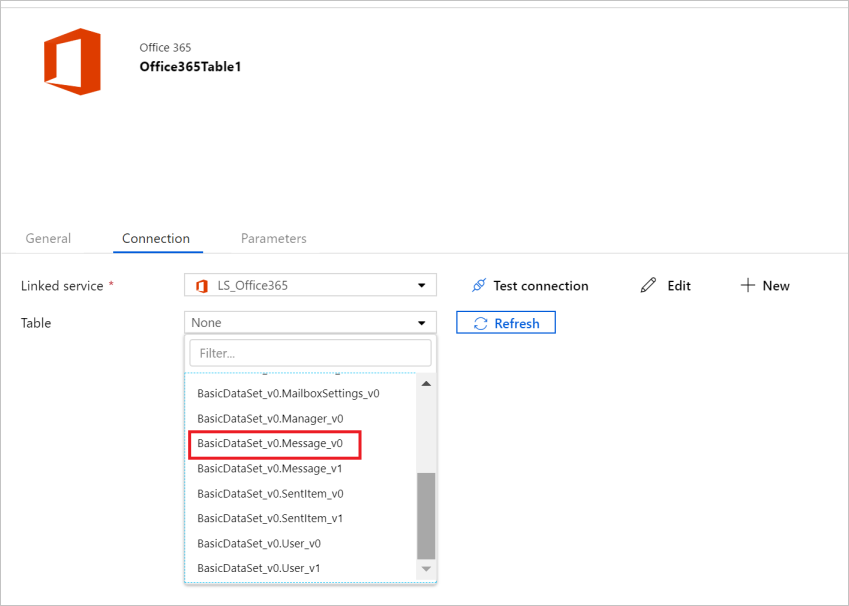
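
For readers who prefer to review the linked service as JSON, the following is a minimal sketch of what the definition might look like, built as a plain Python dictionary. The property names (`office365TenantId`, `servicePrincipalTenantId`, `servicePrincipalId`, `servicePrincipalKey`) reflect the connector's JSON schema as commonly documented, not anything stated in this tutorial, and the placeholder values are hypothetical. Compare the result with the "Code" view in the studio before relying on it.

```python
import json


def office365_linked_service(name, office365_tenant_id, sp_tenant_id, sp_id, sp_key):
    """Build a linked service definition as a plain dict. No Azure calls are made."""
    return {
        "name": name,
        "properties": {
            "type": "Office365",
            "typeProperties": {
                # Assumed property names; verify against the studio's JSON view.
                "office365TenantId": office365_tenant_id,
                "servicePrincipalTenantId": sp_tenant_id,
                "servicePrincipalId": sp_id,
                "servicePrincipalKey": {"type": "SecureString", "value": sp_key},
            },
        },
    }


# Placeholder values are hypothetical; substitute your own tenant and principal.
linked_service = office365_linked_service(
    "Office365LinkedService",
    "<office-365-tenant-id>",
    "<service-principal-tenant-id>",
    "<service-principal-id>",
    "<service-principal-key>",
)
print(json.dumps(linked_service, indent=2))
```

In practice the key would normally come from a secret store rather than being written inline.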

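After the linked service is created, you return to the dataset settings. Next to "Table", select the down arrow to expand the list of available Microsoft 365 (Office 365) datasets, and then choose "BasicDataSet_v0.Message_v0" from the drop-down list: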

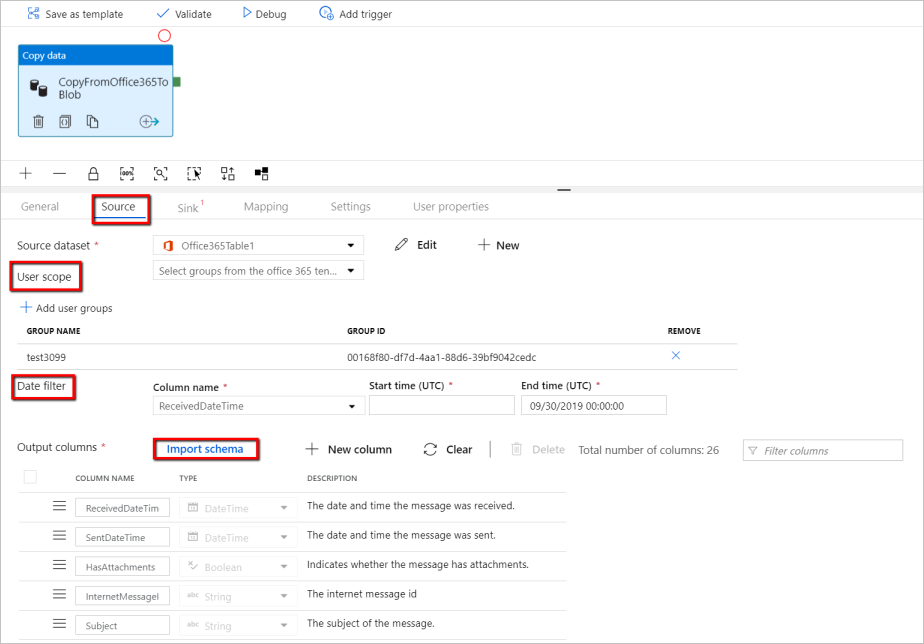

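Now go back to the pipeline > "Source" tab to continue configuring the additional properties for Microsoft 365 (Office 365) data extraction. User scope and user scope filter are optional predicates that you can define to restrict the data you extract from Microsoft 365 (Office 365). To learn how to configure these settings, see the Microsoft 365 (Office 365) dataset properties section.

You must choose one of the date filters and provide the start time and end time values.

Select the "Import Schema" tab to import the schema for the Message dataset.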
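
The date filter and the optional user scope settings end up as properties of the copy activity source. The sketch below shows one way to assemble such a source block in Python. The property names (`dateFilterColumn`, `startTime`, `endTime`, `allowedGroups`), the source type `Office365Source`, and the column name `CreatedDateTime` are assumptions that do not appear in this tutorial; check them against the JSON that the studio generates.

```python
from datetime import datetime, timezone


def office365_source(date_filter_column, start, end, allowed_groups=None):
    """Build the copy activity source settings as a plain dict.

    start and end must be timezone-aware datetimes; they are written as UTC.
    """
    if start >= end:
        raise ValueError("start time must be earlier than end time")
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    source = {
        "type": "Office365Source",
        # Required date filter: a column plus a start and end time.
        "dateFilterColumn": date_filter_column,
        "startTime": start.astimezone(timezone.utc).strftime(fmt),
        "endTime": end.astimezone(timezone.utc).strftime(fmt),
    }
    # Optional user scope: restrict extraction to the listed groups.
    if allowed_groups:
        source["allowedGroups"] = list(allowed_groups)
    return source


source = office365_source(
    "CreatedDateTime",  # assumed column name for the Message dataset
    datetime(2024, 1, 1, tzinfo=timezone.utc),
    datetime(2024, 2, 1, tzinfo=timezone.utc),
)
print(source)
```

The range check mirrors the requirement that both a start and an end time are supplied; leaving out `allowed_groups` corresponds to not setting a user scope.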

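Configure sink

Go to the pipeline > "Sink" tab, and select "+ New" to create a sink dataset.

In the "New Dataset" window, notice that only the destinations supported for copying from Microsoft 365 (Office 365) are available. Select "Azure Blob Storage", select the "Binary" format, and then select "Continue". In this tutorial, you copy Microsoft 365 (Office 365) data into Azure Blob storage.

Select the "Edit" button next to the Azure Blob Storage dataset to continue configuring the data.

In the "General" tab of the Properties window, enter "OutputBlobDataset" in "Name".

Go to the "Connection" tab of the Properties window. Next to the "Linked service" text box, select "+ New".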

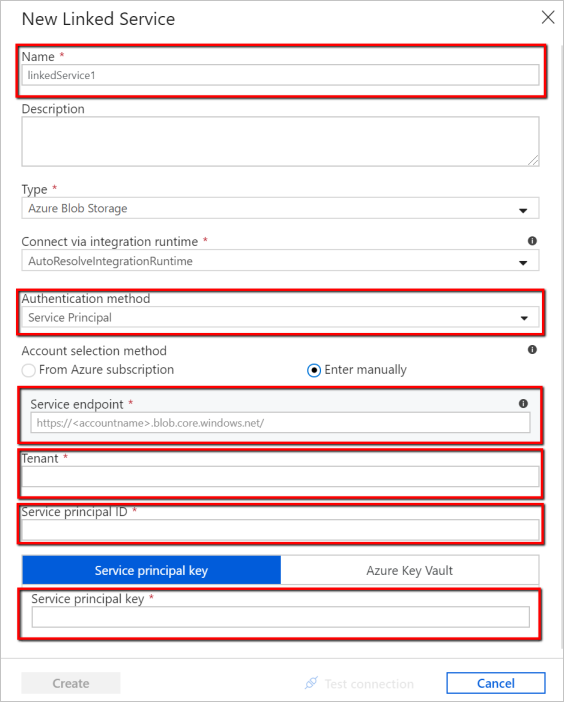

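In the "New Linked Service" window, enter "AzureStorageLinkedService" as the name, select "Service Principal" from the authentication method drop-down list, fill in "Service Endpoint", "Tenant", "Service principal ID", and "Service principal key", and then select "Save" to deploy the linked service. To learn how to set up service principal authentication for Azure Blob storage, see the Azure Blob storage connector article.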
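
The four fields above map onto a linked service definition along the following lines. This is a sketch under assumptions: the type name `AzureBlobStorage` and the property names `serviceEndpoint`, `tenant`, `servicePrincipalId`, and `servicePrincipalKey` are taken from the Blob storage connector's JSON schema as commonly documented, and the endpoint and IDs shown are hypothetical placeholders.

```python
def blob_linked_service(name, service_endpoint, tenant, sp_id, sp_key):
    """Build an Azure Blob storage linked service definition as a plain dict."""
    if not service_endpoint.startswith("https://"):
        raise ValueError("service endpoint is expected to be an https:// URL")
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {
                # Assumed property names for service principal authentication.
                "serviceEndpoint": service_endpoint,
                "tenant": tenant,
                "servicePrincipalId": sp_id,
                "servicePrincipalKey": {"type": "SecureString", "value": sp_key},
            },
        },
    }


# Hypothetical placeholder values.
blob_service = blob_linked_service(
    "AzureStorageLinkedService",
    "https://<account-name>.blob.core.windows.net/",
    "<tenant-id>",
    "<service-principal-id>",
    "<service-principal-key>",
)
print(blob_service["properties"]["typeProperties"]["serviceEndpoint"])
```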

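Validate the pipeline

To validate the pipeline, select "Validate" from the toolbar.

You can also view the JSON code associated with the pipeline by selecting "Code" in the upper-right corner.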
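
As a rough guide to what the "Code" view contains, the sketch below assembles a pipeline definition from the names used in this tutorial and lists the datasets it references. The overall shape (`activities`, `inputs`, `outputs`, `typeProperties`) and the type names `Office365Source` and `BinarySink` are assumptions based on the usual copy activity schema; the JSON that the studio generates will contain additional properties.

```python
import json

pipeline = {
    "name": "CopyPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyFromOffice365ToBlob",
                "type": "Copy",
                "inputs": [
                    {"referenceName": "SourceOffice365Dataset", "type": "DatasetReference"}
                ],
                "outputs": [
                    {"referenceName": "OutputBlobDataset", "type": "DatasetReference"}
                ],
                "typeProperties": {
                    # Assumed type names; source filters are omitted for brevity.
                    "source": {"type": "Office365Source"},
                    "sink": {"type": "BinarySink"},
                },
            }
        ]
    },
}


def referenced_datasets(pipeline_def):
    """Return the dataset names that the pipeline's activities read or write."""
    names = []
    for activity in pipeline_def["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            names.append(ref["referenceName"])
    return names


print(json.dumps(pipeline, indent=2))
print(referenced_datasets(pipeline))
```

Listing the referenced datasets is a quick local check that the names in the pipeline match the datasets created in the earlier steps.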

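Publish the pipeline

In the top toolbar, select "Publish All". This action publishes the entities you created (datasets and pipelines) to Data Factory.

Trigger the pipeline manually

Select "Add Trigger" on the toolbar, and then select "Trigger Now". On the "Pipeline Run" page, select "Finish".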

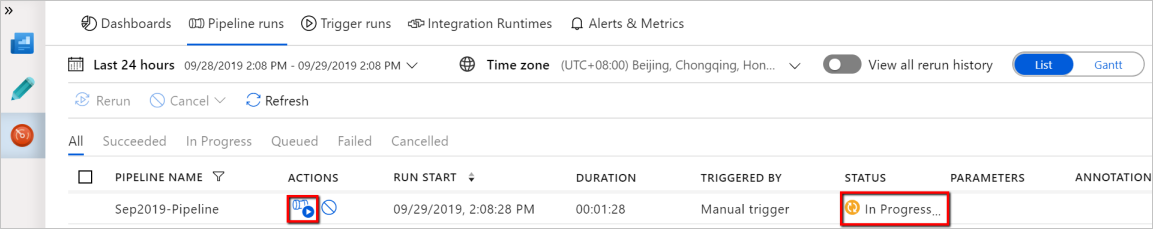

Monitor the pipeline

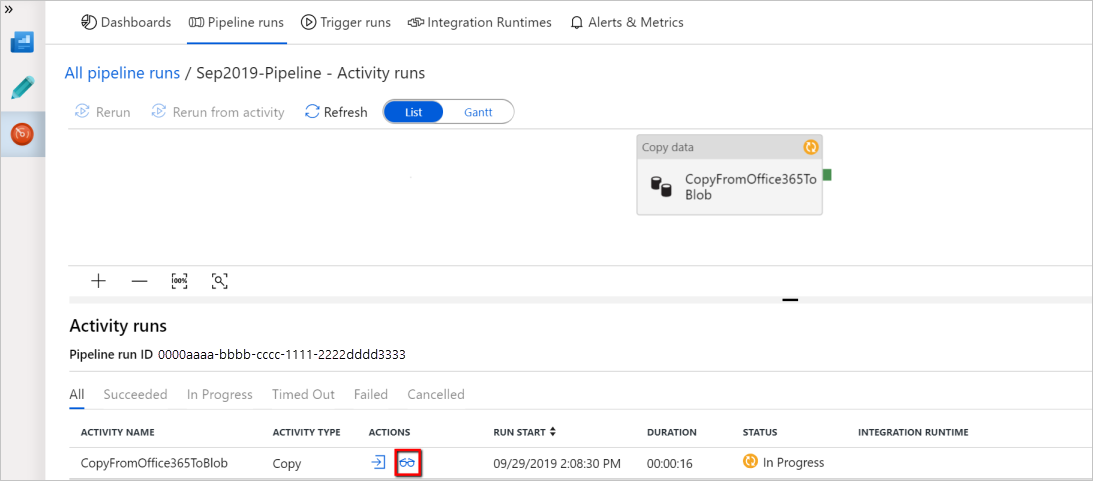

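Go to the "Monitor" tab on the left. You see a pipeline run that was started by a manual trigger. You can use the links in the "Actions" column to view activity details and to rerun the pipeline.

To see the activity runs associated with the pipeline run, select the "View Activity Runs" link in the "Actions" column. This example has only one activity, so the list shows only one entry. For details about the copy operation, select the "Details" link (eyeglasses icon) in the "Actions" column.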

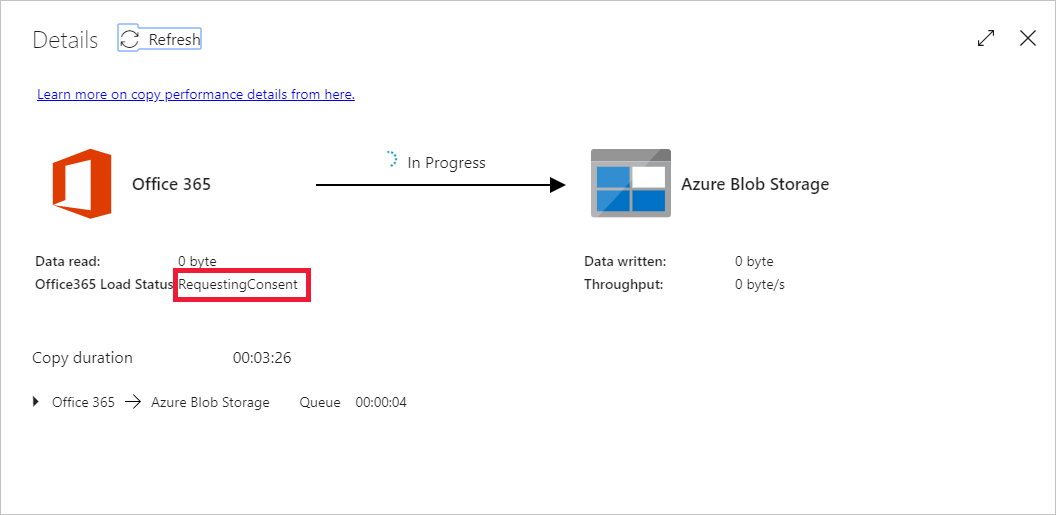

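If this is the first time you are requesting data for this context (the combination of the data table being accessed, the destination account the data is loaded into, and the user identity making the data access request), the copy activity status shows as "In Progress", and you see the status "RequestingConsent" only when you select the "Details" link under "Actions". A member of the data access approver group must approve the request in Privileged Access Management before the data extraction can proceed.

Status while requesting consent: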

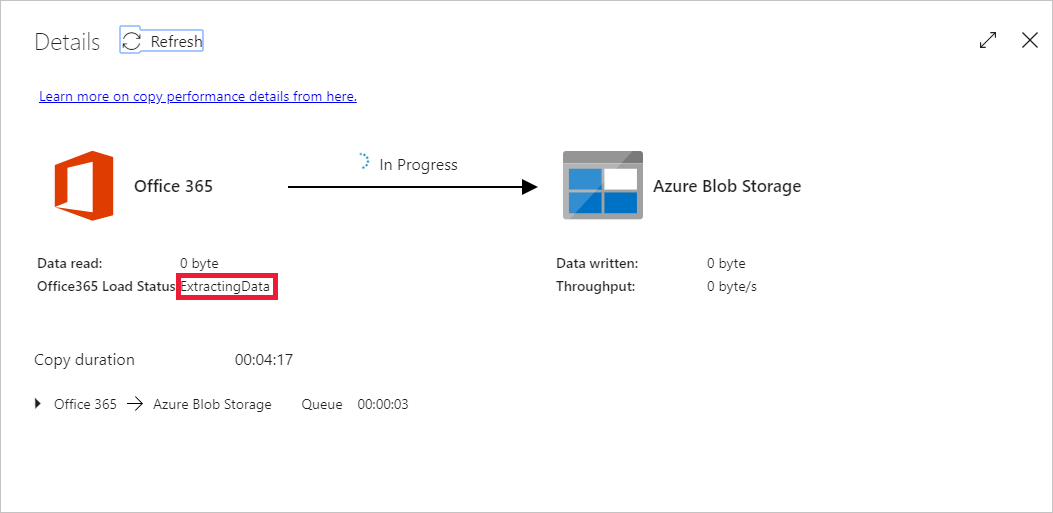

Status while extracting data:

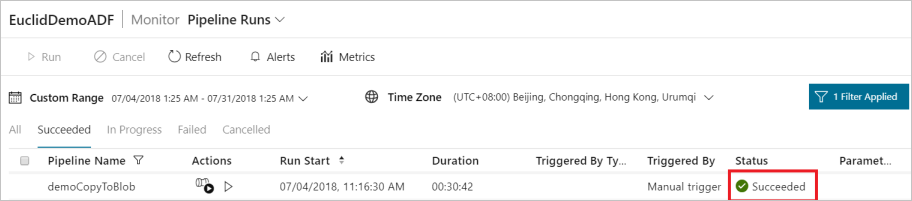

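After consent is provided, data extraction continues and, after some time, the pipeline run shows as "Succeeded".

Now go to the destination Azure Blob storage and verify that the Microsoft 365 (Office 365) data has been extracted in binary format.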
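
The status behavior described above can be summarized as a small decision function. This is only an illustrative sketch: the function name is hypothetical, and the status strings are the labels quoted in this tutorial, which may differ from the values returned by a monitoring API.

```python
def describe_copy_state(activity_status, detail_status=None):
    """Map the statuses shown in the monitoring view to a plain description."""
    if activity_status == "Succeeded":
        return "extraction finished"
    if activity_status == "In Progress":
        # The consent state is visible only in the activity details.
        if detail_status == "RequestingConsent":
            return "waiting for approval in Privileged Access Management"
        return "extracting data"
    return "unknown state"


print(describe_copy_state("In Progress", "RequestingConsent"))
print(describe_copy_state("In Progress"))
print(describe_copy_state("Succeeded"))
```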

Related content

Advance to the following article to learn about Azure Synapse Analytics support: