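Copy data to Azure Data Box Disk and validate

After the disks are connected and unlocked, you can copy data from your source data server to the disks. After the copy is complete, validate the data to ensure that it will upload to Azure successfully.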

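Important

Azure Data Box now supports access tier assignment at the blob level. The steps in this tutorial reflect the updated data copy process and are specific to block blobs.

Access tier assignment isn't supported when you copy data with the Data Box Split Copy tool. If your use case requires access tier assignment, follow the steps in the Copy data to disks section and use the Robocopy utility to copy your data to the appropriate access tier.

For help with choosing the appropriate access tier for your block blob data, see the Determine appropriate access tiers for block blobs section.

The information in this section applies to orders placed after April 1, 2024.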

Note

This article references CentOS, a Linux distribution that has reached end-of-life (EOL) status. Consider your use and plan accordingly.

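This tutorial describes how to copy data from your host computer and generate checksums to verify data integrity.

In this tutorial, you learn how to:

- Determine appropriate access tiers for block blobs

- Copy data to Data Box Disk

- Verify data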

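Prerequisites

Before you begin, make sure that:

- You completed the Tutorial: Install and configure your Azure Data Box Disk.

- Your disks are unlocked and connected to a client computer.

- The client computer used to copy data to the disks is running a supported operating system.

- The intended storage type for your data matches the supported storage types.

- You reviewed the managed disk limits in Azure object size limits.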

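Determine appropriate access tiers for block blobs

Important

The information in this section applies to orders placed after April 1, 2024.

Azure Storage lets you store block blob data in different access tiers within the same storage account. This ability allows data to be organized and stored more efficiently based on how often it's accessed. The following table contains information and recommendations about Azure Storage access tiers.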

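| Tier | Recommendation | Best practice |
|---|---|---|
| Hot | Useful for online data that is accessed or modified frequently. This tier has the highest storage costs but the lowest access costs. | Data in this tier should be in regular and active use. |
| Cool | Useful for online data that is accessed or modified infrequently. This tier has lower storage costs and higher access costs than the hot tier. | Data in this tier should be stored for at least 30 days. |
| Cold | Useful for online data that is rarely accessed or modified but still requires fast retrieval. This tier has lower storage costs and higher access costs than the cool tier. | Data in this tier should be stored for at least 90 days. |
| Archive | Useful for offline data that is rarely accessed and has flexible latency requirements. | Data in this tier should be stored for at least 180 days. Data removed from the archive tier within 180 days is subject to an early deletion charge. |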

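For more information about blob access tiers, see Access tiers for blob data. For more detailed best practices, see Best practices for using blob access tiers.

You can transfer your block blob data to the appropriate access tier by copying it to the corresponding folder within Data Box Disk. This process is discussed in greater detail in the Copy data to disks section.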

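Copy data to disks

Review the following considerations before you copy data to the disks:

- It's your responsibility to copy local data to the share that corresponds to the appropriate data format. For instance, copy block blob data to the BlockBlob share and copy VHDs to the PageBlob share. If the local data format doesn't match the folder for the chosen storage type, the data upload to Azure fails in a later step.

- You can't copy data directly to a share's root folder. Instead, create a folder within the appropriate share and copy your data into it.

- Folders located at the root of the PageBlob share correspond to containers within your storage account. A new container is created for any folder whose name doesn't match an existing container in your storage account.

- Folders located at the root of the AzFile share correspond to Azure file shares. A new file share is created for any folder whose name doesn't match an existing file share in your storage account.

- The root level of the BlockBlob share contains one folder for each access tier. When you copy data to the BlockBlob share, create a subfolder within the top-level folder that corresponds to the desired access tier. As with the PageBlob share, a new container is created for any folder whose name doesn't match an existing container. Data within the container is copied to the tier that corresponds to the subfolder's top-level parent.

- A container is also created for any folder that resides at the root of the BlockBlob share, and the data it contains is copied to the container's default access tier. To ensure that your data is copied to the desired access tier, don't create folders at the root level.

Important

Data uploaded to the archive tier remains offline and must be rehydrated before it can be read or modified. Data copied to the archive tier must remain there for at least 180 days or be subject to an early deletion charge. The archive tier isn't supported for ZRS, GZRS, or RA-GZRS accounts.

- While copying data, make sure that the data size conforms to the size limits described in the Azure storage and Data Box Disk limits article.

- Don't disable BitLocker encryption on Data Box Disks. Disabling BitLocker encryption causes the upload to fail after the disks are returned. Disabling BitLocker also leaves the disks in an unlocked state, which creates a security concern.

- To preserve metadata such as ACLs, timestamps, and file attributes when transferring data to Azure Files, follow the guidance in the Preserving file ACLs, attributes, and timestamps with Azure Data Box Disk article.

- If you use both Data Box Disk and other applications to upload data at the same time, you might experience upload job failures and data corruption.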
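The folder layout described above (share, then access tier, then a subfolder that becomes the container) can be sketched as a small path helper. This is only an illustration: the tier folder names in `TIER_FOLDERS`, the drive letter, and the container name are assumptions, so check them against the folders that actually exist on your unlocked disk.

```python
from pathlib import PureWindowsPath

# Assumed tier folder names; verify against the folders precreated on your disk.
TIER_FOLDERS = {"hot": "Hot", "cool": "Cool", "cold": "Cold", "archive": "Archive"}


def blockblob_destination(drive: str, tier: str, container: str) -> PureWindowsPath:
    """Build <drive>:\\BlockBlob\\<tier folder>\\<container subfolder>."""
    key = tier.lower()
    if key not in TIER_FOLDERS:
        raise ValueError(f"unknown access tier: {tier!r}")
    # The subfolder under the tier folder maps to a container at upload time.
    return PureWindowsPath(f"{drive}:\\") / "BlockBlob" / TIER_FOLDERS[key] / container


# Hypothetical drive letter and container name, for illustration only.
print(blockblob_destination("E", "cool", "backups"))
```

Building the destination this way keeps data out of the BlockBlob root, where it would land in the container's default access tier instead of the tier you intended.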

重要

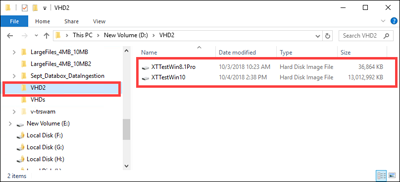

如果已在创建订单的过程中将托管磁盘指定为存储目标之一,那么以下部分就是适用的。

请确保上传到预创建文件夹的虚拟硬盘 (VHD) 在资源组中具有唯一的名称。 托管磁盘在 Data Box Disk 上跨所有预创建文件夹的资源组中必须具有唯一的名称。 如果使用多个 Data Box Disk,则托管磁盘名称在所有文件夹和磁盘中都必须是唯一的。 如果找到具有重复名称的 VHD 时,仅会转换其中之一为具有该名称的托管磁盘。 其他 VHD 将作为页 blob 上传到临时存储帐户中。

始终将 VHD 复制到某个预先创建的文件夹。 放置在这些文件夹外部或你创建的文件夹中的 VHD 将作为页 blob(而不是托管磁盘)上传到 Azure 存储帐户。

只能上传固定的 VHD 来创建托管磁盘。 不支持动态 VHD 文件、差异 VHD 文件和 VHDX 文件。

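The Data Box Disk Split Copy and Validation tools (`DataBoxDiskSplitCopy.exe` and `DataBoxDiskValidation.cmd`) report failures when they process long paths. These failures are common when long paths aren't enabled on the client and the paths and file names in your data copy exceed 256 characters. To avoid these failures, follow the guidance in the Enable long paths on your Windows client article.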
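Before running the tools, it can help to see whether any source paths cross the 256-character threshold mentioned above. The following is a minimal sketch, not part of the Data Box Disk toolset; the function names and the scanned root are hypothetical, and it simply counts characters in the full path string.

```python
import os

MAX_PATH_CHARS = 256  # threshold quoted in the paragraph above


def is_too_long(path: str, limit: int = MAX_PATH_CHARS) -> bool:
    """Return True when the full path has more characters than the limit."""
    return len(path) > limit


def find_long_paths(root: str, limit: int = MAX_PATH_CHARS) -> list[str]:
    """Walk root and collect every file or directory path longer than limit."""
    long_paths = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            full = os.path.join(dirpath, name)
            if is_too_long(full, limit):
                long_paths.append(full)
    return long_paths


if __name__ == "__main__":
    # Hypothetical source folder; replace with your own data root.
    for path in find_long_paths(r"C:\Repository"):
        print(len(path), path)
```

An empty result suggests the data set is unlikely to hit this particular failure; any hits indicate that long paths should be enabled first.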

Important

Data Box Disk Tools don't support PowerShell ISE.

Perform the following steps to connect to the Data Box Disk and copy data from your computer.

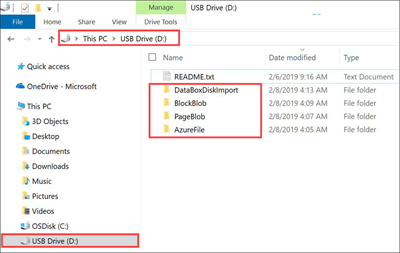

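View the contents of the unlocked drive. The list of precreated folders and subfolders in the drive varies according to the options you selected when placing the Data Box Disk order. Creating extra folders isn't permitted, because copying data to a user-created folder causes upload failures.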

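| Selected storage destination | Storage account type | Staging storage account type | Folders and subfolders |
|---|---|---|---|
| Storage account | GPv1 or GPv2 | NA | BlockBlob (Archive, Cold, Cool, Hot); AzureFile |
| Storage account | Blob storage account | NA | BlockBlob (Archive, Cold, Cool, Hot) |
| Managed disks | NA | GPv1 or GPv2 | ManagedDisk (PremiumSSD, StandardSSD, StandardHDD) |
| Storage account; Managed disks | GPv1 or GPv2 | GPv1 or GPv2 | BlockBlob (Archive, Cold, Cool, Hot); AzureFile; ManagedDisk (PremiumSSD, StandardSSD, StandardHDD) |
| Storage account; Managed disks | Blob storage account | GPv1 or GPv2 | BlockBlob (Archive, Cold, Cool, Hot); ManagedDisk (PremiumSSD, StandardSSD, StandardHDD) |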

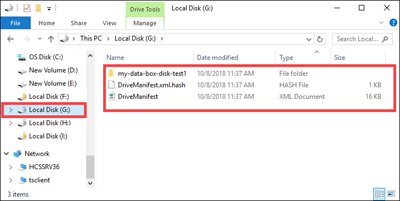

以下屏幕截图显示了指定 GPv2 存储帐户和存档层的顺序:

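Copy VHD or VHDX data to the PageBlob folder. All files copied to the PageBlob folder are copied into the default `$root` container within the Azure Storage account. A container is created in the Azure Storage account for each subfolder within the PageBlob folder.

Copy data to be placed in Azure file shares to a subfolder within the AzureFile folder. All files copied to the AzureFile folder are copied as files to a default container of type `databox-format-[GUID]`, for example `databox-azurefile-7ee19cfb3304122d940461783e97bf7b4290a1d7`.

You can't copy files directly to the BlockBlob root folder. Within the root folder, you find a subfolder corresponding to each of the available access tiers. To copy your blob data, first select the folder that corresponds to one of the access tiers. Next, create a subfolder within that tier's folder to store your data. Finally, copy your data to the newly created subfolder. The new subfolder represents the container that is created within the storage account during ingestion, and your data is uploaded to this container as blobs. As with the AzureFile share, a new blob storage container is created for each subfolder located at the BlockBlob root folder. The data within these folders is saved according to the storage account's default access tier.

Before you begin to copy data, move any files and folders that exist in the root directory to a different folder.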

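Important

All container, blob, and file names must conform to Azure naming conventions. If these rules aren't followed, the data upload to Azure fails.

When copying files, make sure that files don't exceed 4.7 TiB for block blobs, approximately 8 TiB for page blobs, and 1 TiB for Azure Files.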
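The per-file size limits above can be checked before the copy starts. The sketch below is an illustration under stated assumptions: the share folder names used as dictionary keys and the scanned path are hypothetical, and the page blob figure uses 8 TiB even though the text describes that limit as approximate.

```python
import os

TIB = 1024 ** 4

# Per-file limits taken from the text above (page blob value is approximate).
SIZE_LIMITS = {
    "BlockBlob": 4.7 * TIB,
    "PageBlob": 8 * TIB,
    "AzureFile": 1 * TIB,
}


def exceeds_limit(share: str, size_bytes: int) -> bool:
    """Return True when a file of size_bytes is too large for the given share."""
    return size_bytes > SIZE_LIMITS[share]


def oversized_files(root: str, share: str) -> list[str]:
    """List files under root whose size exceeds the limit for the target share."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            if exceeds_limit(share, os.path.getsize(full)):
                hits.append(full)
    return hits


if __name__ == "__main__":
    # Hypothetical source folder destined for the AzureFile share.
    print(oversized_files(r"C:\Repository", "AzureFile"))
```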

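You can use File Explorer's drag-and-drop functionality to copy data. You can also use any SMB-compatible file copy tool, such as Robocopy.

One benefit of using a file copy tool is the ability to start multiple copy jobs, as shown in the following Robocopy example:

    Robocopy <source> <destination> * /MT:64 /E /R:1 /W:1 /NFL /NDL /FFT /Log:c:\RobocopyLog.txt

Note

The parameters used in this example are based on the environment used during in-house testing. Your parameters and values are likely to differ.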

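The parameters and options for the command are used as follows:

| Parameter/option | Description |
|---|---|
| Source | Specifies the path to the source directory. |
| Destination | Specifies the path to the destination directory. |
| `/E` | Copies subdirectories, including empty directories. |
| `/MT[:n]` | Creates multithreaded copies with n threads, where n is an integer between 1 and 128. The default value for n is 8. |
| `/R:<n>` | Specifies the number of retries on failed copies. The default value of n is 1,000,000 retries. |
| `/W:<n>` | Specifies the wait time between retries, in seconds. The default value of n is 30, equivalent to a wait time of 30 seconds. |
| `/NFL` | Specifies that file names aren't logged. |
| `/NDL` | Specifies that directory names aren't logged. |
| `/FFT` | Assumes FAT file times with a resolution precision of two seconds. |
| `/Log:<log file>` | Writes the status output to the log file. Any existing log file is overwritten. |

Multiple disks can be used in parallel, with multiple jobs running on each disk. Keep in mind that duplicate file names are either overwritten or result in a copy error.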
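When several copy jobs run in parallel, generating the command lines from one place keeps the flags consistent and gives each job its own log file. The helper below is a hypothetical sketch that only assembles the argument list using the flags shown above; the paths in the usage section are placeholders, and launching the jobs is left to the caller on a Windows machine where Robocopy is available.

```python
def robocopy_command(source: str, destination: str, log_path: str, threads: int = 64) -> list[str]:
    """Assemble the Robocopy argument list used in the example above."""
    if not 1 <= threads <= 128:
        raise ValueError("/MT accepts an integer between 1 and 128")
    return [
        "Robocopy", source, destination, "*",
        f"/MT:{threads}",   # multithreaded copy
        "/E",               # include empty subdirectories
        "/R:1", "/W:1",     # one retry, one-second wait
        "/NFL", "/NDL",     # don't log file or directory names
        "/FFT",             # two-second FAT file time precision
        f"/Log:{log_path}", # overwrites any existing log file
    ]


if __name__ == "__main__":
    # Placeholder source folders, destinations, and log paths.
    jobs = [
        (r"C:\data\set1", r"E:\BlockBlob\Hot\set1", r"C:\logs\set1.txt"),
        (r"C:\data\set2", r"F:\BlockBlob\Hot\set2", r"C:\logs\set2.txt"),
    ]
    for src, dst, log in jobs:
        print(" ".join(robocopy_command(src, dst, log, threads=16)))
```

Giving each job a separate log path matters because `/Log` overwrites an existing file, so jobs that share a log would erase each other's output.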

Check the copy status while the job is in progress. The following sample shows the output of the robocopy command when copying files to the Data Box Disk.

    C:\Users>robocopy
    -------------------------------------------------------------------------------
       ROBOCOPY     ::     Robust File Copy for Windows
    -------------------------------------------------------------------------------

      Started : Thursday, March 8, 2018 2:34:53 PM
       Simple Usage :: ROBOCOPY source destination /MIR

             source :: Source Directory (drive:\path or \\server\share\path).
        destination :: Destination Dir  (drive:\path or \\server\share\path).
               /MIR :: Mirror a complete directory tree.

        For more usage information run ROBOCOPY /?

    ****  /MIR can DELETE files as well as copy them !

    C:\Users>Robocopy C:\Repository\guides \\10.126.76.172\AzFileUL\templates /MT:64 /E /R:1 /W:1 /FFT
    -------------------------------------------------------------------------------
       ROBOCOPY     ::     Robust File Copy for Windows
    -------------------------------------------------------------------------------

      Started : Thursday, March 8, 2018 2:34:58 PM
       Source : C:\Repository\guides\
         Dest : \\10.126.76.172\devicemanagertest1_AzFile\templates\

        Files : *.*

      Options : *.* /DCOPY:DA /COPY:DAT /MT:8 /R:1000000 /W:30

    ------------------------------------------------------------------------------

    100%        New File                 206        C:\Repository\guides\article-metadata.md
    100%        New File                 209        C:\Repository\guides\content-channel-guidance.md
    100%        New File                 732        C:\Repository\guides\index.md
    100%        New File                 199        C:\Repository\guides\pr-criteria.md
    100%        New File                 178        C:\Repository\guides\pull-request-co.md
    100%        New File                 250        C:\Repository\guides\pull-request-ete.md
    100%        New File                 174        C:\Repository\guides\create-images-markdown.md
    100%        New File                 197        C:\Repository\guides\create-links-markdown.md
    100%        New File                 184        C:\Repository\guides\create-tables-markdown.md
    100%        New File                 208        C:\Repository\guides\custom-markdown-extensions.md
    100%        New File                 210        C:\Repository\guides\file-names-and-locations.md
    100%        New File                 234        C:\Repository\guides\git-commands-for-master.md
    100%        New File                 186        C:\Repository\guides\release-branches.md
    100%        New File                 240        C:\Repository\guides\retire-or-rename-an-article.md
    100%        New File                 215        C:\Repository\guides\style-and-voice.md
    100%        New File                 212        C:\Repository\guides\syntax-highlighting-markdown.md
    100%        New File                 207        C:\Repository\guides\tools-and-setup.md
    ------------------------------------------------------------------------------

                   Total    Copied   Skipped  Mismatch    FAILED    Extras
        Dirs :         1         1         1         0         0         0
       Files :        17        17         0         0         0         0
       Bytes :     3.9 k     3.9 k         0         0         0         0
       Times :   0:00:05   0:00:00                       0:00:00   0:00:00

       Speed :                5620 Bytes/sec.
       Speed :               0.321 MegaBytes/min.
       Ended : Thursday, August 31, 2023 2:34:59 PM

To optimize performance, use the following Robocopy parameters when copying the data.

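| Platform | Mostly small files < 512 KB | Mostly medium files 512 KB-1 MB | Mostly large files > 1 MB |
|---|---|---|---|
| Data Box Disk | 4 Robocopy sessions*, 16 threads per session | 2 Robocopy sessions*, 16 threads per session | 2 Robocopy sessions*, 16 threads per session |

*Each Robocopy session can have a maximum of 7,000 directories and 150 million files.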

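For more information on the Robocopy command, read the Robocopy and a few examples article.

Open the target folder, then view and verify the copied files. If you encounter any errors during the copy process, download the log files for troubleshooting. The output of the robocopy command specifies the location of the log files.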

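Split and copy data to disks

The Data Box Split Copy tool helps split and copy data across two or more Azure Data Box Disks. The tool is available only on Windows computers. This optional procedure is helpful when you have a large dataset that needs to be split and copied across several disks.

Important

The Data Box Split Copy tool can also validate your data. If you use the Data Box Split Copy tool to copy data, you can skip the validation step.

Access tier assignment isn't supported when you copy data with the Data Box Split Copy tool. If your use case requires access tier assignment, follow the steps in the Copy data to disks section and use the Robocopy utility to copy your data to the appropriate access tier.

The Data Box Split Copy tool isn't supported with managed disks.

On your Windows computer, make sure that the Data Box Split Copy tool is downloaded and extracted to a local folder. This tool is included in the Data Box Disk toolset for Windows.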

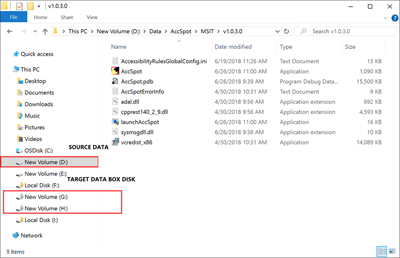

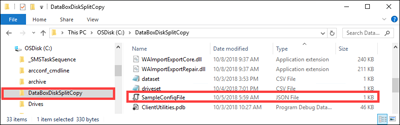

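Open File Explorer. Make a note of the data source drive and the drive letters assigned to the Data Box Disk.

Identify the source data to copy. For instance, in this case: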

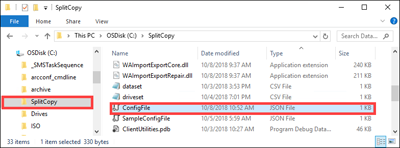

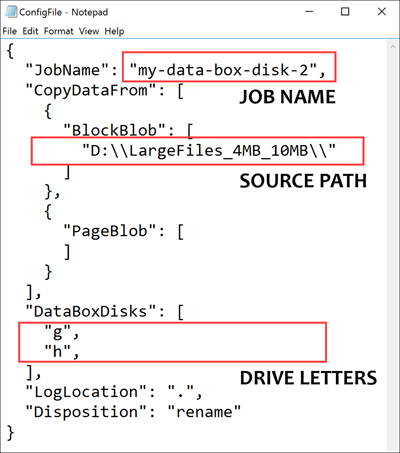

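Navigate to the folder where the software is extracted and locate the `SampleConfig.json` file. This file is read-only, but you can modify it and save it under a new name.

Modify the `SampleConfig.json` file.

- Provide a job name. A folder with this name is created on the Data Box Disk. The name is also used to create a container in the Azure storage account associated with these disks. The job name must follow the Azure container naming conventions.

- Supply a source path, making note of the path format in `SampleConfigFile.json`.

- Enter the drive letters corresponding to the target disks. Data is taken from the source path and copied across multiple disks.

- Provide a path for the log files. By default, log files are sent to the directory where the `.exe` file is located.

- To validate the file format, go to JSONlint.

Save the file as `ConfigFile.json`.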
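As a local alternative to an online validator, the edited file can be checked for JSON syntax errors with Python's standard `json` module. This sketch only confirms that the file parses as JSON; it doesn't know the Split Copy tool's expected keys, and the `check_json` helper name is hypothetical.

```python
import json


def check_json(text: str) -> tuple[bool, str]:
    """Return (True, message) if text parses as JSON, else (False, error location)."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return False, f"line {err.lineno}, column {err.colno}: {err.msg}"
    return True, "valid JSON"


if __name__ == "__main__":
    # Assumes ConfigFile.json is in the current directory.
    with open("ConfigFile.json", encoding="utf-8") as handle:
        ok, message = check_json(handle.read())
    print(message)
```

A common cause of failure in hand-edited Windows paths is an unescaped backslash, which the reported line and column help locate.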

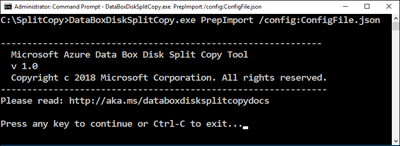

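Open a Command Prompt window with elevated privileges and run `DataBoxDiskSplitCopy.exe` using the following command.

    DataBoxDiskSplitCopy.exe PrepImport /config:ConfigFile.json

When prompted, press any key to continue running the tool.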

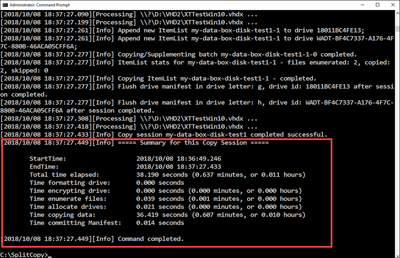

After the dataset is split and copied, the Split Copy tool presents a summary of the copy session, as shown in the following sample output.

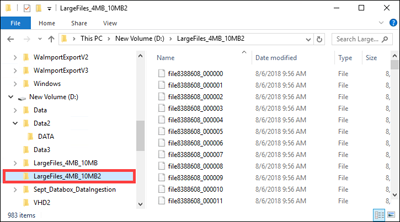

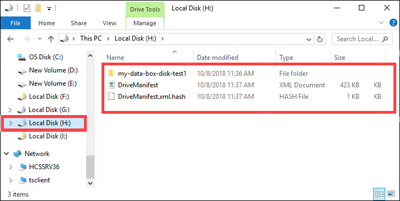

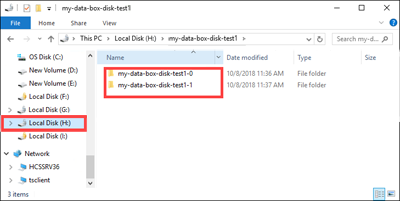

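Verify that the data is split properly across the target disks.

Examine the contents of the `H:` drive and make sure that two subfolders were created that correspond to the block blob and page blob format data.

If the copy session fails, use the following command to recover and resume:

    DataBoxDiskSplitCopy.exe PrepImport /config:ConfigFile.json /ResumeSession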

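If you encounter errors while using the Split Copy tool, follow the steps in the Troubleshoot Split Copy tool errors article.

Important

The Data Box Split Copy tool also validates your data. If you use the Data Box Split Copy tool to copy data, you can skip the validation step. The Split Copy tool isn't supported with managed disks.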

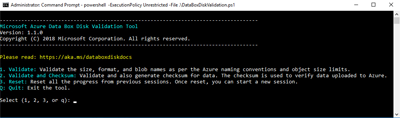

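Verify data

If you didn't use the Data Box Split Copy tool to copy data, you need to validate your data. Perform the following steps on each of your Data Box Disks to verify the data. If you encounter errors during validation, follow the steps in the Troubleshoot validation errors article.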

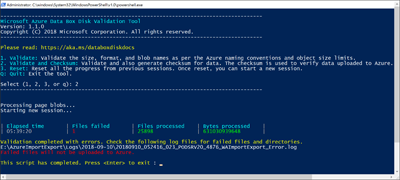

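Run `DataBoxDiskValidation.cmd` for checksum validation in the DataBoxDiskImport folder of your drive. This tool is available only for the Windows environment. Linux users need to verify that the source data copied to the disk meets the Azure Data Box prerequisites.

When prompted, choose the appropriate validation option. We recommend that you always choose option 2 to validate the files and generate checksums. After the script completes, exit the command window. The time required for validation to complete depends on the size of your data. The tool notifies you of any errors encountered during validation and checksum generation, and provides a link to the error logs.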

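Tip

- Reset the tool between two runs.

- The checksum process might take more time if you have a large data set containing many files that take up relatively little storage capacity. If you validate files and skip checksum creation, independently verify the integrity of the data on the Data Box Disk before deleting any copies. Ideally, this verification includes generating checksums.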
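For the independent integrity check mentioned in the tip, one option is to compare checksums of the source files against their copies on the disk. The following is a minimal sketch under stated assumptions: it uses SHA-256, which is a choice made here and not necessarily the algorithm the validation tool uses, the function names and example paths are hypothetical, and it doesn't replace `DataBoxDiskValidation.cmd`.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_trees(source_root, copy_root) -> list[str]:
    """Return relative paths that are missing from the copy or differ in content."""
    source_root = Path(source_root)
    copy_root = Path(copy_root)
    mismatches = []
    for src in sorted(source_root.rglob("*")):
        if src.is_file():
            rel = src.relative_to(source_root)
            dst = copy_root / rel
            if not dst.is_file() or sha256_of(src) != sha256_of(dst):
                mismatches.append(rel.as_posix())
    return mismatches


if __name__ == "__main__":
    # Hypothetical source folder and its copy on the disk.
    print(compare_trees(r"C:\Repository\guides", r"E:\BlockBlob\Hot\guides"))
```

An empty list means every source file has a byte-identical copy; the check is one-directional, so extra files that exist only on the disk aren't reported.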

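Next steps

In this tutorial, you learned how to complete the following tasks with Azure Data Box Disk:

- Copy data to Data Box Disk

- Verify data integrity

Advance to the next tutorial to learn how to return the Data Box Disk and verify the data upload to Azure.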

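Copy data to disks

Take the following steps to connect the Data Box Disk to your computer and copy data from your computer.

View the contents of the unlocked drive. The list of precreated folders and subfolders in the drive varies according to the options you selected when ordering the Data Box Disk.

Copy the data to the folders that correspond to the appropriate data format. For instance, copy unstructured data to the BlockBlob folder, VHD or VHDX data to the PageBlob folder, and files to the AzureFile folder. If the data format doesn't match the corresponding folder (storage type), the data upload to Azure fails in a later step.

- Make sure that all containers, blobs, and files conform to Azure naming conventions and Azure object size limits. If these rules or limits aren't followed, the data upload to Azure fails.

- If your order has managed disks as one of the storage destinations, see the naming conventions for managed disks.

- A container is created in the Azure storage account for each subfolder under the BlockBlob and PageBlob folders. All files placed directly in the BlockBlob and PageBlob folders are copied into the default $root container in the Azure Storage account. Any files in the $root container are always uploaded as block blobs.

- Create a subfolder within the AzureFile folder. This subfolder maps to a file share in the cloud. Copy files to the subfolder. Files copied directly to the AzureFile folder fail and are uploaded as block blobs instead.

- If files and folders exist in the root directory, move them to a different folder before you begin copying data.

Use drag and drop with File Explorer, or any SMB-compatible file copy tool such as Robocopy, to copy your data. You can start multiple copy jobs with the following command:

    Robocopy <source> <destination> * /MT:64 /E /R:1 /W:1 /NFL /NDL /FFT /Log:c:\RobocopyLog.txt

Open the target folder to view and verify the copied files. If you encounter any errors during the copy process, download the log files for troubleshooting. The log files are located where specified in the robocopy command.

If you're using multiple disks and need to split a large dataset and copy it across all the disks, use the optional procedure to split and copy.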

Verify data

Take the following steps to verify your data: