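Important

On September 30, 2025, Basic Load Balancer will be retired. If you currently use Basic Load Balancer, make sure to upgrade to Standard Load Balancer before the retirement date. For upgrade guidance, see Upgrade from Basic Load Balancer - Guidance.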

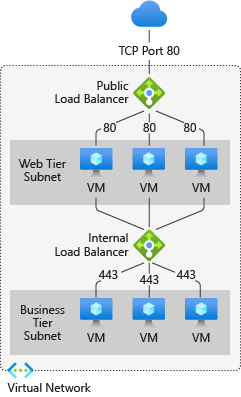

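Azure Load Balancer is a cloud service that distributes incoming network traffic across backend virtual machines (VMs) or virtual machine scale sets (VMSS). This article describes the key features, architecture, and scenarios of Azure Load Balancer to help you decide whether it meets your organization's load-balancing needs for scalable, highly available workloads.

Load balancing refers to efficiently distributing incoming network traffic across a group of backend VMs or VMSS.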

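Load balancer overview

Azure Load Balancer operates at layer 4 of the Open Systems Interconnection (OSI) model. It is the single point of contact for clients. The service distributes inbound flows that arrive at the load balancer's front end to backend pool instances. These flows are distributed according to the configured load-balancing rules and health probes. The backend pool instances can be Azure virtual machines (VMs) or virtual machine scale sets.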
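The distribution described above can be pictured with a small sketch. It assumes a hash over the flow's five-tuple (source IP, source port, destination IP, destination port, protocol) and a simple map of probe results. The names `Flow` and `pick_backend` and the use of SHA-256 are hypothetical choices for illustration, not the service's actual implementation.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A layer-4 flow identified by its five-tuple (illustrative type)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # "TCP" or "UDP"


def pick_backend(flow, backends, healthy):
    """Return the backend chosen for a flow, or None if no instance is healthy.

    `healthy` maps a backend name to its latest probe result; backends that
    are missing from the map are treated as unhealthy.
    """
    candidates = sorted(b for b in backends if healthy.get(b, False))
    if not candidates:
        return None
    # Assumption: hash the five-tuple so that packets of the same flow
    # keep landing on the same backend while the healthy set is unchanged.
    key = f"{flow.src_ip}:{flow.src_port}-{flow.dst_ip}:{flow.dst_port}-{flow.protocol}"
    digest = hashlib.sha256(key.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(candidates)
    return candidates[index]
```

Because the choice depends only on the five-tuple and the current healthy set, repeated packets of one flow map to the same instance, and an instance that fails its probe simply drops out of the candidate list.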

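A public load balancer can provide both inbound and outbound connectivity for VMs inside a virtual network. For inbound scenarios, Azure Load Balancer can load balance internet traffic to your VMs. For outbound scenarios, the service can translate a VM's private IP address to a public IP address for any outbound connection that originates from the VM.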
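The outbound translation can be sketched as a source NAT table that maps each private endpoint to a port on the shared public IP address. This is a minimal illustration; the class name `SnatTable`, the port range, and the sequential allocation strategy are all assumptions, not how the service allocates ports.

```python
class SnatTable:
    """Toy source-NAT table: private (ip, port) -> (public ip, public port)."""

    def __init__(self, public_ip, first_port=1024, last_port=65535):
        self.public_ip = public_ip
        # Assumption: ports are handed out sequentially from a fixed range.
        self._ports = iter(range(first_port, last_port + 1))
        self._map = {}

    def translate(self, private_ip, private_port):
        """Return the public endpoint for an outbound connection."""
        key = (private_ip, private_port)
        if key not in self._map:
            try:
                port = next(self._ports)
            except StopIteration:
                raise RuntimeError("SNAT ports exhausted") from None
            self._map[key] = (self.public_ip, port)
        return self._map[key]
```

The sketch also shows why outbound ports are a finite resource: once every port in the range is mapped, new connections cannot be translated.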

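Alternatively, an internal (or private) load balancer load balances traffic inside a virtual network. With an internal load balancer, you can provide inbound connectivity to your VMs in private network connectivity scenarios, such as accessing the load balancer front end from an on-premises network in a hybrid scenario.

For more information about the individual components of the service, see Azure Load Balancer components.

Azure Load Balancer has three stock-keeping units (SKUs): Basic, Standard, and Gateway. Each SKU targets specific scenarios and differs in scale, features, and pricing. For more information, see Azure Load Balancer SKUs.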

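Why use Azure Load Balancer

With Azure Load Balancer, you can scale your applications and create highly available services. The service supports both inbound and outbound scenarios, provides low latency and high throughput, and scales up to millions of flows for all TCP and UDP applications.

Core capabilities

Azure Load Balancer provides: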

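- High availability: Distribute resources within and across availability zones

- Scalability: Handle millions of flows for TCP and UDP applications

- Low latency: Use pass-through load balancing for ultralow latency

- Flexibility: Support multiple ports, multiple IP addresses, or both

- Health monitoring: Use health probes to ensure that traffic is sent only to healthy instances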
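To illustrate the health monitoring item, the following sketch tracks probe results for one backend instance and marks it unhealthy after a number of consecutive failures. The class name `ProbeState` and the default threshold of 2 are hypothetical values for illustration; the service's real probe settings are configured on the load balancer.

```python
class ProbeState:
    """Tracks consecutive probe failures for a single backend instance."""

    def __init__(self, unhealthy_threshold=2):
        # Assumption: an instance is taken out of rotation after this many
        # consecutive failed probes and restored by one successful probe.
        self.unhealthy_threshold = unhealthy_threshold
        self.failures = 0
        self.healthy = True

    def record(self, success):
        """Record one probe result and return the resulting health state."""
        if success:
            self.failures = 0
            self.healthy = True
        else:
            self.failures += 1
            if self.failures >= self.unhealthy_threshold:
                self.healthy = False
        return self.healthy
```

A single failed probe does not remove the instance in this sketch, which avoids flapping on a transient error.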

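Traffic distribution scenarios

- Load balance internal and external traffic to Azure virtual machines

- Configure outbound connectivity for Azure virtual machines

- Use high availability ports to load balance TCP and UDP flows on all ports simultaneously

- Enable port forwarding to access virtual machines by public IP address and port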
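The port forwarding scenario can be sketched as a lookup from a front-end IP address and port to one specific VM endpoint. The `NatRule` type, the `forward` function, and the addresses in the usage note are hypothetical and only illustrate the mapping.

```python
from typing import NamedTuple


class NatRule(NamedTuple):
    """Maps one front-end endpoint to one backend VM endpoint (illustrative)."""
    frontend_ip: str
    frontend_port: int
    backend_ip: str
    backend_port: int


def forward(rules, dst_ip, dst_port):
    """Return the backend (ip, port) for a packet, or None if no rule matches."""
    for rule in rules:
        if (rule.frontend_ip, rule.frontend_port) == (dst_ip, dst_port):
            return (rule.backend_ip, rule.backend_port)
    return None
```

For example, two rules on the same public IP address could forward port 50001 to port 22 on one VM and port 50002 to port 22 on another, so each VM is reachable individually.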

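Advanced features

- IPv6 support: Enable load balancing of IPv6 traffic

- Gateway Load Balancer integration: Use Standard Load Balancer together with Gateway Load Balancer

- Global load balancing integration: Distribute traffic across multiple Azure regions for global applications

- Admin state: Override health probe behavior for maintenance and operational management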

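Monitoring and insights

- Comprehensive metrics: Use multidimensional metrics through Azure Monitor

- Prebuilt dashboards: Access Azure Load Balancer insights through useful visualizations

- Diagnostics: Review Standard Load Balancer diagnostics for performance insights

- Health event logs: Monitor load balancer health events and status changes for proactive management

- Load balancer health status: Gain deeper insight into the health of your load balancer through health status monitoring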

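Security features

Azure Load Balancer implements security through multiple layers:

Zero Trust security model

- Standard Load Balancer is built on the Zero Trust network security model

- It is part of your virtual network, which is private and isolated by default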

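Network access controls

- Standard Load Balancer and public IP addresses are closed to inbound connections by default

- Network security groups (NSGs) must explicitly permit the traffic that you want to allow

- If no NSG exists on the subnet or NIC, traffic is blocked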
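The closed-by-default behavior in the list above can be sketched as a rule evaluation in which traffic is allowed only when an explicit rule permits it. This is a simplified model: the `Rule` type and `is_allowed` function are hypothetical, and real NSG rules match on more fields (such as source and destination address ranges) than shown here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """Simplified inbound security rule (illustrative, not the NSG schema)."""
    priority: int         # lower number is evaluated first
    protocol: str         # "TCP", "UDP", or "*" for any protocol
    port: Optional[int]   # None matches any destination port
    allow: bool


def is_allowed(nsg, protocol, port):
    """Return True only if a matching rule explicitly allows the traffic.

    `nsg` is a list of rules, or None when no NSG is attached. In this
    sketch, a missing NSG and a missing match both result in a deny.
    """
    if nsg is None:
        return False
    for rule in sorted(nsg, key=lambda r: r.priority):
        if rule.protocol in ("*", protocol) and rule.port in (None, port):
            return rule.allow
    return False
```

With an allow rule for TCP port 443 at priority 100 and a deny-all rule at priority 200, only HTTPS traffic is let through; with no NSG at all, nothing is.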

Data protection

- Azure Load Balancer doesn't store customer data

- All traffic processing happens in real time, with no data persistence

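Important

Basic Load Balancer is open to the internet by default and will be retired on September 30, 2025. Migrate to Standard Load Balancer for improved security.

To learn about NSGs and how to apply them to your scenario, see Network security groups.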

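Pricing and SLA

For Standard Load Balancer pricing information, see Load Balancer pricing. For service-level agreements (SLAs), see the Microsoft licensing information for online services.

Basic Load Balancer is offered at no charge and comes with no SLA. It is scheduled for retirement on September 30, 2025.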

What's new

Subscribe to the RSS feed and view the latest Azure Load Balancer updates on the Azure Updates page.