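Learn how to use the Azure SDK for .NET to create an AI enrichment pipeline for content extraction and transformation during indexing.

Skillsets add AI processing to raw content, making it more uniform and searchable. Once you know how skillsets work, you can support a broad range of transformations, from image analysis to natural language processing to customized processing that you provide externally.

In this tutorial, you:

- Define objects in an enrichment pipeline.

- Build a skillset. Invoke OCR, language detection, entity recognition, and key phrase extraction.

- Execute the pipeline. Create and load a search index.

- Check the results using full-text search.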

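Overview

This tutorial uses C# and the Azure.Search.Documents client library to create a data source, an index, an indexer, and a skillset.

The indexer drives each step in the pipeline. It starts with content extraction of the sample data (unstructured text and images) in a blob container on Azure Storage.

After content is extracted, the skillset runs built-in skills from Azure to find and extract information. These skills include optical character recognition (OCR) on images, language detection on text, key phrase extraction, and entity recognition (organizations). New information created by the skillset is sent to fields in an index. Once the index is populated, you can use the fields in queries, facets, and filters.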

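Prerequisites

An Azure account with an active subscription. Create a trial subscription.

Note

You can use a free search service for this tutorial. The free tier limits you to three indexes, three indexers, and three data sources. This tutorial creates one of each. Before you start, make sure your service has room for the new resources.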

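Download files

Download a zip file of the sample data repository and extract the contents. Learn how.

Download sample data files (mixed media)

Upload sample data to Azure Storage

In Azure Storage, create a new container and name it mixed-content-type.

Upload the sample data files.

Get a storage connection string so that you can formulate a connection in Azure AI Search.

In your storage account, on the left, select Access keys.

Copy the connection string for either key one or key two. The connection string is similar to the following example: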

DefaultEndpointsProtocol=https;AccountName=<your account name>;AccountKey=<your account key>;EndpointSuffix=core.chinacloudapi.cn
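The connection string is a list of `Key=Value` pairs separated by semicolons. The account key is Base64 and can end with `=` padding, so splitting on every `=` corrupts it. The following minimal C# sketch lets you sanity-check a copied string before you paste it into your configuration. `StorageConnectionString.Parse` is a hypothetical helper and is not part of any Azure SDK:

```csharp
using System;
using System.Collections.Generic;

public static class StorageConnectionString
{
    // Hypothetical helper: splits "Key=Value;Key=Value" pairs into a dictionary.
    // Each pair is split on the FIRST '=' only, because AccountKey is Base64
    // and may itself end with '=' padding characters.
    public static Dictionary<string, string> Parse(string connectionString)
    {
        var parts = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        foreach (string segment in connectionString.Split(';', StringSplitOptions.RemoveEmptyEntries))
        {
            int eq = segment.IndexOf('=');
            if (eq <= 0)
            {
                throw new FormatException($"Malformed segment: '{segment}'");
            }
            parts[segment.Substring(0, eq).Trim()] = segment.Substring(eq + 1).Trim();
        }
        return parts;
    }
}
```

For example, `StorageConnectionString.Parse(conn)["AccountName"]` returns the storage account name. Key lookups are case-insensitive.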

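Foundry Tools

Built-in AI enrichment is backed by Foundry Tools, including Azure Language and Azure Vision, for natural language and image processing. Small workloads like this tutorial can use the free allocation of 20 transactions per indexer. For larger workloads, attach an Azure Foundry resource to your skillset for standard pricing.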

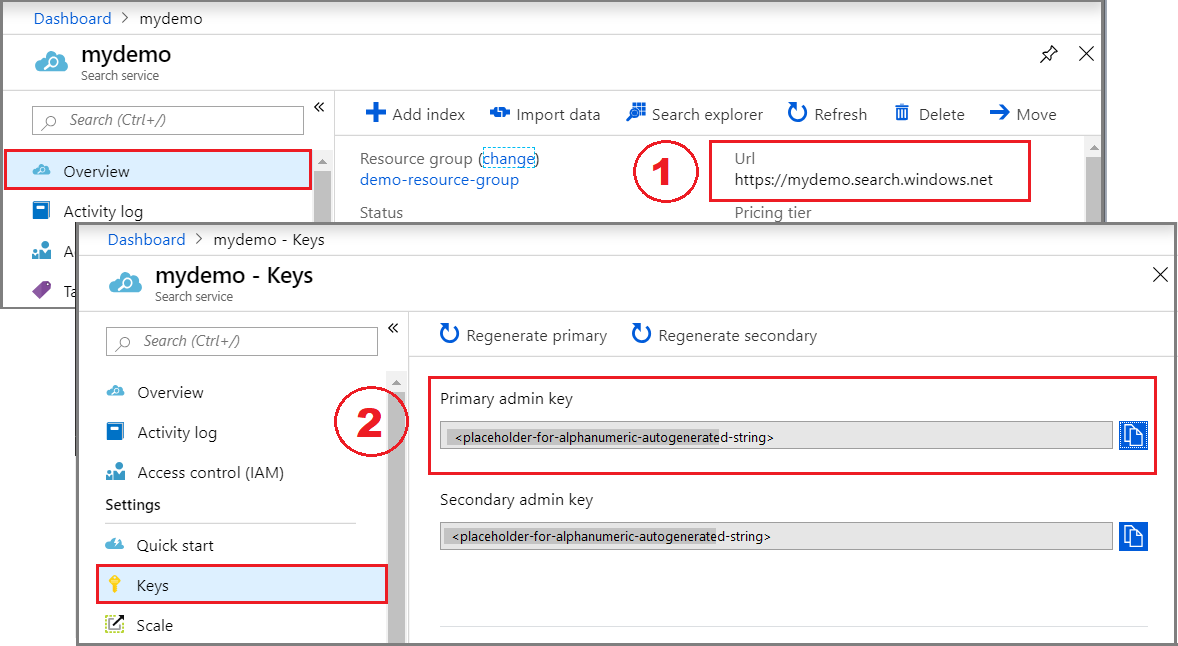

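Copy a search service URL and API key

For this tutorial, connections to Azure AI Search require an endpoint and an API key. You can get these values from the Azure portal.

Sign in to the Azure portal and select your search service.

In the left pane, select Overview and copy the endpoint. It should be in this format:

https://my-service.search.azure.cn

In the left pane, select Settings > Keys and copy an admin key for full rights on the service. There are two interchangeable admin keys, provided for business continuity in case you need to roll one over. You can use either key on requests to add, modify, or delete objects.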

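Set up your environment

Begin by opening Visual Studio and creating a new console app project.

Install Azure.Search.Documents

The Azure AI Search .NET SDK consists of a client library. You can use it to manage indexes, data sources, indexers, and skillsets, and to upload and manage documents and execute queries, without handling the details of HTTP and JSON. The client library is distributed as a NuGet package.

For this project, install version 11 or later of Azure.Search.Documents and the latest version of Microsoft.Extensions.Configuration.

In Visual Studio, select Tools > NuGet Package Manager > Manage NuGet Packages for Solution...

Browse for Azure.Search.Documents, select the latest version, and then select Install.

Repeat the previous steps to install Microsoft.Extensions.Configuration and Microsoft.Extensions.Configuration.Json.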

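Add service connection information

Right-click the project in Solution Explorer and select Add > New Item...

Name the file appsettings.json and select Add.

Include this file in the output directory:

- Right-click appsettings.json and select Properties.

- Change the value of Copy to Output Directory to Copy if newer.

Copy the following JSON into your new JSON file.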

```json
{
  "SearchServiceUri": "<YourSearchServiceUri>",
  "SearchServiceAdminApiKey": "<YourSearchServiceAdminApiKey>",
  "SearchServiceQueryApiKey": "<YourSearchServiceQueryApiKey>",
  "AzureAIServicesKey": "<YourMultiRegionAzureAIServicesKey>",
  "AzureBlobConnectionString": "<YourAzureBlobConnectionString>"
}
```

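Add your search service and Blob storage account information. You can get this information from the service provisioning steps in the previous section.

For SearchServiceUri, enter the full URL.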
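Main later reads these values with `configuration["..."]`. A missing key returns null, and `new Uri(null)` then throws, so it can help to fail early when a value is missing or still holds a `<...>` placeholder. The following is a minimal sketch using System.Text.Json. `SettingsCheck.FindMissing` is a hypothetical helper, and the sketch assumes the flat JSON shape shown above:

```csharp
using System.Collections.Generic;
using System.Text.Json;

public static class SettingsCheck
{
    // Hypothetical helper: returns the names of required settings that are
    // absent, not strings, empty, or still contain a "<...>" placeholder.
    public static List<string> FindMissing(string json, IEnumerable<string> requiredKeys)
    {
        var missing = new List<string>();
        using JsonDocument doc = JsonDocument.Parse(json);
        JsonElement root = doc.RootElement;
        foreach (string key in requiredKeys)
        {
            if (!root.TryGetProperty(key, out JsonElement value) ||
                value.ValueKind != JsonValueKind.String)
            {
                missing.Add(key);
                continue;
            }
            string text = value.GetString() ?? "";
            bool isPlaceholder = text.StartsWith("<") && text.EndsWith(">");
            if (text.Length == 0 || isPlaceholder)
            {
                missing.Add(key);
            }
        }
        return missing;
    }
}
```

One option is to call it at the top of Main with the contents of `File.ReadAllText("appsettings.json")`, and pass a message to ExitProgram if the returned list isn't empty.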

Add namespaces

In Program.cs, add the following namespaces.

```csharp
using Azure;
using Azure.Search.Documents.Indexes;
using Azure.Search.Documents.Indexes.Models;
using Microsoft.Extensions.Configuration;
using System;
using System.Collections.Generic;
using System.Linq;

namespace EnrichwithAI
{
    class Program
    {
        // Main and the helper methods from the following sections go here.
    }
}
```

Create clients

In Main, create instances of SearchIndexClient and SearchIndexerClient.

```csharp
public static void Main(string[] args)
{
    // Create service client
    IConfigurationBuilder builder = new ConfigurationBuilder().AddJsonFile("appsettings.json");
    IConfigurationRoot configuration = builder.Build();

    string searchServiceUri = configuration["SearchServiceUri"];
    string adminApiKey = configuration["SearchServiceAdminApiKey"];
    string azureAiServicesKey = configuration["AzureAIServicesKey"];

    SearchIndexClient indexClient = new SearchIndexClient(new Uri(searchServiceUri), new AzureKeyCredential(adminApiKey));
    SearchIndexerClient indexerClient = new SearchIndexerClient(new Uri(searchServiceUri), new AzureKeyCredential(adminApiKey));
}
```

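Note

The clients connect to your search service. To avoid opening too many connections, share a single instance across your application whenever possible. The methods are thread-safe to support this kind of sharing.

Add a function to exit the program on failure

This tutorial is meant to help you understand each step of the indexing pipeline. If a critical issue prevents the program from creating the data source, skillset, index, or indexer, the program prints an error message and exits so that you can understand and fix the issue.

Add an ExitProgram method to the Program class, alongside Main, to handle scenarios that require the program to exit.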

```csharp
private static void ExitProgram(string message)
{
    Console.WriteLine("{0}", message);
    Console.WriteLine("Press any key to exit the program...");
    Console.ReadKey();
    Environment.Exit(0);
}
```

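Create the pipeline

In Azure AI Search, AI processing occurs during indexing (or data ingestion). This part of the walkthrough creates four objects: a data source, an index definition, a skillset, and an indexer.

Step 1: Create a data source

SearchIndexerClient provides the methods you need to create, list, update, or delete Azure AI Search data sources. A data source itself is described by a SearchIndexerDataSourceConnection object.

Create a SearchIndexerDataSourceConnection instance and pass it to indexerClient.CreateOrUpdateDataSourceConnection(dataSource). The following code creates a data source of type AzureBlob.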

```csharp
private static SearchIndexerDataSourceConnection CreateOrUpdateDataSource(SearchIndexerClient indexerClient, IConfigurationRoot configuration)
{
    SearchIndexerDataSourceConnection dataSource = new SearchIndexerDataSourceConnection(
        name: "demodata",
        type: SearchIndexerDataSourceType.AzureBlob,
        connectionString: configuration["AzureBlobConnectionString"],
        container: new SearchIndexerDataContainer("mixed-content-type"))
    {
        Description = "Demo files to demonstrate Azure AI Search capabilities."
    };

    // The data source does not need to be deleted if it was already created
    // since we are using the CreateOrUpdate method
    try
    {
        indexerClient.CreateOrUpdateDataSourceConnection(dataSource);
    }
    catch (Exception ex)
    {
        Console.WriteLine("Failed to create or update the data source\n Exception message: {0}\n", ex.Message);
        ExitProgram("Cannot continue without a data source");
    }

    return dataSource;
}
```

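For a successful request, the SDK method returns the data source that was created. If there's a problem with the request, such as an invalid parameter, the method throws an exception.

Now add a line to Main to call the CreateOrUpdateDataSource function you just added.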

```csharp
// Create or Update the data source
Console.WriteLine("Creating or updating the data source...");
SearchIndexerDataSourceConnection dataSource = CreateOrUpdateDataSource(indexerClient, configuration);
```

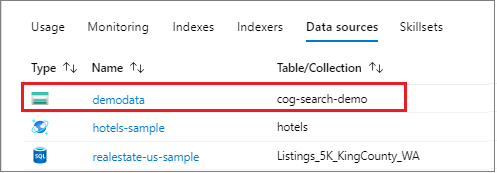

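Build and run the solution. Because this is your first request, check the Azure portal to confirm that the data source was created in Azure AI Search. On the search service overview page, verify that the Data Sources list has a new item. You might need to wait a few minutes for the Azure portal page to refresh.

Step 2: Create a skillset

In this section, you define a set of enrichment steps to apply to your data. Each enrichment step is called a skill, and the set of enrichment steps is called a skillset. This tutorial uses the following built-in skills for the skillset:

- Optical Character Recognition to recognize printed and handwritten text in image files.

- Text Merger to consolidate text from a collection of fields into a single "merged content" field.

- Language Detection to identify the content's language.

- Entity Recognition to extract the names of organizations from content in the blob container.

- Text Split to break large content into smaller chunks before calling the key phrase extraction skill and the entity recognition skill. Key phrase extraction and entity recognition accept inputs of 50,000 characters or less. A few of the sample files need splitting up to fit within this limit.

- Key Phrase Extraction to pull out the top key phrases.

During initial processing, Azure AI Search cracks each document to extract content from different file formats. Text that originates in the source file is placed into a generated content field, one for each document. As such, set the input to "/document/content" to use this text. Image content is placed into a generated normalized_images field, specified in a skillset as /document/normalized_images/*.

Outputs can be mapped to an index, used as input to a downstream skill, or both, as is the case with the language code. In the index, a language code is useful for filtering. As an input, the language code tells text analysis skills which linguistic rules to apply for word breaking.

For more information about skillset fundamentals, see How to define a skillset.

OCR skill

The OcrSkill extracts text from images. This skill assumes that a normalized_images field exists. To generate this field, later in the tutorial we set the "imageAction" configuration in the indexer definition to "generateNormalizedImages".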

```csharp
private static OcrSkill CreateOcrSkill()
{
    List<InputFieldMappingEntry> inputMappings = new List<InputFieldMappingEntry>();
    inputMappings.Add(new InputFieldMappingEntry("image")
    {
        Source = "/document/normalized_images/*"
    });

    List<OutputFieldMappingEntry> outputMappings = new List<OutputFieldMappingEntry>();
    outputMappings.Add(new OutputFieldMappingEntry("text")
    {
        TargetName = "text"
    });

    OcrSkill ocrSkill = new OcrSkill(inputMappings, outputMappings)
    {
        Description = "Extract text (plain and structured) from image",
        Context = "/document/normalized_images/*",
        DefaultLanguageCode = OcrSkillLanguage.En,
        ShouldDetectOrientation = true
    };

    return ocrSkill;
}
```

Merge skill

In this section, you create a MergeSkill that merges the document content field with the text produced by the OCR skill.

```csharp
private static MergeSkill CreateMergeSkill()
{
    List<InputFieldMappingEntry> inputMappings = new List<InputFieldMappingEntry>();
    inputMappings.Add(new InputFieldMappingEntry("text")
    {
        Source = "/document/content"
    });
    inputMappings.Add(new InputFieldMappingEntry("itemsToInsert")
    {
        Source = "/document/normalized_images/*/text"
    });
    inputMappings.Add(new InputFieldMappingEntry("offsets")
    {
        Source = "/document/normalized_images/*/contentOffset"
    });

    List<OutputFieldMappingEntry> outputMappings = new List<OutputFieldMappingEntry>();
    outputMappings.Add(new OutputFieldMappingEntry("mergedText")
    {
        TargetName = "merged_text"
    });

    MergeSkill mergeSkill = new MergeSkill(inputMappings, outputMappings)
    {
        Description = "Create merged_text which includes all the textual representation of each image inserted at the right location in the content field.",
        Context = "/document",
        InsertPreTag = " ",
        InsertPostTag = " "
    };

    return mergeSkill;
}
```
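The following C# sketch is a rough approximation of the merge step. It shows the roles of the `text`, `itemsToInsert`, and `offsets` inputs. It assumes that each offset is a character position in the original content and that offsets arrive in ascending order. `MergeSketch.Merge` is a hypothetical illustration, not the service's implementation:

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class MergeSketch
{
    // Hypothetical approximation of MergeSkill: inserts each item into `text`
    // at its matching character offset, wrapped in preTag/postTag.
    public static string Merge(string text, IReadOnlyList<string> itemsToInsert,
        IReadOnlyList<int> offsets, string preTag = " ", string postTag = " ")
    {
        if (itemsToInsert.Count != offsets.Count)
        {
            throw new ArgumentException("itemsToInsert and offsets must have the same length.");
        }
        var result = new StringBuilder();
        int cursor = 0;
        for (int i = 0; i < offsets.Count; i++)
        {
            // Copy the original content up to the insertion point (offsets assumed ascending).
            int offset = Math.Clamp(offsets[i], cursor, text.Length);
            result.Append(text, cursor, offset - cursor);
            result.Append(preTag).Append(itemsToInsert[i]).Append(postTag);
            cursor = offset;
        }
        // Copy whatever content follows the last insertion point.
        result.Append(text, cursor, text.Length - cursor);
        return result.ToString();
    }
}
```

With the single-space tags used in CreateMergeSkill, OCR text from an image lands between the surrounding words rather than being glued to them.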

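Language detection skill

The LanguageDetectionSkill detects the language of the input text and reports a single language code for every document submitted on the request. The output of the language detection skill is used as part of the input to the text split skill.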

```csharp
private static LanguageDetectionSkill CreateLanguageDetectionSkill()
{
    List<InputFieldMappingEntry> inputMappings = new List<InputFieldMappingEntry>();
    inputMappings.Add(new InputFieldMappingEntry("text")
    {
        Source = "/document/merged_text"
    });

    List<OutputFieldMappingEntry> outputMappings = new List<OutputFieldMappingEntry>();
    outputMappings.Add(new OutputFieldMappingEntry("languageCode")
    {
        TargetName = "languageCode"
    });

    LanguageDetectionSkill languageDetectionSkill = new LanguageDetectionSkill(inputMappings, outputMappings)
    {
        Description = "Detect the language used in the document",
        Context = "/document"
    };

    return languageDetectionSkill;
}
```

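Text split skill

The following SplitSkill splits text by pages and limits the page length to 4,000 characters, as measured by String.Length. The algorithm tries to split the text into chunks that are at most maximumPageLength in size. In this case, the algorithm does its best to break the text on a sentence boundary, so the size of each chunk might be slightly less than maximumPageLength.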

```csharp
private static SplitSkill CreateSplitSkill()
{
    List<InputFieldMappingEntry> inputMappings = new List<InputFieldMappingEntry>();
    inputMappings.Add(new InputFieldMappingEntry("text")
    {
        Source = "/document/merged_text"
    });
    inputMappings.Add(new InputFieldMappingEntry("languageCode")
    {
        Source = "/document/languageCode"
    });

    List<OutputFieldMappingEntry> outputMappings = new List<OutputFieldMappingEntry>();
    outputMappings.Add(new OutputFieldMappingEntry("textItems")
    {
        TargetName = "pages",
    });

    SplitSkill splitSkill = new SplitSkill(inputMappings, outputMappings)
    {
        Description = "Split content into pages",
        Context = "/document",
        TextSplitMode = TextSplitMode.Pages,
        MaximumPageLength = 4000,
        DefaultLanguageCode = SplitSkillLanguage.En
    };

    return splitSkill;
}
```
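The following C# sketch approximates the page splitting described above. Each page holds at most `maxLength` characters and, where possible, ends at the last sentence-ending punctuation inside that window. This is a simplification based on assumptions: the real SplitSkill also applies language-specific rules and other boundary types. `PageSplitter.Split` is a hypothetical name:

```csharp
using System;
using System.Collections.Generic;

public static class PageSplitter
{
    // Hypothetical approximation of SplitSkill in "pages" mode:
    // every page is at most maxLength characters (String.Length), and a page
    // ends at the last '.', '!' or '?' in its window when one exists.
    public static List<string> Split(string text, int maxLength = 4000)
    {
        if (maxLength <= 0) throw new ArgumentOutOfRangeException(nameof(maxLength));
        var pages = new List<string>();
        int start = 0;
        while (start < text.Length)
        {
            if (text.Length - start <= maxLength)
            {
                pages.Add(text.Substring(start));
                break;
            }
            // Search backward through the window [start, start + maxLength - 1].
            int boundary = text.LastIndexOfAny(new[] { '.', '!', '?' }, start + maxLength - 1, maxLength);
            // Fall back to a hard cut when the window has no sentence terminator.
            int end = boundary >= start ? boundary + 1 : start + maxLength;
            pages.Add(text.Substring(start, end - start));
            start = end;
        }
        return pages;
    }
}
```

Under this model, a 10,000-character document with no sentence punctuation yields pages of 4,000, 4,000, and 2,000 characters. Each page is well under the 50,000-character input limit for key phrase extraction and entity recognition.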

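Entity recognition skill

This EntityRecognitionSkill instance is set to recognize the category type organization.

The EntityRecognitionSkill can also recognize the category types person and location.

Notice that the "context" field is set to "/document/pages/*" with an asterisk. This means the enrichment step is called once for each page under "/document/pages".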

```csharp
private static EntityRecognitionSkill CreateEntityRecognitionSkill()
{
    List<InputFieldMappingEntry> inputMappings = new List<InputFieldMappingEntry>();
    inputMappings.Add(new InputFieldMappingEntry("text")
    {
        Source = "/document/pages/*"
    });

    List<OutputFieldMappingEntry> outputMappings = new List<OutputFieldMappingEntry>();
    outputMappings.Add(new OutputFieldMappingEntry("organizations")
    {
        TargetName = "organizations"
    });

    // Specify the V3 version of the EntityRecognitionSkill
    var skillVersion = EntityRecognitionSkill.SkillVersion.V3;
    var entityRecognitionSkill = new EntityRecognitionSkill(inputMappings, outputMappings, skillVersion)
    {
        Description = "Recognize organizations",
        Context = "/document/pages/*",
        DefaultLanguageCode = EntityRecognitionSkillLanguage.En
    };
    entityRecognitionSkill.Categories.Add(EntityCategory.Organization);

    return entityRecognitionSkill;
}
```

Key phrase extraction skill

Like the EntityRecognitionSkill instance you just created, the KeyPhraseExtractionSkill is called for each page of the document.

```csharp
private static KeyPhraseExtractionSkill CreateKeyPhraseExtractionSkill()
{
    List<InputFieldMappingEntry> inputMappings = new List<InputFieldMappingEntry>();
    inputMappings.Add(new InputFieldMappingEntry("text")
    {
        Source = "/document/pages/*"
    });
    inputMappings.Add(new InputFieldMappingEntry("languageCode")
    {
        Source = "/document/languageCode"
    });

    List<OutputFieldMappingEntry> outputMappings = new List<OutputFieldMappingEntry>();
    outputMappings.Add(new OutputFieldMappingEntry("keyPhrases")
    {
        TargetName = "keyPhrases"
    });

    KeyPhraseExtractionSkill keyPhraseExtractionSkill = new KeyPhraseExtractionSkill(inputMappings, outputMappings)
    {
        Description = "Extract the key phrases",
        Context = "/document/pages/*",
        DefaultLanguageCode = KeyPhraseExtractionSkillLanguage.En
    };

    return keyPhraseExtractionSkill;
}
```

Build and create the skillset

Build the SearchIndexerSkillset from the skills you created.

```csharp
private static SearchIndexerSkillset CreateOrUpdateDemoSkillSet(SearchIndexerClient indexerClient, IList<SearchIndexerSkill> skills, string azureAiServicesKey)
{
    SearchIndexerSkillset skillset = new SearchIndexerSkillset("demoskillset", skills)
    {
        Description = "Demo skillset",
        // Foundry Tools was formerly known as Cognitive Services.
        // The APIs still use the old name, so we need to create a CognitiveServicesAccountKey object.
        CognitiveServicesAccount = new CognitiveServicesAccountKey(azureAiServicesKey)
    };

    // Create the skillset in your search service.
    // The skillset does not need to be deleted if it was already created
    // since we are using the CreateOrUpdate method
    try
    {
        indexerClient.CreateOrUpdateSkillset(skillset);
    }
    catch (RequestFailedException ex)
    {
        Console.WriteLine("Failed to create the skillset\n Exception message: {0}\n", ex.Message);
        ExitProgram("Cannot continue without a skillset");
    }

    return skillset;
}
```

Add the following lines to Main.

```csharp
// Create the skills
Console.WriteLine("Creating the skills...");
OcrSkill ocrSkill = CreateOcrSkill();
MergeSkill mergeSkill = CreateMergeSkill();
EntityRecognitionSkill entityRecognitionSkill = CreateEntityRecognitionSkill();
LanguageDetectionSkill languageDetectionSkill = CreateLanguageDetectionSkill();
SplitSkill splitSkill = CreateSplitSkill();
KeyPhraseExtractionSkill keyPhraseExtractionSkill = CreateKeyPhraseExtractionSkill();

// Create the skillset
Console.WriteLine("Creating or updating the skillset...");
List<SearchIndexerSkill> skills = new List<SearchIndexerSkill>();
skills.Add(ocrSkill);
skills.Add(mergeSkill);
skills.Add(languageDetectionSkill);
skills.Add(splitSkill);
skills.Add(entityRecognitionSkill);
skills.Add(keyPhraseExtractionSkill);

SearchIndexerSkillset skillset = CreateOrUpdateDemoSkillSet(indexerClient, skills, azureAiServicesKey);
```

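Step 3: Create an index

In this section, you define the index schema. You specify which fields to include in the searchable index and the search attributes for each field. Fields have a type and can take attributes that determine how the field is used (searchable, sortable, and so on). Field names in an index don't have to match the field names in the source. In a later step, you add field mappings in the indexer to connect source and destination fields. For this step, define the index using field-naming conventions that suit your search application.

This exercise uses the following fields and field types: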

| Field name | Field type |
|---|---|
| id | Edm.String |
| content | Edm.String |
| languageCode | Edm.String |
| keyPhrases | Collection(Edm.String) |
| organizations | Collection(Edm.String) |

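Create the DemoIndex class

The fields for this index are defined in a model class. Each property of the model class has attributes that determine the search-related behavior of the corresponding index field.

Add the model class to a new C# file. Right-click your project and select Add > New Item..., select Class, name the file DemoIndex.cs, and then select Add.

Make sure to indicate that you want to use types from the Azure.Search.Documents.Indexes and System.Text.Json.Serialization namespaces.

Add the following model class definition to DemoIndex.cs, in the same namespace where you create the index.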

```csharp
using Azure.Search.Documents.Indexes;
using System.Text.Json.Serialization;

namespace EnrichwithAI
{
    // The SerializePropertyNamesAsCamelCase is currently unsupported as of this writing.
    // Replace it with JsonPropertyName
    public class DemoIndex
    {
        [SearchableField(IsSortable = true, IsKey = true)]
        [JsonPropertyName("id")]
        public string Id { get; set; }

        [SearchableField]
        [JsonPropertyName("content")]
        public string Content { get; set; }

        [SearchableField]
        [JsonPropertyName("languageCode")]
        public string LanguageCode { get; set; }

        [SearchableField]
        [JsonPropertyName("keyPhrases")]
        public string[] KeyPhrases { get; set; }

        [SearchableField]
        [JsonPropertyName("organizations")]
        public string[] Organizations { get; set; }
    }
}
```

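With the model class defined, go back to Program.cs and create the index definition, which is now straightforward. The index is named demoindex. If an index with that name already exists, it's deleted.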

```csharp
private static SearchIndex CreateDemoIndex(SearchIndexClient indexClient)
{
    FieldBuilder builder = new FieldBuilder();
    var index = new SearchIndex("demoindex")
    {
        Fields = builder.Build(typeof(DemoIndex))
    };

    try
    {
        indexClient.GetIndex(index.Name);
        indexClient.DeleteIndex(index.Name);
    }
    catch (RequestFailedException ex) when (ex.Status == 404)
    {
        //if the specified index not exist, 404 will be thrown.
    }

    try
    {
        indexClient.CreateIndex(index);
    }
    catch (RequestFailedException ex)
    {
        Console.WriteLine("Failed to create the index\n Exception message: {0}\n", ex.Message);
        ExitProgram("Cannot continue without an index");
    }

    return index;
}
```

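During testing, you might accidentally try to create the index more than once. For that reason, the code checks whether the index already exists before it tries to create it.

Add the following lines to Main.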

```csharp
// Create the index
Console.WriteLine("Creating the index...");
SearchIndex demoIndex = CreateDemoIndex(indexClient);
```

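Add the following using statement to resolve the ambiguous reference.

```csharp
using Index = Azure.Search.Documents.Indexes.Models;
```

To learn more about index concepts, see Create Index (REST API).

Step 4: Create and execute an indexer

So far, you've created a data source, a skillset, and an index. These three components become part of an indexer, which pulls them together into a single multi-phase operation. To tie them together in an indexer, you must define field mappings.

The fieldMappings are processed before the skillset. They map source fields in the data source to target fields in the index. If the field names and types are the same at both ends, no mapping is required.

The outputFieldMappings are processed after the skillset. They reference sourceFieldNames that don't exist until document cracking or enrichment creates them. The targetFieldName is a field in the index.

In addition to hooking up inputs to outputs, you can use field mappings to flatten data structures. For more information, see How to map enriched fields to a searchable index.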
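Here is a rough illustration of flattening. The output mapping `/document/pages/*/keyPhrases/*` used in this tutorial turns a per-page list of lists into the single `keyPhrases` collection in the index. The following C# sketch models only that change in shape over in-memory data, using a hypothetical `FlattenSketch.Flatten` helper. It doesn't call the service and doesn't model how the service handles duplicates across pages:

```csharp
using System.Collections.Generic;
using System.Linq;

public static class FlattenSketch
{
    // Hypothetical model of "/document/pages/*/keyPhrases/*": each page has
    // its own list of key phrases, and the mapping yields one flat collection.
    public static List<string> Flatten(IEnumerable<IEnumerable<string>> keyPhrasesPerPage)
    {
        return keyPhrasesPerPage.SelectMany(page => page).ToList();
    }
}
```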

```csharp
private static SearchIndexer CreateDemoIndexer(SearchIndexerClient indexerClient, SearchIndexerDataSourceConnection dataSource, SearchIndexerSkillset skillSet, SearchIndex index)
{
    IndexingParameters indexingParameters = new IndexingParameters()
    {
        MaxFailedItems = -1,
        MaxFailedItemsPerBatch = -1,
    };
    indexingParameters.Configuration.Add("dataToExtract", "contentAndMetadata");
    indexingParameters.Configuration.Add("imageAction", "generateNormalizedImages");

    SearchIndexer indexer = new SearchIndexer("demoindexer", dataSource.Name, index.Name)
    {
        Description = "Demo Indexer",
        SkillsetName = skillSet.Name,
        Parameters = indexingParameters
    };

    FieldMappingFunction mappingFunction = new FieldMappingFunction("base64Encode");
    mappingFunction.Parameters.Add("useHttpServerUtilityUrlTokenEncode", true);

    indexer.FieldMappings.Add(new FieldMapping("metadata_storage_path")
    {
        TargetFieldName = "id",
        MappingFunction = mappingFunction
    });
    indexer.FieldMappings.Add(new FieldMapping("content")
    {
        TargetFieldName = "content"
    });

    indexer.OutputFieldMappings.Add(new FieldMapping("/document/pages/*/organizations/*")
    {
        TargetFieldName = "organizations"
    });
    indexer.OutputFieldMappings.Add(new FieldMapping("/document/pages/*/keyPhrases/*")
    {
        TargetFieldName = "keyPhrases"
    });
    indexer.OutputFieldMappings.Add(new FieldMapping("/document/languageCode")
    {
        TargetFieldName = "languageCode"
    });

    try
    {
        indexerClient.GetIndexer(indexer.Name);
        indexerClient.DeleteIndexer(indexer.Name);
    }
    catch (RequestFailedException ex) when (ex.Status == 404)
    {
        //if the specified indexer not exist, 404 will be thrown.
    }

    try
    {
        indexerClient.CreateIndexer(indexer);
    }
    catch (RequestFailedException ex)
    {
        Console.WriteLine("Failed to create the indexer\n Exception message: {0}\n", ex.Message);
        ExitProgram("Cannot continue without creating an indexer");
    }

    return indexer;
}
```
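The `id` mapping uses `base64Encode` because raw blob paths contain characters such as `/` and `:` that aren't valid in a document key. With `useHttpServerUtilityUrlTokenEncode` set to true, the encoding follows the `HttpServerUtility.UrlTokenEncode` convention. To our understanding, that convention works as follows: apply standard Base64, replace `+` and `/` with `-` and `_`, remove the trailing `=` padding, and append the number of removed padding characters as a digit. The following hypothetical `UrlTokenSketch.Encode` helper lets you inspect keys locally. It assumes the path is encoded as UTF-8 bytes first, so check the field mapping function reference before relying on byte-for-byte equality:

```csharp
using System;
using System.Text;

public static class UrlTokenSketch
{
    // Hypothetical helper mirroring the HttpServerUtility.UrlTokenEncode convention
    // (assumes the source string is converted to UTF-8 bytes first).
    public static string Encode(string value)
    {
        if (value.Length == 0)
        {
            return "";
        }
        string base64 = Convert.ToBase64String(Encoding.UTF8.GetBytes(value));
        string trimmed = base64.TrimEnd('=');
        int padding = base64.Length - trimmed.Length; // 0, 1, or 2
        string urlSafe = trimmed.Replace('+', '-').Replace('/', '_');
        return urlSafe + padding.ToString();
    }
}
```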

Add the following lines to Main.

```csharp
// Create the indexer, map fields, and execute transformations
Console.WriteLine("Creating the indexer and executing the pipeline...");
SearchIndexer demoIndexer = CreateDemoIndexer(indexerClient, dataSource, skillset, demoIndex);
```

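Expect indexer processing to take some time to complete. Even though the data set is small, analytical skills are computation-intensive. Some skills, such as image analysis, take a long time to run.

Tip

Creating an indexer invokes the pipeline. Any problems with reaching the data, mapping inputs and outputs, or the order of operations appear at this stage.

Explore creating the indexer

The code sets "maxFailedItems" to -1, which instructs the indexing engine to ignore errors during data import. This is useful because the demo data source contains so few documents. For a larger data source, set the value to greater than 0.

Also notice that "dataToExtract" is set to "contentAndMetadata". This setting tells the indexer to automatically extract the content from different file formats, as well as the metadata related to each file.

When content is extracted, you can set imageAction to extract text from images found in the data source. Setting "imageAction" to "generateNormalizedImages" works together with the OCR skill and the text merge skill. It tells the indexer to extract text from the images (for example, the word "stop" from a traffic stop sign) and embed it as part of the content field. This behavior applies both to images embedded in documents (think of an image inside a PDF) and to images found in the data source (for instance, a JPG file).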
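The rule behind `maxFailedItems` fits in a small predicate. As we understand the indexer parameters, the value is the maximum number of items that can fail before the run is considered failed. The default is 0, and -1 means no limit. The following C# sketch encodes only that rule, using a hypothetical `IsRunSuccessful` helper. It doesn't model `maxFailedItemsPerBatch` or any other service behavior:

```csharp
public static class FailurePolicySketch
{
    // Hypothetical model of the maxFailedItems rule:
    // -1 => any number of failures is tolerated; otherwise the run fails
    // once the number of failed items exceeds the configured maximum.
    public static bool IsRunSuccessful(int failedItemCount, int maxFailedItems)
    {
        if (maxFailedItems == -1)
        {
            return true;
        }
        return failedItemCount <= maxFailedItems;
    }
}
```

Under this model, the default of 0 means a single failed document fails the run.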

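Monitor indexing

After the indexer is defined, it runs automatically when you submit the request. Depending on which skills you defined, indexing can take longer than you expect. To find out whether the indexer is still running, use the GetIndexerStatus method.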

```csharp
private static void CheckIndexerOverallStatus(SearchIndexerClient indexerClient, SearchIndexer indexer)
{
    try
    {
        var demoIndexerExecutionInfo = indexerClient.GetIndexerStatus(indexer.Name);

        switch (demoIndexerExecutionInfo.Value.Status)
        {
            case IndexerStatus.Error:
                ExitProgram("Indexer has error status. Check the Azure portal to further understand the error.");
                break;
            case IndexerStatus.Running:
                Console.WriteLine("Indexer is running");
                break;
            case IndexerStatus.Unknown:
                Console.WriteLine("Indexer status is unknown");
                break;
            default:
                Console.WriteLine("No indexer information");
                break;
        }
    }
    catch (RequestFailedException ex)
    {
        Console.WriteLine("Failed to get indexer overall status\n Exception message: {0}\n", ex.Message);
    }
}
```

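demoIndexerExecutionInfo represents the current status and execution history of the indexer.

Warnings are common with some combinations of source files and skills, and they don't always indicate a problem. In this tutorial, the warnings are benign (for example, no text inputs from the JPEG files).

Add the following lines to Main.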

```csharp
// Check indexer overall status
Console.WriteLine("Check the indexer overall status...");
CheckIndexerOverallStatus(indexerClient, demoIndexer);
```
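CheckIndexerOverallStatus reads the status only once. If you'd rather wait inside the console app, a generic polling loop is one option. The following sketch doesn't depend on the SDK. `Poller.WaitUntil` is a hypothetical helper that calls a delegate at a fixed interval until the delegate returns true or a timeout elapses. In this tutorial, the delegate could read `indexerClient.GetIndexerStatus(indexer.Name).Value.LastResult` and return true once it's non-null and its `Status` is no longer `IndexerExecutionStatus.InProgress`. Verify those member names against your version of Azure.Search.Documents:

```csharp
using System;
using System.Diagnostics;
using System.Threading;

public static class Poller
{
    // Hypothetical helper: calls isDone every `interval` until it returns true
    // (result: true) or waiting another interval would exceed `timeout` (result: false).
    public static bool WaitUntil(Func<bool> isDone, TimeSpan interval, TimeSpan timeout)
    {
        var stopwatch = Stopwatch.StartNew();
        while (true)
        {
            if (isDone())
            {
                return true;
            }
            if (stopwatch.Elapsed + interval > timeout)
            {
                return false;
            }
            Thread.Sleep(interval);
        }
    }
}
```

A polling interval of several seconds is plenty here, because enrichment for this data set takes minutes rather than milliseconds.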

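Search

In Azure AI Search tutorial console apps, we typically add a 2-second delay before running queries that return results. Enrichment takes several minutes to complete, though, so here we close the console app and use another approach.

The easiest option is Search Explorer in the Azure portal. You can first run an empty query that returns all documents, or run a more targeted search that returns new field content created by the pipeline.

In the Azure portal, on the search service page, expand Search management > Indexes.

Find demoindex in the list. It should have 14 documents. If the document count is zero, either the indexer is still running or the page hasn't been refreshed yet.

Select demoindex. Search Explorer is the first tab.

Content is searchable as soon as the first document is loaded. To verify that content exists, select Search to run an unspecified query. This query returns all currently indexed documents, giving you an idea of what the index contains.

To get more manageable results, switch to JSON view and set parameters to select fields:

```json
{ "search": "*", "count": true, "select": "id, languageCode, organizations" }
```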

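Reset and rerun

In the early experimental stages of development, the most practical approach to design iteration is to delete the objects from Azure AI Search and let your code rebuild them. Resource names are unique. Deleting an object lets you recreate it with the same name.

So that you can rerun it, the sample code for this tutorial deletes any existing index and indexer before recreating them, and it uses CreateOrUpdate for the data source and skillset. You can also use the Azure portal to delete indexes, indexers, data sources, and skillsets.

Takeaways

This tutorial demonstrated the basic steps for building an enriched indexing pipeline by creating its component parts: a data source, a skillset, an index, and an indexer.

It introduced built-in skills, skillset definitions, and the mechanics of chaining skills together through inputs and outputs. You also learned that outputFieldMappings in the indexer definition are required to route enriched values from the pipeline into a searchable index on an Azure AI Search service.

Finally, you learned how to test results and reset the system for further iterations. You learned that queries against the index return the output created by the enriched indexing pipeline. You also learned how to check indexer status, and which objects to delete before rerunning a pipeline.

Clean up resources

When you work in your own subscription, it's a good idea at the end of a project to remove the resources that you no longer need. Resources left running can cost you money. You can delete resources individually, or delete the resource group to delete the entire set of resources.

You can find and manage resources in the Azure portal by using the All resources or Resource groups link in the left navigation pane.

Next steps

Now that you're familiar with all of the objects in an AI enrichment pipeline, let's take a closer look at skillset definitions and individual skills.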

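Learn how to call the REST APIs to create an AI enrichment pipeline for content extraction and transformation during indexing.

Skillsets add AI processing to raw content, making it more uniform and searchable. Once you know how skillsets work, you can support a broad range of transformations, from image analysis to natural language processing to customized processing that you provide externally.

In this tutorial, you:

- Define objects in an enrichment pipeline.

- Build a skillset. Invoke OCR, language detection, entity recognition, and key phrase extraction.

- Execute the pipeline. Create and load a search index.

- Check the results using full-text search.

Overview

This tutorial uses a REST client and the Azure AI Search REST APIs to create a data source, an index, an indexer, and a skillset.

The indexer drives each step in the pipeline. It starts with content extraction of the sample data (unstructured text and images) in a blob container on Azure Storage.

After content is extracted, the skillset runs built-in skills from Azure to find and extract information. These skills include optical character recognition (OCR) on images, language detection on text, key phrase extraction, and entity recognition (organizations). New information created by the skillset is sent to fields in an index. Once the index is populated, you can use the fields in queries, facets, and filters.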

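Prerequisites

An Azure account with an active subscription. Create a trial subscription.

Note

You can use a free search service for this tutorial. The free tier limits you to three indexes, three indexers, and three data sources. This tutorial creates one of each. Before you start, make sure your service has room for the new resources.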

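Download files

Download a zip file of the sample data repository and extract the contents. Learn how.

Upload sample data to Azure Storage

In Azure Storage, create a new container and name it cog-search-demo.

Upload the sample data files.

Get a storage connection string so that you can formulate a connection in Azure AI Search.

In your storage account, on the left, select Access keys.

Copy the connection string for either key one or key two. The connection string is similar to the following example: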

DefaultEndpointsProtocol=https;AccountName=<your account name>;AccountKey=<your account key>;EndpointSuffix=core.chinacloudapi.cn

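Foundry Tools

Built-in AI enrichment is backed by Foundry Tools, including Azure Language and Azure Vision, for natural language and image processing. Small workloads like this tutorial can use the free allocation of 20 transactions per indexer. For larger workloads, attach an Azure Foundry resource to your skillset for standard pricing.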

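Copy a search service URL and API key

For this tutorial, connections to Azure AI Search require an endpoint and an API key. You can get these values from the Azure portal.

Sign in to the Azure portal and select your search service.

In the left pane, select Overview and copy the endpoint. It should be in this format:

https://my-service.search.azure.cn

In the left pane, select Settings > Keys and copy an admin key for full rights on the service. There are two interchangeable admin keys, provided for business continuity in case you need to roll one over. You can use either key on requests to add, modify, or delete objects.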

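Set up your REST file

Start Visual Studio Code and open the skillset-tutorial.rest file. If you need help with the REST client, see Quickstart: Full-text search using REST.

Provide values for the variables: the search service endpoint, the search service admin API key, an index name, the connection string to your Azure Storage account, and the blob container name.

Create the pipeline

AI enrichment is indexer-driven. This part of the walkthrough creates four objects: a data source, an index definition, a skillset, and an indexer.

Step 1: Create a data source

Call Create Data Source to set the connection string to the blob container that holds the sample data files.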

```http
### Create a data source
POST {{baseUrl}}/datasources?api-version=2025-09-01 HTTP/1.1
Content-Type: application/json
api-key: {{apiKey}}

{
    "name": "cog-search-demo-ds",
    "description": null,
    "type": "azureblob",
    "subtype": null,
    "credentials": {
        "connectionString": "{{storageConnectionString}}"
    },
    "container": {
        "name": "{{blobContainer}}",
        "query": null
    },
    "dataChangeDetectionPolicy": null,
    "dataDeletionDetectionPolicy": null
}
```

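Step 2: Create a skillset

Call Create Skillset to specify which enrichment steps are applied to your content. Skills run in parallel unless one depends on another's output.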

```http
### Create a skillset
POST {{baseUrl}}/skillsets?api-version=2025-09-01 HTTP/1.1
Content-Type: application/json
api-key: {{apiKey}}

{
    "name": "cog-search-demo-ss",
    "description": "Apply OCR, detect language, extract entities, and extract key-phrases.",
    "cognitiveServices": null,
    "skills":
    [
        {
            "@odata.type": "#Microsoft.Skills.Vision.OcrSkill",
            "context": "/document/normalized_images/*",
            "defaultLanguageCode": "en",
            "detectOrientation": true,
            "inputs": [
                {
                    "name": "image",
                    "source": "/document/normalized_images/*"
                }
            ],
            "outputs": [
                {
                    "name": "text"
                }
            ]
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.MergeSkill",
            "description": "Create merged_text, which includes all the textual representation of each image inserted at the right location in the content field. This is useful for PDF and other file formats that support embedded images.",
            "context": "/document",
            "insertPreTag": " ",
            "insertPostTag": " ",
            "inputs": [
                {
                    "name": "text",
                    "source": "/document/content"
                },
                {
                    "name": "itemsToInsert",
                    "source": "/document/normalized_images/*/text"
                },
                {
                    "name": "offsets",
                    "source": "/document/normalized_images/*/contentOffset"
                }
            ],
            "outputs": [
                {
                    "name": "mergedText",
                    "targetName": "merged_text"
                }
            ]
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.SplitSkill",
            "textSplitMode": "pages",
            "maximumPageLength": 4000,
            "defaultLanguageCode": "en",
            "context": "/document",
            "inputs": [
                {
                    "name": "text",
                    "source": "/document/merged_text"
                }
            ],
            "outputs": [
                {
                    "name": "textItems",
                    "targetName": "pages"
                }
            ]
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.LanguageDetectionSkill",
            "description": "If you have multilingual content, adding a language code is useful for filtering",
            "context": "/document",
            "inputs": [
                {
                    "name": "text",
                    "source": "/document/merged_text"
                }
            ],
            "outputs": [
                {
                    "name": "languageName",
                    "targetName": "language"
                }
            ]
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.KeyPhraseExtractionSkill",
            "context": "/document/pages/*",
            "inputs": [
                {
                    "name": "text",
                    "source": "/document/pages/*"
                }
            ],
            "outputs": [
                {
                    "name": "keyPhrases",
                    "targetName": "keyPhrases"
                }
            ]
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.V3.EntityRecognitionSkill",
            "categories": ["Organization"],
            "context": "/document",
            "inputs": [
                {
                    "name": "text",
                    "source": "/document/merged_text"
                }
            ],
            "outputs": [
                {
                    "name": "organizations",
                    "targetName": "organizations"
                }
            ]
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.V3.EntityRecognitionSkill",
            "categories": ["Location"],
            "context": "/document",
            "inputs": [
                {
                    "name": "text",
                    "source": "/document/merged_text"
                }
            ],
            "outputs": [
                {
                    "name": "locations",
                    "targetName": "locations"
                }
            ]
        },
        {
            "@odata.type": "#Microsoft.Skills.Text.V3.EntityRecognitionSkill",
            "categories": ["Person"],
            "context": "/document",
            "inputs": [
                {
                    "name": "text",
                    "source": "/document/merged_text"
                }
            ],
            "outputs": [
                {
                    "name": "persons",
                    "targetName": "persons"
                }
            ]
        }
    ]
}
```

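Key points:

The request body specifies the following built-in skills:

| Skill | Description |
|---|---|
| Optical Character Recognition | Recognizes text and numbers in image files. |
| Text Merge | Creates "merged content" that recombines previously separated content. This is useful for documents with embedded images (PDF, DOCX, and so on). Images and text are separated during the document cracking phase. The merge skill recombines them by inserting any recognized text, image captions, or tags created during enrichment at the same location where the image was extracted from the document. When you work with merged content in a skillset, this node includes all the text in the document, including text-only documents that never go through OCR or image analysis. |
| Language Detection | Detects the language and outputs either a language name or a language code. In multilingual data sets, a language field can be useful for filters. |
| Entity Recognition | Extracts the names of people, organizations, and locations from the merged content. |
| Text Split | Breaks large merged content into smaller chunks before calling the key phrase extraction skill. Key phrase extraction accepts inputs of 50,000 characters or less. A few of the sample files need splitting up to fit within this limit. |
| Key Phrase Extraction | Pulls out the top key phrases. |

Each skill runs on the content of the document. During processing, Azure AI Search cracks each document to read content from different file formats. Text found in the source file is placed into a generated content field, one for each document. As such, the input becomes "/document/content".

For key phrase extraction, the text split skill breaks larger files into pages. For that reason, the context for the key phrase extraction skill is "/document/pages/*" (for each page in the document) instead of "/document/content".

Note

Outputs can be mapped to an index, used as input to a downstream skill, or both, as is the case with the language code. In the index, a language code is useful for filtering. For more information about skillset fundamentals, see How to define a skillset.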

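Step 3: Create an index

Call Create Index to provide the schema that Azure AI Search uses to create inverted indexes and other constructs.

The largest component of an index is the fields collection. The data type and attributes of each field determine its contents and behavior in Azure AI Search. Make sure you have fields for your newly generated output.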

```http
### Create an index
POST {{baseUrl}}/indexes?api-version=2025-09-01 HTTP/1.1
Content-Type: application/json
api-key: {{apiKey}}

{
    "name": "cog-search-demo-idx",
    "defaultScoringProfile": "",
    "fields": [
        {
            "name": "content",
            "type": "Edm.String",
            "searchable": true,
            "sortable": false,
            "filterable": false,
            "facetable": false
        },
        {
            "name": "text",
            "type": "Collection(Edm.String)",
            "facetable": false,
            "filterable": true,
            "searchable": true,
            "sortable": false
        },
        {
            "name": "language",
            "type": "Edm.String",
            "searchable": false,
            "sortable": true,
            "filterable": true,
            "facetable": false
        },
        {
            "name": "keyPhrases",
            "type": "Collection(Edm.String)",
            "searchable": true,
            "sortable": false,
            "filterable": true,
            "facetable": true
        },
        {
            "name": "organizations",
            "type": "Collection(Edm.String)",
            "searchable": true,
            "sortable": false,
            "filterable": true,
            "facetable": true
        },
        {
            "name": "persons",
            "type": "Collection(Edm.String)",
            "searchable": true,
            "sortable": false,
            "filterable": true,
            "facetable": true
        },
        {
            "name": "locations",
            "type": "Collection(Edm.String)",
            "searchable": true,
            "sortable": false,
            "filterable": true,
            "facetable": true
        },
        {
            "name": "metadata_storage_path",
            "type": "Edm.String",
            "key": true,
            "searchable": true,
            "sortable": false,
            "filterable": false,
            "facetable": false
        },
        {
            "name": "metadata_storage_name",
            "type": "Edm.String",
            "searchable": true,
            "sortable": false,
            "filterable": false,
            "facetable": false
        }
    ]
}
```

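Step 4: Create and run an indexer

Call Create Indexer to drive the pipeline. The three components you've created so far (data source, skillset, and index) are inputs to the indexer. Creating the indexer in Azure AI Search is the event that puts the entire pipeline into motion.

Expect this step to take several minutes to complete. Even though the data set is small, analytical skills are computation-intensive.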

```http
### Create and run an indexer
POST {{baseUrl}}/indexers?api-version=2025-09-01 HTTP/1.1
Content-Type: application/json
api-key: {{apiKey}}

{
    "name": "cog-search-demo-idxr",
    "description": "",
    "dataSourceName" : "cog-search-demo-ds",
    "targetIndexName" : "cog-search-demo-idx",
    "skillsetName" : "cog-search-demo-ss",
    "fieldMappings" : [
        {
            "sourceFieldName" : "metadata_storage_path",
            "targetFieldName" : "metadata_storage_path",
            "mappingFunction" : { "name" : "base64Encode" }
        },
        {
            "sourceFieldName": "metadata_storage_name",
            "targetFieldName": "metadata_storage_name"
        }
    ],
    "outputFieldMappings" :
    [
        {
            "sourceFieldName": "/document/merged_text",
            "targetFieldName": "content"
        },
        {
            "sourceFieldName" : "/document/normalized_images/*/text",
            "targetFieldName" : "text"
        },
        {
            "sourceFieldName" : "/document/organizations",
            "targetFieldName" : "organizations"
        },
        {
            "sourceFieldName": "/document/language",
            "targetFieldName": "language"
        },
        {
            "sourceFieldName" : "/document/persons",
            "targetFieldName" : "persons"
        },
        {
            "sourceFieldName" : "/document/locations",
            "targetFieldName" : "locations"
        },
        {
            "sourceFieldName" : "/document/pages/*/keyPhrases/*",
            "targetFieldName" : "keyPhrases"
        }
    ],
    "parameters":
    {
        "batchSize": 1,
        "maxFailedItems": -1,
        "maxFailedItemsPerBatch": -1,
        "configuration":
        {
            "dataToExtract": "contentAndMetadata",
            "imageAction": "generateNormalizedImages"
        }
    }
}
```

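Key points:

The request body includes references to the previous objects, the configuration properties required for image processing, and two types of field mappings.

- "fieldMappings" are processed before the skillset. They send content from the data source to target fields in the index. Use field mappings to send existing, unmodified content to the index. If the field names and types are the same at both ends, no mapping is required.

- "outputFieldMappings" are for fields that skills create, after the skillset runs. The sourceFieldName references in outputFieldMappings don't exist until document cracking or enrichment creates them. The targetFieldName is a field in the index, defined in the index schema.

- The "maxFailedItems" parameter is set to -1, which instructs the indexing engine to ignore errors during data import. This is acceptable because the demo data source contains so few documents. For a larger data source, set the value to greater than 0.

- The "dataToExtract":"contentAndMetadata" setting tells the indexer to automatically extract values from the blob's content property and from the metadata of each object.

- The imageAction parameter tells the indexer to extract text from images found in the data source. The "imageAction":"generateNormalizedImages" configuration works together with the OCR skill and the text merge skill. It tells the indexer to extract text from the images (for example, the word "stop" from a traffic stop sign) and embed it as part of the content field. This behavior applies both to embedded images (think of an image inside a PDF) and to standalone image files, for instance a JPG file.

Note

Creating an indexer invokes the pipeline. Any problems with reaching the data, mapping inputs and outputs, or the order of operations appear at this stage. To rerun the pipeline after changing code or scripts, you might need to delete objects first. For more information, see Reset and rerun.

Monitor indexing

Indexing and enrichment start as soon as you submit the Create Indexer request. Depending on the complexity of the skills and operations, indexing can take a while.

To find out whether the indexer is still running, call Get Indexer Status.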

```http
### Get Indexer Status (wait several minutes for the indexer to complete)
GET {{baseUrl}}/indexers/cog-search-demo-idxr/status?api-version=2025-09-01 HTTP/1.1
Content-Type: application/json
api-key: {{apiKey}}
```

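Key points:

Warnings are common in some scenarios and don't always indicate a problem. For example, if a blob container includes image files and the pipeline doesn't handle images, you get a warning stating that the images weren't processed.

In this sample, there's a PNG file that contains no text. None of the five text-based skills (language detection, entity recognition for locations, organizations, and people, and key phrase extraction) can run on this file. The resulting notification appears in the execution history.

Check results

Now that you've created an index that contains AI-generated content, call Search Documents to run some queries and see the results.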

```http
### Query the index
POST {{baseUrl}}/indexes/cog-search-demo-idx/docs/search?api-version=2025-09-01 HTTP/1.1
Content-Type: application/json
api-key: {{apiKey}}

{
    "search": "*",
    "select": "metadata_storage_name,language,organizations",
    "count": true
}
```

Filters can help you narrow results to items of interest:

```http
### Filter by organization
POST {{baseUrl}}/indexes/cog-search-demo-idx/docs/search?api-version=2025-09-01 HTTP/1.1
Content-Type: application/json
api-key: {{apiKey}}

{
    "search": "*",
    "filter": "organizations/any(organizations: organizations eq 'Microsoft')",
    "select": "metadata_storage_name,organizations",
    "count": true
}
```
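If you build this kind of `any` filter from user input, escape the string literal. In OData filter expressions, a single quote inside a string literal is written as two single quotes. Here is a minimal C# sketch with a hypothetical `ODataFilter.CollectionContains` helper. The range-variable name `o` is arbitrary; the request above uses `organizations` for the same purpose:

```csharp
public static class ODataFilter
{
    // Hypothetical helper: builds "<field>/any(o: o eq '<value>')" for a
    // Collection(Edm.String) field, escaping ' as '' per OData string-literal rules.
    public static string CollectionContains(string fieldName, string value)
    {
        string escaped = value.Replace("'", "''");
        return $"{fieldName}/any(o: o eq '{escaped}')";
    }
}
```

For example, `ODataFilter.CollectionContains("organizations", "O'Reilly")` produces `organizations/any(o: o eq 'O''Reilly')`, which you can place in the `filter` property of the request body.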

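These queries show a few of the ways you can apply query syntax and filters to the new fields created by Azure AI Search. For more query examples, see Examples in Search Documents REST API, Simple syntax query examples, and Full Lucene query examples.

Reset and rerun

In the early stages of development, iterating on the design is common. Reset and rerun helps with iteration.

Takeaways

This tutorial demonstrated the basic steps for using the REST APIs to create an AI enrichment pipeline: a data source, a skillset, an index, and an indexer.

It introduced built-in skills and skillset definitions, showing the mechanics of chaining skills together through inputs and outputs. You also learned that outputFieldMappings in the indexer definition are required to route enriched values from the pipeline into a searchable index on an Azure AI Search service.

Finally, you learned how to test results and reset the system for further iterations. You learned that queries against the index return the output created by the enriched indexing pipeline.

Clean up resources

When you work in your own subscription, it's a good idea at the end of a project to remove the resources that you no longer need. Resources left running can cost you money. You can delete resources individually, or delete the resource group to delete the entire set of resources.

You can find and manage resources in the Azure portal by using the All resources or Resource groups link in the left navigation pane.

Next steps

Now that you're familiar with all of the objects in an AI enrichment pipeline, take a closer look at skillset definitions and individual skills.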