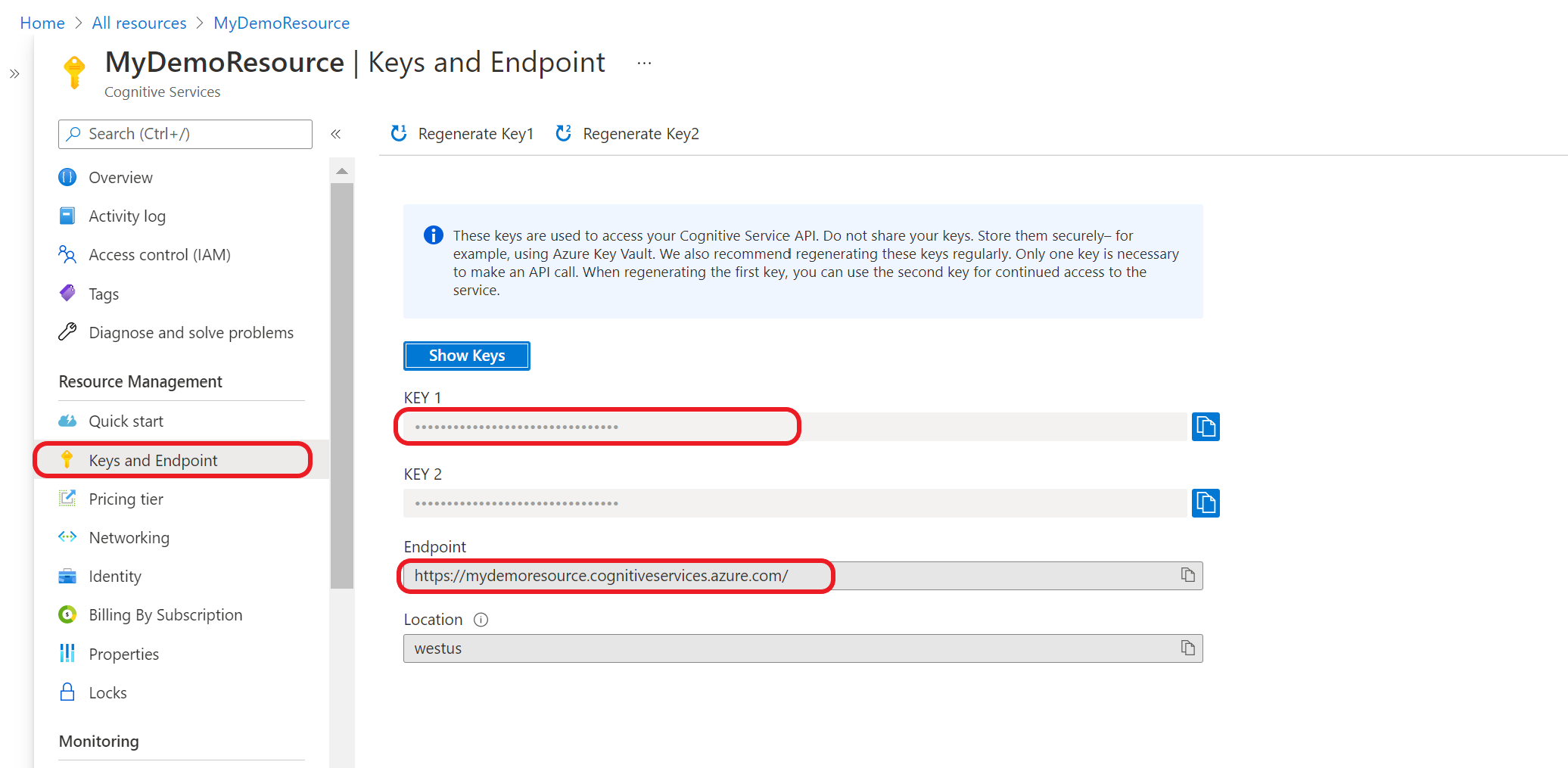

After the deployment is added successfully, you can query it for intent and entity predictions from your utterance, based on the model you assigned to the deployment. You can query the deployment programmatically through the Prediction API or through the client libraries (Azure SDK).

Test deployed model

You can use Language Studio to submit an utterance, get predictions and visualize the results.

To test your model from Language Studio

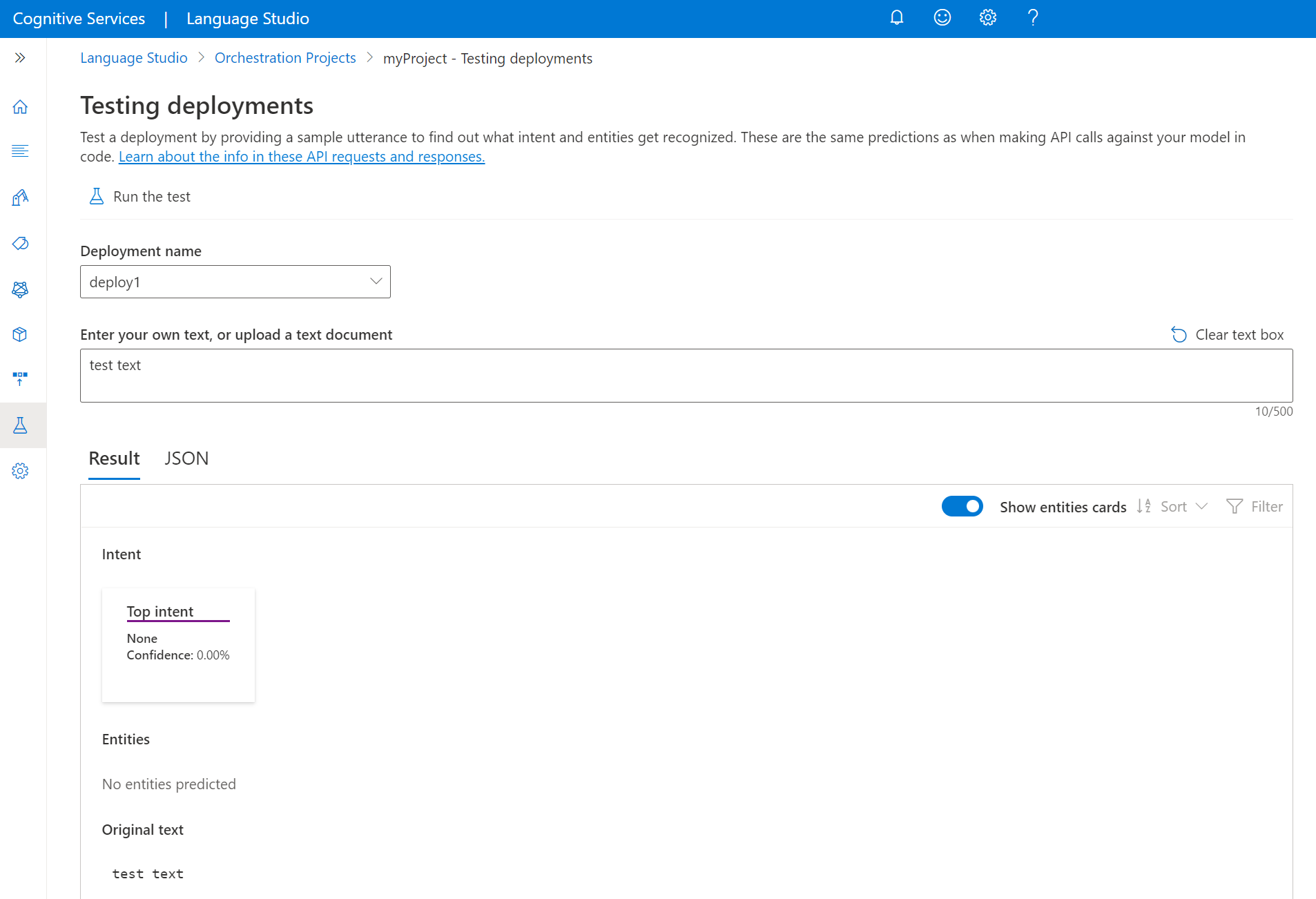

Select Testing deployments from the left side menu.

Select the model you want to test. You can only test models that are assigned to deployments.

From the deployment name dropdown, select your deployment name.

In the text box, enter an utterance to test.

From the top menu, select Run the test.

After you run the test, you should see the model's response in the result. You can view the results in the entities cards view or in JSON format.

Send an orchestration workflow request

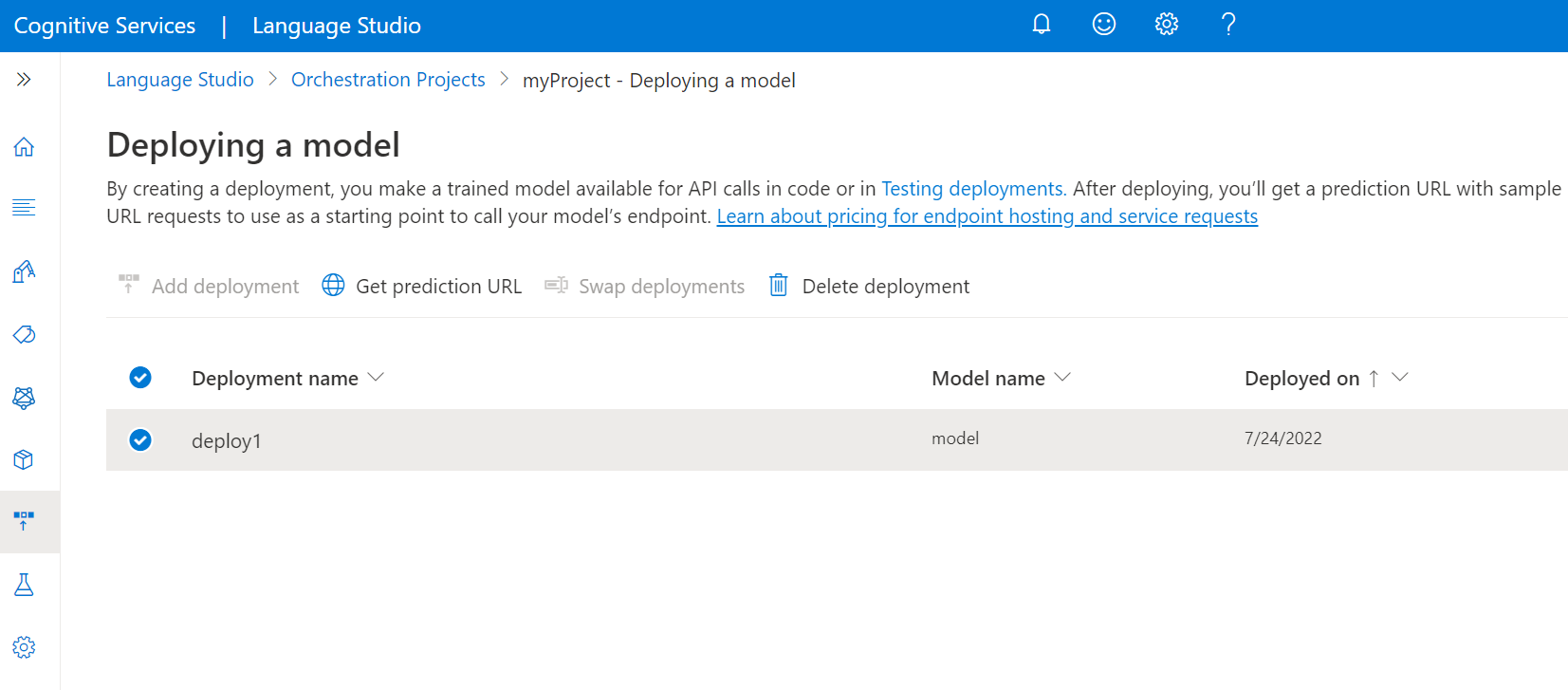

After the deployment job is completed successfully, select the deployment you want to use and from the top menu select Get prediction URL.

In the window that appears, copy the sample request URL and body into your command line. Replace <YOUR_QUERY_HERE> with the actual text you want to extract intents and entities from.

Submit the POST cURL request in your terminal or command prompt. You receive a 202 response with the API results if the request was successful.
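If you prefer a script to cURL, the same steps can be sketched in Python using only the standard library. This is a minimal sketch, not the official client: it assumes you saved the sample body copied from Language Studio to a file named `sample_body.json`, and that the prediction URL and resource key are available in the environment variables `PREDICTION_URL` and `LANGUAGE_KEY`. Those three names, and the helper functions `fill_query` and `build_request`, are hypothetical. The `Ocp-Apim-Subscription-Key` header is assumed to match the one shown in the sample cURL command; check it against what Language Studio gives you.

```python
import json
import os
import urllib.request

PLACEHOLDER = "<YOUR_QUERY_HERE>"


def fill_query(sample_body: str, query: str) -> str:
    """Replace the placeholder in the copied sample body with the utterance."""
    if PLACEHOLDER not in sample_body:
        raise ValueError(f"{PLACEHOLDER} not found in the sample body")
    # json.dumps escapes quotes and backslashes; the outer quotes are stripped
    # because the placeholder already sits inside a JSON string.
    escaped = json.dumps(query)[1:-1]
    body = sample_body.replace(PLACEHOLDER, escaped)
    json.loads(body)  # fail early if the result is not valid JSON
    return body


def build_request(url: str, key: str, body: str) -> urllib.request.Request:
    """Build the POST request; nothing is sent until urlopen is called."""
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumed header name; copy the one from your sample request.
            "Ocp-Apim-Subscription-Key": key,
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical environment variable and file names.
    url = os.environ["PREDICTION_URL"]
    key = os.environ["LANGUAGE_KEY"]
    with open("sample_body.json", encoding="utf-8") as f:
        sample_body = f.read()

    request = build_request(url, key, fill_query(sample_body, "Book a flight to Cairo"))
    with urllib.request.urlopen(request) as response:
        print(response.status)
        print(json.dumps(json.load(response), indent=2))
```

Escaping the utterance before substitution keeps the body valid JSON even when the text contains quotation marks, which a plain find-and-replace in the terminal would not guarantee.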