Often, you build systems that react to a series of critical events. Whether you're building a web API, responding to database changes, or processing event streams or messages, you can use Azure Functions to implement these systems.

In many cases, a function integrates with an array of cloud services to provide feature-rich implementations. The following list shows common (but by no means exhaustive) scenarios for Azure Functions.

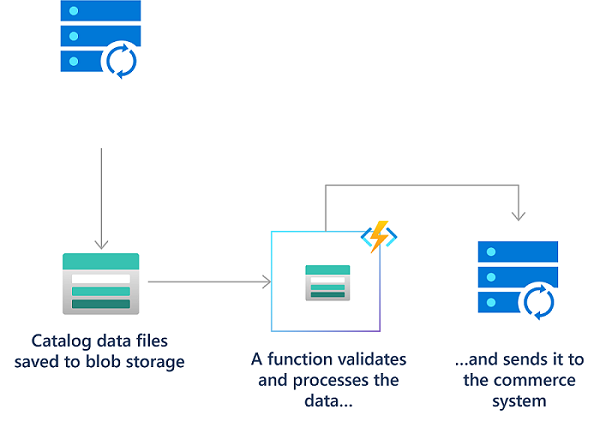

Process file uploads

You can use functions in several ways to process files into or out of a blob storage container. To learn more about options for triggering on a blob container, see Working with blobs in the best practices documentation.

For example, in a retail solution, a partner system can submit product catalog information as files into blob storage. You can use a blob-triggered function to validate, transform, and process the files into the main system as they're uploaded.

For example, the following function uses the Blob trigger with the Event Grid source type, which relies on an event subscription on the blob container, to process each uploaded file line by line:

    [FunctionName("ProcessCatalogData")]
    public static async Task Run(
        [BlobTrigger("catalog-uploads/{name}", Source = BlobTriggerSource.EventGrid, Connection = "<NAMED_STORAGE_CONNECTION>")] Stream myCatalogData,
        string name,
        ILogger log)
    {
        log.LogInformation($"C# Blob trigger function Processed blob\n Name:{name} \n Size: {myCatalogData.Length} Bytes");

        using (var reader = new StreamReader(myCatalogData))
        {
            var catalogEntry = await reader.ReadLineAsync();
            while (catalogEntry != null)
            {
                // Process the catalog entry
                // ...

                catalogEntry = await reader.ReadLineAsync();
            }
        }
    }

The following resources show how to process files in a blob container:

- Quickstart: Respond to blob storage events by using Azure Functions

- Sample: Blob trigger with the Event Grid source type quickstart sample

- Tutorial (events): Trigger Azure Functions on blob containers using an event subscription

- Tutorial (polling): Upload and analyze a file with Azure Functions and Blob Storage

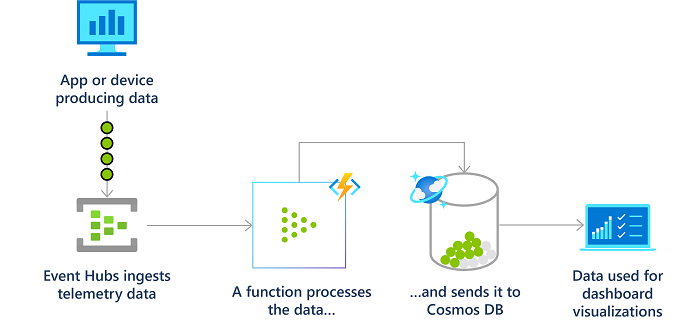

Real-time stream and event processing

Cloud applications, IoT devices, and networking devices generate and collect a large amount of telemetry. Azure Functions can process that data in near real time as the hot path, then store it in Azure Cosmos DB for use in an analytics dashboard.

Your functions can also use low-latency event triggers, like Event Grid, and real-time outputs, like SignalR, to process data in near real time.

For example, you can use the Event Hubs trigger to read from an event hub and the Event Hubs output binding to write to an event hub after debatching and transforming the events:

    [FunctionName("ProcessorFunction")]
    public static async Task Run(
        [EventHubTrigger(
            "%Input_EH_Name%",
            Connection = "InputEventHubConnectionSetting",
            ConsumerGroup = "%Input_EH_ConsumerGroup%")] EventData[] inputMessages,
        [EventHub(
            "%Output_EH_Name%",
            Connection = "OutputEventHubConnectionSetting")] IAsyncCollector<SensorDataRecord> outputMessages,
        PartitionContext partitionContext,
        ILogger log)
    {
        var debatcher = new Debatcher(log);
        var debatchedMessages = await debatcher.Debatch(inputMessages, partitionContext.PartitionId);

        var xformer = new Transformer(log);
        await xformer.Transform(debatchedMessages, partitionContext.PartitionId, outputMessages);
    }

- Streaming at scale with Azure Event Hubs, Functions and Azure SQL

- Streaming at scale with Azure Event Hubs, Functions and Cosmos DB

- Streaming at scale with Azure Event Hubs with Kafka producer, Functions with Kafka trigger and Cosmos DB

- Streaming at scale with Azure IoT Hub, Functions and Azure SQL

- Azure Event Hubs trigger for Azure Functions

- Apache Kafka trigger for Azure Functions

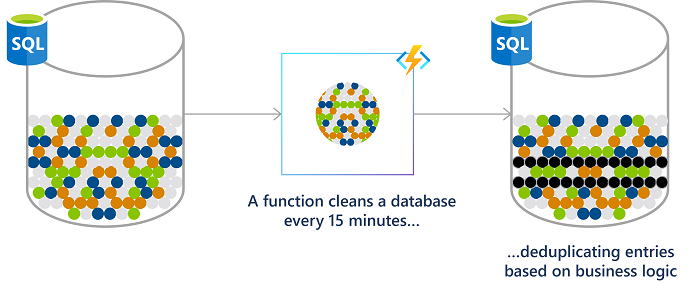

Run scheduled tasks

Functions enables you to run your code based on a cron schedule that you define.

See Create a function in the Azure portal that runs on a schedule.

For example, you might analyze a financial services customer database for duplicate entries every 15 minutes to avoid multiple communications going out to the same customer.

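The following timer-triggered function uses a six-field NCRONTAB expression, which includes a leading seconds field, to run every 15 minutes: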

    [FunctionName("TimerTriggerCSharp")]
    public static void Run([TimerTrigger("0 */15 * * * *")] TimerInfo myTimer, ILogger log)
    {
        if (myTimer.IsPastDue)
        {
            log.LogInformation("Timer is running late!");
        }

        log.LogInformation($"C# Timer trigger function executed at: {DateTime.Now}");

        // Perform the database deduplication
    }
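The deduplication step itself depends on your data model, which this article doesn't define. As a minimal sketch, assuming a hypothetical Customer record and treating customers with the same email address (ignoring case and surrounding whitespace) as duplicates, the check that the timer function calls might look like this:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical customer shape, used only for illustration.
public record Customer(int Id, string Name, string Email);

public static class CustomerDeduplicator
{
    // Returns one list per email address that is shared by two or more customers.
    // Assumption: two customers are duplicates when their emails match after
    // trimming whitespace and ignoring case.
    public static List<List<Customer>> FindDuplicates(IEnumerable<Customer> customers)
    {
        return customers
            .Where(c => !string.IsNullOrWhiteSpace(c.Email))
            .GroupBy(c => c.Email.Trim().ToLowerInvariant())
            .Where(group => group.Count() > 1)
            .Select(group => group.OrderBy(c => c.Id).ToList())
            .ToList();
    }
}
```

Keeping this logic in a plain static method, separate from the trigger, lets you unit test it without running the Functions host.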

Build a scalable web API

An HTTP triggered function defines an HTTP endpoint. These endpoints run function code that can connect to other services directly or by using binding extensions. You can compose the endpoints into a web-based API.

You can also use an HTTP triggered function endpoint as a webhook integration, such as GitHub webhooks. In this way, you can create functions that process data from GitHub events. For more information, see Monitor GitHub events by using a webhook with Azure Functions.

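For example, the following HTTP-triggered function accepts a POST request, reads a name from the JSON request body, and writes a document to Azure Cosmos DB by using an output binding: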

    [FunctionName("InsertName")]
    public static async Task<IActionResult> Run(
        [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequest req,
        [CosmosDB(
            databaseName: "my-database",
            collectionName: "my-container",
            ConnectionStringSetting = "CosmosDbConnectionString")] IAsyncCollector<dynamic> documentsOut,
        ILogger log)
    {
        string requestBody = await new StreamReader(req.Body).ReadToEndAsync();
        dynamic data = JsonConvert.DeserializeObject(requestBody);
        string name = data?.name;

        if (name == null)
        {
            return new BadRequestObjectResult("Please pass a name in the request body json");
        }

        // Add a JSON document to the output container.
        await documentsOut.AddAsync(new
        {
            // create a random ID
            id = System.Guid.NewGuid().ToString(),
            name = name
        });

        return new OkResult();
    }

- Quickstart: Azure Functions HTTP trigger

- Article: Create serverless APIs in Visual Studio using Azure Functions and API Management integration

- Training: Expose multiple function apps as a consistent API by using Azure API Management

- Sample: Web application with a C# API and Azure SQL DB on Static Web Apps and Functions

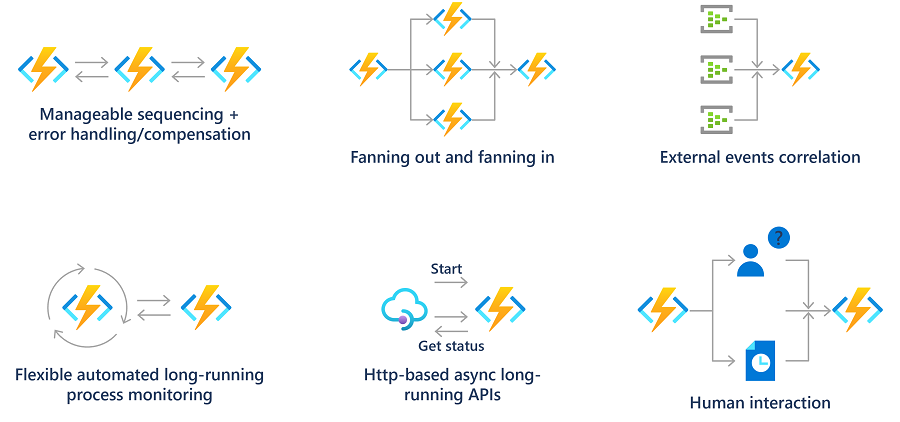

Build a serverless workflow

Functions often serve as the compute component in a serverless workflow topology, such as a Logic Apps workflow. You can also create long-running orchestrations by using the Durable Functions extension. For more information, see Durable Functions overview.
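As a rough illustration of a long-running orchestration, the following sketch chains three activities by using the in-process Durable Functions programming model. It assumes the Microsoft.Azure.WebJobs.Extensions.DurableTask package is installed. The OrderWorkflow class, the activity names, and the order ID input are hypothetical and aren't taken from a published sample:

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class OrderWorkflow
{
    // Orchestrator: runs the hypothetical activities one after another.
    // Durable Functions checkpoints progress after each awaited call.
    [FunctionName("OrderWorkflow")]
    public static async Task<List<string>> RunOrchestrator(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        // Assumption: the client that starts the orchestration passes an order ID.
        string orderId = context.GetInput<string>();

        var results = new List<string>
        {
            await context.CallActivityAsync<string>("ReserveInventory", orderId),
            await context.CallActivityAsync<string>("ChargePayment", orderId),
            await context.CallActivityAsync<string>("SendConfirmation", orderId)
        };

        return results;
    }

    // Activities: placeholders for the real work of each step.
    [FunctionName("ReserveInventory")]
    public static string ReserveInventory([ActivityTrigger] string orderId) =>
        $"Reserved inventory for order {orderId}";

    [FunctionName("ChargePayment")]
    public static string ChargePayment([ActivityTrigger] string orderId) =>
        $"Charged payment for order {orderId}";

    [FunctionName("SendConfirmation")]
    public static string SendConfirmation([ActivityTrigger] string orderId) =>
        $"Sent confirmation for order {orderId}";
}
```

A separate client function, such as an HTTP-triggered function, would typically start the orchestration. That part is omitted here to keep the sketch short.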

Respond to database changes

Some processes need to log, audit, or perform other operations when stored data changes. Functions triggers provide a good way to get notified of data changes so that you can initiate such an operation.

Consider these examples:
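One possible shape for such a function is sketched below, using the Azure Cosmos DB trigger with the same version 3.x extension attribute style as the output binding shown earlier in this article. The database, container, and connection setting names reuse the placeholder values from that example. The lease container name and the AuditDocumentChanges class are assumptions:

```csharp
using System.Collections.Generic;
using Microsoft.Azure.Documents;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

public static class AuditDocumentChanges
{
    // Builds the audit line. Kept separate so it can be tested without the Functions host.
    public static string ToAuditMessage(string documentId) =>
        $"Document {documentId} was created or updated";

    // Runs when documents in the monitored container are inserted or updated.
    // The change feed that backs this trigger doesn't surface deletes.
    [FunctionName("AuditDocumentChanges")]
    public static void Run(
        [CosmosDBTrigger(
            databaseName: "my-database",
            collectionName: "my-container",
            ConnectionStringSetting = "CosmosDbConnectionString",
            LeaseCollectionName = "leases", // assumed lease container name
            CreateLeaseCollectionIfNotExists = true)] IReadOnlyList<Document> changes,
        ILogger log)
    {
        if (changes == null || changes.Count == 0)
        {
            return;
        }

        foreach (Document document in changes)
        {
            log.LogInformation(ToAuditMessage(document.Id));
        }
    }
}
```

The trigger delivers changes in batches, so the function loops over the list instead of assuming a single document per invocation.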

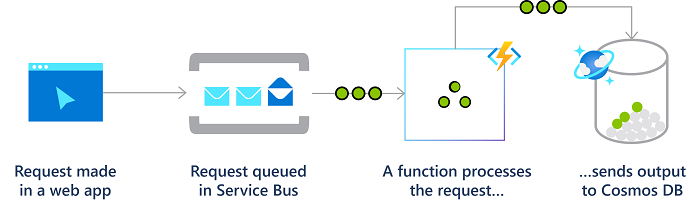

Create reliable message systems

You can use Functions with Azure messaging services to create advanced event-driven messaging solutions.

For example, you can use triggers on Azure Storage queues to chain together a series of function executions. You can also use Service Bus queues and triggers to build an online ordering system.
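The chaining idea can be sketched as two functions: one that's triggered by a Service Bus queue and writes to a storage queue, and a second that's triggered by that storage queue. This sketch assumes the Service Bus and Storage queue binding extensions are installed. The queue names, the ServiceBusConnection setting, and the validation rule are hypothetical:

```csharp
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

public static class OrderPipeline
{
    // Hypothetical validation rule: an order message must not be empty.
    public static bool IsValidOrder(string order) => !string.IsNullOrWhiteSpace(order);

    // Step 1: triggered by a Service Bus queue. Forwards valid orders to a
    // storage queue through an output binding.
    [FunctionName("AcceptOrder")]
    public static void AcceptOrder(
        [ServiceBusTrigger("incoming-orders", Connection = "ServiceBusConnection")] string order,
        [Queue("accepted-orders", Connection = "AzureWebJobsStorage")] ICollector<string> acceptedOrders,
        ILogger log)
    {
        if (!IsValidOrder(order))
        {
            log.LogWarning("Skipped an empty order message.");
            return;
        }

        acceptedOrders.Add(order);
    }

    // Step 2: triggered by the storage queue message that step 1 wrote.
    [FunctionName("FulfillOrder")]
    public static void FulfillOrder(
        [QueueTrigger("accepted-orders", Connection = "AzureWebJobsStorage")] string order,
        ILogger log)
    {
        log.LogInformation("Fulfilling order: {Order}", order);
    }
}
```

Because each step communicates only through a queue, a failure in the second function doesn't lose the message. The queue retains it for retry according to the trigger's retry settings.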

These articles show how to write output to a storage queue:

These articles show how to trigger from an Azure Service Bus queue or topic.