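Applies to: Azure Data Factory, Azure Synapse Analytics

This article outlines how to use the copy activity in an Azure Data Factory or Synapse Analytics pipeline to copy data from Spark. It builds on the Copy activity overview article, which presents a general overview of the copy activity.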

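Supported capabilities

This Spark connector is supported for the following capabilities:

| Supported capabilities | IR |
|---|---|
| Copy activity (source/-) | ① ② |
| Lookup activity | ① ② |

① Azure integration runtime ② Self-hosted integration runtime

For a list of data stores that the copy activity supports as sources or sinks, see the Supported data stores table.

The service provides a built-in driver to enable connectivity, so you don't need to manually install any driver to use this connector.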

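Prerequisites

If your data store is located inside an on-premises network, an Azure virtual network, or Amazon Virtual Private Cloud, you need to configure a self-hosted integration runtime to connect to it.

If your data store is a managed cloud data service, you can use the Azure Integration Runtime. If access is restricted to IPs that are approved in the firewall rules, you can add the Azure Integration Runtime IPs to the allow list.

You can also use the managed virtual network integration runtime feature in Azure Data Factory to access the on-premises network without installing and configuring a self-hosted integration runtime.

For more information about the network security mechanisms and options supported by Data Factory, see Data access strategies.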

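Getting started

To perform the copy activity with a pipeline, you can use one of the supported tools or SDKs.

Create a linked service to Spark using the UI

Use the following steps to create a linked service to Spark in the Azure portal UI.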

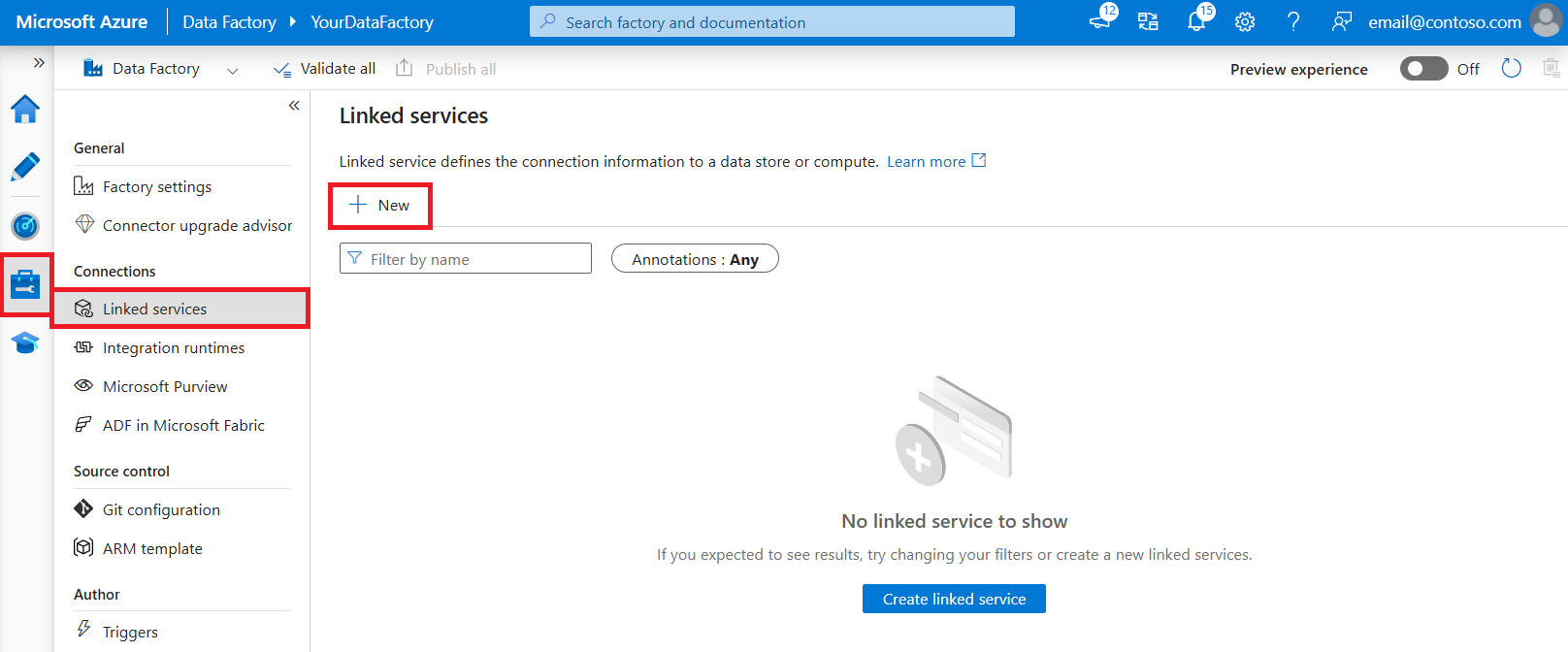

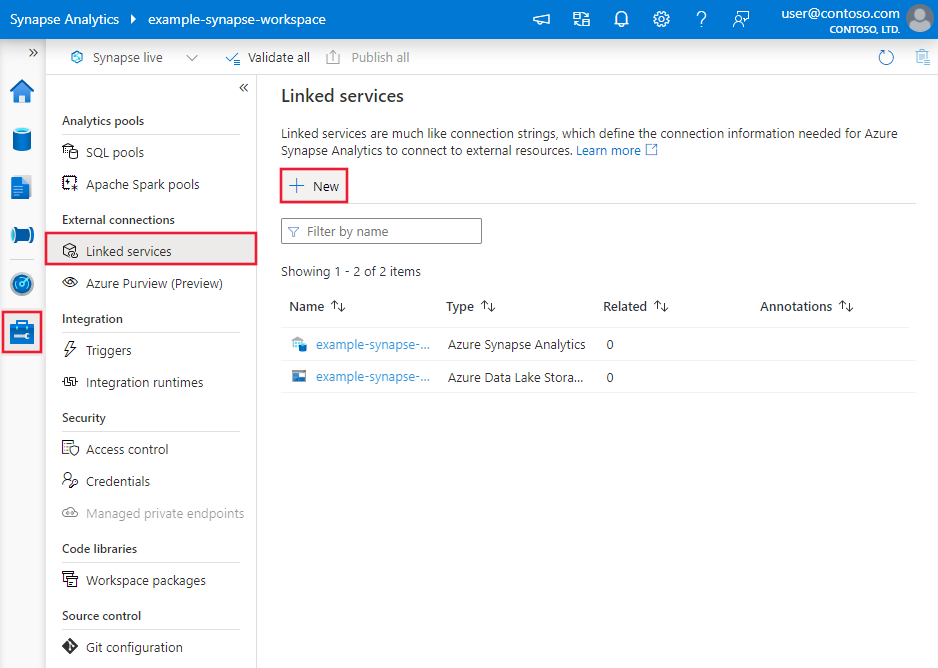

Browse to the Manage tab in your Azure Data Factory or Synapse workspace, select Linked services, and then select New:

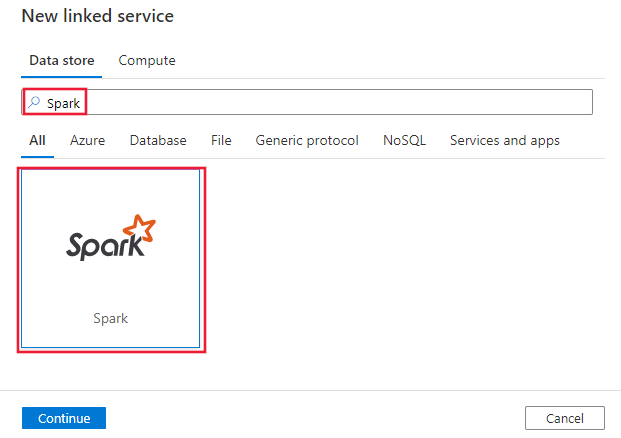

Search for Spark and select the Spark connector.

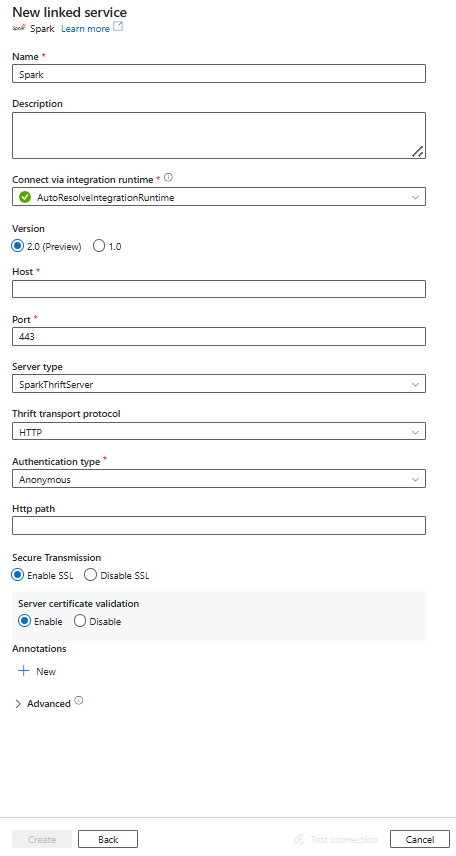

Configure the service details, test the connection, and create the new linked service.

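Connector configuration details

The following sections provide details about the properties used to define Data Factory entities specific to the Spark connector.

Linked service properties

The Spark connector now supports version 2.0. To upgrade your Spark connector from version 1.0, see the upgrade section later in this article. For property details, see the corresponding sections below.

Version 2.0

The Spark linked service version 2.0 supports the following properties: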

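| Property | Description | Required |
|---|---|---|
| type | The type property must be set to: Spark | Yes |
| version | The version that you specify. The value is 2.0. | Yes |
| host | The IP address or host name of the Spark server. | Yes |
| port | The TCP port that the Spark server uses to listen for client connections. If you connect to Azure HDInsight, specify port 443. | Yes |
| serverType | The type of Spark server. The allowed value is: SparkThriftServer | No |
| thriftTransportProtocol | The transport protocol to use in the Thrift layer. The allowed value is: HTTP | No |
| authenticationType | The authentication method used to access the Spark server. Allowed values are: Anonymous, UsernameAndPassword, WindowsAzureHDInsightService | Yes |
| username | The user name used to access the Spark server. | No |
| password | The password corresponding to the user. Mark this field as a SecureString to store it securely, or reference a secret stored in Azure Key Vault. | No |
| httpPath | The partial URL corresponding to the Spark server. For the WindowsAzureHDInsightService authentication type, the default value is /sparkhive2. | No |
| enableSsl | Specifies whether connections to the server are encrypted using TLS. The default value is true. | No |
| enableServerCertificateValidation | Specifies whether to enable server SSL certificate validation when you connect. The system trust store is always used. The default value is true. | No |
| connectVia | The integration runtime used to connect to the data store. Learn more in the Prerequisites section. If not specified, the default Azure Integration Runtime is used. | No |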

Example:

    {
        "name": "SparkLinkedService",
        "properties": {
            "type": "Spark",
            "version": "2.0",
            "typeProperties": {
                "host": "<cluster>.azurehdinsight.cn",
                "port": "<port>",
                "authenticationType": "WindowsAzureHDInsightService",
                "username": "<username>",
                "password": {
                    "type": "SecureString",
                    "value": "<password>"
                }
            }
        }
    }
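The version 2.0 properties state that the password can reference a secret stored in Azure Key Vault and that connectVia selects the integration runtime. The following is a minimal sketch of how those two options might be combined for a Spark Thrift server reached through a self-hosted integration runtime. It is not a definitive configuration: every value in angle brackets is a hypothetical placeholder, and the AzureKeyVaultSecret and IntegrationRuntimeReference shapes follow the general Data Factory reference conventions rather than anything stated in this article.

```json
{
    "name": "SparkLinkedService",
    "properties": {
        "type": "Spark",
        "version": "2.0",
        "typeProperties": {
            "host": "<Spark server host name or IP>",
            "port": "<port>",
            "serverType": "SparkThriftServer",
            "thriftTransportProtocol": "HTTP",
            "authenticationType": "UsernameAndPassword",
            "username": "<username>",
            "password": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": "<Azure Key Vault linked service name>",
                    "type": "LinkedServiceReference"
                },
                "secretName": "<secret name>"
            },
            "httpPath": "<HTTP path>",
            "enableSsl": true,
            "enableServerCertificateValidation": true
        },
        "connectVia": {
            "referenceName": "<self-hosted integration runtime name>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```

The two TLS properties are written out only for visibility. Both default to true in version 2.0, so omitting them should give the same behavior.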

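Version 1.0

The Spark linked service version 1.0 supports the following properties:

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to: Spark | Yes |
| host | The IP address or host name of the Spark server. | Yes |
| port | The TCP port that the Spark server uses to listen for client connections. If you connect to Azure HDInsight, specify port 443. | Yes |
| serverType | The type of Spark server. Allowed values are: SharkServer, SharkServer2, SparkThriftServer | No |
| thriftTransportProtocol | The transport protocol to use in the Thrift layer. Allowed values are: Binary, SASL, HTTP | No |
| authenticationType | The authentication method used to access the Spark server. Allowed values are: Anonymous, Username, UsernameAndPassword, WindowsAzureHDInsightService | Yes |
| username | The user name used to access the Spark server. | No |
| password | The password corresponding to the user. Mark this field as a SecureString to store it securely, or reference a secret stored in Azure Key Vault. | No |
| httpPath | The partial URL corresponding to the Spark server. | No |
| enableSsl | Specifies whether connections to the server are encrypted using TLS. The default value is false. | No |
| trustedCertPath | The full path of the .pem file that contains the trusted CA certificates used to verify the server when connecting over TLS. This property can be set only when you use TLS on a self-hosted IR. The default value is the cacerts.pem file installed with the IR. | No |
| useSystemTrustStore | Specifies whether to use a CA certificate from the system trust store or from a specified PEM file. The default value is false. | No |
| allowHostNameCNMismatch | Specifies whether to require the name of the CA-issued TLS/SSL certificate to match the host name of the server when connecting over TLS. The default value is false. | No |
| allowSelfSignedServerCert | Specifies whether to allow self-signed certificates from the server. The default value is false. | No |
| connectVia | The integration runtime used to connect to the data store. Learn more in the Prerequisites section. If not specified, the default Azure Integration Runtime is used. | No |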

Example:

    {
        "name": "SparkLinkedService",
        "properties": {
            "type": "Spark",
            "typeProperties": {
                "host": "<cluster>.azurehdinsight.cn",
                "port": "<port>",
                "authenticationType": "WindowsAzureHDInsightService",
                "username": "<username>",
                "password": {
                    "type": "SecureString",
                    "value": "<password>"
                }
            }
        }
    }

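Dataset properties

For a full list of sections and properties available for defining datasets, see the Datasets article. This section lists the properties supported by the Spark dataset.

To copy data from Spark, set the type property of the dataset to SparkObject. The following properties are supported:

| Property | Description | Required |
|---|---|---|
| type | The type property of the dataset must be set to: SparkObject | Yes |
| schema | The name of the schema. | No (if "query" in the activity source is specified) |
| table | The name of the table. | No (if "query" in the activity source is specified) |
| tableName | The name of the table, including the schema. This property is supported for backward compatibility. For new workloads, use schema and table. | No (if "query" in the activity source is specified) |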

Example:

    {
        "name": "SparkDataset",
        "properties": {
            "type": "SparkObject",
            "typeProperties": {},
            "schema": [],
            "linkedServiceName": {
                "referenceName": "<Spark linked service name>",
                "type": "LinkedServiceReference"
            }
        }
    }
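The example above leaves typeProperties empty, which works when the copy activity source supplies a query. As a sketch of the alternative, the dataset can name the schema and table directly by using the schema and table properties listed in the dataset properties table. The values in angle brackets are hypothetical placeholders.

```json
{
    "name": "SparkDataset",
    "properties": {
        "type": "SparkObject",
        "typeProperties": {
            "schema": "<schema name>",
            "table": "<table name>"
        },
        "schema": [],
        "linkedServiceName": {
            "referenceName": "<Spark linked service name>",
            "type": "LinkedServiceReference"
        }
    }
}
```

Note that the two schema keys are unrelated: the one inside typeProperties is the Spark schema name, while the top-level schema array describes the dataset's column structure.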

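Copy activity properties

For a full list of sections and properties available for defining activities, see the Pipelines article. This section lists the properties supported by the Spark source.

Spark as source

To copy data from Spark, set the source type in the copy activity to SparkSource. The following properties are supported in the copy activity source section:

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity source must be set to: SparkSource | Yes |
| query | Use a custom SQL query to read data. For example: "SELECT * FROM MyTable". | No (if "tableName" in the dataset is specified) |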

Example:

    "activities":[
        {
            "name": "CopyFromSpark",
            "type": "Copy",
            "inputs": [
                {
                    "referenceName": "<Spark input dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "outputs": [
                {
                    "referenceName": "<output dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "typeProperties": {
                "source": {
                    "type": "SparkSource",
                    "query": "SELECT * FROM MyTable"
                },
                "sink": {
                    "type": "<sink type>"
                }
            }
        }
    ]

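Data type mapping for Spark

When you copy data from and to Spark, the following interim data type mappings are used within the service. To learn how the copy activity maps the source schema and data types to the sink, see Schema and data type mappings.

| Spark data type | Interim service data type (for version 2.0) | Interim service data type (for version 1.0) |
|---|---|---|
| BooleanType | Boolean | Boolean |
| ByteType | Sbyte | Int16 |
| ShortType | Int16 | Int16 |
| IntegerType | Int32 | Int32 |
| LongType | Int64 | Int64 |
| FloatType | Single | Single |
| DoubleType | Double | Double |
| DateType | DateTime | DateTime |
| TimestampType | DateTimeOffset | DateTime |
| StringType | String | String |
| BinaryType | Byte[] | Byte[] |
| DecimalType | Decimal | Decimal; String (precision > 28) |
| ArrayType | String | String |
| StructType | String | String |
| MapType | String | String |
| TimestampNTZType | DateTime | DateTime |
| YearMonthIntervalType | String | Not supported. |
| DayTimeIntervalType | String | Not supported. |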

Lookup activity properties

To learn details about the properties, see the Lookup activity article.
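As a rough sketch of how a Lookup activity might read from this connector, the following reuses the SparkSource type and the query from the copy activity example. The activity name LookupFromSpark and the dataset placeholder are hypothetical, and the overall shape, including firstRowOnly, follows the general Lookup activity definition rather than anything specific to Spark, so check it against the Lookup activity article.

```json
{
    "name": "LookupFromSpark",
    "type": "Lookup",
    "typeProperties": {
        "source": {
            "type": "SparkSource",
            "query": "SELECT * FROM MyTable"
        },
        "dataset": {
            "referenceName": "<Spark input dataset name>",
            "type": "DatasetReference"
        },
        "firstRowOnly": true
    }
}
```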

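Spark connector lifecycle and upgrade

The following table shows the release stage and change logs for the different versions of the Spark connector:

| Version | Release stage | Change log |
|---|---|---|
| Version 1.0 | Removed | Not applicable. |
| Version 2.0 | GA version available | • enableServerCertificateValidation is supported. • The default value of enableSsl is true. • For the WindowsAzureHDInsightService authentication type, the default value of httpPath is /sparkhive2. • DecimalType is read as the Decimal data type. • TimestampType is read as the DateTimeOffset data type. • YearMonthIntervalType and DayTimeIntervalType are read as the String data type. • trustedCertPath, useSystemTrustStore, allowHostNameCNMismatch, and allowSelfSignedServerCert are not supported. • SharkServer and SharkServer2 are not supported for serverType. • Binary and SASL are not supported for thriftTransportProtocol. • The Username authentication type is not supported. |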

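Upgrade the Spark connector from version 1.0 to version 2.0

On the Edit linked service page, select 2.0 for the version, and configure the linked service by referring to the linked service properties for version 2.0.

The data type mapping for the Spark linked service version 2.0 differs from the mapping for version 1.0. To learn the latest data type mapping, see the data type mapping for Spark.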
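For a linked service that is defined in JSON, the upgrade can be pictured as a before-and-after pair. This is an illustrative sketch only: the starting definition is hypothetical, and the changes simply apply the version 2.0 change log by adding version and dropping properties that version 2.0 no longer supports. A hypothetical version 1.0 definition might look like this:

```json
{
    "name": "SparkLinkedService",
    "properties": {
        "type": "Spark",
        "typeProperties": {
            "host": "<host>",
            "port": "<port>",
            "serverType": "SparkThriftServer",
            "thriftTransportProtocol": "HTTP",
            "authenticationType": "UsernameAndPassword",
            "username": "<username>",
            "password": {
                "type": "SecureString",
                "value": "<password>"
            },
            "enableSsl": true,
            "useSystemTrustStore": true,
            "allowSelfSignedServerCert": false
        }
    }
}
```

Under the same assumptions, the version 2.0 equivalent sets version to 2.0, removes useSystemTrustStore and allowSelfSignedServerCert, and relies on enableServerCertificateValidation instead:

```json
{
    "name": "SparkLinkedService",
    "properties": {
        "type": "Spark",
        "version": "2.0",
        "typeProperties": {
            "host": "<host>",
            "port": "<port>",
            "serverType": "SparkThriftServer",
            "thriftTransportProtocol": "HTTP",
            "authenticationType": "UsernameAndPassword",
            "username": "<username>",
            "password": {
                "type": "SecureString",
                "value": "<password>"
            },
            "enableSsl": true,
            "enableServerCertificateValidation": true
        }
    }
}
```

A version 1.0 definition that uses SharkServer, SharkServer2, the Binary or SASL transport, or the Username authentication type has no direct version 2.0 equivalent, because the change log lists all of these as unsupported.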

Related content

For a list of data stores that the copy activity supports as sources and sinks, see Supported data stores.