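This article describes common issues with Azure Stream Analytics input connections, and how to troubleshoot and resolve them.

Many troubleshooting steps require resource logs to be enabled for your Stream Analytics job. If you haven't enabled resource logs, see Troubleshoot Azure Stream Analytics by using resource logs.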

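Input events not received by job

Verify your connectivity to inputs and outputs. Use the "Test Connection" button for each input and output.

Examine your input data:

Make sure that a time range is selected in the input preview. Choose "Select time range", enter a sample duration, and then test your query.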

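Malformed input events cause deserialization errors

Deserialization issues occur when the input stream of your Stream Analytics job contains malformed messages. For example, a missing parenthesis or brace in a JSON object, or an incorrectly formatted timestamp in the time field, can make a message malformed.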

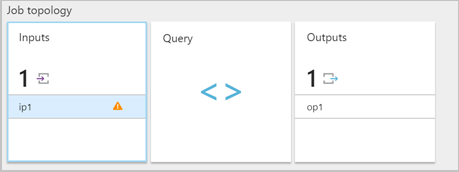

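When a Stream Analytics job receives a malformed message from an input, it drops the message and notifies you with a warning. A warning symbol appears on the "Inputs" tile of your Stream Analytics job. The warning symbol remains as long as the job is in a running state.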

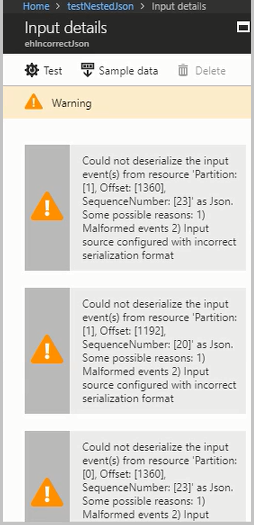

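Enable resource logs to view the details of the error and the message (payload) that caused it. Deserialization errors can occur for several reasons. For more information about specific deserialization errors, see Input data errors. If resource logs aren't enabled, a brief notification appears in the Azure portal.

If the message payload is larger than 32 KB or is in binary format, run the CheckMalformedEvents.cs code available in the GitHub samples repository. This code reads the partition ID and offset, and prints the data located at that offset.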

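Other common causes of input deserialization errors are:

- An integer column that has a value greater than 9223372036854775807.
- Strings instead of an array of objects or line-separated objects. Valid example: *[{'a':1}]*. Invalid example: *"'a' :1"*.
- Using an Event Hubs Capture blob in Avro format as input in your job.
- Two columns in a single input event that differ only in case. Example: *column1* and *COLUMN1*.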
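As a rough illustration of the causes listed above, the following Python sketch checks a JSON payload before it is sent to the input. It is not part of Stream Analytics; the names `find_input_risks` and `parse_events` are hypothetical, and the checks only cover top-level columns of JSON events (nested values and Avro input are not inspected).

```python
import json

# Largest integer value named in the list of common causes above.
INT64_MAX = 9223372036854775807


def parse_events(payload):
    """Parse a payload as a JSON array, a single object, or line-separated objects."""
    try:
        parsed = json.loads(payload)
    except json.JSONDecodeError:
        # Fall back to one JSON document per non-empty line.
        parsed = [json.loads(line) for line in payload.splitlines() if line.strip()]
    return parsed if isinstance(parsed, list) else [parsed]


def find_input_risks(payload):
    """Return a list of human-readable problems found in the payload (hypothetical helper)."""
    try:
        events = parse_events(payload)
    except json.JSONDecodeError as exc:
        return [f"malformed JSON: {exc.msg}"]

    risks = []
    for index, event in enumerate(events):
        if not isinstance(event, dict):
            # A bare string or number instead of an object.
            risks.append(f"event {index}: expected an object, got {type(event).__name__}")
            continue
        seen = {}  # lowercased column name -> first spelling seen
        for name, value in event.items():
            lowered = name.lower()
            if lowered in seen:
                risks.append(
                    f"event {index}: columns {seen[lowered]!r} and {name!r} differ only in case"
                )
            else:
                seen[lowered] = name
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, int) and not isinstance(value, bool) and value > INT64_MAX:
                risks.append(f"event {index}: column {name!r} exceeds {INT64_MAX}")
    return risks


if __name__ == "__main__":
    print(find_input_risks('[{"a": 1}]'))
    print(find_input_risks('{"column1": 1, "COLUMN1": 2}'))
```

An empty list means none of these particular checks fired; it does not guarantee that the job will deserialize the event.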

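Partition count changes

The partition count of an event hub can be changed. If it changes, you need to stop and restart the Stream Analytics job.

If the partition count of the event hub is changed while the job is running, the following error occurs: Microsoft.Streaming.Diagnostics.Exceptions.InputPartitioningChangedException.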

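Job exceeds maximum Event Hubs receivers

A best practice when using Event Hubs is to use multiple consumer groups for job scalability. For a specific input, the number of readers in the Stream Analytics job affects the number of readers in a single consumer group.

The precise number of receivers depends on internal implementation details of the scale-out topology logic and isn't exposed externally. The number of readers can change when a job is started or upgraded.

The following error message appears when the number of receivers exceeds the maximum. The message includes a list of existing connections made to Event Hubs under a consumer group. The tag AzureStreamAnalytics indicates that the connections come from Azure Stream Analytics.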

The streaming job failed: Stream Analytics job has validation errors: Job will exceed the maximum amount of Event Hubs Receivers.

The following information may be helpful in identifying the connected receivers: Exceeded the maximum number of allowed receivers per partition in a consumer group which is 5. List of connected receivers –

AzureStreamAnalytics_c4b65e4a-f572-4cfc-b4e2-cf237f43c6f0_1,

AzureStreamAnalytics_c4b65e4a-f572-4cfc-b4e2-cf237f43c6f0_1,

AzureStreamAnalytics_c4b65e4a-f572-4cfc-b4e2-cf237f43c6f0_1,

AzureStreamAnalytics_c4b65e4a-f572-4cfc-b4e2-cf237f43c6f0_1,

AzureStreamAnalytics_c4b65e4a-f572-4cfc-b4e2-cf237f43c6f0_1.
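When triaging a message like the one above, it can help to count how many of the listed receivers belong to Stream Analytics. The Python sketch below does that with regular expressions. The receiver name pattern (`AzureStreamAnalytics_<GUID>_<number>`) and the wording used to find the limit are inferred only from this sample message and are assumptions, not a documented format; `summarize_receivers` is a hypothetical helper name.

```python
import re
from collections import Counter

# Assumed pattern, inferred from the sample error message only.
RECEIVER = re.compile(r"AzureStreamAnalytics_[0-9a-fA-F-]{36}_\d+")
LIMIT = re.compile(r"per partition in a consumer group which is (\d+)")


def summarize_receivers(message):
    """Extract the stated limit and the Stream Analytics receivers from an error message."""
    limit_match = LIMIT.search(message)
    return {
        # None when the message does not state a limit in the assumed wording.
        "limit": int(limit_match.group(1)) if limit_match else None,
        # Receiver name -> number of times it appears in the list.
        "receivers": dict(Counter(RECEIVER.findall(message))),
    }


if __name__ == "__main__":
    sample = (
        "Exceeded the maximum number of allowed receivers per partition "
        "in a consumer group which is 5. List of connected receivers – "
        "AzureStreamAnalytics_c4b65e4a-f572-4cfc-b4e2-cf237f43c6f0_1, "
        "AzureStreamAnalytics_c4b65e4a-f572-4cfc-b4e2-cf237f43c6f0_1."
    )
    print(summarize_receivers(sample))
```

Receivers in the list that don't carry the AzureStreamAnalytics tag are ignored by this sketch; they would belong to other clients sharing the consumer group.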

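Note

When the number of readers changes during a job upgrade, transient warnings are written to the audit logs. Stream Analytics jobs recover from these transient issues automatically.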

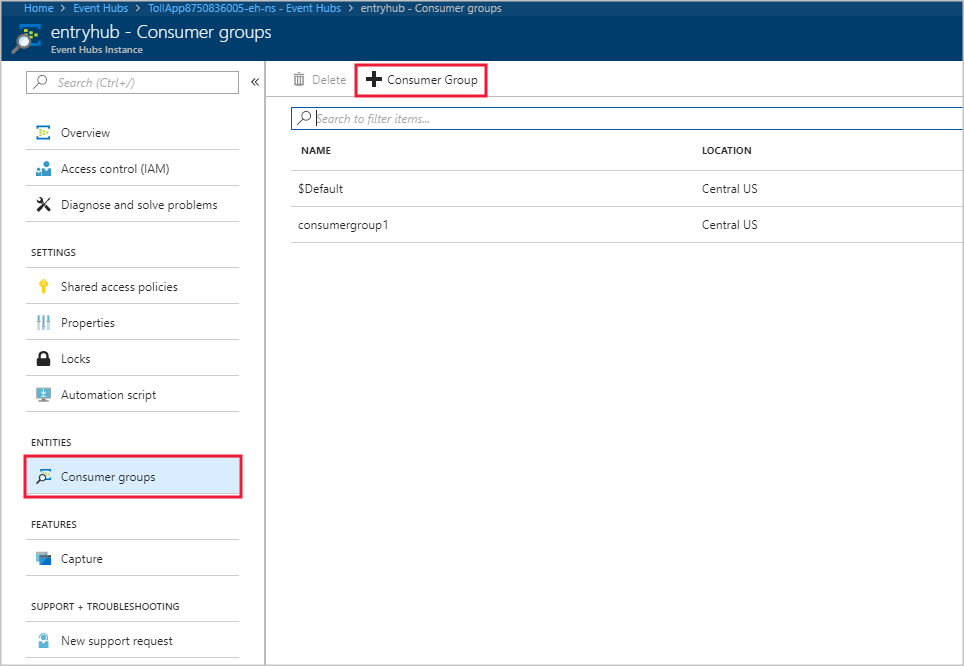

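To add a new consumer group in your Event Hubs instance, follow these steps:

1. Sign in to the Azure portal.

2. Locate your event hub.

3. Under the "Entities" heading, select "Event Hubs".

4. Select the event hub by name.

5. On the "Event Hubs Instance" page, under the "Entities" heading, select "Consumer groups". A consumer group named $Default is listed.

6. Select "+ Consumer Group" to add a new consumer group.

When you created the input in the Stream Analytics job to point to the event hub, you specified the consumer group there. Event Hubs uses $Default if no consumer group is specified. After you create the consumer group, edit the event hub input in the Stream Analytics job and specify the name of the new consumer group.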

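Readers per partition exceeds Event Hubs limit

If your streaming query syntax references the same event hub input resource multiple times, the job engine can use multiple readers per query from that same consumer group. When there are too many references to the same consumer group, the job can exceed the limit of five and throw an error. In those cases, you can divide the load further by using multiple inputs across multiple consumer groups.

Scenarios in which the number of readers per partition exceeds the Event Hubs limit of five include:

- Multiple SELECT statements: If you use multiple SELECT statements that refer to the same event hub input, each SELECT statement causes a new receiver to be created.
- UNION: When you use a UNION, there can be multiple inputs that refer to the same event hub and consumer group.
- SELF JOIN: When you use a SELF JOIN operation, the same event hub can be referenced multiple times.

The following best practices can help mitigate scenarios in which the number of readers per partition exceeds the Event Hubs limit of five.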

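Split your query into multiple steps by using a WITH clause

The WITH clause specifies a temporary named result set that a FROM clause in the query can reference. You define the WITH clause in the execution scope of a single SELECT statement.

For example, instead of this query: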

SELECT foo

INTO output1

FROM inputEventHub

SELECT bar

INTO output2

FROM inputEventHub

…

Use this query:

WITH data AS (

SELECT * FROM inputEventHub

)

SELECT foo

INTO output1

FROM data

SELECT bar

INTO output2

FROM data

…
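To make the difference between the two queries concrete, the Python sketch below counts how often a query text references each input directly in a FROM or JOIN clause, ignoring names defined as WITH steps. It is a naive text scan, not a parser for the Stream Analytics query language, and the count is only a proxy: the actual number of readers depends on internal details that aren't exposed. The helper name `count_input_references` is hypothetical.

```python
import re
from collections import Counter

# Names that follow FROM or JOIN are treated as references.
REFERENCE = re.compile(r"\b(?:FROM|JOIN)\s+([A-Za-z_]\w*)", re.IGNORECASE)
# Names followed by "AS (" are treated as WITH steps, not inputs.
STEP = re.compile(r"\b([A-Za-z_]\w*)\s+AS\s*\(", re.IGNORECASE)


def count_input_references(query):
    """Count direct references to each input, skipping WITH step names."""
    steps = {name.lower() for name in STEP.findall(query)}
    counts = Counter()
    for name in REFERENCE.findall(query):
        if name.lower() not in steps:
            counts[name] += 1
    return dict(counts)


if __name__ == "__main__":
    direct = """
    SELECT foo INTO output1 FROM inputEventHub
    SELECT bar INTO output2 FROM inputEventHub
    """
    with_step = """
    WITH data AS (
        SELECT * FROM inputEventHub
    )
    SELECT foo INTO output1 FROM data
    SELECT bar INTO output2 FROM data
    """
    print(count_input_references(direct))     # {'inputEventHub': 2}
    print(count_input_references(with_step))  # {'inputEventHub': 1}
```

In the WITH version the input is read once and both SELECT statements consume the shared step, which is why the direct reference count drops to one.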

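Ensure that inputs bind to different consumer groups

For queries in which three or more inputs are connected to the same event hub, create separate consumer groups. This requires creating additional Stream Analytics inputs.

Create separate inputs with different consumer groups

You can create separate inputs with different consumer groups for the same event hub. In the following UNION query, InputOne and InputTwo refer to the same event hub source. Any query can have separate inputs with different consumer groups; the UNION query is only one example.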

WITH

DataOne AS

(

SELECT * FROM InputOne

),

DataTwo AS

(

SELECT * FROM InputTwo

)

SELECT foo FROM DataOne

UNION

SELECT foo FROM DataTwo

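Readers per partition exceeds IoT Hub limit

Stream Analytics jobs use the built-in Event Hubs-compatible endpoint of Azure IoT Hub to connect to IoT Hub and read events from it. If the number of readers per partition exceeds the IoT Hub limit, you can resolve it with the solutions described for Event Hubs. You can create a consumer group for the built-in endpoint through the IoT Hub portal endpoint session or through the IoT Hub SDK.

Get help

For further assistance, try the Microsoft Q&A page for Azure Stream Analytics.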