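When you develop and run applications in Azure Kubernetes Service (AKS), there are a few key areas to consider. How you manage your application deployments can negatively affect the end-user experience of the services you provide.

This article focuses on running clusters and workloads from an application developer's perspective. For information about administrative best practices, see Cluster operator best practices for isolation and resource management in Azure Kubernetes Service (AKS).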

This article covers the following topics:

- Pod resource requests and limits.

- Ways to develop, debug, and deploy applications with Bridge to Kubernetes and Visual Studio Code.

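Define pod resource requests and limits

Best practice guidance

Set pod requests and limits on all pods in your YAML manifests. If the AKS cluster uses resource quotas and you don't define these values, your deployment may be rejected.

Use pod requests and limits to manage the compute resources within an AKS cluster. Pod requests and limits tell the Kubernetes scheduler which compute resources to assign to a pod.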

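Pod CPU/memory requests

Pod requests define the set amount of CPU and memory that the pod needs on a regular basis.

In your pod specifications, it's important to define these requests and limits based on the information above. If you don't include these values, the Kubernetes scheduler can't take into account the resources your applications need when it makes scheduling decisions.

Monitor the performance of your application to adjust pod requests. If you underestimate pod requests, your application may suffer degraded performance because the node is over-scheduled. If you overestimate requests, your application may be harder to schedule.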

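Pod CPU/memory limits

Pod limits set the maximum amount of CPU and memory that a pod can use. Memory limits define which pods should be killed when a node becomes unstable because of insufficient resources. Without proper limits set, pods are killed until the resource pressure is lifted. A pod may periodically exceed its CPU limit, but it isn't killed for doing so.

Pod limits define when a pod has lost control of its resource consumption. When a pod exceeds the limit, it's marked for killing. This behavior maintains node health and minimizes the impact on other pods that share the node. If you don't set a pod limit, it defaults to the highest available value on the given node.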
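One way a cluster operator might guard against pods that ship without limits is a namespace-level LimitRange, a standard Kubernetes object that fills in default requests and limits for containers that omit them. The sketch below is illustrative and isn't part of the original guidance: the object name, the `dev-apps` namespace, and every quantity are assumptions, chosen only to mirror the values used in this article's pod example.

```yaml
# Illustrative sketch only: names and quantities are hypothetical.
apiVersion: v1
kind: LimitRange
metadata:
  name: container-defaults     # hypothetical name
  namespace: dev-apps          # hypothetical namespace
spec:
  limits:
  - type: Container
    defaultRequest:            # applied when a container sets no request
      cpu: 100m
      memory: 128Mi
    default:                   # applied when a container sets no limit
      cpu: 250m
      memory: 256Mi
```

Defaults like these are a safety net rather than a substitute for per-application values, which should still come from monitoring the workload.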

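Avoid setting a pod limit higher than your nodes can support. Each AKS node reserves a set amount of CPU and memory for the core Kubernetes components. If the limit is too high, your application may try to consume so many resources on the node that other pods can't run successfully.

Monitor the performance of your application at different times of the day or week. Determine the peak demand times, and align pod limits to the resources required to meet that maximum demand.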

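Important

In your pod specifications, define these requests and limits based on the information above. If you don't include these values, the Kubernetes scheduler can't account for the resources your applications require when it makes scheduling decisions.

If the scheduler places a pod on a node with insufficient resources, application performance is degraded. Cluster administrators must set resource quotas on any namespace that should require resource requests and limits. For more information, see Resource quotas on AKS clusters.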
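As a rough illustration of the kind of quota a cluster administrator might apply, the following ResourceQuota caps the total CPU and memory that pods in one namespace can request and be limited to. The quota name, the `dev-apps` namespace, and all quantities are hypothetical. Once a quota like this tracks `requests.*` and `limits.*`, pods in that namespace that omit those values can be rejected, which is why the guidance above asks you to set them on every pod.

```yaml
# Illustrative sketch only: names and quantities are hypothetical.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-quota          # hypothetical name
  namespace: dev-apps          # hypothetical namespace
spec:
  hard:
    requests.cpu: "4"          # sum of CPU requests across all pods
    requests.memory: 8Gi       # sum of memory requests across all pods
    limits.cpu: "8"            # sum of CPU limits across all pods
    limits.memory: 16Gi        # sum of memory limits across all pods
```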

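When you define a CPU request or limit, the value is measured in CPU units.

- 1.0 CPU equates to one underlying virtual CPU core on the node.

- The same measurement is used for GPUs.

- You can define fractions measured in millicores. For example, 100m is 0.1 of an underlying vCPU core.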
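To make the unit notation concrete, this fragment of a container spec writes CPU quantities in both the millicore and the decimal form. It's a sketch rather than a complete manifest, and the specific values are assumptions picked for illustration.

```yaml
# Fragment of a container spec; values are illustrative.
resources:
  requests:
    cpu: 100m        # 100 millicores, that is 0.1 of a vCPU core
  limits:
    cpu: "0.25"      # decimal form, equivalent to 250m
```

The millicore form is usually easier to read for values below one core, and it avoids ambiguity over how many decimal places are meaningful.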

In the following basic example for a single NGINX pod, the pod requests 100m of CPU time and 128Mi of memory. The resource limits for the pod are set to 250m of CPU and 256Mi of memory.

    kind: Pod
    apiVersion: v1
    metadata:
      name: mypod
    spec:
      containers:
      - name: mypod
        image: mcr.azk8s.cn/oss/nginx/nginx:1.15.5-alpine
        resources:
          requests:
            cpu: 100m
            memory: 128Mi
          limits:
            cpu: 250m
            memory: 256Mi

For more information about resource measurements and assignments, see Managing compute resources for containers.

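Develop and debug applications against an AKS cluster

Best practice guidance

Development teams should deploy and debug against an AKS cluster by using Bridge to Kubernetes.

With Bridge to Kubernetes, you can develop, debug, and test applications directly against an AKS cluster. Developers within a team collaborate to build and test throughout the application lifecycle. You can continue to use existing tools such as Visual Studio or Visual Studio Code with the Bridge to Kubernetes extension.

An integrated development and test process with Bridge to Kubernetes reduces the need for local test environments such as minikube. Instead, you develop and test against an AKS cluster, even a secured and isolated one.

Note

Bridge to Kubernetes is intended for use with applications that run on Linux pods and nodes.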

Use the Visual Studio Code (VS Code) extension for Kubernetes

Best practice guidance

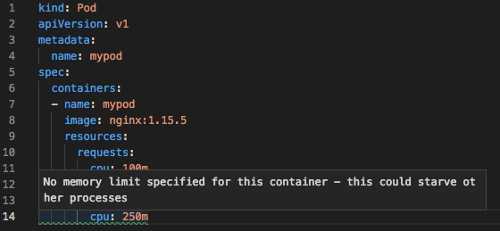

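Install and use the VS Code extension for Kubernetes when you write YAML manifests. You can also use the extension for an integrated deployment solution, which may help application owners who interact with the AKS cluster infrequently.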

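The Visual Studio Code extension for Kubernetes helps you develop and deploy applications to AKS. The extension provides the following features:

- IntelliSense for Kubernetes resources, Helm charts, and templates.

- The ability to browse, deploy, and edit Kubernetes resources from within VS Code.

- An IntelliSense check for resource requests or limits being set in pod specifications.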

Next steps

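This article focused on running clusters and workloads from an application developer's perspective. For information about administrative best practices, see Cluster operator best practices for isolation and resource management in Azure Kubernetes Service (AKS).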

To implement some of these best practices, see Develop with Bridge to Kubernetes.