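Data ingestion involves loading data into a table in your cluster. Azure Data Explorer ensures data validity, converts formats as needed, and performs manipulations such as schema matching, organization, indexing, encoding, and compression. Once ingested, the data is available for query.

Azure Data Explorer offers one-time ingestion or the establishment of a continuous ingestion pipeline, using either streaming or queued ingestion. To determine which is right for you, see One-time data ingestion and Continuous data ingestion.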

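Note

- Data is persisted in storage according to the set retention policy.

- Ingestion fails if an individual string value in a record, or the entire record, exceeds the allowed data limit of 64 MB.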

一次性数据引入

一次性引入有助于传输历史数据、填补缺失数据以及原型制作和数据分析的初始阶段。 此方法有助于快速实现数据集成,而无需持续的管道承诺。

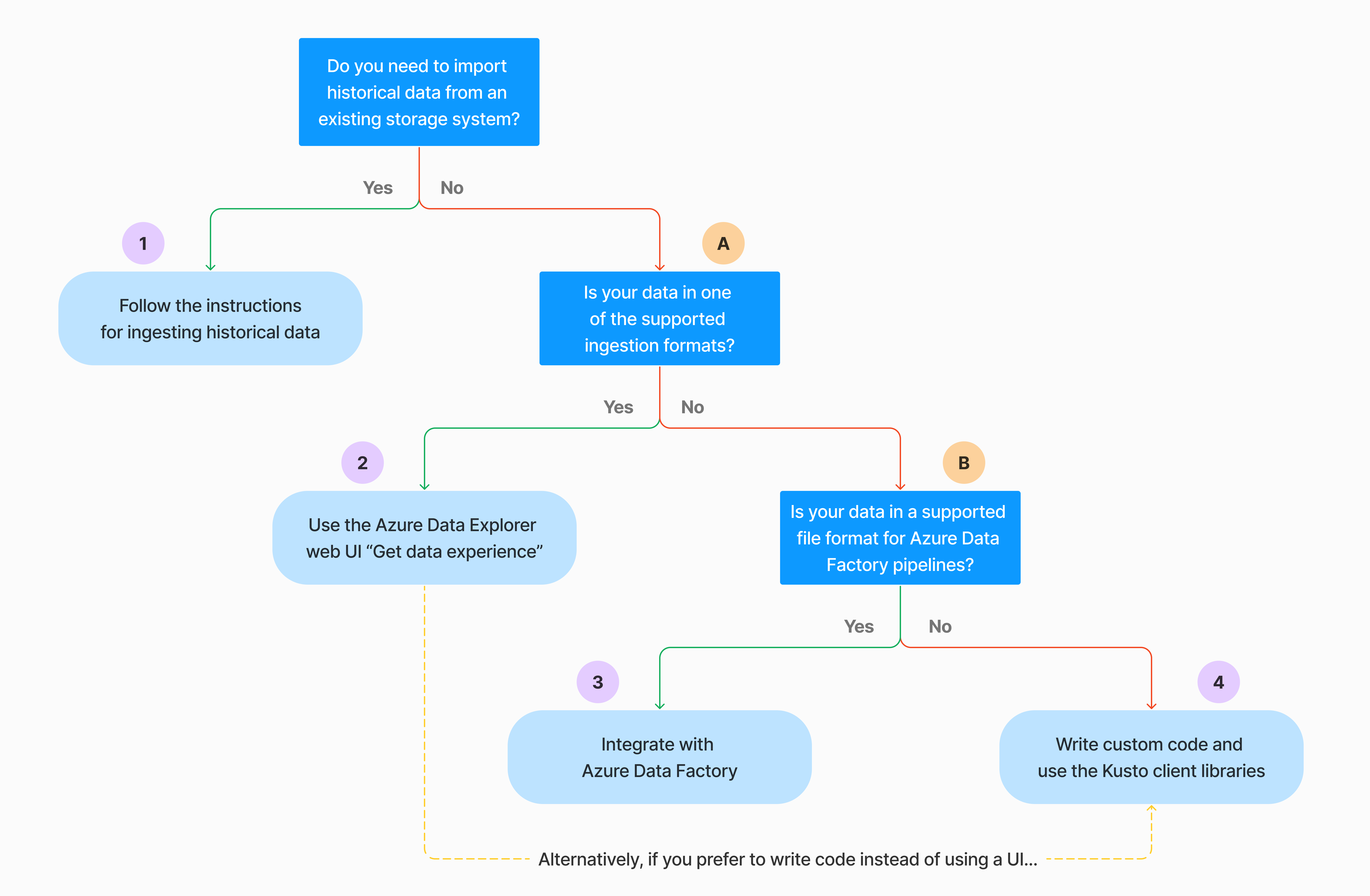

有多种方法可以执行一次性数据引入。 使用以下决策树来确定最适合你的用例的选项:

有关详细信息,请参阅相关文档:

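| Callout | Relevant documentation |
|---|---|
| | See the data formats supported by Azure Data Explorer for ingestion. |
| | See the file formats supported for Azure Data Factory pipelines. |
| | To import data from an existing storage system, see How to ingest historical data into Azure Data Explorer. |
| | In the Azure Data Explorer web UI, you can get data from a local file, Amazon S3, or Azure Storage. |
| | To integrate with Azure Data Factory, see Copy data to Azure Data Explorer by using Azure Data Factory. |
| | Kusto client libraries are available for C#, Python, Java, JavaScript, TypeScript, and Go. You can write code to manipulate your data and then use the Kusto Ingest library to ingest it into your Azure Data Explorer table. The data must be in one of the supported formats before ingestion. |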

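Continuous data ingestion

Continuous ingestion excels in situations that demand immediate insights from live data. For example, continuous ingestion is useful for monitoring systems, log and event data, and real-time analytics.

Continuous data ingestion involves setting up an ingestion pipeline with either streaming or queued ingestion:

- Streaming ingestion: This method ensures near-real-time latency for small sets of data per table. Data is ingested in micro-batches from a streaming source, initially placed in the row store, and then transferred to column store extents. For more information, see Configure streaming ingestion.

- Queued ingestion: This method is optimized for high ingestion throughput. Data is batched based on ingestion properties, and the small batches are then merged and optimized for fast query results. By default, a batch is sealed when it reaches 5 minutes, 1,000 items, or a total size of 1 GB, whichever comes first. The data size limit for a queued ingestion command is 6 GB. This method uses retry mechanisms to mitigate transient failures and follows "at least once" messaging semantics to ensure that no messages are lost in the process. For more information about queued ingestion, see Ingestion batching policy.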
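To make the default batching limits concrete, here is a minimal Python sketch of a batcher that seals a batch as soon as any of the three limits is reached. It is a toy model for illustration only, not the service's implementation: the `Batcher` class, its method names, and the constants' names are all assumptions, and only the limit values come from the text.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Default limits quoted in the text; the constant names are hypothetical.
MAX_BATCH_AGE_SECONDS = 5 * 60
MAX_BATCH_ITEMS = 1000
MAX_BATCH_BYTES = 1024 ** 3  # 1 GB


@dataclass
class Batcher:
    """Toy model: seals the open batch as soon as any limit is reached."""
    opened_at: Optional[float] = None
    items: List[int] = field(default_factory=list)
    total_bytes: int = 0
    sealed: List[List[int]] = field(default_factory=list)

    def add(self, size_bytes: int, now: float) -> None:
        # Seal a batch that has aged out before accepting the new item.
        self.tick(now)
        if self.opened_at is None:
            self.opened_at = now
        self.items.append(size_bytes)
        self.total_bytes += size_bytes
        if (len(self.items) >= MAX_BATCH_ITEMS
                or self.total_bytes >= MAX_BATCH_BYTES):
            self._seal()

    def tick(self, now: float) -> None:
        """Seal the open batch if it has reached the time limit."""
        if (self.opened_at is not None
                and now - self.opened_at >= MAX_BATCH_AGE_SECONDS):
            self._seal()

    def _seal(self) -> None:
        # Move the open batch to the sealed list and start a fresh one.
        self.sealed.append(list(self.items))
        self.items, self.total_bytes, self.opened_at = [], 0, None
```

In this model, a steady trickle of small items is sealed by the time limit, a burst of many small items by the item count, and a few large files by the size limit.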

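Note

For most scenarios, we recommend queued ingestion because it is the more performant option.

Note

Queued ingestion buffers data reliably for up to 7 days. However, if the cluster lacks sufficient capacity to complete ingestion within this retention window, the data is dropped once the 7-day limit is exceeded. To avoid data loss and ingestion delays, make sure your cluster has enough resources to process the queued data within 7 days.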
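As a rough way to reason about the 7-day window, the following sketch estimates how long newly queued data would wait behind an existing backlog. It assumes a simple first-in, first-out queue drained at a constant rate, which is a simplification; the function names and the example figures are hypothetical.

```python
QUEUE_RETENTION_HOURS = 7 * 24  # 7-day buffering window from the text


def queue_delay_hours(backlog_gb: float, ingest_rate_gb_per_hour: float) -> float:
    """Hours until data queued now is ingested, assuming FIFO at a constant rate."""
    if ingest_rate_gb_per_hour <= 0:
        return float("inf")
    return backlog_gb / ingest_rate_gb_per_hour


def within_retention(backlog_gb: float, ingest_rate_gb_per_hour: float) -> bool:
    """True if the backlog drains before the 7-day limit is reached."""
    return queue_delay_hours(backlog_gb, ingest_rate_gb_per_hour) <= QUEUE_RETENTION_HOURS
```

For example, under these assumptions a 2,000 GB backlog drained at 10 GB per hour takes 200 hours, which exceeds the 168-hour window, so some data would be at risk of being dropped.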

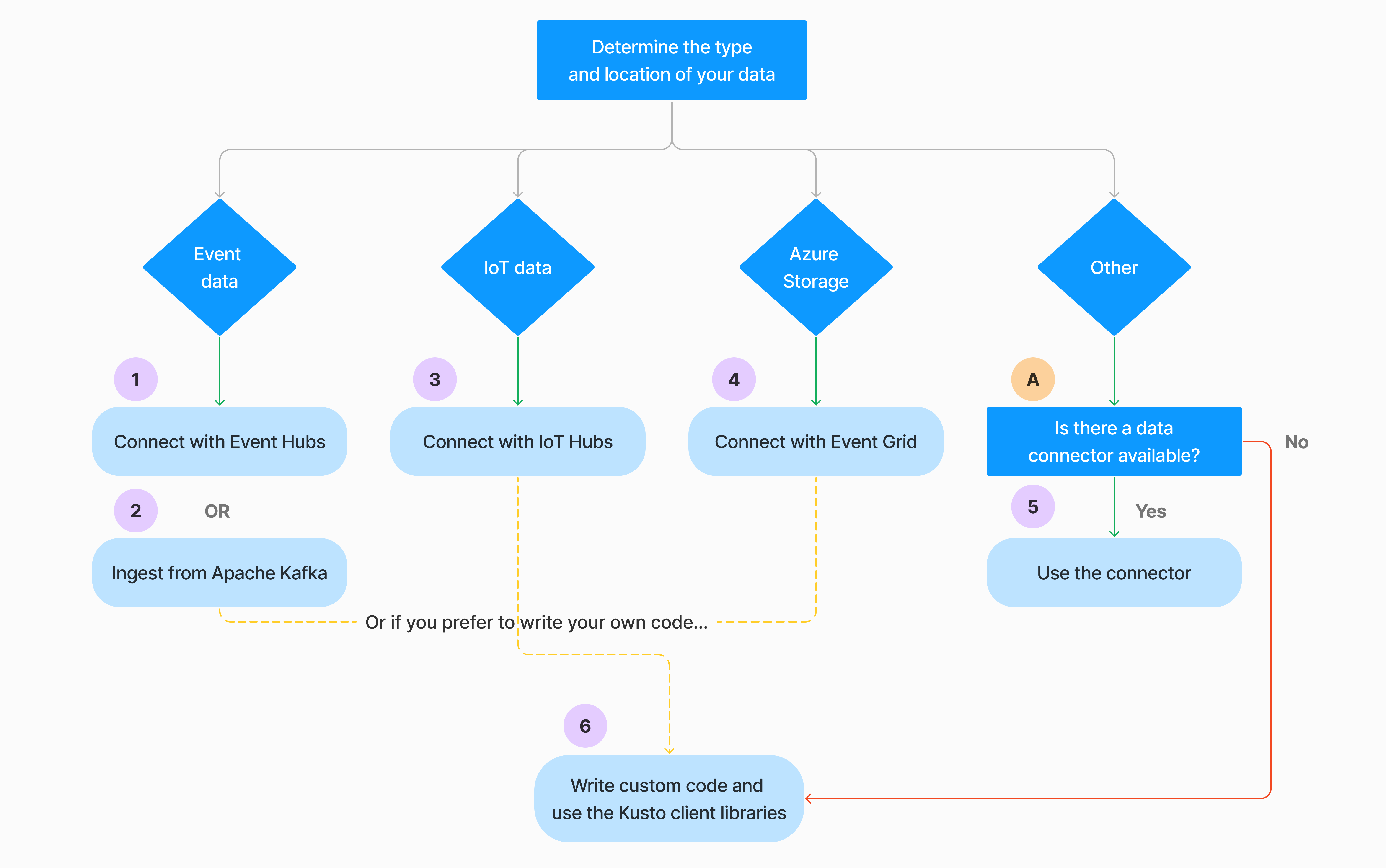

可通过多种方式配置连续数据引入。 使用以下决策树来确定最适合你的用例的选项:

有关详细信息,请参阅相关文档:

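| Callout | Relevant documentation |
|---|---|
| | For a list of connectors, see Connectors overview. |
| | Create an Event Hubs data connection. Integration with Event Hubs provides services such as throttling, retries, monitoring, and alerts. |
| | Ingest data from Apache Kafka, a distributed streaming platform for building real-time streaming data pipelines. |
| | Create an IoT Hub data connection. Integration with IoT Hub provides services such as throttling, retries, monitoring, and alerts. |
| | Create an Event Grid data connection. Integration with Event Grid provides services such as throttling, retries, monitoring, and alerts. |
| | See the guidance for the relevant connector, such as Apache Spark, Apache Kafka, Azure Cosmos DB, Fluent Bit, Logstash, Open Telemetry, Power Automate, Splunk, and more. For more information, see Connectors overview. |
| | Kusto client libraries are available for C#, Python, Java, JavaScript, TypeScript, and Go. You can write code to manipulate your data and then use the Kusto Ingest library to ingest it into your Azure Data Explorer table. The data must be in one of the supported formats before ingestion. |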

Note

Not all ingestion methods support streaming ingestion. For support details, check the documentation for the specific ingestion method.

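Direct ingestion with management commands

Azure Data Explorer offers the following ingestion management commands, which ingest data directly into your cluster instead of using the data management service. Use them only for exploration and prototyping, not in production or high-volume scenarios.

- Ingest inline: The .ingest inline command contains the data to ingest as part of the command text itself. This method is intended for ad hoc testing.

- Ingest from query: The .set, .append, .set-or-append, or .set-or-replace commands indirectly specify the data to ingest as the results of a query or a command.

- Ingest from storage: The .ingest into command gets the data to ingest from external storage, such as Azure Blob Storage, that is accessible by your cluster and pointed to by the command.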
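The three command families above can be illustrated by building their command text. The following Python sketch only assembles strings in the general shapes `.ingest inline into table T <| data`, `.set-or-append T <| query`, and `.ingest into table T ('uri') with (format='csv')`. The helper names, table names, and storage URI are hypothetical, and the full syntax and options should be checked against the management command reference.

```python
def ingest_inline(table: str, rows: list[str]) -> str:
    """Build an .ingest inline command; the data is part of the command text."""
    return f".ingest inline into table {table} <|\n" + "\n".join(rows)


def ingest_from_query(table: str, query: str, mode: str = "set-or-append") -> str:
    """Build a .set, .append, .set-or-append, or .set-or-replace command."""
    allowed = {"set", "append", "set-or-append", "set-or-replace"}
    if mode not in allowed:
        raise ValueError(f"mode must be one of {sorted(allowed)}")
    return f".{mode} {table} <|\n{query}"


def ingest_from_storage(table: str, blob_uri: str, data_format: str = "csv") -> str:
    """Build an .ingest into command that points at external storage."""
    return f".ingest into table {table} ('{blob_uri}') with (format='{data_format}')"
```

For instance, `ingest_inline("Purchases", ["Shoes,1000", "Wide Shoes,50"])` returns a command whose payload is the two CSV rows, which suits quick experiments but not production loads.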

Note

If ingestion fails, it is attempted again and retried for up to 48 hours, using exponential backoff to determine the wait time between attempts.
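The retry behavior described in this note can be sketched as a schedule of waits. Only the 48-hour window comes from the text; the initial delay, the multiplier, and the cap on a single wait are illustrative assumptions, because the source does not state them.

```python
RETRY_WINDOW_SECONDS = 48 * 60 * 60  # 48-hour retry window from the text


def backoff_schedule(initial_delay: float = 60.0,
                     factor: float = 2.0,
                     max_delay: float = 4 * 60 * 60,
                     window: float = RETRY_WINDOW_SECONDS) -> list[float]:
    """Return the wait before each retry until the retry window is used up.

    initial_delay, factor, and max_delay are assumed values, not service settings.
    """
    waits, elapsed, delay = [], 0.0, initial_delay
    while elapsed + delay <= window:
        waits.append(delay)
        elapsed += delay
        # Grow the wait exponentially, but never beyond the per-wait cap.
        delay = min(delay * factor, max_delay)
    return waits
```

With a small window the shape is easy to see: `backoff_schedule(initial_delay=1, factor=2, max_delay=100, window=10)` yields waits of 1, 2, and 4 seconds, and stops because a further 8-second wait would exceed the window.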

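Compare ingestion methods

The following table compares the main ingestion methods:

| Ingestion name | Data types | Maximum file size | Streaming, queued, direct | Most common scenarios | Considerations |
|---|---|---|---|---|---|
| Apache Spark connector | Every format supported by the Spark environment | Unlimited | Queued | Existing pipelines; preprocessing on Spark before ingestion; a fast way to create a safe (Spark) streaming pipeline from the various sources the Spark environment supports. | Consider the cost of the Spark cluster. For batch writes, compare with the Azure Data Explorer data connection for Event Grid. For Spark streaming, compare with the data connection for Event Hubs. |
| Azure Data Factory (ADF) | Supported data formats | Unlimited. Inherits ADF restrictions. | Queued or per ADF trigger | Handles formats that are otherwise unsupported, such as Excel and XML, and can copy large files from over 90 sources, from on-premises to cloud | This method takes relatively more time until data is ingested. ADF uploads all data to memory and then begins ingestion. |
| Event Grid | Supported data formats | 6 GB uncompressed | Queued | Continuous ingestion from Azure Storage; external data in Azure Storage | Ingestion can be triggered by blob renaming or blob creation actions |
| Event Hubs | Supported data formats | N/A | Queued, streaming | Messages, events | |
| Get data experience | *SV, JSON | 1 GB uncompressed | Queued or direct ingestion | One-time ingestion, creating a table schema, defining continuous ingestion with Event Grid, bulk ingestion from a container (up to 5,000 blobs; no limit when using historical ingestion) | |
| IoT Hub | Supported data formats | N/A | Queued, streaming | IoT messages, IoT events, IoT properties | |
| Kafka connector | Avro, ApacheAvro, JSON, CSV, Parquet, and ORC | Unlimited. Inherits Java restrictions. | Queued, streaming | Existing pipelines; high-volume consumption from the source. | The preference can be determined by the existing use of multiple producer or consumer services, or by the desired level of service management. |
| Kusto client libraries | Supported data formats | 1 GB uncompressed | Queued, streaming, direct | Writing your own code according to organizational needs | Programmatic ingestion is optimized to reduce ingestion costs (COGs) by minimizing storage transactions during and after the ingestion process. |
| LightIngest | Supported data formats | 1 GB uncompressed | Queued or direct ingestion | Data migration, historical data with adjusted ingestion timestamps, bulk ingestion | Case-sensitive and space-sensitive |
| Logic Apps | Supported data formats | 1 GB uncompressed | Queued | Automating pipelines | |
| LogStash | JSON | Unlimited. Inherits Java restrictions. | Queued | Existing pipelines; uses the mature, open-source nature of Logstash for high-volume consumption from the inputs. | The preference can be determined by the existing use of multiple producer or consumer services, or by the desired level of service management. |

For information on other connectors, see Connectors overview.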

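Permissions

The following list describes the permissions required for various ingestion scenarios:

- To create a new table, you must have at least Database User permissions.

- To ingest data into an existing table without changing its schema, you must have at least Table Ingestor permissions.

- To change the schema of an existing table, you must have at least Table Admin or Database Admin permissions.

The following table describes the permissions required for each ingestion method:

| Ingestion method | Permissions |
|---|---|
| One-time ingestion | At least Table Ingestor |
| Continuous streaming ingestion | At least Table Ingestor |
| Continuous queued ingestion | At least Table Ingestor |
| Direct inline ingestion | At least Table Ingestor and also Database Viewer |
| Direct ingestion from query | At least Table Ingestor and also Database Viewer |
| Direct ingestion from storage | At least Table Ingestor |

For more information, see Kusto role-based access control.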

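The ingestion process

The following steps outline the general ingestion process:

1. Set retention policy (optional): If the database retention policy isn't suitable for your needs, override it at the table level. For more information, see Retention policy.

2. Create a table: If you're using the Get data experience, you can create a table as part of the ingestion flow. Otherwise, create a table before ingestion in the Azure Data Explorer web UI or with the .create table command.

3. Create a schema mapping: Schema mappings help bind source data fields to destination table columns. Different types of mappings are supported, including row-oriented formats such as CSV, JSON, and AVRO, and column-oriented formats such as Parquet. In most methods, mappings can also be precreated on the table.

4. Set update policy (optional): Certain data formats, such as Parquet, JSON, and Avro, enable straightforward ingest-time transformations. For more intricate processing during ingestion, use an update policy. This policy automatically runs extractions and transformations on data ingested into the original table, then ingests the modified data into one or more destination tables.

5. Ingest data: Use your preferred ingestion tool, connector, or method to ingest the data.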
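The preparatory steps above map onto management commands that can be generated ahead of ingestion. The following Python sketch builds the command text for a hypothetical table. The helper names, table name, columns, mapping name, JSON paths, and 30-day retention period are assumptions for illustration, and the command forms should be checked against the management command reference before use.

```python
import json


def create_table(table: str, columns: dict[str, str]) -> str:
    """Build a .create table command from a column-name to type mapping."""
    cols = ", ".join(f"{name}: {kql_type}" for name, kql_type in columns.items())
    return f".create table {table} ({cols})"


def override_retention(table: str, soft_delete_days: int) -> str:
    """Build a table-level retention override (soft-delete period in days)."""
    return f".alter-merge table {table} policy retention softdelete = {soft_delete_days}d"


def create_json_mapping(table: str, mapping_name: str, paths: dict[str, str]) -> str:
    """Build a JSON ingestion mapping that binds source paths to table columns."""
    mapping = [{"column": col, "Properties": {"Path": path}}
               for col, path in paths.items()]
    return (f'.create table {table} ingestion json mapping '
            f'"{mapping_name}" \'{json.dumps(mapping)}\'')


def setup_commands(table: str, columns: dict[str, str], mapping_name: str,
                   paths: dict[str, str], soft_delete_days: int) -> list[str]:
    """Return the commands in an order that can run against an empty database."""
    # The table is created first here, because a table-level policy and a
    # mapping both need an existing table to attach to.
    return [
        create_table(table, columns),
        override_retention(table, soft_delete_days),
        create_json_mapping(table, mapping_name, paths),
    ]
```

Calling `setup_commands("MyLogs", {"Timestamp": "datetime", "Message": "string"}, "MyLogsMapping", {"Timestamp": "$.timestamp", "Message": "$.message"}, 30)` returns three command strings that could then be run through the web UI or a client library before the actual ingestion step.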