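When you run applications in Azure Kubernetes Service (AKS), you might need to actively increase or decrease the amount of compute resources in your cluster. As you change the number of application instances you run, you might need to change the number of underlying Kubernetes nodes. You might also need to provision a large number of additional application instances.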

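This article introduces the core concepts for scaling applications in AKS: manually scaling pods or nodes, the Horizontal Pod Autoscaler, the cluster autoscaler, Kubernetes Event-driven Autoscaling (KEDA), node autoprovisioning, control plane scaling and safeguards, and integration with Azure Container Instances (ACI).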

Manually scale pods or nodes

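You can manually scale replicas (pods) and nodes to test how your application responds to changes in available resources and state. Manually scaling resources lets you define a set amount of resources to use, such as the number of nodes, to maintain a fixed cost. To scale manually, you define the replica or node count. The Kubernetes API then schedules the creation of additional pods or the draining of nodes based on that count.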

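When you scale down nodes, the Kubernetes API calls the relevant Azure Compute API tied to the compute type your cluster uses. For example, for clusters built on Virtual Machine Scale Sets, the Virtual Machine Scale Sets API determines which nodes to remove. To learn more about how nodes are selected for removal on scale-down, see the Virtual Machine Scale Sets FAQ.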

To get started with manually scaling nodes, see Manually scale nodes in an AKS cluster. To manually scale the number of pods, see the kubectl scale command.

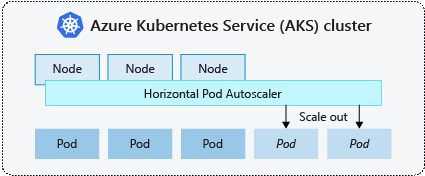

Horizontal Pod Autoscaler

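Kubernetes uses the Horizontal Pod Autoscaler (HPA) to monitor resource demand and automatically scale the number of pods. By default, the HPA checks the Metrics API every 15 seconds for any required changes in replica count, while the Metrics API retrieves data from the kubelet every 60 seconds. As a result, the HPA is effectively updated every 60 seconds. When a change is required, the number of replicas is adjusted accordingly. The HPA works with AKS clusters that have deployed the Metrics Server for Kubernetes 1.8 and later.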

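When you configure the HPA for a given deployment, you define the minimum and maximum number of replicas that can run. You also define the metric to monitor and base scaling decisions on, such as CPU usage.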
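
The replica calculation behind those settings can be sketched in Python. This is a minimal sketch of the core formula described in the upstream Kubernetes HPA documentation, `desired = ceil(current × currentMetric / targetMetric)`, assuming a single resource metric, the default tolerance of 0.1, and clamping to the configured minimum and maximum. It ignores unready pods, missing metrics, and multiple metrics, and the function name is hypothetical.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA core formula for one resource metric (sketch)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below the configured minimum or above the maximum.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 50% CPU target.
print(hpa_desired_replicas(4, 90, 50, min_replicas=2, max_replicas=10))
```

Here the metric ratio is 1.8, so the sketch asks for ceil(7.2) = 8 replicas; a ratio that rounds up past the maximum is capped at `max_replicas`.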

To get started with the Horizontal Pod Autoscaler in AKS, see Autoscale pods in AKS.

Cooldown of scaling events

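Because the HPA is effectively updated every 60 seconds, a previous scale event might not have completed before another check is made. As a result, the HPA could change the number of replicas again before the new replicas from the previous scale event have started receiving application workload and resource demand has adjusted accordingly.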

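To minimize these race conditions, a delay value is set. This value defines how long the HPA must wait after one scale event before it can trigger another. The delay gives the new replica count time to take effect and the Metrics API time to reflect the redistributed workload. Starting with Kubernetes 1.12, scale-up events aren't delayed. However, the default delay for scale-down events is 5 minutes.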
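
The scale-down delay can be pictured as a stabilization window: instead of acting on the latest recommendation, the HPA acts on the highest recommendation seen within the window, so a brief dip in load doesn't remove replicas. The following is a minimal sketch assuming the 5-minute (300-second) default; the class name is hypothetical and timestamps are plain seconds. Because a recommendation higher than everything in the window is returned immediately, scale-up isn't delayed in this sketch either.

```python
from collections import deque
from typing import Deque, Tuple


class ScaleDownStabilizer:
    """Sketch of a scale-down stabilization window (hypothetical class)."""

    def __init__(self, window_seconds: float = 300.0) -> None:
        self.window_seconds = window_seconds
        # (timestamp, recommended replicas), oldest first.
        self._history: Deque[Tuple[float, int]] = deque()

    def stabilize(self, now: float, recommendation: int) -> int:
        self._history.append((now, recommendation))
        # Discard recommendations older than the window.
        while self._history and now - self._history[0][0] > self.window_seconds:
            self._history.popleft()
        # Act on the highest recommendation still inside the window.
        return max(r for _, r in self._history)


s = ScaleDownStabilizer()
print(s.stabilize(0, 10))   # 10
print(s.stabilize(60, 4))   # 10: the t=0 recommendation is still in the window
print(s.stabilize(301, 4))  # 4: the t=0 recommendation has expired
```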

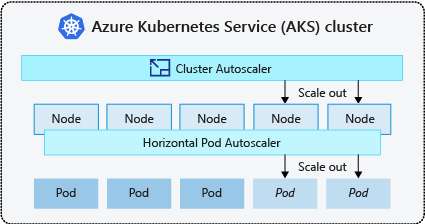

Cluster autoscaler

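To respond to changing pod demands, the Kubernetes cluster autoscaler adjusts the number of nodes based on the compute resources requested in the node pool. By default, the cluster autoscaler checks the Metrics API server every 10 seconds for any required changes in node count. If the cluster autoscaler determines that a change is required, the number of nodes in your AKS cluster is increased or decreased accordingly. The cluster autoscaler works with Kubernetes RBAC-enabled AKS clusters that run Kubernetes 1.10.x or later.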

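The cluster autoscaler is typically used alongside the Horizontal Pod Autoscaler. When combined, the HPA increases or decreases the number of pods based on application demand, and the cluster autoscaler adjusts the number of nodes to run those pods.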

To get started with the cluster autoscaler in AKS, see Cluster autoscaler on AKS.

Scale-out events

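If a node doesn't have enough compute resources to run a requested pod, that pod can't progress through the scheduling process. The pod can't start until more compute resources are available in the node pool.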

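When the cluster autoscaler notices pods that can't be scheduled because of node pool resource constraints, it increases the number of nodes in the node pool to provide additional compute resources. Once those nodes are successfully deployed and available in the node pool, the pods are scheduled to run on them.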
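
To make the scale-out decision concrete, the following sketch estimates how many new nodes the pending pods need. It's a deliberate simplification, not the cluster autoscaler's algorithm: it packs pending pods' CPU requests (in millicores) onto identical new nodes with first-fit-decreasing bin packing and caps the result at the node pool's maximum. The real autoscaler simulates the scheduler against a template node and also considers memory, affinity, and taints. The function name and parameters are hypothetical.

```python
from typing import List


def extra_nodes_needed(
    pending_cpu_millicores: List[int],
    node_allocatable_millicores: int,
    current_nodes: int,
    max_nodes: int,
) -> int:
    """Estimate new nodes for pending pods (CPU-only, first-fit-decreasing)."""
    free: List[int] = []  # remaining CPU on each hypothetical new node
    for request in sorted(pending_cpu_millicores, reverse=True):
        if request > node_allocatable_millicores:
            continue  # can't fit on this node size; adding nodes won't help
        for i, remaining in enumerate(free):
            if request <= remaining:
                free[i] -= request
                break
        else:
            # No existing new node has room: open another one.
            free.append(node_allocatable_millicores - request)
    # Respect the node pool's configured maximum node count.
    return min(len(free), max(0, max_nodes - current_nodes))


pending = [1500, 1500, 1000, 500, 500]
print(extra_nodes_needed(pending, 1900, current_nodes=3, max_nodes=10))  # 4
print(extra_nodes_needed(pending, 1900, current_nodes=3, max_nodes=5))   # 2
```

When the cap applies, the remaining pods stay pending, which matches the behavior described in the next paragraph.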

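If your application needs to scale rapidly, some pods might remain waiting to be scheduled until the additional nodes deployed by the cluster autoscaler can accept them. For applications with high burst demands, you can scale with virtual nodes and Azure Container Instances.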

Scale-in events

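The cluster autoscaler also monitors the pod scheduling status of nodes that haven't recently received new scheduling requests. This scenario indicates that the node pool has more compute resources than required and that the number of nodes can be decreased. By default, nodes that have been unneeded for more than 10 minutes are scheduled for deletion. When this happens, their pods are scheduled to run on other nodes in the node pool, and the cluster autoscaler decreases the number of nodes.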
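
The scale-in rule can be sketched as a per-node timer that starts when a node becomes unneeded and resets when it's needed again. This sketch assumes the upstream cluster autoscaler defaults of a 0.5 utilization threshold (requested resources divided by allocatable, taking the higher of CPU and memory) and a 10-minute unneeded time. The threshold value and the `pods_can_move` flag are assumptions beyond what this article states, and the class name is hypothetical.

```python
from typing import Dict

# Assumed upstream defaults: scale-down-utilization-threshold=0.5,
# scale-down-unneeded-time=10m.
UTILIZATION_THRESHOLD = 0.5
UNNEEDED_TIME_S = 10 * 60


class ScaleDownTracker:
    """Simplified sketch: track how long each node has been unneeded."""

    def __init__(self) -> None:
        self._unneeded_since: Dict[str, float] = {}

    def observe(self, node: str, cpu_util: float, mem_util: float,
                pods_can_move: bool, now: float) -> bool:
        """Record one check and return True if the node may be removed."""
        # Utilization is based on requests; use the higher of CPU and memory.
        utilization = max(cpu_util, mem_util)
        if utilization >= UTILIZATION_THRESHOLD or not pods_can_move:
            self._unneeded_since.pop(node, None)  # needed again: reset timer
            return False
        since = self._unneeded_since.setdefault(node, now)
        return now - since >= UNNEEDED_TIME_S


t = ScaleDownTracker()
print(t.observe("node-1", 0.2, 0.3, True, now=0))    # False: just became unneeded
print(t.observe("node-1", 0.2, 0.3, True, now=600))  # True: unneeded for 10 minutes
print(t.observe("node-2", 0.7, 0.1, True, now=600))  # False: above the threshold
```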

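When the cluster autoscaler decreases the number of nodes, your applications might experience some disruption as pods are rescheduled onto different nodes. To minimize disruption, avoid applications that run as a single pod instance.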

Kubernetes Event-driven Autoscaling (KEDA)

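Kubernetes Event-driven Autoscaling (KEDA) is an open-source component for event-driven autoscaling of workloads. It scales workloads dynamically based on the number of events received. KEDA extends Kubernetes with a custom resource definition (CRD) called a ScaledObject, which describes how an application should scale in response to specific traffic.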

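KEDA scaling is useful when workloads receive bursts of traffic or process large volumes of data. KEDA differs from the Horizontal Pod Autoscaler: KEDA is event-driven and scales based on the number of events, while the HPA is metrics-driven and scales based on resource utilization, such as CPU and memory.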
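
The difference shows up in what drives the replica count. The following sketch scales on a count of pending events (for example, queue length) rather than CPU: one replica per `target_per_replica` events, clamped to a minimum and maximum, with scale-to-zero when there are no events. It's a simplification: KEDA itself activates a workload from zero and hands 1-to-N scaling to an HPA it creates. The defaults of 0 and 100 are assumed to mirror KEDA's `minReplicaCount` and `maxReplicaCount` defaults, and the function name is hypothetical.

```python
import math


def keda_style_replicas(pending_events: int, target_per_replica: int,
                        min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Sketch: size a workload from an event count instead of CPU usage."""
    if pending_events == 0:
        # No events: scale to min_replicas, which can be zero.
        return min_replicas
    desired = math.ceil(pending_events / target_per_replica)
    return max(min_replicas, min(max_replicas, desired))


print(keda_style_replicas(0, 5))     # 0: idle workload scaled to zero
print(keda_style_replicas(42, 5))    # 9
print(keda_style_replicas(1000, 5))  # 100: capped at max_replicas
```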

To get started with the KEDA add-on in AKS, see the KEDA overview.

Node autoprovisioning

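Node autoprovisioning (NAP) (preview) is built on the open-source Karpenter project: AKS automatically deploys, configures, and manages Karpenter on your cluster. NAP dynamically provisions nodes based on the resource requirements of pending pods, automatically selecting the optimal virtual machine (VM) SKU and quantity to meet real-time demand.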

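NAP uses a predefined list of VM SKUs as the starting point for deciding which SKU best suits pending workloads. For more precise control, you can define upper limits on the resources a node pool can use and, when there are multiple node pools, preferences for where workloads should be scheduled.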
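
As an illustration of choosing a SKU from a predefined list, the following sketch picks the smallest candidate that fits the total CPU and memory requested by pending pods. The "smallest that fits" rule is a stand-in heuristic, not NAP's or Karpenter's actual selection logic, which weighs more factors such as price and consolidation. The candidate list is hypothetical and ignores the capacity reserved for the OS and Kubernetes components.

```python
from typing import List, NamedTuple, Optional


class Sku(NamedTuple):
    name: str
    vcpus: int
    memory_gib: int


# Hypothetical candidate list, not NAP's real predefined list.
CANDIDATES: List[Sku] = [
    Sku("Standard_D2s_v5", 2, 8),
    Sku("Standard_D4s_v5", 4, 16),
    Sku("Standard_E4s_v5", 4, 32),
    Sku("Standard_D8s_v5", 8, 32),
]


def pick_sku(cpu_needed: float, mem_needed_gib: float,
             candidates: List[Sku] = CANDIDATES) -> Optional[Sku]:
    """Return the smallest candidate that fits the pending pods' requests."""
    fitting = [s for s in candidates
               if s.vcpus >= cpu_needed and s.memory_gib >= mem_needed_gib]
    # Smallest first: fewest vCPUs, then least memory. None if nothing fits.
    return min(fitting, key=lambda s: (s.vcpus, s.memory_gib), default=None)


print(pick_sku(3, 20))   # the 4-vCPU, 32-GiB candidate: memory rules out D4s_v5
print(pick_sku(16, 64))  # None: no single candidate is large enough
```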

Control plane scaling and safeguards

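Kubernetes has a multidimensional scale envelope, with each resource type representing a dimension. Not all resources are alike. For example, watches are commonly set on Secret objects, which results in list calls to the kube-apiserver that add cost and put a disproportionately higher load on the control plane compared with resources that aren't watched.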

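The control plane manages scaling for all resources in the cluster, so the more you scale the cluster in one dimension, the less you can scale it in other dimensions. For example, running hundreds of thousands of pods in an AKS cluster reduces the pod churn rate (pod mutations per second) that the control plane can support. See best practices.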

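AKS automatically scales control plane components based on key signals, such as the total number of cores in the cluster and CPU or memory pressure on the control plane components.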

To verify whether your control plane has been scaled up, check for the ConfigMap named `large-cluster-control-plane-scaling-status`.

```bash
kubectl describe configmap large-cluster-control-plane-scaling-status -n kube-system
```

Control plane protection

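If autoscaling the API server doesn't stabilize it under high load, AKS deploys a managed API server guard. This guard is a last-resort mechanism: it protects the API server by throttling non-system client requests and keeps the control plane from becoming completely unresponsive. System-critical calls to the API server from components such as the kubelet continue to work normally.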

To verify whether the managed API server guard has been applied, check for a FlowSchema and a PriorityLevelConfiguration named `aks-managed-apiserver-guard`.

```bash
kubectl get flowschemas
kubectl get prioritylevelconfigurations
```
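
If you want to script this check, the following sketch looks for the guard name in the JSON list output of both commands (run with `-o json`), which contains an `items` array whose entries carry `metadata.name`. The helper names are hypothetical. `kubectl_json` shells out to kubectl and assumes kubectl is installed and configured for the target cluster; the parsing functions work on any captured output.

```python
import json
import subprocess
from typing import Set

GUARD_NAME = "aks-managed-apiserver-guard"


def names_in(list_json: str) -> Set[str]:
    """Collect metadata.name values from `kubectl get <kind> -o json` output."""
    return {item["metadata"]["name"]
            for item in json.loads(list_json).get("items", [])}


def guard_applied(flowschemas_json: str, priority_levels_json: str) -> bool:
    """True only if both the FlowSchema and PriorityLevelConfiguration exist."""
    return (GUARD_NAME in names_in(flowschemas_json)
            and GUARD_NAME in names_in(priority_levels_json))


def kubectl_json(kind: str) -> str:
    """Run `kubectl get <kind> -o json`; needs kubectl access to the cluster."""
    return subprocess.run(["kubectl", "get", kind, "-o", "json"],
                          check=True, capture_output=True, text=True).stdout
```

For example, `guard_applied(kubectl_json("flowschemas"), kubectl_json("prioritylevelconfigurations"))` returns `True` when both guard objects are present.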

If the `aks-managed-apiserver-guard` FlowSchema and PriorityLevelConfiguration have been applied to your cluster as a quick mitigation, see the API server and etcd troubleshooting guide.

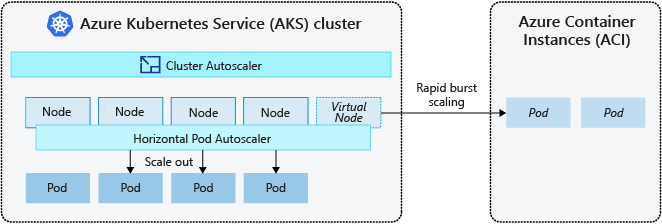

Burst to Azure Container Instances (ACI)

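To rapidly scale your AKS cluster, you can integrate with Azure Container Instances (ACI). Kubernetes has built-in components to scale the replica and node count. However, if your application needs to scale rapidly, the Horizontal Pod Autoscaler might schedule more pods than the existing compute resources in the node pool can support. If configured, this scenario then triggers the cluster autoscaler to deploy more nodes in the node pool, but it might take a few minutes for those nodes to finish provisioning and for the Kubernetes scheduler to run pods on them.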

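ACI lets you quickly deploy container instances without extra infrastructure overhead. When connected to AKS, ACI becomes a secured, logical extension of your AKS cluster. The virtual nodes component, which is based on Virtual Kubelet, is installed in your AKS cluster and presents ACI as a virtual Kubernetes node. Kubernetes can then schedule pods to run as ACI instances through virtual nodes, instead of running them directly on VM nodes in your AKS cluster.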

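Your applications don't need any modification to use virtual nodes. Deployments can scale across AKS and ACI with no delay while the cluster autoscaler deploys new nodes in your AKS cluster.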

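Virtual nodes are deployed to a separate subnet in the same virtual network as your AKS cluster. This virtual network configuration secures the traffic between ACI and AKS. Like an AKS cluster, an ACI instance is a secure, logical compute resource that's isolated from other users.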

Next steps

To get started with scaling applications, see the following resources:

- Manually scale pods or nodes

- Use the Horizontal Pod Autoscaler

- Use the cluster autoscaler

- Use the Kubernetes Event-driven Autoscaling (KEDA) add-on

For more information about core Kubernetes and AKS concepts, see the following articles: