Important

Attention: All Microsoft Sentinel features will be officially retired in Azure in China regions on August 18, 2026 per the announcement posted by 21Vianet.

Azure Monitor Logs serves as the data platform for Microsoft Sentinel. All logs ingested into Microsoft Sentinel are stored in a Log Analytics workspace, and log queries written in Kusto Query Language (KQL) are used to detect threats and monitor your network activity.

Log Analytics gives you a high level of control over the data that gets ingested into your workspace through custom data ingestion and data collection rules (DCRs). DCRs let you both collect and manipulate your data before it's stored in your workspace. They format data and send it to standard Log Analytics tables and to customizable tables for data sources that produce unique log formats.

Azure Monitor tools for custom data ingestion in Microsoft Sentinel

Microsoft Sentinel uses the following Azure Monitor tools to control custom data ingestion:

Transformations are defined in DCRs and apply KQL queries to incoming data before it's stored in your workspace. These transformations can filter out irrelevant data, enrich existing data with analytics or external data, or mask sensitive or personal information.
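Transformations are written in KQL and run inside Azure Monitor, so they can't be executed locally. As a mental model only, the following Python sketch emulates the three operations described above (filtering, enriching, and masking or removing data) over a list of records. The helper names and the sample columns (`Severity`, `Computer`, `Email`) are hypothetical. The chain shown roughly corresponds to the KQL transformation `source | where Severity != "Informational" | extend ComputerUpper = toupper(Computer) | project-away Email`.

```python
from typing import Callable, Iterable

Record = dict[str, object]
Step = Callable[[list[Record]], list[Record]]


def where(predicate: Callable[[Record], bool]) -> Step:
    """Roughly `| where <predicate>`: keep only matching rows."""
    return lambda rows: [r for r in rows if predicate(r)]


def extend(name: str, compute: Callable[[Record], object]) -> Step:
    """Roughly `| extend <name> = <expr>`: add a calculated column."""
    return lambda rows: [{**r, name: compute(r)} for r in rows]


def project_away(*columns: str) -> Step:
    """Roughly `| project-away <columns>`: drop the named columns."""
    return lambda rows: [{k: v for k, v in r.items() if k not in columns} for r in rows]


def run_transformation(rows: Iterable[Record], steps: list[Step]) -> list[Record]:
    """Apply each step in order, like a chain of KQL operators after `source`."""
    result = list(rows)
    for step in steps:
        result = step(result)
    return result


incoming = [
    {"Severity": "Informational", "Computer": "web01", "Email": "a@contoso.com"},
    {"Severity": "High", "Computer": "db01", "Email": "b@contoso.com"},
]

stored = run_transformation(
    incoming,
    [
        where(lambda r: r["Severity"] != "Informational"),  # filter out irrelevant rows
        extend("ComputerUpper", lambda r: str(r["Computer"]).upper()),  # enrich
        project_away("Email"),  # remove personal information
    ],
)
print(stored)  # [{'Severity': 'High', 'Computer': 'db01', 'ComputerUpper': 'DB01'}]
```

Only the rows and columns that survive every step would reach the workspace table. This is the same ordering idea as a KQL pipeline, where each operator receives the output of the previous one.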

The Logs ingestion API allows you to send custom-format logs from any data source to your Log Analytics workspace, and store those logs either in certain standard tables, or in custom-formatted tables that you create. You have full control over the creation of these custom tables, down to specifying the column names and types. The API uses DCRs to define, configure, and apply transformations to these data flows.
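The Logs ingestion API is a REST API: a client posts a JSON array of records to a stream defined in a DCR. The following sketch only assembles the request (URL, headers, body). It doesn't send the request or handle authentication. It assumes the documented URL shape `{endpoint}/dataCollectionRules/{DCR immutable ID}/streams/{stream name}?api-version=2023-01-01`. The endpoint, DCR ID, stream name, and record fields in the example are placeholders.

```python
import json
from urllib.parse import quote


def build_ingestion_request(endpoint: str, dcr_immutable_id: str, stream_name: str,
                            records: list[dict]) -> tuple[str, dict[str, str], bytes]:
    """Assemble (url, headers, body) for a Logs ingestion API upload.

    Authentication is intentionally left out: a real request also needs an
    "Authorization: Bearer <token>" header issued by Microsoft Entra ID.
    """
    url = (
        f"{endpoint.rstrip('/')}/dataCollectionRules/{quote(dcr_immutable_id)}"
        f"/streams/{quote(stream_name)}?api-version=2023-01-01"
    )
    headers = {"Content-Type": "application/json"}
    # The body is a JSON array; each object's fields should match the columns
    # declared for the stream in the DCR.
    body = json.dumps(records).encode("utf-8")
    return url, headers, body


# Placeholder values, not real resources.
url, headers, body = build_ingestion_request(
    "https://my-dce.eastus-1.ingest.monitor.azure.com",
    "dcr-00000000000000000000000000000000",
    "Custom-MyAppLogs_CL",
    [{"TimeGenerated": "2024-01-01T00:00:00Z", "Message": "login failed"}],
)
print(url)
```

In practice, the Azure SDKs wrap this call. For example, the `azure-monitor-ingestion` Python package provides `LogsIngestionClient.upload(rule_id=..., stream_name=..., logs=...)`, which handles token acquisition through the credential you pass to the client.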

Note

Log Analytics workspaces enabled for Microsoft Sentinel aren't subject to Azure Monitor's filtering ingestion charge, regardless of how much data the transformation filters. However, transformations in Microsoft Sentinel otherwise have the same limitations as Azure Monitor. For more information, see Limitations and considerations.

DCR support in Microsoft Sentinel

Ingestion-time transformations are defined in data collection rules (DCRs), which control the data flow in Azure Monitor. DCRs are used by AMA-based Sentinel connectors and workflows using the Logs ingestion API. Each DCR contains the configuration for a particular data collection scenario, and multiple connectors or sources can share a single DCR.
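The following sketch makes the DCR structure concrete. It assembles the `properties` of a DCR with one data flow whose `transformKql` runs on incoming data before that data is written to the workspace. Property names follow the DCR JSON format as we understand it (`streamDeclarations`, `destinations`, `dataFlows`, `transformKql`, `outputStream`). Other properties that a real deployment needs, such as `location` and, for some scenarios, `dataCollectionEndpointId`, are omitted. The stream, table, and workspace names are hypothetical.

```python
import json


def make_dcr_properties(stream: str, columns: dict[str, str], workspace_resource_id: str,
                        transform_kql: str, output_stream: str) -> dict:
    """Build the `properties` object of a DCR with a single transformed data flow."""
    destination = "sentinelWorkspace"  # arbitrary local name referenced by the data flow
    return {
        # Shape of the incoming data, as sent by the source.
        "streamDeclarations": {
            stream: {"columns": [{"name": n, "type": t} for n, t in columns.items()]}
        },
        # Where transformed data goes: the Microsoft Sentinel workspace.
        "destinations": {
            "logAnalytics": [{"workspaceResourceId": workspace_resource_id, "name": destination}]
        },
        # Which stream goes where, and the KQL applied on the way.
        "dataFlows": [
            {
                "streams": [stream],
                "destinations": [destination],
                "transformKql": transform_kql,
                "outputStream": output_stream,
            }
        ],
    }


props = make_dcr_properties(
    stream="Custom-MyAppLogs",
    columns={"TimeGenerated": "datetime", "Message": "string", "Level": "string"},
    workspace_resource_id="/subscriptions/<sub-id>/resourceGroups/<rg>/providers/"
    "Microsoft.OperationalInsights/workspaces/<workspace>",
    transform_kql='source | where Level != "Debug"',
    output_stream="Custom-MyAppLogs_CL",
)
print(json.dumps({"properties": props}, indent=2))
```

Because the data flow lists its streams and destinations by name, several sources that send to the same stream can share this one DCR and its transformation.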

Workspace transformation DCRs support workflows that don't otherwise use DCRs. A workspace transformation DCR contains transformations for any supported tables, and each transformation is applied to all data sent to its table.
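A workspace transformation DCR is a DCR of kind `WorkspaceTransforms`. Each of its data flows targets one table, which is identified by a `Microsoft-Table-<TableName>` stream. The sketch below builds such a body from a mapping of table names to KQL. Treat it as an outline to check against the Azure Monitor DCR reference, not as a deployable template. The location, workspace resource ID, and KQL are placeholders.

```python
def make_workspace_transform_dcr(location: str, workspace_resource_id: str,
                                 transforms: dict[str, str]) -> dict:
    """Build a workspace transformation DCR body with one data flow per table.

    `transforms` maps a table name (for example "SecurityEvent") to the KQL
    applied to data sent to that table by workflows that don't use their own DCR.
    """
    destination = "workspace"  # arbitrary local name referenced by each data flow
    return {
        "location": location,
        "kind": "WorkspaceTransforms",
        "properties": {
            "destinations": {
                "logAnalytics": [{"workspaceResourceId": workspace_resource_id, "name": destination}]
            },
            "dataFlows": [
                {
                    # Each stream names the target table with the Microsoft-Table- prefix.
                    "streams": [f"Microsoft-Table-{table}"],
                    "destinations": [destination],
                    "transformKql": kql,
                }
                for table, kql in transforms.items()
            ],
        },
    }


dcr = make_workspace_transform_dcr(
    "eastus",
    "/subscriptions/<sub-id>/resourceGroups/<rg>/providers/"
    "Microsoft.OperationalInsights/workspaces/<workspace>",
    {"SecurityEvent": "source | where EventID != 4688"},
)
```

Keeping one data flow per table mirrors the rule above: the transformation is tied to the destination table rather than to any particular source.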

For more information, see:

- Data collection transformations in Azure Monitor

- Logs ingestion API in Azure Monitor Logs

- Data collection rules in Azure Monitor

Use cases and sample scenarios

The article Sample transformations in Azure Monitor provides descriptions and sample queries for common scenarios that use ingestion-time transformations in Azure Monitor. Scenarios that are particularly useful for Microsoft Sentinel include:

Reduce data costs. Filter data collection by either rows or columns to reduce ingestion and storage costs.
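A small Python sketch can illustrate how row and column filtering affect volume. It keeps only some rows (as a KQL `where` would) and only some columns (as `project` or `project-away` would), and then compares JSON-encoded sizes. The JSON size is only a rough proxy chosen for illustration, not how Azure measures billable ingestion. The event IDs and column names are sample data.

```python
import json
from typing import Callable


def payload_bytes(rows: list[dict]) -> int:
    """Rough size proxy for illustration: bytes of the JSON encoding."""
    return len(json.dumps(rows).encode("utf-8"))


def filter_rows_and_columns(rows: list[dict], keep_row: Callable[[dict], bool],
                            keep_columns: list[str]) -> list[dict]:
    """Drop rows that fail keep_row (like `where`), then keep only keep_columns (like `project`)."""
    return [{c: r[c] for c in keep_columns if c in r} for r in rows if keep_row(r)]


events = [
    {"EventID": 4624, "Account": "alice", "RawXml": "<Event><System/><EventData/></Event>"},
    {"EventID": 4688, "Account": "bob", "RawXml": "<Event><System/><EventData/></Event>"},
    {"EventID": 4625, "Account": "eve", "RawXml": "<Event><System/><EventData/></Event>"},
]
# Keep only logon success/failure events, and drop the bulky raw XML column.
kept = filter_rows_and_columns(events, lambda r: r["EventID"] in (4624, 4625), ["EventID", "Account"])
before, after = payload_bytes(events), payload_bytes(kept)
print(f"{before} -> {after} bytes ({1 - after / before:.0%} smaller)")
```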

Normalize data. Normalize logs with the Advanced Security Information Model (ASIM) to improve the performance of normalized queries. For more information, see Ingest-time normalization.
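Ingest-time normalization means renaming source fields and mapping their values to ASIM field names as data arrives. The sketch below does this for a hypothetical firewall record and targets a few fields of the ASIM Network Session schema (`SrcIpAddr`, `DstIpAddr`, `DstPortNumber`, `EventResult`). The vendor field names (`src`, `dst`, `dport`, `action`) and the value mapping are assumptions. A real ASIM-compliant transformation is written in KQL and populates many more fields.

```python
# Hypothetical vendor field names mapped to ASIM Network Session field names.
FIELD_MAP = {"src": "SrcIpAddr", "dst": "DstIpAddr", "dport": "DstPortNumber"}
# Hypothetical vendor action values mapped to ASIM EventResult values.
RESULT_MAP = {"allow": "Success", "deny": "Failure"}


def normalize(record: dict) -> dict:
    """Rename known fields to ASIM names and derive EventResult from the action."""
    out = {FIELD_MAP[k]: v for k, v in record.items() if k in FIELD_MAP}
    # Unknown or missing actions fall back to "NA".
    out["EventResult"] = RESULT_MAP.get(str(record.get("action", "")).lower(), "NA")
    out["EventSchema"] = "NetworkSession"
    return out


print(normalize({"src": "10.0.0.5", "dst": "10.0.0.9", "dport": 443, "action": "deny"}))
```

Doing this mapping once at ingestion time means normalized queries read ready-made columns instead of parsing every source at query time. This is where the performance benefit comes from.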

Enrich data. Ingestion-time transformations can improve analytics by enriching your data with extra columns that you add in the configured KQL transformation. Extra columns might include data parsed or calculated from existing columns.
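The sketch below shows one parsed column and one calculated column. It splits a user principal name into account and domain, and it computes a duration from two timestamps. The column names are hypothetical. In a KQL transformation, the same idea would be expressed with `extend` together with KQL's string and datetime functions.

```python
from datetime import datetime


def enrich(record: dict) -> dict:
    """Return a copy of record with parsed and calculated columns added."""
    # Parsed columns: split "user@domain" into its two parts.
    name, _, domain = str(record["UserPrincipalName"]).partition("@")
    # Calculated column: session length from two ISO 8601 timestamps.
    start = datetime.fromisoformat(record["StartTime"])
    end = datetime.fromisoformat(record["EndTime"])
    return {
        **record,
        "AccountName": name,
        "AccountDomain": domain,
        "DurationSeconds": (end - start).total_seconds(),
    }


row = enrich({"UserPrincipalName": "alice@contoso.com",
              "StartTime": "2024-01-01T10:00:00", "EndTime": "2024-01-01T10:01:30"})
print(row["AccountName"], row["AccountDomain"], row["DurationSeconds"])
```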

Remove sensitive data. Ingestion-time transformations can mask or remove personal information, for example by masking all but the last digits of a social security number or credit card number.
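The following sketch shows one way to mask all but the last digits. The function keeps separators in place and replaces every digit except the last `keep` digits. The default of four kept digits and the `*` mask character are choices made for this example, not values from Microsoft Sentinel. In a KQL transformation, you would typically use string functions such as `substring()` and `strcat()` instead.

```python
def mask_all_but_last(value: str, keep: int = 4, mask: str = "*") -> str:
    """Replace every digit except the last `keep` digits; leave other characters as-is."""
    total = sum(ch.isdigit() for ch in value)
    seen = 0
    out = []
    for ch in value:
        if ch.isdigit():
            seen += 1
            # Only the final `keep` digits are kept in clear text.
            out.append(ch if seen > total - keep else mask)
        else:
            out.append(ch)  # separators such as "-" or " " are preserved
    return "".join(out)


print(mask_all_but_last("123-45-6789"))          # ***-**-6789
print(mask_all_but_last("4111 1111 1111 1234"))  # **** **** **** 1234
```

Because masking happens before storage, the clear-text digits never land in the workspace table.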

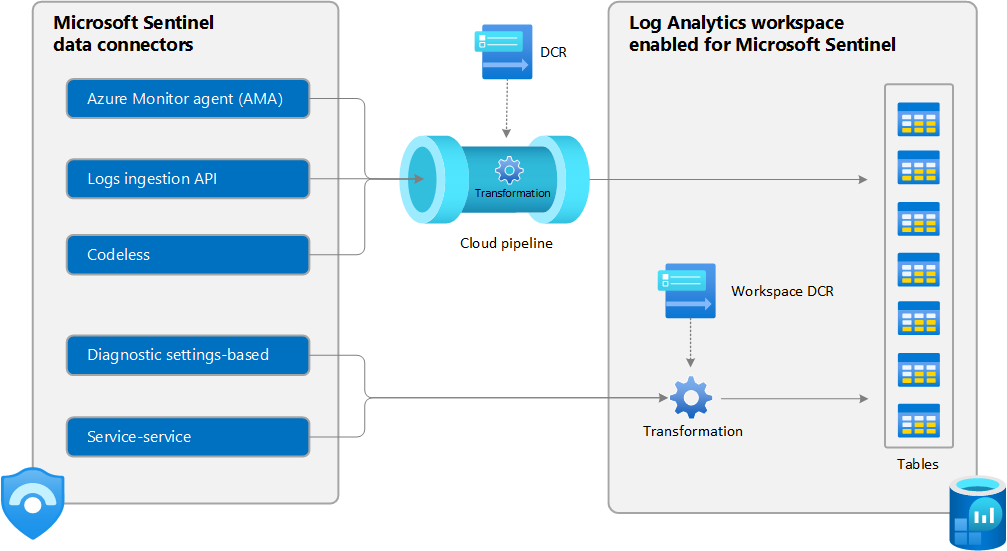

Data ingestion flow in Microsoft Sentinel

The following image shows where ingestion-time data transformation enters the data ingestion flow in Microsoft Sentinel. The data can be stored in supported standard tables or in a specific set of custom tables.

This image shows the cloud pipeline, which represents the data collection component of Azure Monitor. You can learn more about it along with other data collection scenarios in Data collection rules (DCRs) in Azure Monitor.

Microsoft Sentinel collects data in the Log Analytics workspace from multiple sources.

- Data collected from the Logs ingestion API endpoint or Azure Monitor agent (AMA) is processed by a specific DCR that may include an ingestion-time transformation.

- Data from built-in data connectors is processed in Log Analytics using a combination of hardcoded workflows and ingestion-time transformations in the workspace DCR.
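The two paths above can be summarized as a small decision function. This is a simplified, hypothetical model. It assumes that data arriving through the Logs ingestion API or AMA gets only the transformation in its own DCR. It also assumes that data from built-in connectors gets the transformation that the workspace transformation DCR defines for the destination table, if one exists. The `Source` enum and the dictionaries that map table names to KQL are illustrative names only.

```python
from enum import Enum
from typing import Optional


class Source(Enum):
    LOGS_INGESTION_API = "Logs ingestion API"
    AZURE_MONITOR_AGENT = "Azure Monitor agent"
    BUILT_IN_CONNECTOR = "built-in data connector"


def pick_transformation(source: Source, table: str,
                        specific_dcr: Optional[dict[str, str]],
                        workspace_dcr: dict[str, str]) -> Optional[str]:
    """Return the KQL (if any) applied before data lands in `table`.

    specific_dcr and workspace_dcr map table names to transformKql strings.
    """
    if source in (Source.LOGS_INGESTION_API, Source.AZURE_MONITOR_AGENT):
        # Processed by the DCR named in the API call or associated with the agent.
        return (specific_dcr or {}).get(table)
    # Built-in connectors: hardcoded processing, then the workspace DCR's
    # transformation for this table, if one is defined.
    return workspace_dcr.get(table)
```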

The following table describes DCR support for Microsoft Sentinel data connector types:

| Data connector type | DCR support |
|---|---|
| Azure Monitor agent (AMA) logs | One or more DCRs associated with the agent |
| Direct ingestion via Logs ingestion API | DCR specified in API call |
| Built-in, API-based data connector | DCR created for connector |
| Diagnostic settings-based connections | Workspace transformation DCR with supported output tables |
| Built-in, API-based data connectors | Not currently supported |
| Built-in, service-to-service data connectors | Workspace transformation DCR for tables that support transformations |

Related content

For more information, see: