适用于: Azure 数据工厂

Azure 数据工厂  Azure Synapse Analytics

Azure Synapse Analytics

本文概述了如何使用复制活动从 Amazon Simple Storage Service (Amazon S3) 复制数据,以及如何使用数据流转换 Amazon S3 中的数据。 有关详细信息,请阅读 Azure 数据工厂和 Synapse Analytics 的简介文章。

提示

若要详细了解从 Amazon S3 到 Azure 存储的数据迁移方案,请参阅将数据从 Amazon S3 迁移到 Azure 存储。

支持的功能

此 Amazon S3 连接器支持以下功能:

| 支持的功能 | IR |

|---|---|

| 复制活动(源/-) | (1) (2) |

| 映射数据流源(源/接收器) | ① |

| 查找活动 | (1) (2) |

| GetMetadata 活动 | (1) (2) |

| 删除活动 | (1) (2) |

① Azure 集成运行时 ② 自承载集成运行时

具体而言,此 Amazon S3 连接器支持按原样复制文件,或者使用受支持的文件格式和压缩编解码器分析文件。 还可以选择在复制期间保留文件元数据。 此连接器使用 AWS 签名版本 4 对发往 S3 的请求进行身份验证。

提示

如果要从任何兼容 S3 的存储提供程序复制数据,请参阅 Amazon S3 兼容存储。

所需的权限

若要从 Amazon S3 复制数据,请确保已被授予以下 Amazon S3 对象操作权限:s3:GetObject 和 s3:GetObjectVersion。

如果使用数据工厂 UI 进行创作,则需要额外的 s3:ListAllMyBuckets 和 s3:ListBucket/s3:GetBucketLocation 权限,才能执行诸如测试与链接服务的连接、从根目录浏览之类的操作。 如果不想授予这些权限,则可以选择 UI 中的“测试与文件路径的连接”或“从指定路径浏览”选项。

如需 Amazon S3 权限的完整列表,请参阅 AWS 站点上的在策略中指定权限。

入门

若要使用管道执行复制活动,可以使用以下工具或 SDK 之一:

使用 UI 创建 Amazon 简单存储服务 (S3) 链接服务

使用以下步骤在 Azure 门户 UI 中创建 Amazon S3 链接服务。

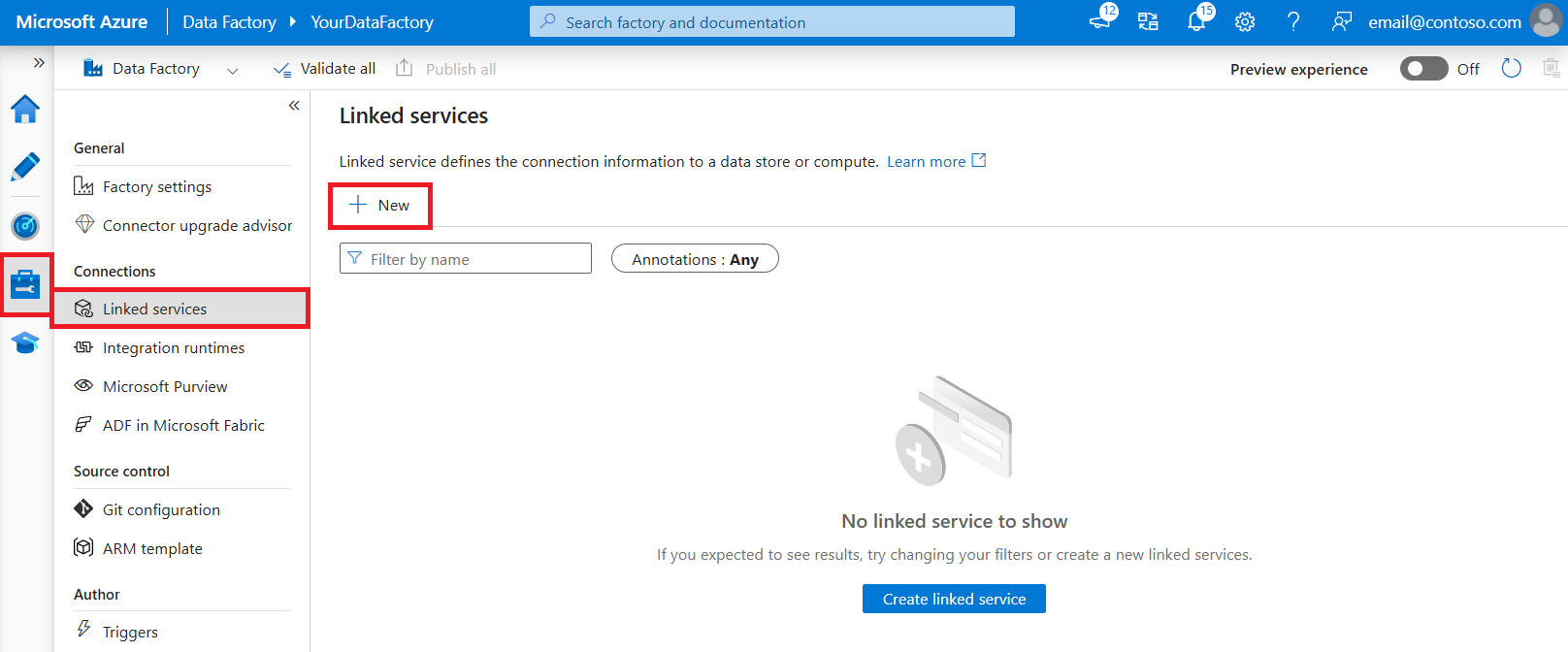

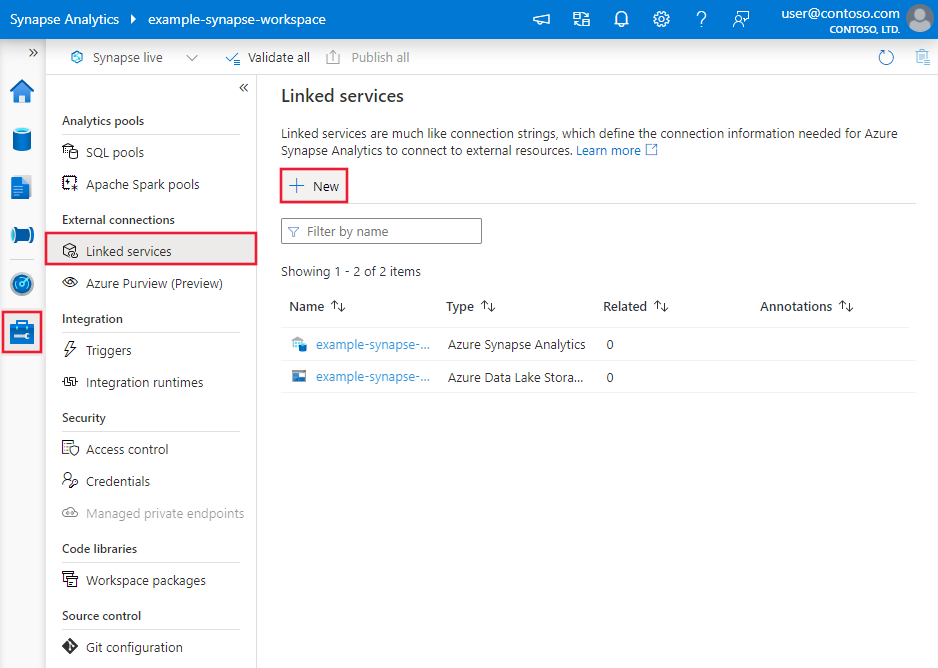

浏览到 Azure 数据工厂或 Synapse 工作区中的“管理”选项卡并选择“链接服务”,然后单击“新建”:

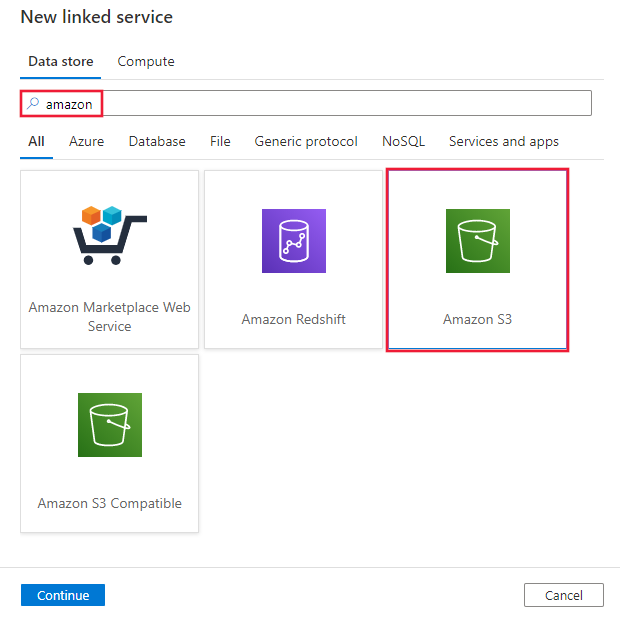

搜索“Amazon”并选择“Amazon S3 连接器”。

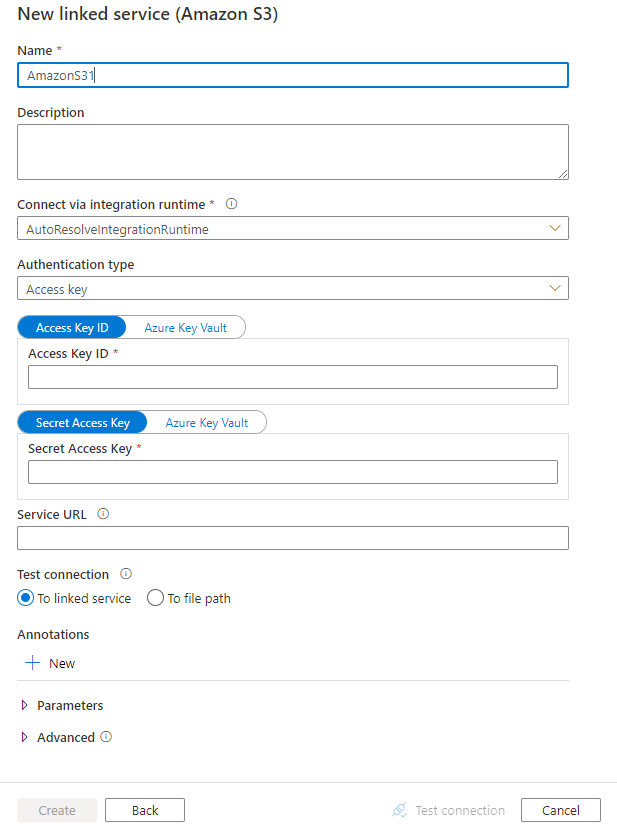

配置服务详细信息、测试连接并创建新的链接服务。

连接器配置详细信息

对于特定于 Amazon S3 的数据工厂实体,以下部分提供了有关用于定义这些实体的属性的详细信息。

链接服务属性

Amazon S3 链接服务支持以下属性:

| 属性 | 描述 | 必需 |

|---|---|---|

| 类型 | type 属性必须设置为 AmazonS3。 | 是 |

| 身份验证类型 | 指定用于连接到 Amazon S3 的身份验证类型。 可以选择使用 AWS 标识和访问管理 (IAM) 帐户的访问密钥或临时安全凭据。 允许的值为 AccessKey(默认值)和 TemporarySecurityCredentials。 |

否 |

| accessKeyId | 机密访问键 ID。 | 是 |

| 秘密访问密钥 | 机密访问键本身。 请将此字段标记为 SecureString 以安全地存储它,或引用存储在 Azure Key Vault 中的机密。 | 是 |

| sessionToken | 使用临时安全凭据身份验证时适用。 了解如何从 AWS 请求临时安全凭据。 请注意,根据设置,AWS 临时凭据会在 15 分钟到 36 小时后过期。 请确保凭据在活动执行时有效,特别是对于操作化工作负荷,例如,可以定期将其刷新并存储在 Azure Key Vault 中。 请将此字段标记为 SecureString 以安全地存储它,或引用存储在 Azure Key Vault 中的机密。 |

否 |

| serviceUrl | 指定自定义 S3 终结点 https://<service url>。仅当要尝试其他服务终结点或在 https 和 http 之间切换时,才更改它。 |

否 |

| connectVia | 用于连接到数据存储的集成运行时。 可使用 Azure Integration Runtime 或自承载集成运行时(如果数据存储位于专用网络中)。 如果未指定此属性,服务会使用默认的 Azure Integration Runtime。 | 否 |

示例:使用访问密钥身份验证

{

"name": "AmazonS3LinkedService",

"properties": {

"type": "AmazonS3",

"typeProperties": {

"accessKeyId": "<access key id>",

"secretAccessKey": {

"type": "SecureString",

"value": "<secret access key>"

}

},

"connectVia": {

"referenceName": "<name of Integration Runtime>",

"type": "IntegrationRuntimeReference"

}

}

}

示例:使用临时安全凭据身份验证

{

"name": "AmazonS3LinkedService",

"properties": {

"type": "AmazonS3",

"typeProperties": {

"authenticationType": "TemporarySecurityCredentials",

"accessKeyId": "<access key id>",

"secretAccessKey": {

"type": "SecureString",

"value": "<secret access key>"

},

"sessionToken": {

"type": "SecureString",

"value": "<session token>"

}

},

"connectVia": {

"referenceName": "<name of Integration Runtime>",

"type": "IntegrationRuntimeReference"

}

}

}

数据集属性

有关可用于定义数据集的各部分和属性的完整列表,请参阅数据集一文。

Azure 数据工厂支持以下文件格式。 请参阅每篇文章以了解基于格式的设置。

在 location 设置中,Amazon S3 支持以下基于格式的数据集属性:

| 属性 | 描述 | 必需 |

|---|---|---|

| 类型 | 数据集中 下的 location 属性必须设置为 AmazonS3Location。 |

是 |

| bucketName | S3 存储桶的名称。 | 是 |

| folderPath | 给定 Bucket 下的文件夹路径。 如果要使用通配符来筛选文件夹,请跳过此设置并在活动源设置中进行相应的指定。 | 否 |

| fileName | 给定 Bucket 和文件夹路径下的文件名。 如果要使用通配符来筛选文件,请跳过此设置并在活动源设置中进行相应的指定。 | 否 |

| 版本 | 启用 S3 版本控制时 S3 对象的版本。 如果未指定,则会提取最新版本。 | 否 |

示例:

{

"name": "DelimitedTextDataset",

"properties": {

"type": "DelimitedText",

"linkedServiceName": {

"referenceName": "<Amazon S3 linked service name>",

"type": "LinkedServiceReference"

},

"schema": [ < physical schema, optional, auto retrieved during authoring > ],

"typeProperties": {

"location": {

"type": "AmazonS3Location",

"bucketName": "bucketname",

"folderPath": "folder/subfolder"

},

"columnDelimiter": ",",

"quoteChar": "\"",

"firstRowAsHeader": true,

"compressionCodec": "gzip"

}

}

}

复制活动属性

有关可用于定义活动的各部分和属性的完整列表,请参阅管道一文。 本部分提供 Amazon S3 源支持的属性列表。

以 Amazon S3 作为源类型

Azure 数据工厂支持以下文件格式。 请参阅每篇文章以了解基于格式的设置。

Amazon S3 支持基于格式的复制源中 storeSettings 设置下的以下属性:

| 属性 | 描述 | 必需 |

|---|---|---|

| 类型 |

下的 storeSettings 属性必须设置为 AmazonS3ReadSettings。 |

是 |

| 找到要复制的文件: | ||

| 选项 1:静态路径 |

从数据集中指定的给定存储桶或文件夹/文件路径复制。 若要复制 Bucket 或文件夹中的所有文件,请另外将 wildcardFileName 指定为 *。 |

|

| 选项 2:S3 前缀 -前缀 |

数据集中配置的给定 Bucket 下的 S3 密钥名称的前缀,用于筛选源 S3 文件。 名称以 bucket_in_dataset/this_prefix 开头的 S3 密钥会被选中。 它利用 S3 的服务端筛选器。与通配符筛选器相比,该服务端筛选器可提供更好的性能。在使用前缀的情况下选择复制到基于文件的接收器并保留层次结构时,请注意,系统会保留前缀中最后一个“/”后面的子路径。 例如,如果在源为 bucket/folder/subfolder/file.txt 的情况下将前缀配置为 folder/sub,则保留的文件路径为 subfolder/file.txt。 |

否 |

| 选项 3:通配符 - wildcardFolderPath |

数据集中配置的给定 Bucket 下包含通配符的文件夹路径,用于筛选源文件夹。 允许的通配符为: *(匹配零个或更多字符)和 ?(匹配零个或单个字符)。 如果文件夹名内包含通配符或此转义字符,请使用 ^ 进行转义。 请参阅文件夹和文件筛选器示例中的更多示例。 |

否 |

| 选项 3:通配符 - 通配符文件名 |

给定 Bucket 和文件夹路径(或通配符文件夹路径)下包含通配符的文件名,用于筛选源文件。 允许的通配符为: *(匹配零个或更多字符)和 ?(匹配零个或单个字符)。 如果文件名包含通配符或此转义字符,请使用 ^ 进行转义。 请参阅文件夹和文件筛选器示例中的更多示例。 |

是 |

| 选项 4:文件列表 - fileListPath |

指明复制给定文件集。 请指向一个文本文件,该文件包含要复制的文件列表,每行一个文件(即相对于数据集中配置路径的相对路径)。 使用此选项时,请不要在数据集中指定文件名。 请参阅文件列表示例中的更多示例。 |

否 |

| 其他设置: | ||

| recursive | 指示是要从子文件夹中以递归方式读取数据,还是只从指定的文件夹中读取数据。 请注意,当 recursive 设置为 true 且接收器是基于文件的存储时,将不会在接收器上复制或创建空的文件夹或子文件夹。 允许的值为 true(默认值)和 false。 如果配置 fileListPath,则此属性不适用。 |

否 |

| 完成后删除文件 | 指示是否会在二进制文件成功移到目标存储后将其从源存储中删除。 文件删除按文件进行。因此,当复制活动失败时,你会看到一些文件已经复制到目标并从源中删除,而另一些文件仍保留在源存储中。 此属性仅在二进制文件复制方案中有效。 默认值:false。 |

否 |

| modifiedDatetimeStart | 文件根据“上次修改时间”属性进行筛选。 如果文件的上次修改时间大于或等于 modifiedDatetimeStart 并且小于 modifiedDatetimeEnd,将选择这些文件。 该时间应用于 UTC 时区,格式为“2018-12-01T05:00:00Z”。 属性可以为 NULL,这意味着不会向数据集应用任何文件属性筛选器。 如果 modifiedDatetimeStart 具有日期/时间值,但 modifiedDatetimeEnd 为 NULL,则会选中“上次修改时间”属性大于或等于该日期/时间值的文件。 如果 modifiedDatetimeEnd 具有日期/时间值,但 modifiedDatetimeStart 为 NULL,则会选中“上次修改时间”属性小于该日期/时间值的文件。如果配置 fileListPath,则此属性不适用。 |

否 |

| modifiedDatetimeEnd | 同上。 | 否 |

| enablePartitionDiscovery | 对于已分区的文件,请指定是否从文件路径分析分区,并将它们添加为附加的源列。 允许的值为 false(默认)和 true 。 |

否 |

| partitionRootPath | 启用分区发现时,请指定绝对根路径,以便将已分区文件夹读取为数据列。 如果未指定,默认情况下, - 在数据集或源的文件列表中使用文件路径时,分区根路径是在数据集中配置的路径。 - 使用通配符文件夹筛选器时,分区根路径是第一个通配符前的子路径。 - 在使用前缀时,分区根路径是最后一个“/”前的子路径。 例如,假设你将数据集中的路径配置为“root/folder/year=2020/month=08/day=27”: - 如果将分区根路径指定为“root/folder/year=2020”,则除了文件内的列外,复制活动还将生成另外两个列 month 和 day,其值分别为“08”和“27”。- 如果未指定分区根路径,则不会生成额外的列。 |

否 |

| maxConcurrentConnections | 活动运行期间与数据存储建立的并发连接的上限。 仅在要限制并发连接时指定一个值。 | 否 |

示例:

"activities":[

{

"name": "CopyFromAmazonS3",

"type": "Copy",

"inputs": [

{

"referenceName": "<Delimited text input dataset name>",

"type": "DatasetReference"

}

],

"outputs": [

{

"referenceName": "<output dataset name>",

"type": "DatasetReference"

}

],

"typeProperties": {

"source": {

"type": "DelimitedTextSource",

"formatSettings":{

"type": "DelimitedTextReadSettings",

"skipLineCount": 10

},

"storeSettings":{

"type": "AmazonS3ReadSettings",

"recursive": true,

"wildcardFolderPath": "myfolder*A",

"wildcardFileName": "*.csv"

}

},

"sink": {

"type": "<sink type>"

}

}

}

]

文件夹和文件筛选器示例

本部分介绍使用通配符筛选器生成文件夹路径和文件名的行为。

| Bucket | 关键值 | recursive | 源文件夹结构和筛选器结果(用粗体表示的文件已检索) |

|---|---|---|---|

| Bucket | Folder*/* |

false | Bucket 文件夹A File1.csv File2.json 子文件夹1 File3.csv File4.json File5.csv AnotherFolderB File6.csv |

| Bucket | Folder*/* |

true | Bucket 文件夹A File1.csv File2.json 子文件夹1 File3.csv File4.json File5.csv AnotherFolderB File6.csv |

| Bucket | Folder*/*.csv |

false | Bucket 文件夹A File1.csv File2.json 子文件夹1 File3.csv File4.json File5.csv AnotherFolderB File6.csv |

| Bucket | Folder*/*.csv |

true | Bucket 文件夹A File1.csv File2.json 子文件夹1 File3.csv File4.json File5.csv AnotherFolderB File6.csv |

文件列表示例

本部分介绍了在复制活动源中使用文件列表路径时产生的行为。

假设有以下源文件夹结构,并且要复制以粗体显示的文件:

| 示例源结构 | FileListToCopy.txt 中的内容 | 配置 |

|---|---|---|

| Bucket 文件夹A File1.csv File2.json 子文件夹1 File3.csv File4.json File5.csv 元数据 FileListToCopy.txt |

File1.csv Subfolder1/File3.csv Subfolder1/File5.csv |

在数据集中: - 桶: bucket- 文件夹路径: FolderA在复制活动源中: - 文件列表路径: bucket/Metadata/FileListToCopy.txt 文件列表路径指向同一数据存储中的一个文本文件,该文件包含要复制的文件列表(每行一个文件,带有数据集中所配置路径的相对路径)。 |

在复制期间保留元数据

将文件从 Amazon S3 复制到 Azure Data Lake Storage Gen2 或 Azure Blob 存储时,可以选择将文件元数据与数据一起保留。 从保留元数据中了解更多信息。

映射数据流属性

在映射数据流中转换数据时,可以在 Amazon S3 中读取以下格式的文件:

有关该格式的特定设置,请参阅文档。 有关详细信息,请参阅映射数据流中的源转换。

源转换

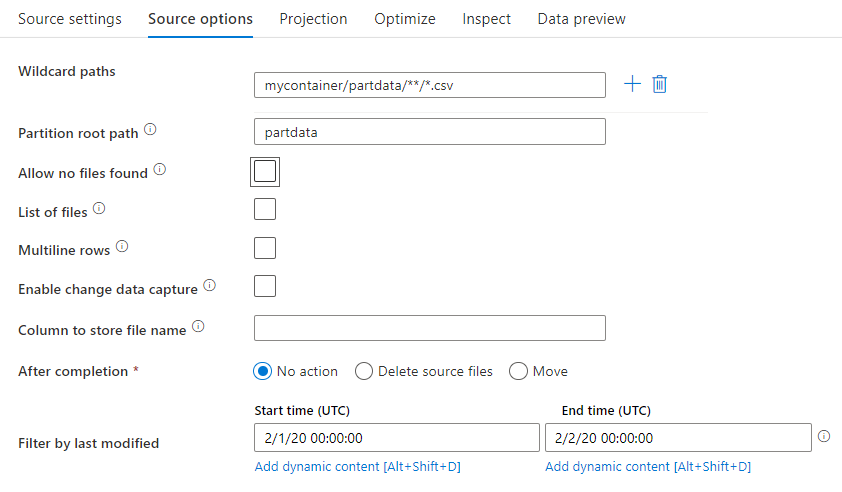

在源转换中,可以从 Amazon S3 中的容器、文件夹或单个文件进行读取。 使用“源选项”选项卡,可以管理文件的读取方式。

通配符路径:如果使用通配符模式,则会指示服务在单个源转换中循环访问每个匹配的文件夹和文件。 这是在单个流中处理多个文件的有效方法。 使用将鼠标指针悬停在现有通配符模式上时出现的加号来添加多个通配符匹配模式。

从源容器中,选择与模式匹配的一系列文件。 数据集中只能指定容器。 因此,通配符路径还必须包含根文件夹中的文件夹路径。

通配符示例:

*表示任意字符集。**表示递归目录嵌套。?替换一个字符。[]匹配括号内的一个或多个字符。/data/sales/**/*.csv获取 /data/sales 下的所有 .csv 文件。/data/sales/20??/**/获取 20 世纪的所有文件。/data/sales/*/*/*.csv获取比 /data/sales 低两个级别的 .csv 文件。/data/sales/2004/*/12/[XY]1?.csv获取时间为 2004 年 12 月、以 X 或 Y 开头且以两位数为前缀的所有 .csv 文件。

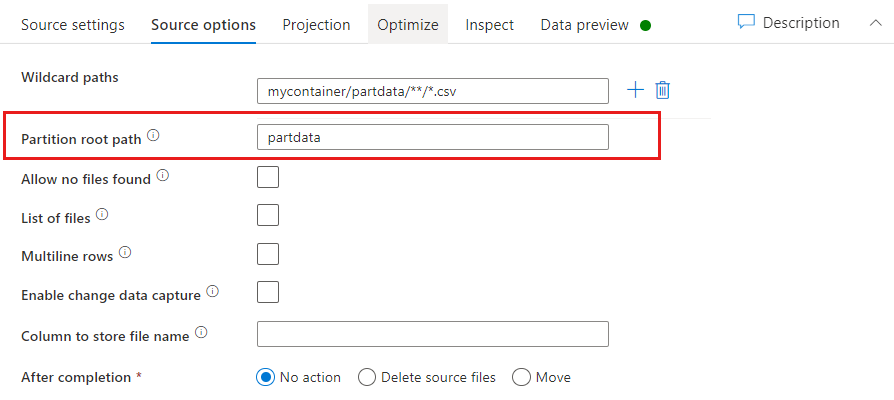

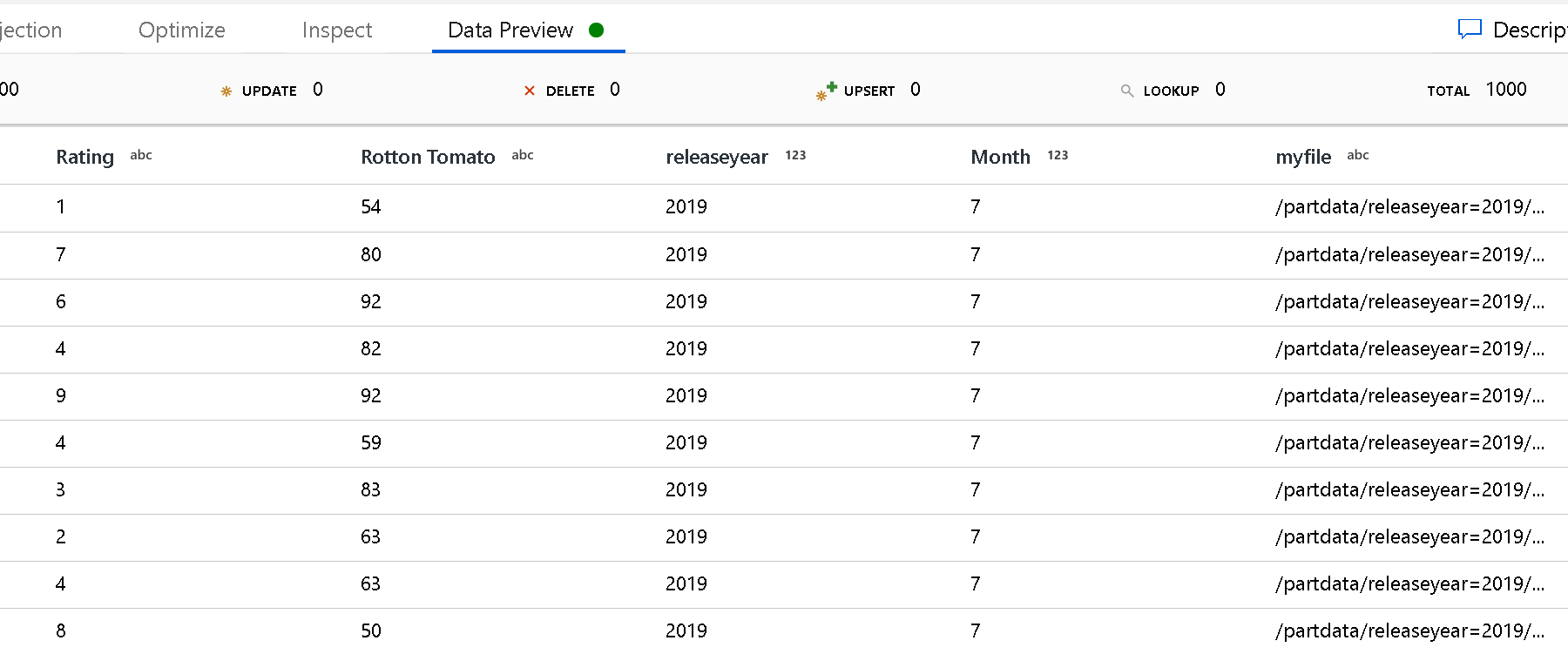

分区根路径:如果文件源中存在 格式(例如 key=value)的分区文件夹,则可以向该分区文件夹树的顶层分配数据流中的列名称。

首先,设置一个通配符,用于包括属于分区文件夹以及要读取的叶文件的所有路径。

使用“分区根路径”设置来定义文件夹结构路径的最高层级。 通过数据预览查看数据内容时会看到,该服务会添加在每个文件夹级别中找到的已解析的分区。

文件列表: 这是一个文件集。 创建一个文本文件,其中包含要处理的相对路径文件的列表。 指向此文本文件。

用于存储文件名的列: 将源文件的名称存储在数据的列中。 请在此处输入新列名称以存储文件名字符串。

完成后: 数据流运行后,可以选择不对源文件执行任何操作、删除源文件或移动源文件。 移动路径是相对路径。

要将源文件移到其他位置进行后期处理,请首先选择“移动”以执行文件操作。 然后,设置“移动源”目录。 如果未对路径使用任何通配符,则“从”设置中的文件夹将是与源文件夹相同的文件夹。

如果源路径包含通配符,则语法将如下所示:

/data/sales/20??/**/*.csv

可以将“源”指定为:

/data/sales

可以将“目标”指定为:

/backup/priorSales

在此例中,源自 /data/sales 下的所有文件都将移动到 /backup/priorSales。

注意

仅当从在管道中使用“执行数据流”活动的管道运行进程(管道调试或执行运行)中启动数据流时,文件操作才会运行。 文件操作不会在数据流调试模式下运行。

按上次修改时间筛选: 通过指定上次修改的日期范围,可以筛选要处理的文件。 所有日期/时间均采用 UTC 格式。

Lookup 活动属性

若要了解有关属性的详细信息,请查看 Lookup 活动。

GetMetadata 活动属性

若要了解有关属性的详细信息,请查看 GetMetadata 活动。

Delete 活动属性

若要了解有关属性的详细信息,请查看删除活动。

旧模型

注意

仍会按原样支持以下模型,以实现后向兼容性。 建议使用前面提到的新模型。 创作 UI 已切换为生成新模型。

旧数据集模型

| 属性 | 描述 | 必需 |

|---|---|---|

| 类型 | 数据集的 type 属性必须设置为 AmazonS3Object。 | 是 |

| bucketName | S3 存储桶的名称。 通配符筛选器不受支持。 | 对于复制或查找活动为“是”,对于 GetMetadata 活动为“否” |

| 关键值 | 指定 Bucket 下的 S3 对象键的名称或通配符筛选器。 仅当未指定 prefix 属性时应用。 文件夹部分和文件名部分都支持通配符筛选器。 允许的通配符为: *(匹配零个或更多字符)和 ?(匹配零个或单个字符)。- 示例 1: "key": "rootfolder/subfolder/*.csv"- 示例 2: "key": "rootfolder/subfolder/???20180427.txt"请参阅文件夹和文件筛选器示例中的更多示例。 如果实际文件夹名或文件名内具有通配符或此转义字符,请使用 ^ 进行转义。 |

否 |

| 前缀 | S3 对象键的前缀。 以该前缀开头的对象被选中。 仅当未指定 key 属性时应用。 | 否 |

| 版本 | 启用 S3 版本控制时 S3 对象的版本。 如果未指定版本,则会提取最新版本。 | 否 |

| modifiedDatetimeStart | 文件根据“上次修改时间”属性进行筛选。 如果文件的上次修改时间大于或等于 modifiedDatetimeStart 并且小于 modifiedDatetimeEnd,将选择这些文件。 该时间应用于 UTC 时区,格式为“2018-12-01T05:00:00Z”。 请注意,要对大量文件进行筛选时,启用此设置会影响数据移动的整体性能。 属性可以为 NULL,这意味着不会向数据集应用任何文件属性筛选器。 如果 modifiedDatetimeStart 具有日期/时间值,但 modifiedDatetimeEnd 为 NULL,则会选中“上次修改时间”属性大于或等于该日期/时间值的文件。 如果 modifiedDatetimeEnd 具有日期/时间值,但 modifiedDatetimeStart 为 NULL,则会选中“上次修改时间”属性小于该日期/时间值的文件。 |

否 |

| modifiedDatetimeEnd | 文件根据“上次修改时间”属性进行筛选。 如果文件的上次修改时间大于或等于 modifiedDatetimeStart 并且小于 modifiedDatetimeEnd,将选择这些文件。 该时间应用于 UTC 时区,格式为“2018-12-01T05:00:00Z”。 请注意,要对大量文件进行筛选时,启用此设置会影响数据移动的整体性能。 属性可以为 NULL,这意味着不会向数据集应用任何文件属性筛选器。 如果 modifiedDatetimeStart 具有日期/时间值,但 modifiedDatetimeEnd 为 NULL,则会选中“上次修改时间”属性大于或等于该日期/时间值的文件。 如果 modifiedDatetimeEnd 具有日期/时间值,但 modifiedDatetimeStart 为 NULL,则会选中“上次修改时间”属性小于该日期/时间值的文件。 |

否 |

| 格式 | 若要在基于文件的存储之间按原样复制文件(二进制副本),可以在输入和输出数据集定义中跳过格式节。 若要分析或生成具有特定格式的文件,以下是受支持的文件格式类型:TextFormat、JsonFormat、AvroFormat、OrcFormat、ParquetFormat 。 请将 format 中的 type 属性设置为上述值之一。 有关详细信息,请参阅文本格式、JSON 格式、Avro 格式、Orc 格式和 Parquet 格式部分。 |

否(仅适用于二进制复制方案) |

| 压缩 | 指定数据的压缩类型和级别。 有关详细信息,请参阅受支持的文件格式和压缩编解码器。 支持的类型为 GZip、Deflate、BZip2 和 ZipDeflate。 支持的级别为“最佳”和“最快”。 |

否 |

提示

若要复制文件夹下的所有文件,请使用 Bucket 的名称指定“bucketName”并使用文件夹部分指定“prefix”。

若要复制具有给定名称的单个文件,请为存储桶指定 bucketName,为文件夹部分以及文件名指定 key。

若要复制文件夹下的文件子集,请使用 Bucket 的名称指定“bucketName”并使用文件夹部分及通配符筛选器指定“key”。

示例:使用前缀

{

"name": "AmazonS3Dataset",

"properties": {

"type": "AmazonS3Object",

"linkedServiceName": {

"referenceName": "<Amazon S3 linked service name>",

"type": "LinkedServiceReference"

},

"typeProperties": {

"bucketName": "testbucket",

"prefix": "testFolder/test",

"modifiedDatetimeStart": "2018-12-01T05:00:00Z",

"modifiedDatetimeEnd": "2018-12-01T06:00:00Z",

"format": {

"type": "TextFormat",

"columnDelimiter": ",",

"rowDelimiter": "\n"

},

"compression": {

"type": "GZip",

"level": "Optimal"

}

}

}

}

示例:使用键和版本(可选)

{

"name": "AmazonS3Dataset",

"properties": {

"type": "AmazonS3",

"linkedServiceName": {

"referenceName": "<Amazon S3 linked service name>",

"type": "LinkedServiceReference"

},

"typeProperties": {

"bucketName": "testbucket",

"key": "testFolder/testfile.csv.gz",

"version": "XXXXXXXXXczm0CJajYkHf0_k6LhBmkcL",

"format": {

"type": "TextFormat",

"columnDelimiter": ",",

"rowDelimiter": "\n"

},

"compression": {

"type": "GZip",

"level": "Optimal"

}

}

}

}

复制活动的旧源模型

| 属性 | 描述 | 必需 |

|---|---|---|

| 类型 | 复制活动源的 type 属性必须设置为 FileSystemSource。 | 是 |

| recursive | 指示是要从子文件夹中以递归方式读取数据,还是只从指定的文件夹中读取数据。 请注意,当 recursive 设置为 true 且接收端是基于文件的存储时,空的文件夹或子文件夹将不会在接收端复制或创建。 允许的值为 true(默认值)和 false。 |

否 |

| maxConcurrentConnections | 活动运行期间与数据存储建立的并发连接的上限。 仅在要限制并发连接时指定一个值。 | 否 |

示例:

"activities":[

{

"name": "CopyFromAmazonS3",

"type": "Copy",

"inputs": [

{

"referenceName": "<Amazon S3 input dataset name>",

"type": "DatasetReference"

}

],

"outputs": [

{

"referenceName": "<output dataset name>",

"type": "DatasetReference"

}

],

"typeProperties": {

"source": {

"type": "FileSystemSource",

"recursive": true

},

"sink": {

"type": "<sink type>"

}

}

}

]

相关内容

有关复制活动支持作为源和接收器的数据存储的列表,请参阅支持的数据存储。