Applies to: Azure Data Factory, Azure Synapse Analytics

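This article describes the basic security infrastructure that the data movement services in Azure Data Factory use to help protect your data. Data Factory management resources are built on the Azure security infrastructure and use all the security measures that Azure offers.

In a Data Factory solution, you create one or more data pipelines. A pipeline is a logical grouping of activities that together perform a task. These pipelines reside in the region where the data factory was created.

Although Data Factory is available in only a few regions, the data movement service is available globally to ensure data compliance, efficiency, and reduced network egress costs.

Azure Data Factory, including Azure Integration Runtime and the self-hosted integration runtime, does not store any temporary data, cache data, or logs, except for linked service credentials for cloud data stores, which are encrypted by using certificates. With Data Factory, you create data-driven workflows to orchestrate the movement of data between supported data stores and the processing of data by using compute services in other regions or in an on-premises environment. You can also monitor and manage workflows by using SDKs and Azure Monitor.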

Data Factory has been certified for:

| CSA STAR Certification |
|---|
| ISO 20000-1:2011 |
| ISO 22301:2012 |
| ISO 27001:2013 |
| ISO 27017:2015 |
| ISO 27018:2014 |
| ISO 9001:2015 |
| SOC 1, 2, 3 |
| HIPAA BAA |
| HITRUST |

If you're interested in Azure compliance and how Azure secures its own infrastructure, visit the Azure Trust Center. For the latest list of all Azure compliance offerings, see https://aka.ms/AzureCompliance.

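In this article, we review security considerations in the following two data movement scenarios:

- Cloud scenario: In this scenario, both the source and the destination are publicly accessible through the internet. These include managed cloud storage services (such as Azure Storage, Azure Synapse Analytics, Azure SQL Database, Amazon S3, and Amazon Redshift), SaaS services (such as Salesforce), and web protocols (such as FTP and OData). Find a complete list of supported data sources in Supported data stores and formats.

- Hybrid scenario: In this scenario, either the source or the destination is behind a firewall or inside an on-premises corporate network. Alternatively, the data store is in a private network or virtual network (most often the source) and is not publicly accessible. Database servers hosted on virtual machines also fall under this scenario.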

Note

We recommend that you use the Azure Az PowerShell module to interact with Azure. To get started, see Install Azure PowerShell. To learn how to migrate to the Az PowerShell module, see Migrate Azure PowerShell from AzureRM to Az.

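Cloud scenarios

Securing data store credentials

- Store encrypted credentials in an Azure Data Factory managed store. Data Factory helps protect your data store credentials by encrypting them with certificates managed by Azure. These certificates are rotated every two years, which includes certificate renewal and the migration of credentials. For more information about Azure Storage security, see Azure Storage security overview.

- Store credentials in Azure Key Vault. You can also store the data store's credentials in Azure Key Vault. Data Factory retrieves the credentials during the execution of an activity. For more information, see Store credentials in Azure Key Vault.

- Storing application secrets centrally in Azure Key Vault lets you control their distribution. Key Vault greatly reduces the chance that secrets are accidentally leaked. For example, instead of storing a connection string in the app's code, you can store it securely in Key Vault. Applications can securely access the information they need by using URIs. These URIs allow an application to retrieve a specific version of a secret. You don't need to write custom code to protect any of the secret information stored in Key Vault.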
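As an illustration of the Key Vault option, the following Python sketch assembles a linked service definition whose password is a reference to a Key Vault secret rather than an inline value. It is a sketch under assumptions: the linked service names, the secret name, and the connection string placeholders are hypothetical, and the exact property names for a given connector should be checked against that connector's documentation.

```python
import json


def key_vault_secret_ref(akv_linked_service: str, secret_name: str) -> dict:
    """Build a reference to a secret held in Azure Key Vault.

    akv_linked_service is the name of a linked service that points at the
    vault (hypothetical here); secret_name is the secret to resolve at run time.
    """
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": akv_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


def sql_linked_service(name: str, connection_string: str, password_ref: dict) -> dict:
    """Build a linked service definition that carries no inline password."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {
                "connectionString": connection_string,
                "password": password_ref,
            },
        },
    }


# All names and placeholders below are illustrative assumptions.
definition = sql_linked_service(
    "ExampleSqlLinkedService",
    "Server=tcp:<server>.database.chinacloudapi.cn,1433;"
    "Database=<database>;User ID=<user>;Encrypt=True;",
    key_vault_secret_ref("ExampleKeyVaultLinkedService", "example-sql-password"),
)

print(json.dumps(definition, indent=2))
```

The point of the design is that the JSON you keep in source control contains only the secret's name; the secret value stays in Key Vault and is resolved when an activity runs.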

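Data encryption in transit

If the cloud data store supports HTTPS or TLS, all data transfers between the data movement services in Data Factory and the cloud data store go through a secure HTTPS or TLS channel.

Note

All connections to Azure SQL Database and Azure Synapse Analytics require encryption (SSL/TLS) while data is in transit to and from the database. When you author a pipeline by using JSON, add the encryption property to the connection string and set it to true. For Azure Storage, you can use HTTPS in the connection string.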
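A small pre-deployment check can catch connection strings that leave encryption off. The Python sketch below assumes the encryption keyword is spelled `Encrypt` (as in typical SQL Server connection strings) and that an Azure Storage connection string carries `DefaultEndpointsProtocol`; neither spelling is stated in this article, so treat both as assumptions. The parser is deliberately naive and does not handle quoted values that contain semicolons.

```python
def parse_connection_string(conn: str) -> dict:
    """Split 'Key=Value;Key=Value' into a dict with lower-cased keys."""
    pairs = {}
    for part in conn.split(";"):
        if "=" in part:
            # Split on the first '=' only, so values such as base64 keys
            # that end in '=' padding stay intact.
            key, value = part.split("=", 1)
            pairs[key.strip().lower()] = value.strip()
    return pairs


def sql_encryption_enabled(conn: str) -> bool:
    """True when the (assumed) Encrypt keyword is switched on."""
    value = parse_connection_string(conn).get("encrypt", "")
    return value.lower() in ("true", "yes")


def storage_uses_https(conn: str) -> bool:
    """True when the (assumed) DefaultEndpointsProtocol keyword is https."""
    protocol = parse_connection_string(conn).get("defaultendpointsprotocol", "")
    return protocol.lower() == "https"
```

For example, `sql_encryption_enabled("Server=<server>;Database=<db>;Encrypt=True;")` evaluates to `True`, while a string without the keyword evaluates to `False`.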

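Note

To enable encryption in transit while moving data from Oracle, follow one of these options:

- In the Oracle server, go to Oracle Advanced Security (OAS) and configure the encryption settings, which support Triple-DES Encryption (3DES) and Advanced Encryption Standard (AES). See here for details. When ADF establishes a connection to Oracle, it automatically negotiates the encryption method to match the one you configured in OAS.

- In ADF, you can add EncryptionMethod=1 to the connection string (in the linked service). This uses SSL/TLS as the encryption method. To use this option, you need to disable the non-SSL encryption settings in OAS on the Oracle server side to avoid an encryption conflict.

Note

The TLS version used is 1.2.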
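To confirm from a client machine that a given endpoint accepts TLS 1.2, a sketch like the following pins Python's standard `ssl` module to that version and reports what was negotiated. It is only a diagnostic aid and is not part of Data Factory; the host you pass to `negotiated_version` is whichever endpoint you want to probe.

```python
import socket
import ssl


def tls12_context() -> ssl.SSLContext:
    """Create a client context that only speaks TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and host name checks stay on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def negotiated_version(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect to host:port and return the negotiated protocol name.

    Raises ssl.SSLError if the server cannot negotiate TLS 1.2 and
    OSError if the TCP connection itself fails.
    """
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with tls12_context().wrap_socket(raw, server_hostname=host) as tls:
            return tls.version()
```

Calling `negotiated_version("<your-endpoint>")` either returns the protocol name reported by the `ssl` module or raises, which makes a handshake failure easy to tell apart from a blocked port.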

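Data encryption at rest

Some data stores support encryption of data at rest. We recommend that you enable the data encryption mechanism for those data stores.

Azure Synapse Analytics

Transparent Data Encryption (TDE) in Azure Synapse Analytics helps protect against the threat of malicious activity by performing real-time encryption and decryption of your data at rest. This behavior is transparent to the client. For more information, see Secure a database in Azure Synapse Analytics.

Azure SQL Database

Azure SQL Database also supports Transparent Data Encryption (TDE), which helps protect against the threat of malicious activity by performing real-time encryption and decryption of the data, without requiring changes to the application. This behavior is transparent to the client. For more information, see Transparent data encryption for SQL Database and Data Warehouse.

Azure Blob storage and Azure Table storage

Azure Blob storage and Azure Table storage support Storage Service Encryption (SSE), which automatically encrypts your data before it is persisted to storage and decrypts it before retrieval. For more information, see Azure Storage Service Encryption for data at rest.

Amazon S3

Amazon S3 supports both client-side and server-side encryption of data at rest. For more information, see Protecting data using encryption.

Amazon Redshift

Amazon Redshift supports cluster encryption for data at rest. For more information, see Amazon Redshift database encryption.

Salesforce

Salesforce supports Shield Platform Encryption, which allows encryption of all files, attachments, and custom fields. For more information, see Understanding the Web Server OAuth Authentication Flow.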

Hybrid scenarios

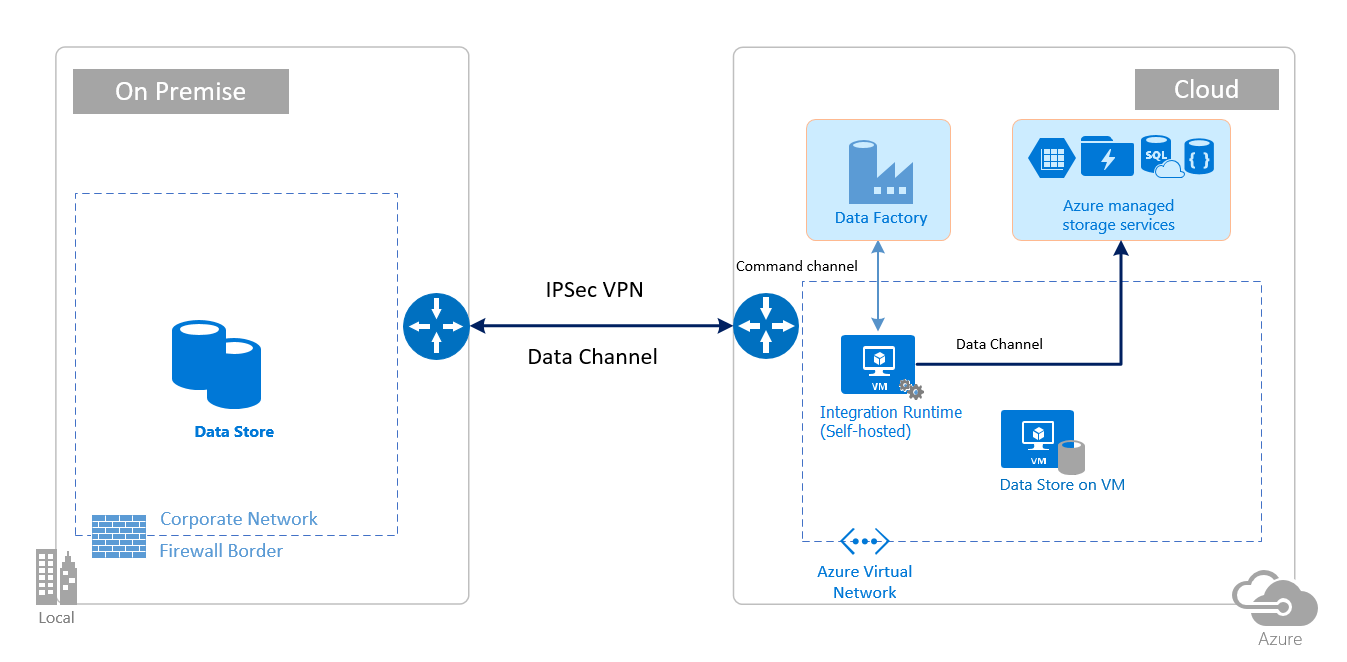

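Hybrid scenarios require the self-hosted integration runtime to be installed in an on-premises network, inside a virtual network (Azure), or inside a virtual private cloud (Amazon). The self-hosted integration runtime must be able to access the local data stores. For more information about the self-hosted integration runtime, see How to create and configure a self-hosted integration runtime.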

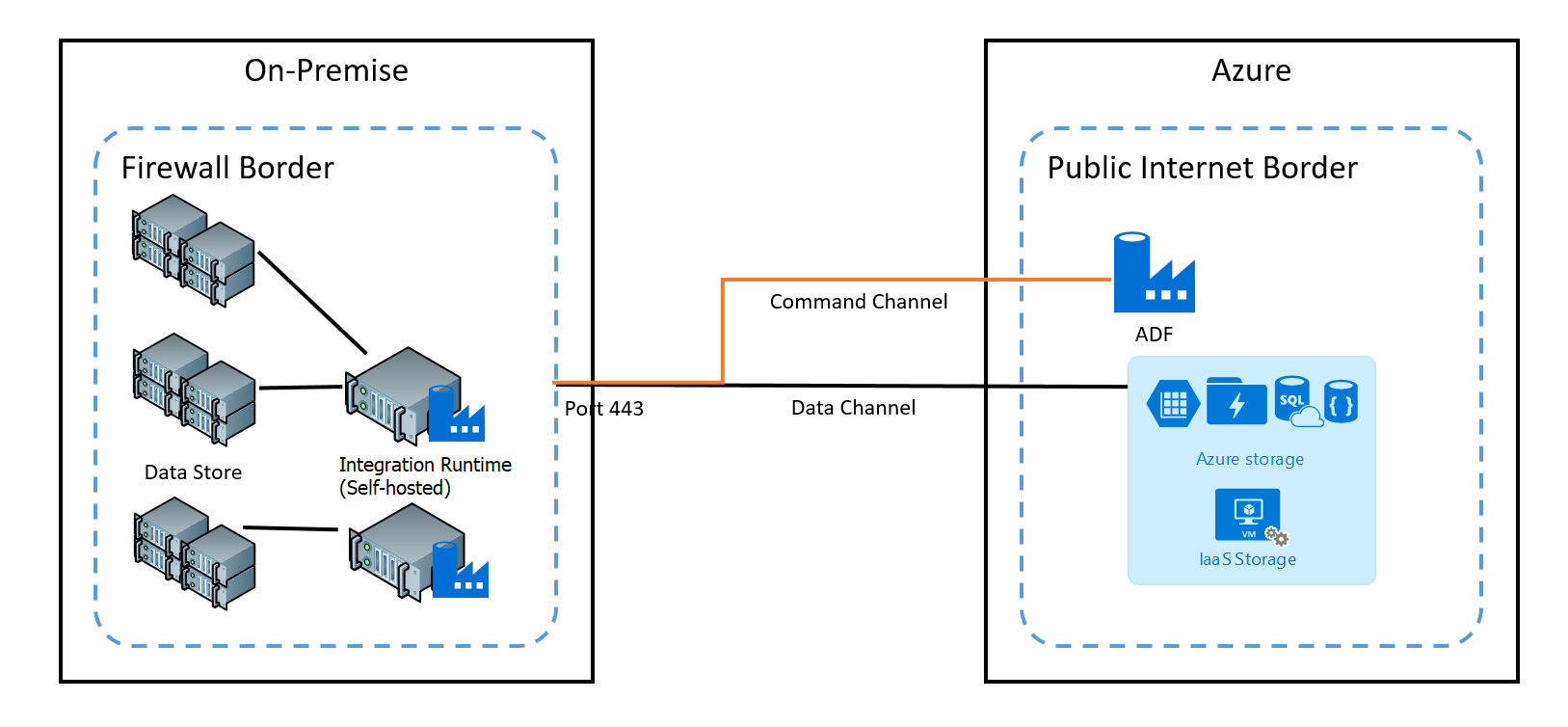

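The command channel allows communication between the data movement services in Data Factory and the self-hosted integration runtime. The communication contains information related to the activity. The data channel is used to transfer data between on-premises data stores and cloud data stores.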

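On-premises data store credentials

Credentials can be stored within Data Factory or referenced by Data Factory at run time from Azure Key Vault. If you store credentials within Data Factory, they are always stored encrypted on the self-hosted integration runtime.

- Store credentials locally. If you use the Set-AzDataFactoryV2LinkedService cmdlet directly with the connection strings and credentials inline in the JSON, the linked service is encrypted and stored on the self-hosted integration runtime. In this case, the credentials flow through the Azure backend service, which is extremely secure, to the self-hosted integration runtime machine, where they are finally encrypted and stored. The self-hosted integration runtime uses Windows DPAPI to encrypt the sensitive data and credential information.

- Store credentials in Azure Key Vault. You can also store the data store's credentials in Azure Key Vault. Data Factory retrieves the credentials during the execution of an activity. For more information, see Store credentials in Azure Key Vault.

- Store credentials locally without flowing the credentials through the Azure backend to the self-hosted integration runtime. If you want to encrypt and store credentials locally on the self-hosted integration runtime without having them flow through the Data Factory backend, follow the steps in Encrypt credentials for on-premises data stores in Azure Data Factory. All connectors support this option. The self-hosted integration runtime uses Windows DPAPI to encrypt the sensitive data and credential information.

Use the New-AzDataFactoryV2LinkedServiceEncryptedCredential cmdlet to encrypt linked service credentials and the sensitive details in the linked service. You can then use the returned JSON (with the EncryptedCredential element in the connection string) to create a linked service by using the Set-AzDataFactoryV2LinkedService cmdlet.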

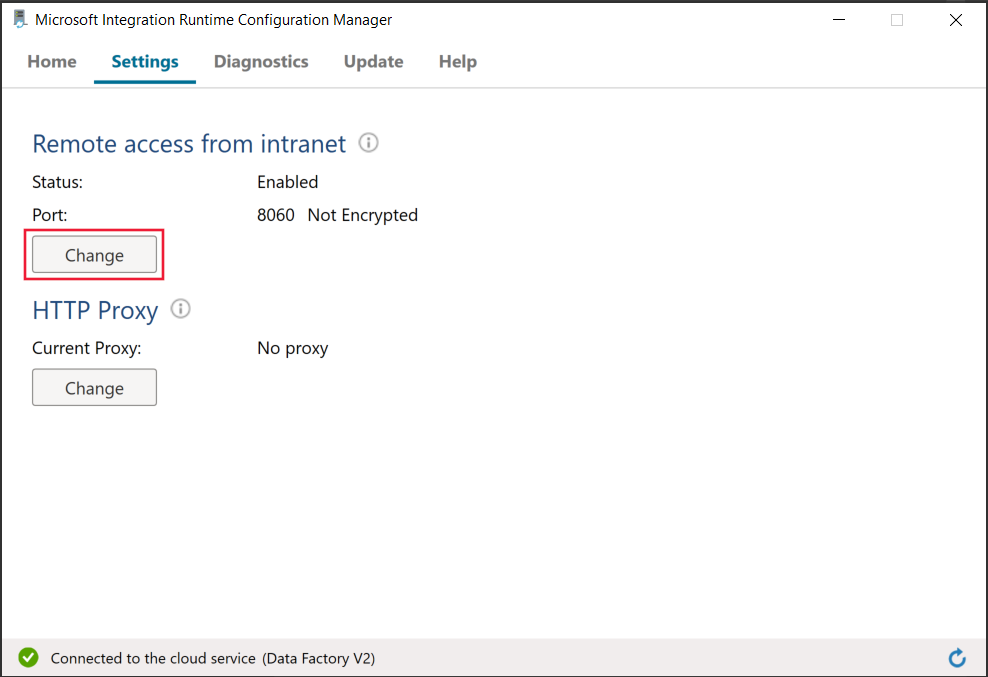

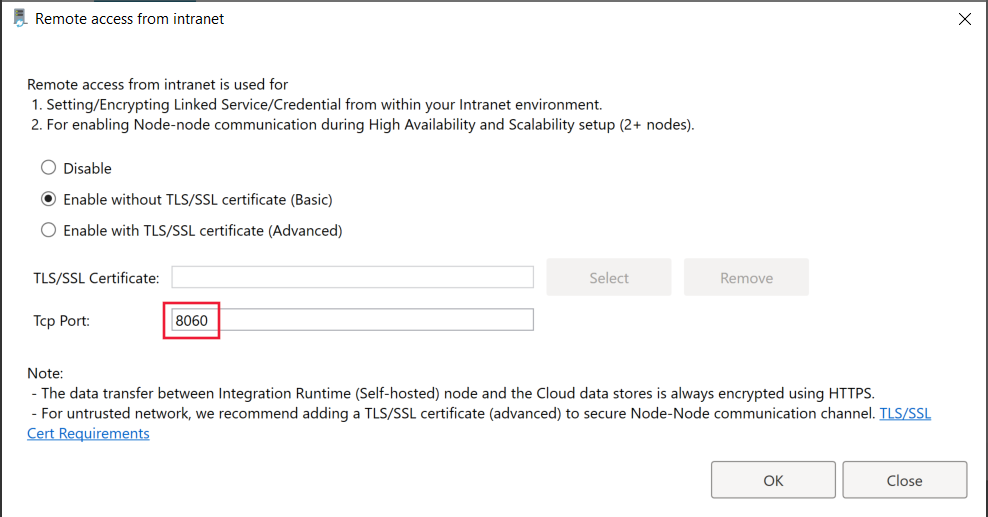

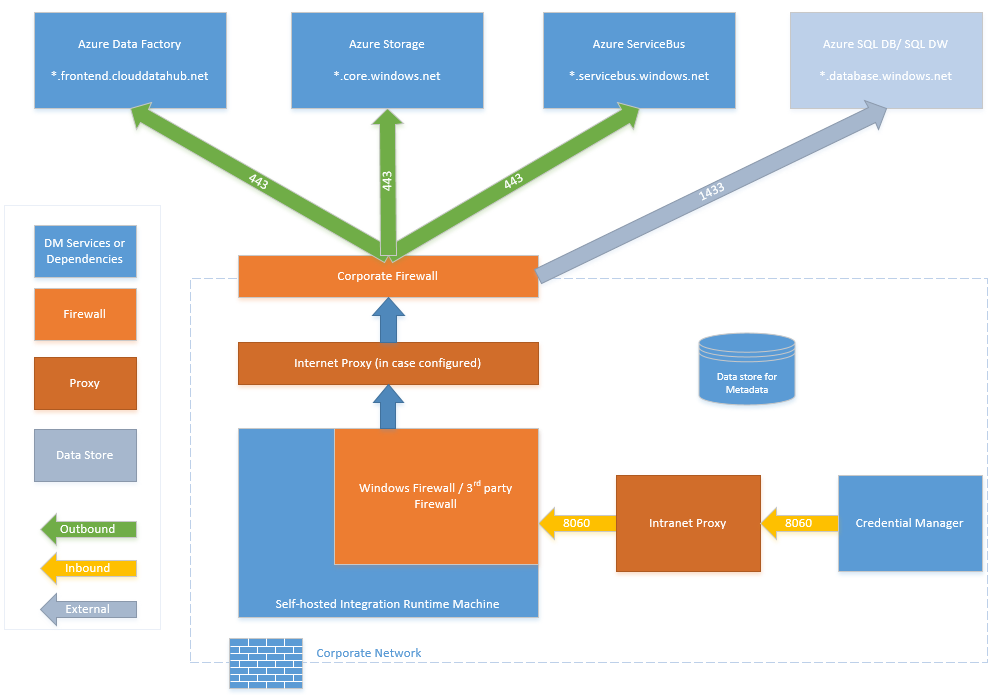

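Ports used when encrypting a linked service on the self-hosted integration runtime

By default, when remote access from the intranet is enabled, PowerShell uses port 8060 on the machine with the self-hosted integration runtime for secure communication. If necessary, you can change this port on the Settings tab of the Integration Runtime Configuration Manager.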

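Encryption in transit

All data transfers go through secure HTTPS and TLS over TCP channels, which helps prevent man-in-the-middle attacks during communication with Azure services.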

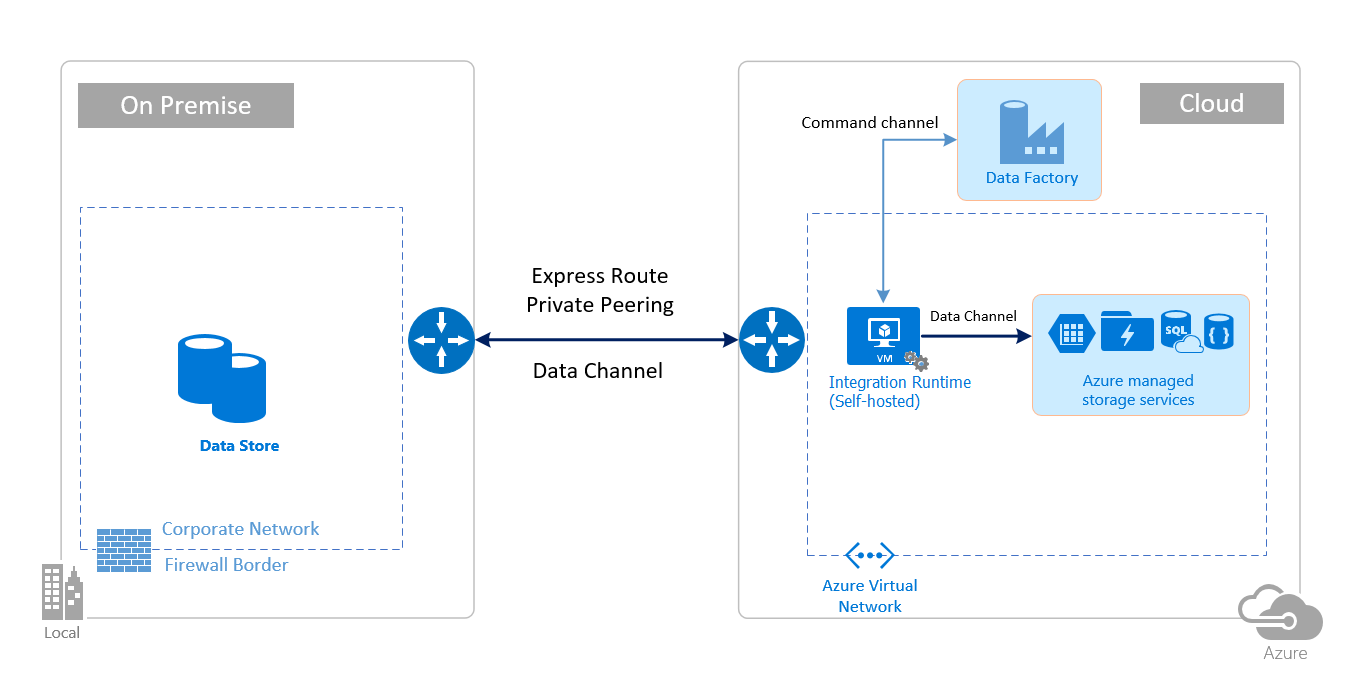

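You can also use IPsec VPN or Azure ExpressRoute to further secure the communication channel between your on-premises network and Azure.

Azure Virtual Network is a logical representation of your network in the cloud. You can connect an on-premises network to your virtual network by setting up IPsec VPN (site-to-site) or ExpressRoute (private peering).

The following table summarizes the network and self-hosted integration runtime configuration recommendations, based on different combinations of source and destination locations for hybrid data movement.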

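| Source | Destination | Network configuration | Integration runtime setup |
|---|---|---|---|
| On-premises | Virtual machines and cloud services deployed in virtual networks | IPsec VPN (point-to-site or site-to-site) | The self-hosted integration runtime should be installed on an Azure virtual machine in the virtual network. |
| On-premises | Virtual machines and cloud services deployed in virtual networks | ExpressRoute (private peering) | The self-hosted integration runtime should be installed on an Azure virtual machine in the virtual network. |
| On-premises | Azure-based services that have a public endpoint | ExpressRoute (Microsoft peering) | The self-hosted integration runtime can be installed on-premises or on an Azure virtual machine. |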

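The following images show the use of the self-hosted integration runtime for moving data between an on-premises database and Azure services by using ExpressRoute and IPsec VPN (with Azure Virtual Network):

ExpressRoute

IPsec VPN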

Firewall configurations and allow list setup for IP addresses

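Note

For details about data access strategies through Azure Data Factory, see this article.

Firewall requirements for on-premises/private network

In an enterprise, a corporate firewall runs on the central router of the organization. Windows Firewall runs as a daemon on the local machine on which the self-hosted integration runtime is installed.

The following table provides the outbound port and domain requirements for corporate firewalls: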

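| Domain names | Outbound ports | Description |
|---|---|---|
| *.servicebus.chinacloudapi.cn | 443 | Required by the self-hosted integration runtime for interactive authoring. |
| {datafactory}.{region}.datafactory.azure.cn or *.frontend.datamovement.azure.cn | 443 | Required by the self-hosted integration runtime to connect to the Data Factory service. For a newly created data factory, find the FQDN in your self-hosted integration runtime key; it has the format {datafactory}.{region}.datafactory.azure.cn. For an old data factory, if you don't see the FQDN in your self-hosted integration runtime key, use *.frontend.datamovement.azure.cn instead. |
| download.microsoft.com | 443 | Required by the self-hosted integration runtime for downloading updates. If you have disabled auto-update, you can skip configuring this domain. |
| *.core.chinacloudapi.cn | 443 | Used by the self-hosted integration runtime to connect to the Azure storage account when you use the staged copy feature. |
| *.database.chinacloudapi.cn | 1433 | Required only when you copy from or to Azure SQL Database or Azure Synapse Analytics; optional otherwise. To copy data to SQL Database or Azure Synapse Analytics without opening port 1433, use the staged copy feature. |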

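Note

You might have to manage ports or set up an allow list for domains at the corporate firewall level, as required by the respective data sources. This table uses only Azure SQL Database and Azure Synapse Analytics as examples.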
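After the firewall rules are in place, it can help to verify from the self-hosted integration runtime machine that the outbound ports are actually reachable. The following Python sketch attempts a plain TCP connection to each endpoint. The wildcard domains in the table cannot be probed directly, so the entries in `EXAMPLE_ENDPOINTS` are placeholders that you would replace with your own concrete host names; they are assumptions for illustration, not values from this article.

```python
import socket

# Placeholder endpoints: substitute the concrete host names for your own
# data factory, storage account, and database server before probing.
EXAMPLE_ENDPOINTS = [
    ("<datafactory>.<region>.datafactory.azure.cn", 443),
    ("<storageaccount>.blob.core.chinacloudapi.cn", 443),
    ("<server>.database.chinacloudapi.cn", 1433),
]


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


def check_endpoints(endpoints) -> dict:
    """Map 'host:port' to a reachability flag for each (host, port) pair."""
    return {f"{host}:{port}": can_connect(host, port) for host, port in endpoints}
```

A successful TCP connection shows only that the port is open along the path. It does not prove that TLS inspection or a proxy in between will let the integration runtime's traffic through.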

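The following table provides the inbound port requirements for Windows Firewall:

| Inbound ports | Description |
|---|---|
| 8060 (TCP) | Required by the PowerShell encryption cmdlet (see Encrypt credentials for on-premises data stores in Azure Data Factory) and by the credential manager application to securely set credentials for on-premises data stores on the self-hosted integration runtime. |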

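IP configurations and allow list setup in data stores

Some data stores in the cloud also require that you allow the IP address of the machine that accesses the store. Ensure that the IP address of the self-hosted integration runtime machine is allowed or configured appropriately in the firewall.

The following cloud data stores require that you allow the IP address of the self-hosted integration runtime machine. Some of these data stores might not require an allow list by default.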
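When you review a data store's firewall rules, a quick check of whether the integration runtime machine's address falls inside the allowed ranges can save a failed pipeline run. The sketch below uses Python's standard `ipaddress` module. The addresses shown are reserved documentation ranges and stand in for your own values.

```python
import ipaddress


def is_allowed(ip: str, allowed_ranges) -> bool:
    """Return True if ip falls inside any CIDR range or single address listed."""
    addr = ipaddress.ip_address(ip)
    return any(
        # strict=False accepts entries such as '203.0.113.5/24' whose host
        # bits are set, and treats a bare address as a /32 (or /128) network.
        addr in ipaddress.ip_network(entry, strict=False)
        for entry in allowed_ranges
    )


# Illustrative allow list using documentation-only address ranges.
allow_list = ["203.0.113.0/24", "198.51.100.7"]

print(is_allowed("203.0.113.42", allow_list))
print(is_allowed("192.0.2.10", allow_list))
```

The address to test is the public IP that the data store sees, which may be a NAT or proxy address rather than the machine's local one.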

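Frequently asked questions

Can the self-hosted integration runtime be shared across different data factories?

Yes. More details are provided here.

What are the port requirements for the self-hosted integration runtime to work?

The self-hosted integration runtime makes HTTP-based connections to access the internet. Outbound port 443 must be open for the self-hosted integration runtime to make this connection. Open inbound port 8060 only at the machine level (not at the corporate firewall level) for the credential manager application. If Azure SQL Database or Azure Synapse Analytics is used as the source or the destination, you also need to open port 1433. For more information, see the Firewall configurations and allow list setup for IP addresses section.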

Related content

For information about Azure Data Factory copy activity performance, see the Copy activity performance and tuning guide.