APPLIES TO: Azure Data Factory, Azure Synapse Analytics

本文概述如何排查 Azure 数据工厂中复制活动的性能问题。

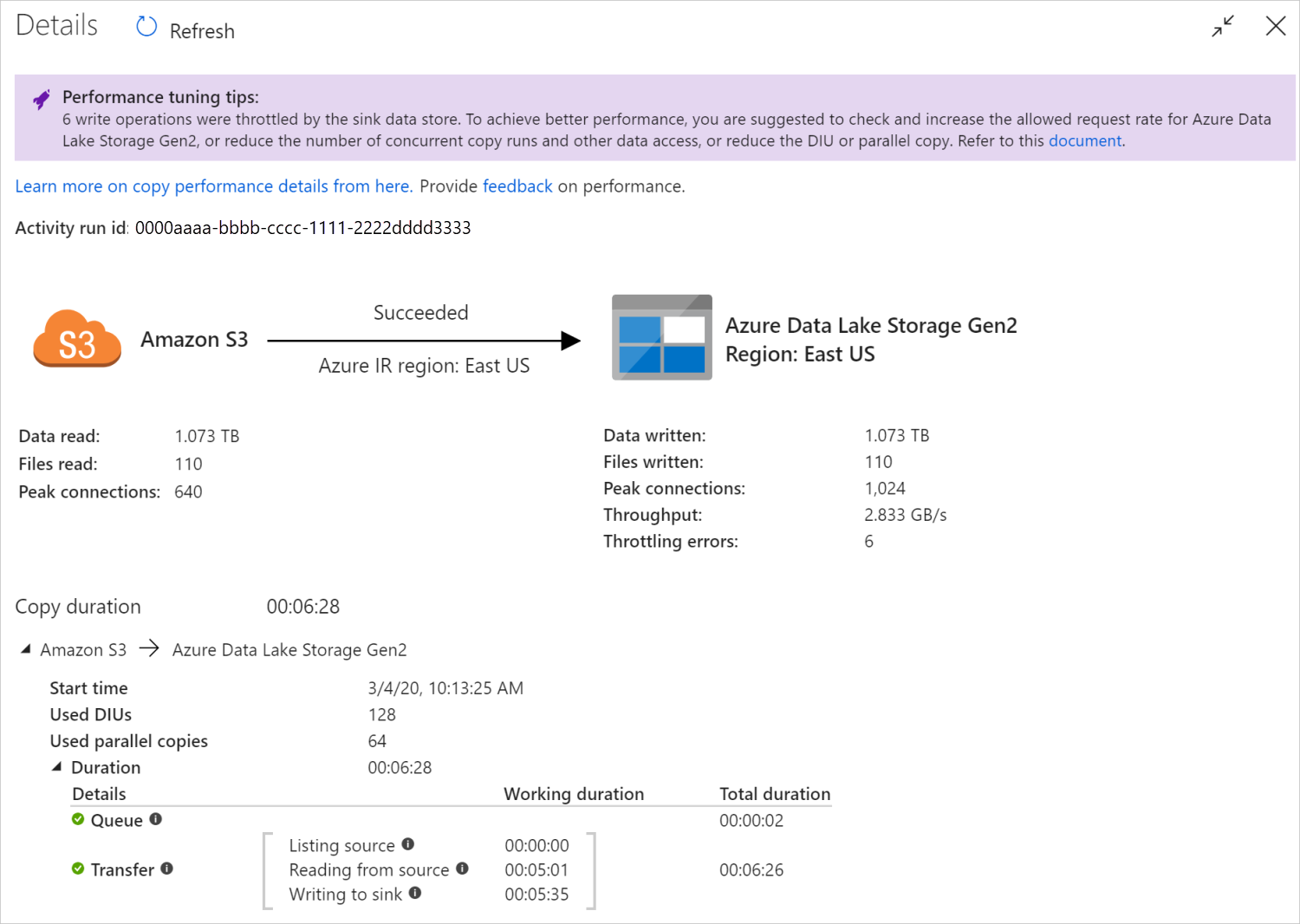

运行复制活动后,可以在复制活动监视视图中收集运行结果和性能统计信息。 下图显示了一个示例。

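Performance tuning tips

In some cases, when you run a copy activity, you see "Performance tuning tips" at the top, as shown in the preceding image. The tips tell you the bottleneck the service identified for this particular copy run, along with suggestions on how to boost copy throughput. Try making the recommended change, then run the copy again.

For reference, the performance tuning tips currently cover the following cases:

| Category | Performance tuning tips |
|---|---|
| Data store specific | Loading data into Azure Synapse Analytics: use PolyBase, or the COPY statement if PolyBase isn't available. |
| | Copying data from/to Azure SQL Database: when DTU utilization is high, upgrade to a higher tier. |
| | Copying data from/to Azure Cosmos DB: when RU utilization is high, upgrade to a larger RU. |
| | Copying data from SAP Table: when copying a large amount of data, use the SAP connector's partition option to enable parallel load and increase the maximum partition number. |
| | Ingesting data from Amazon Redshift: use UNLOAD if it isn't already used. |
| Data store throttling | If the data store throttles many read/write operations during copy, check and increase the allowed request rate for the data store, or reduce the concurrent workload. |
| Integration runtime | If you use a Self-hosted Integration Runtime (IR) and the copy activity waits a long time in the queue until the IR has resources available to execute it, scale out/up your IR. |
| | If you use an Azure Integration Runtime in a non-optimal region, resulting in slow reads/writes, configure the copy to use an IR in another region. |
| Fault tolerance | If you configure fault tolerance and skipping incompatible rows slows performance, make sure the source and sink data are compatible. |
| Staged copy | If staged copy is configured but doesn't help for your source-sink pair, remove it. |
| Resume | If the copy activity is resumed from the last failure point but you changed the DIU setting after the original run, note that the new DIU setting doesn't take effect. |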

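Understand copy activity execution details

The execution details and durations at the bottom of the copy activity monitoring view describe the key stages your copy activity goes through (see the example at the beginning of this article). They're especially useful for troubleshooting copy performance. The bottleneck of your copy run is the stage with the longest duration. Refer to the following table for each stage's definition. The troubleshooting sections for copy activity on Azure IR and on Self-hosted IR explain how to use this information.

| Stage | Description |
|---|---|
| Queue | The time elapsed before the copy activity actually starts on the integration runtime. |
| Pre-copy script | The time elapsed between the copy activity starting on the IR and the copy activity finishing the pre-copy script in the sink data store. Applies when you configure a pre-copy script for a database sink, for example, to clean up existing data before copying new data into Azure SQL Database. |
| Transfer | The time elapsed between the end of the previous step and the IR transferring all the data from source to sink. The sub-steps under Transfer run in parallel, and some operations, such as parsing or generating file formats, aren't currently shown. Time to first byte: the time elapsed between the end of the previous step and the IR receiving the first byte from the source data store; applies to non-file-based sources. Listing source: the time spent enumerating source files or data partitions; the latter applies when you configure partition options for database sources, for example, when copying data from databases like Oracle/SAP HANA/Teradata/Netezza. Reading from source: the time spent retrieving data from the source data store. Writing to sink: the time spent writing data to the sink data store; some connectors don't currently report this metric, including Azure AI Search, Azure Data Explorer, Azure Table storage, Oracle, SQL Server, Common Data Service, Dynamics 365, Dynamics CRM, and Salesforce/Salesforce Service Cloud. |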
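This Python sketch reads the stages programmatically. It picks the longest top-level stage and, when that stage is Transfer, returns the longest Transfer sub-step. It assumes the copy activity output JSON has an `executionDetails` entry containing `profile` and `detailedDurations`. Under `profile` it expects `queue`, `preCopyScript`, and `transfer`, where each Transfer sub-step has a `workingDuration`. Under `detailedDurations` it expects `timeToFirstByte`. Treat these field names as assumptions and check them against the output of one of your own runs. The sample values are made up.

```python
# ASSUMPTION: field names mirror one entry of the copy activity output's
# "executionDetails" array; verify them against a real run before relying on this.
TOP_LEVEL_STAGES = ("queue", "preCopyScript", "transfer")


def find_bottleneck(execution_detail: dict) -> str:
    """Return the longest stage, drilling into Transfer sub-steps when Transfer wins."""
    profile = execution_detail.get("profile", {})
    stages = {
        name: profile[name].get("duration", 0)
        for name in TOP_LEVEL_STAGES
        if name in profile
    }
    if not stages:
        raise ValueError("no stage durations found in profile")
    longest = max(stages, key=stages.get)
    if longest != "transfer":
        return longest

    # Transfer sub-steps run in parallel, so compare them with each other,
    # not with the overall Transfer duration.
    details = profile["transfer"].get("details", {})
    sub_steps = {name: info.get("workingDuration", 0) for name, info in details.items()}
    time_to_first_byte = execution_detail.get("detailedDurations", {}).get("timeToFirstByte")
    if time_to_first_byte is not None:
        sub_steps["timeToFirstByte"] = time_to_first_byte
    if not sub_steps:
        return "transfer"
    return "transfer." + max(sub_steps, key=sub_steps.get)


# Made-up sample shaped like one executionDetails entry (durations in seconds).
sample = {
    "profile": {
        "queue": {"status": "Completed", "duration": 2},
        "transfer": {
            "status": "Completed",
            "duration": 39,
            "details": {
                "listingSource": {"type": "AzureBlobStorage", "workingDuration": 1},
                "readingFromSource": {"type": "AzureBlobStorage", "workingDuration": 30},
                "writingToSink": {"type": "AzureSqlDatabase", "workingDuration": 12},
            },
        },
    },
    "detailedDurations": {"queuingDuration": 2, "timeToFirstByte": 0, "transferDuration": 37},
}
print(find_bottleneck(sample))  # transfer.readingFromSource
```

The function looks at Transfer sub-steps only after Transfer wins at the top level. This matches how the guidance below is organized: first by stage, then by Transfer sub-step.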

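Troubleshoot copy activity on Azure IR

Follow the performance tuning steps to plan and conduct a performance test for your scenario.

When copy activity performance doesn't meet your expectations, you can troubleshoot a single copy activity running on the Azure Integration Runtime as follows. If performance tuning tips appear in the copy monitoring view, apply the suggestions and try again. Otherwise, understand the copy activity execution details, check which stage has the longest duration, and apply the following guidance to boost copy performance:

Long "Pre-copy script" duration: the pre-copy script running on the sink database takes a long time to finish. Tune the specified pre-copy script logic to improve performance. If you need further help improving the script, contact your database team.

Long "Transfer - Time to first byte" working duration: your source query takes a long time to return any data. Check and optimize the query or server. If you need further help, contact your data store team.

Long "Transfer - Listing source" working duration: enumerating source files or source database data partitions is slow.

When copying data from a file-based source, you might use a wildcard filter on the folder path or file name (wildcardFolderPath or wildcardFileName). You might also use a file last-modified-time filter (modifiedDatetimeStart or modifiedDatetimeEnd). Either kind of filter makes the copy activity list all the files under the specified folder on the client side and then apply the filter. This file enumeration can become the bottleneck, especially when only a small set of files meets the filter rule.

Check whether you can copy files based on a datetime-partitioned file path or name instead. Doing so doesn't put a burden on the listing source side.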
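This minimal Python sketch makes the datetime-partitioned approach concrete. It computes the exact hourly folders that a time window maps to, so each copy run can point at those folders directly instead of listing and filtering a whole tree. The `<root>/yyyy/MM/dd/HH` layout and the `raw/events` root are hypothetical. In a real pipeline you would typically pass such a folder path in as a parameter.

```python
from datetime import datetime, timedelta


def partitioned_folder_paths(root: str, start: datetime, end: datetime) -> list:
    """Hourly folder paths covering the window [start, end).

    ASSUMPTION: files land under <root>/yyyy/MM/dd/HH; adjust the pattern to your layout.
    """
    # Truncate to the hour so a partial first hour is still included.
    current = start.replace(minute=0, second=0, microsecond=0)
    paths = []
    while current < end:
        paths.append(f"{root}/{current:%Y/%m/%d/%H}")
        current += timedelta(hours=1)
    return paths


print(partitioned_folder_paths("raw/events", datetime(2024, 1, 1, 22), datetime(2024, 1, 2, 1)))
# ['raw/events/2024/01/01/22', 'raw/events/2024/01/01/23', 'raw/events/2024/01/02/00']
```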

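Check whether you can use the data store's native filter instead, specifically the "prefix" for Amazon S3/Azure Blob storage/Azure Files. These are server-side filters in the data store and perform much better.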
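For comparison, this sketch builds two Azure Blob Storage source `storeSettings` objects as Python dicts. The first relies on client-side wildcard filtering. The second uses the server-side `prefix`. The property names follow our understanding of the Blob Storage connector's copy source settings. The paths are hypothetical, so confirm the exact semantics of `prefix` in the connector reference.

```python
import json


def wildcard_store_settings(folder_pattern: str, file_pattern: str) -> dict:
    # Client-side filtering: files under the folder are listed first, then matched.
    return {
        "type": "AzureBlobStorageReadSettings",
        "recursive": True,
        "wildcardFolderPath": folder_pattern,
        "wildcardFileName": file_pattern,
    }


def prefix_store_settings(prefix: str) -> dict:
    # Server-side filtering: storage returns only blobs whose names start with the prefix.
    return {"type": "AzureBlobStorageReadSettings", "prefix": prefix}


print(json.dumps({"storeSettings": prefix_store_settings("raw/events/2024/01/01/")}, indent=2))
```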

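Consider splitting a single large dataset into several smaller datasets, and let those copy jobs run concurrently, each tackling a portion of the data. You can do this with Lookup/GetMetadata + ForEach + Copy. Refer to the Copy files from multiple containers or Migrate data from Amazon S3 to ADLS Gen2 solution templates for general examples.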
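This minimal sketch shows the GetMetadata + ForEach + Copy pattern as a Python dict that mirrors the pipeline JSON. GetMetadata returns the `childItems` of a folder. ForEach then fans out one Copy run per item, and `batchCount` caps how many run at once. The pipeline, activity, and dataset names are hypothetical. The Copy activity's inputs, outputs, and `typeProperties` are omitted for brevity.

```python
def foreach_copy_pipeline(batch_count: int = 10) -> dict:
    """Skeleton of GetMetadata -> ForEach -> Copy.

    ASSUMPTION: all names are hypothetical; the inner Copy activity is left incomplete.
    """
    if not 1 <= batch_count <= 50:
        # ForEach runs at most 50 iterations concurrently.
        raise ValueError("batch_count must be between 1 and 50")
    return {
        "name": "CopyPerSubfolder",
        "properties": {
            "activities": [
                {
                    "name": "ListSubfolders",
                    "type": "GetMetadata",
                    "typeProperties": {
                        "dataset": {"referenceName": "SourceFolder", "type": "DatasetReference"},
                        "fieldList": ["childItems"],
                    },
                },
                {
                    "name": "ForEachSubfolder",
                    "type": "ForEach",
                    "dependsOn": [
                        {"activity": "ListSubfolders", "dependencyConditions": ["Succeeded"]}
                    ],
                    "typeProperties": {
                        # Each child item becomes one Copy run; runs execute in parallel.
                        "items": {
                            "value": "@activity('ListSubfolders').output.childItems",
                            "type": "Expression",
                        },
                        "isSequential": False,
                        "batchCount": batch_count,
                        "activities": [{"name": "CopyOneSubfolder", "type": "Copy"}],
                    },
                },
            ]
        },
    }
```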

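Check whether the service reports any throttling errors on the source, or whether your data store is under high utilization. If so, either reduce the workload on the data store, or ask your data store administrator to raise the throttling limit or increase the available resources.

Use an Azure IR in the same region as your source data store, or in a region close to it.

Long "Transfer - Reading from source" working duration:

Adopt connector-specific data loading best practices, if applicable. For example, when copying data from Amazon Redshift, configure the copy to use Redshift UNLOAD.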
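For the Redshift example, a copy source that opts into UNLOAD might look roughly like the dict below. `redshiftUnloadSettings` points at an Amazon S3 linked service and a bucket, which the service uses as the intermediate location for the unloaded data. The linked service name, bucket, and query are hypothetical placeholders.

```python
def redshift_unload_source(query: str, s3_linked_service: str, bucket: str) -> dict:
    """Amazon Redshift copy source that unloads through S3 (names are hypothetical)."""
    return {
        "type": "AmazonRedshiftSource",
        "query": query,
        "redshiftUnloadSettings": {
            # S3 location that UNLOAD writes to before the data is copied onward.
            "s3LinkedServiceName": {
                "referenceName": s3_linked_service,
                "type": "LinkedServiceReference",
            },
            "bucketName": bucket,
        },
    }


source = redshift_unload_source("SELECT * FROM sales", "MyS3LinkedService", "unload-bucket")
```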

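Check whether the service reports any throttling errors on the source, or whether your data store is under high utilization. If so, either reduce the workload on the data store, or ask your data store administrator to raise the throttling limit or increase the available resources.

Check your copy source and sink pattern:

If your copy pattern supports more than 4 Data Integration Units (DIUs) (refer to the Data Integration Units documentation for details), you can generally try increasing DIUs for better performance.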
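You might want to test a few explicit DIU values rather than leave the setting on auto. This sketch builds copy activity `typeProperties` with `dataIntegrationUnits` and generates a doubling series of candidate values to benchmark. The starting value, the ceiling, and the Binary source/sink pair are assumptions for illustration, not service limits or recommendations.

```python
from typing import Optional


def copy_type_properties(source: dict, sink: dict, dius: Optional[int] = None) -> dict:
    props = {"source": source, "sink": sink}
    if dius is not None:
        # Explicit DIU count; leave it out to let the service choose automatically.
        props["dataIntegrationUnits"] = dius
    return props


def diu_candidates(start: int = 4, ceiling: int = 32) -> list:
    """Doubling series of DIU values to try, e.g. 4, 8, 16, 32."""
    if start < 1:
        raise ValueError("start must be positive")
    values = []
    current = start
    while current <= ceiling:
        values.append(current)
        current *= 2
    return values


for dius in diu_candidates():
    props = copy_type_properties({"type": "BinarySource"}, {"type": "BinarySink"}, dius)
    print(props["dataIntegrationUnits"])
```

Run the same copy once per value and compare the throughput reported in the monitoring view. Stop increasing once the gains flatten out.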

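Otherwise, consider splitting a single large dataset into several smaller datasets, and let those copy jobs run concurrently, each tackling a portion of the data. You can do this with Lookup/GetMetadata + ForEach + Copy. Refer to the Copy files from multiple containers, Migrate data from Amazon S3 to ADLS Gen2, or Bulk copy with a control table solution templates for general examples.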
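With the control-table approach, each control-table row describes one slice of the source. A Lookup reads the rows, and ForEach runs one Copy per row. This sketch generates one row per month for a single large table. The column names `PartitionID`, `SourceTableName`, and `FilterQuery` are hypothetical, so match them to whatever your control table and template expect. The queries are built by string formatting, so use the function only with trusted table and column names.

```python
from datetime import date


def monthly_control_rows(table: str, column: str, year: int) -> list:
    """One hypothetical control-table row per month of `year`."""
    rows = []
    for month in range(1, 13):
        start = date(year, month, 1)
        end = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
        rows.append(
            {
                "PartitionID": month,
                "SourceTableName": table,
                # Half-open range [start, end): each source row falls in exactly one slice.
                "FilterQuery": (
                    f"SELECT * FROM {table} "
                    f"WHERE {column} >= '{start}' AND {column} < '{end}'"
                ),
            }
        )
    return rows


for row in monthly_control_rows("dbo.Sales", "LastModifiedTime", 2024)[:2]:
    print(row["FilterQuery"])
```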

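Use an Azure IR in the same region as your source data store, or in a region close to it.

Long "Transfer - Writing to sink" working duration:

Adopt connector-specific data loading best practices, if applicable. For example, when copying data into Azure Synapse Analytics, use PolyBase or the COPY statement.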
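This sketch illustrates the Synapse recommendation. The function returns an Azure Synapse Analytics (`SqlDWSink`) copy sink with either the COPY statement (`allowCopyCommand`) or PolyBase (`allowPolyBase`) enabled. Depending on your source format and location, you might also need staged copy, which isn't shown. Check the property names against the Azure Synapse Analytics connector reference before use.

```python
def synapse_sink(load_method: str) -> dict:
    """Azure Synapse Analytics copy sink using a bulk-load path.

    ASSUMPTION: property names follow the Synapse connector's sink settings;
    staging settings are not included.
    """
    if load_method == "copy":
        # Load through the COPY statement.
        return {"type": "SqlDWSink", "allowCopyCommand": True}
    if load_method == "polybase":
        # Load through PolyBase.
        return {"type": "SqlDWSink", "allowPolyBase": True}
    raise ValueError(f"unknown load method: {load_method!r}")


sink = synapse_sink("copy")
```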

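Check whether the service reports any throttling errors on the sink, or whether your data store is under high utilization. If so, either reduce the workload on the data store, or ask your data store administrator to raise the throttling limit or increase the available resources.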

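Check your copy source and sink pattern. If it supports more than 4 DIUs, try increasing DIUs. Otherwise, gradually tune the parallel copies, because too many parallel copies might even hurt performance.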

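Use an Azure IR in the same region as your sink data store, or in a region close to it.

Troubleshoot copy activity on Self-hosted IR

Follow the performance tuning steps to plan and conduct a performance test for your scenario.

When copy performance doesn't meet your expectations, you can troubleshoot a single copy activity running on a Self-hosted Integration Runtime as follows. If performance tuning tips appear in the copy monitoring view, apply the suggestions and try again. Otherwise, understand the copy activity execution details, check which stage has the longest duration, and apply the following guidance to boost copy performance:

Long "Queue" duration: the copy activity waits a long time in the queue until your Self-hosted IR has resources to execute it. Check the IR capacity and usage, and scale up or out according to your workload.

Long "Transfer - Time to first byte" working duration: your source query takes a long time to return any data. Check and optimize the query or server. If you need further help, contact your data store team.

Long "Transfer - Listing source" working duration: enumerating source files or source database data partitions is slow.

Check whether the Self-hosted IR machine has low latency connecting to the source data store. If your source is in Azure, you can use this tool to check the latency from the Self-hosted IR machine to the Azure region. The lower the latency, the better.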
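If you'd rather measure from the Self-hosted IR machine itself, one rough alternative is to time TCP connections to the data store endpoint. This Python sketch reports the median connect time in milliseconds. The figure approximates one network round trip plus connection setup, and possibly a DNS lookup. Use it to compare endpoints or regions, not as an exact latency measurement.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host: str, port: int, attempts: int = 5, timeout: float = 3.0) -> float:
    """Median time in milliseconds to open a TCP connection to host:port."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        # Open and immediately close a connection; only the handshake is timed.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)
```

For example, run `tcp_connect_latency_ms("<your-account>.blob.core.windows.net", 443)` on the Self-hosted IR machine. The host name is a placeholder for your own data store endpoint.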

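When copying data from a file-based source, you might use a wildcard filter on the folder path or file name (wildcardFolderPath or wildcardFileName). You might also use a file last-modified-time filter (modifiedDatetimeStart or modifiedDatetimeEnd). Either kind of filter makes the copy activity list all the files under the specified folder on the client side and then apply the filter. This file enumeration can become the bottleneck, especially when only a small set of files meets the filter rule.

Check whether you can copy files based on a datetime-partitioned file path or name instead. Doing so doesn't put a burden on the listing source side.

Check whether you can use the data store's native filter instead, specifically the "prefix" for Amazon S3/Azure Blob storage/Azure Files. These are server-side filters in the data store and perform much better.

Consider splitting a single large dataset into several smaller datasets, and let those copy jobs run concurrently, each tackling a portion of the data. You can do this with Lookup/GetMetadata + ForEach + Copy. Refer to the Copy files from multiple containers or Migrate data from Amazon S3 to ADLS Gen2 solution templates for general examples.

Check whether the service reports any throttling errors on the source, or whether your data store is under high utilization. If so, either reduce the workload on the data store, or ask your data store administrator to raise the throttling limit or increase the available resources.

Long "Transfer - Reading from source" working duration:

Check whether the Self-hosted IR machine has low latency connecting to the source data store. If your source is in Azure, you can use this tool to check the latency from the Self-hosted IR machine to the Azure region. The lower the latency, the better.

Check whether the Self-hosted IR machine has enough inbound bandwidth to read and transfer the data efficiently. If your source data store is in Azure, you can use this tool to check the download speed.

Check the Self-hosted IR's CPU and memory usage trend in the Azure portal -> your data factory or Synapse workspace -> Overview page. Consider scaling the IR up or out if CPU usage is high or available memory is low.

Adopt connector-specific data loading best practices, if applicable. For example:

- When copying data from Oracle, Netezza, Teradata, SAP HANA, SAP Table, or SAP Open Hub, enable data partition options to copy data in parallel.

- When copying data from HDFS, configure the copy to use DistCp.

- When copying data from Amazon Redshift, configure the copy to use Redshift UNLOAD.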
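For the Oracle case, the dict below sketches a source that enables dynamic-range partitioning. `partitionOption` and `partitionSettings` follow our understanding of the Oracle connector's copy source settings. The column name and bounds are hypothetical. The sketch omits a custom `query`, so check the connector reference for how queries combine with partitioning. The copy activity's `parallelCopies` setting is what governs how many partitions are read at the same time.

```python
def oracle_dynamic_range_source(column: str, lower, upper) -> dict:
    """Oracle copy source that splits reads by ranges of `column`.

    ASSUMPTION: column name and bounds are illustrative; no custom query is set.
    """
    if lower > upper:
        raise ValueError("lower bound must not exceed upper bound")
    return {
        "type": "OracleSource",
        "partitionOption": "DynamicRange",
        "partitionSettings": {
            "partitionColumnName": column,
            "partitionLowerBound": lower,
            "partitionUpperBound": upper,
        },
    }


source = oracle_dynamic_range_source("ORDER_ID", 1, 5_000_000)
```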

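Check whether the service reports any throttling errors on the source, or whether your data store is under high utilization. If so, either reduce the workload on the data store, or ask your data store administrator to raise the throttling limit or increase the available resources.

Check your copy source and sink pattern:

If you copy data from a data store with partition options enabled, consider gradually tuning the parallel copies. Too many parallel copies might even hurt performance.

Otherwise, consider splitting a single large dataset into several smaller datasets, and let those copy jobs run concurrently, each tackling a portion of the data. You can do this with Lookup/GetMetadata + ForEach + Copy. Refer to the Copy files from multiple containers, Migrate data from Amazon S3 to ADLS Gen2, or Bulk copy with a control table solution templates for general examples.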
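One way to tune parallel copies gradually is to run the same copy with a few `parallelCopies` values and record the throughput each run achieves. You then keep the smallest value that comes close to the best result. This helper encodes that rule. The 5% tolerance and the throughput figures are made up for illustration.

```python
def pick_parallel_copies(throughput_by_setting: dict, tolerance: float = 0.05) -> int:
    """Smallest parallelCopies value whose measured throughput is within
    `tolerance` (a fraction) of the best run. Larger settings that add little
    throughput only add load on the source and sink."""
    if not throughput_by_setting:
        raise ValueError("no measurements")
    best = max(throughput_by_setting.values())
    return min(
        setting
        for setting, throughput in throughput_by_setting.items()
        if throughput >= best * (1 - tolerance)
    )


# Made-up MB/s figures from five test runs of the same copy.
measured = {2: 40.0, 4: 75.0, 8: 96.0, 16: 100.0, 32: 82.0}
print(pick_parallel_copies(measured))  # 8
```

Here, 32 parallel copies is slower than 16. This matches the point above that too many parallel copies can hurt performance.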

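Long "Transfer - Writing to sink" working duration:

Adopt connector-specific data loading best practices, if applicable. For example, when copying data into Azure Synapse Analytics, use PolyBase or the COPY statement.

Check whether the Self-hosted IR machine has low latency connecting to the sink data store. If your sink is in Azure, you can use this tool to check the latency from the Self-hosted IR machine to the Azure region. The lower the latency, the better.

Check whether the Self-hosted IR machine has enough outbound bandwidth to transfer and write the data efficiently. If your sink data store is in Azure, you can use this tool to check the upload speed.

Check the Self-hosted IR's CPU and memory usage trend in the Azure portal -> your data factory or Synapse workspace -> Overview page. Consider scaling the IR up or out if CPU usage is high or available memory is low.

Check whether the service reports any throttling errors on the sink, or whether your data store is under high utilization. If so, either reduce the workload on the data store, or ask your data store administrator to raise the throttling limit or increase the available resources.

Consider gradually tuning the parallel copies. Too many parallel copies might even hurt performance.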

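Connector and IR performance

This section explores performance troubleshooting guidance for particular connector types and integration runtimes.

Activity execution time varies using Azure IR vs Azure virtual network IR

Activity execution time varies when the dataset is based on different integration runtimes.

Symptoms: Switching the Linked Service dropdown in the dataset runs the same pipeline activities, but with noticeably different run times. When the dataset is based on the Managed Virtual Network Integration Runtime, runs take longer on average than when it's based on the Default Integration Runtime.

Cause: The pipeline run details show that the slow pipeline runs on the Managed virtual network IR, while the normal one runs on the Azure IR. By design, the Managed virtual network IR has a longer queue time than the Azure IR. We don't reserve one compute node per service instance, so each copy activity needs a warm-up before it starts. This warm-up happens mainly during the virtual network join, which doesn't occur on the Azure IR.

Low performance when loading data into Azure SQL Database

Symptoms: Copying data into Azure SQL Database is slow.

Cause: The problem is usually caused by a bottleneck on the Azure SQL Database side. Possible causes include:

- The Azure SQL Database tier isn't high enough.

- Azure SQL Database DTU usage is close to 100%. You can monitor the performance and consider upgrading the Azure SQL Database tier.

- Indexes aren't set properly. Remove all indexes before loading data, and recreate them after the load completes.

- WriteBatchSize isn't large enough for the schema's row size. Try increasing the property.

- A stored procedure is used instead of bulk insert, which is expected to perform worse.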
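As a sketch of the last three points, this function returns an Azure SQL Database copy sink. The sink uses the plain insert write behavior rather than a stored procedure, sets an explicit `writeBatchSize`, and can optionally run a pre-copy script that disables an index before the load. The batch size and the index name are hypothetical values, not tuned recommendations. Rebuilding the index after the load would need a separate step, for example a Script or Stored Procedure activity that runs `ALTER INDEX ... REBUILD`, and that step isn't shown.

```python
from typing import Optional


def azure_sql_sink(write_batch_size: int, pre_copy_script: Optional[str] = None) -> dict:
    """Azure SQL Database copy sink without a stored procedure.

    ASSUMPTION: values are illustrative; tune writeBatchSize against your row size.
    """
    if write_batch_size < 1:
        raise ValueError("write_batch_size must be positive")
    sink = {
        "type": "AzureSqlSink",
        # Plain insert path; no sqlWriterStoredProcedureName is configured.
        "writeBehavior": "insert",
        "writeBatchSize": write_batch_size,
    }
    if pre_copy_script:
        sink["preCopyScript"] = pre_copy_script
    return sink


# Hypothetical index name. Disable only nonclustered indexes this way: disabling a
# clustered index makes the table inaccessible until the index is rebuilt.
sink = azure_sql_sink(
    write_batch_size=100_000,
    pre_copy_script="ALTER INDEX IX_Sales_OrderDate ON dbo.Sales DISABLE;",
)
```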

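Timeout or slow performance when parsing a large Excel file

Symptoms:

- When you create an Excel dataset and import schema from connection/store, preview data, or list or refresh worksheets, you may hit a timeout error if the Excel file is large.

- When you use a copy activity to copy data from a large Excel file (>= 100 MB) into another data store, you may experience slow performance or an OOM issue.

Cause:

- For operations like importing schema, previewing data, and listing worksheets on an Excel dataset, the timeout is fixed at 100 seconds. For large Excel files, these operations might not finish within the timeout.

- The copy activity reads the whole Excel file into memory, then locates the specified worksheet and cells to read the data. This behavior comes from the underlying SDK the service uses.

Resolution:

- For importing schema, you can generate a smaller sample file that's a subset of the original file, and choose "From sample file" instead of "From connection/store".

- For listing worksheets, you can instead select "Edit" in the worksheet dropdown and enter the sheet name or index.

- To copy a large Excel file (>100 MB) into another store, you can use the Data Flow Excel source, which supports streaming read and performs better.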

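The OOM issue when reading large JSON/Excel/XML files

Symptoms: When you read large JSON/Excel/XML files, you hit an out-of-memory (OOM) issue during the activity execution.

Cause:

- For large XML files: the OOM issue when reading large XML files is by design. The whole XML file must be read into memory as a single object before the schema is inferred and the data is retrieved.

- For large Excel files: the OOM issue when reading large Excel files is by design. The SDK used (POI/NPOI) must read the whole Excel file into memory before the schema is inferred and the data is retrieved.

- For large JSON files: the OOM issue when reading large JSON files is by design when the JSON file is a single object.

Recommendation: Apply one of the following options to solve the issue.

- Option 1: Register an online Self-hosted Integration Runtime on a powerful machine (high CPU/memory) to read data from your large file through the copy activity.

- Option 2: Use memory-optimized compute and a big cluster (for example, 48 cores) to read data from your large file through the mapping data flow activity.

- Option 3: Split the large file into smaller ones, then use the copy or mapping data flow activity to read the folder.

- Option 4: If the copy gets stuck or hits the OOM issue while copying an XML/Excel/JSON folder, use a ForEach activity + copy/mapping data flow activity in your pipeline to handle each file or subfolder.

- Option 5: Others:

  - For XML, use a Notebook activity with a memory-optimized cluster to read data from the files, if every file has the same schema. Currently, Apache Spark offers several ways to process XML files.

  - For JSON, use the different document forms (for example, Single document, Document per line, and Array of documents) in the JSON settings under the mapping data flow source. If the JSON file content is in the Document per line form, it consumes very little memory.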
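This Python sketch combines option 3 with the Document per line form. It splits a file containing a single top-level JSON array into several JSON Lines files, with one document per line. It still loads the whole array into memory once, so run it on a machine with enough memory. The output file names and the chunk size are assumptions.

```python
import json
from pathlib import Path


def split_json_array(src: Path, out_dir: Path, records_per_file: int) -> list:
    """Split a top-level JSON array into JSON Lines files of at most records_per_file rows."""
    if records_per_file < 1:
        raise ValueError("records_per_file must be positive")
    # Loads the whole array once; this script, not the pipeline, pays the memory cost.
    records = json.loads(src.read_text(encoding="utf-8"))
    if not isinstance(records, list):
        raise ValueError("expected a top-level JSON array")
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for offset in range(0, len(records), records_per_file):
        part = out_dir / f"part-{offset // records_per_file:05d}.jsonl"
        with part.open("w", encoding="utf-8") as fh:
            for record in records[offset:offset + records_per_file]:
                # One JSON document per line ("Document per line").
                fh.write(json.dumps(record) + "\n")
        written.append(part)
    return written
```

Point the copy or mapping data flow activity at the output folder, and in a data flow source choose the Document per line form.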

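Other references

Here are performance monitoring and tuning references for some of the supported data stores:

- Azure Blob storage: Scalability and performance targets for Blob storage, and Performance and scalability checklist for Blob storage.

- Azure Table storage: Scalability and performance targets for Table storage, and Performance and scalability checklist for Table storage.

- Azure SQL Database: You can monitor the performance and check the Database Transaction Unit (DTU) percentage.

- Azure Synapse Analytics: Its capability is measured in Data Warehouse Units (DWUs). See Manage compute power in Azure Synapse Analytics (Overview).

- Azure Cosmos DB: Performance levels in Azure Cosmos DB.

- SQL Server: Monitor and tune for performance.

- On-premises file server: Performance tuning for file servers.

Related content

See the other copy activity articles: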