APPLIES TO: Azure Data Factory, Azure Synapse Analytics

This article outlines how to use the copy activity in Azure Data Factory and Synapse Analytics pipelines to copy data from Teradata Vantage. It builds on the copy activity overview article.

Important

Teradata connector version 1.0 is at the removal stage. We recommend upgrading the Teradata connector from version 1.0 to version 2.0.

Supported capabilities

This Teradata connector supports the following capabilities:

| Supported capabilities | IR |
|---|---|
| Copy activity (source/-) | ① ② |
| Lookup activity | ① ② |

① Azure integration runtime ② Self-hosted integration runtime

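For a list of data stores that the copy activity supports as sources and sinks, see the Supported data stores table.

Specifically, this Teradata connector supports:

- Teradata Vantage versions 17.0, 17.10, 17.20, and 20.0 for version 2.0.

- Teradata Vantage versions 14.10, 15.0, 15.10, 16.0, 16.10, and 16.20 for version 1.0.

- Copying data by using Basic, Windows, or LDAP authentication.

- Parallel copying from a Teradata source. For more information, see the Parallel copy from Teradata section.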

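Prerequisites

If your data store is located inside an on-premises network, an Azure virtual network, or Amazon Virtual Private Cloud, you need to configure a self-hosted integration runtime to connect to it.

If your data store is a managed cloud data service, you can use the Azure Integration Runtime. If access is restricted to IPs that are approved in the firewall rules, you can add the Azure Integration Runtime IPs to the allow list.

You can also use the managed virtual network integration runtime feature in Azure Data Factory to access the on-premises network without installing and configuring a self-hosted integration runtime.

For more information about the network security mechanisms and options supported by Data Factory, see Data access strategies.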

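For version 2.0

If your self-hosted integration runtime is a version earlier than 5.56.9318.1, you must install the .NET Data Provider for Teradata, version 20.00.03.00 or later. Install it on the machine that runs the self-hosted integration runtime. Self-hosted integration runtime version 5.56.9318.1 and later includes a built-in Teradata driver, so you don't need to install one manually.

For version 1.0

If you use the self-hosted integration runtime, note that it provides a built-in Teradata driver starting from version 3.18. You don't need to manually install any driver. The driver requires "Visual C++ Redistributable 2012 Update 4" on the self-hosted integration runtime machine. If you don't have it installed yet, download it here.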

Get started

To perform the copy activity with a pipeline, you can use one of the following tools or SDKs:

Create a linked service to Teradata using UI

Use the following steps to create a linked service to Teradata in the Azure portal UI.

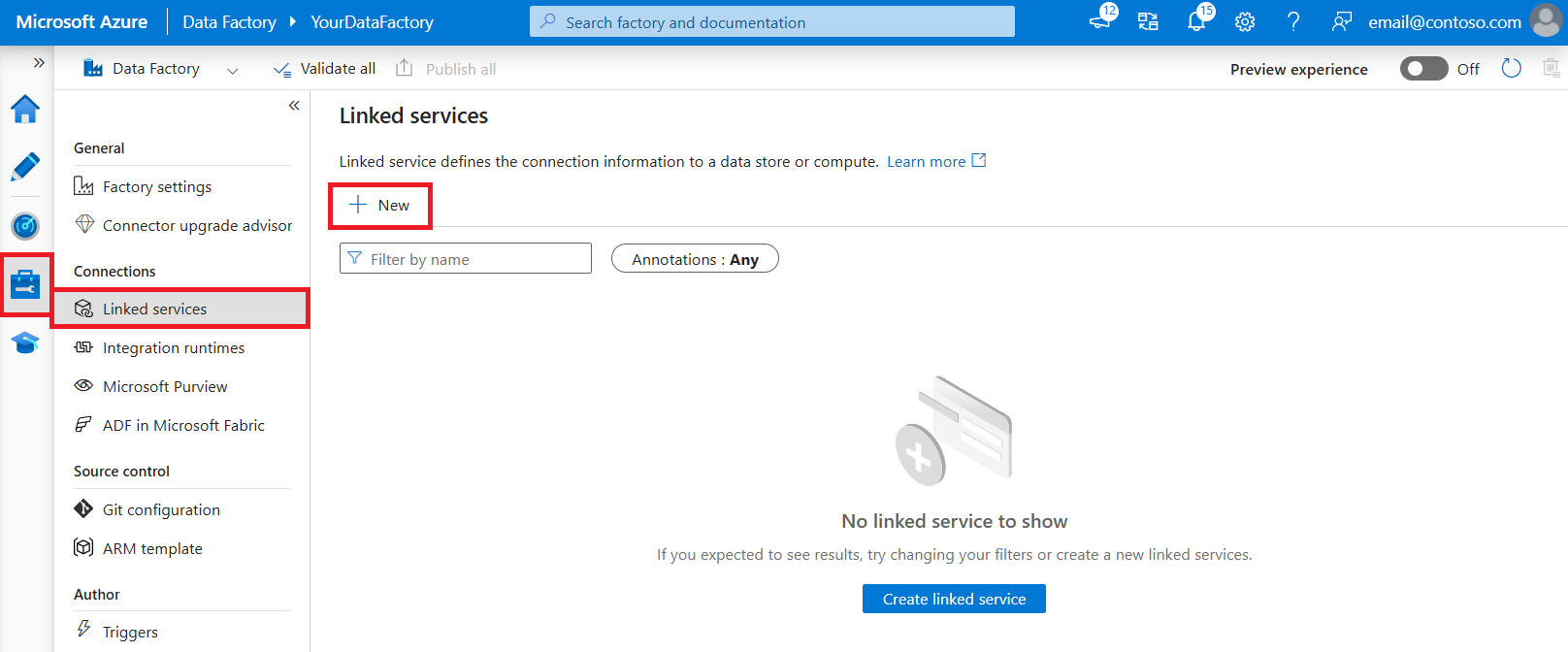

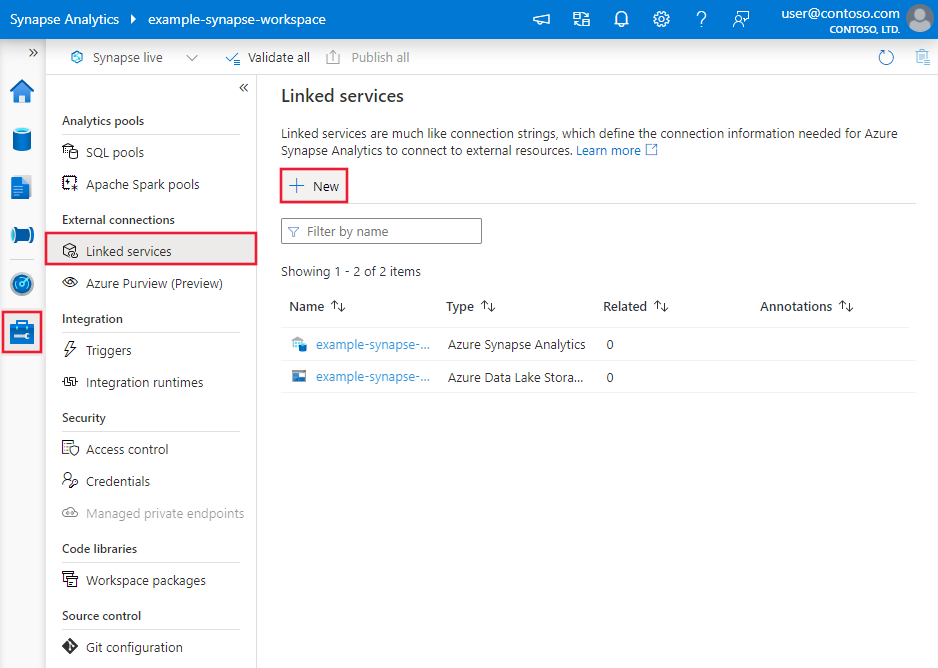

浏览到 Azure 数据工厂或 Synapse 工作区中的“管理”选项卡并选择“链接服务”,然后单击“新建”:

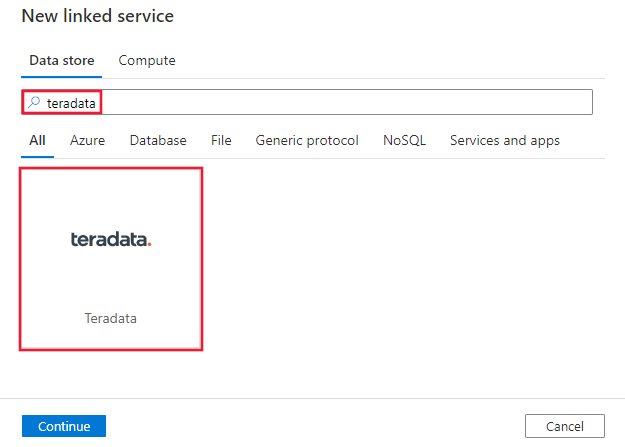

搜索 Teradata 并选择 Teradata 连接器。

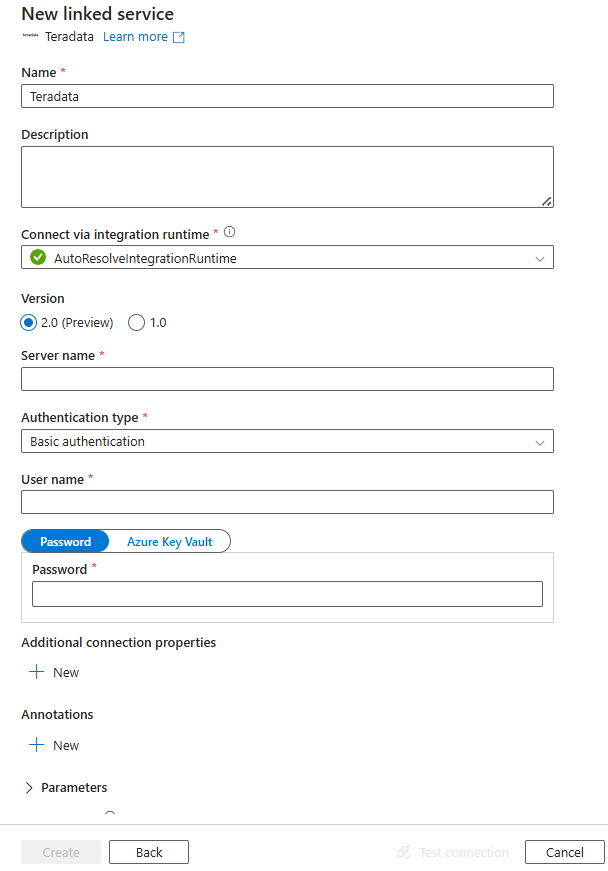

配置服务详细信息、测试连接并创建新的链接服务。

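Connector configuration details

The following sections provide details about properties that are used to define Data Factory entities specific to the Teradata connector.

Linked service properties

The Teradata connector now supports version 2.0. To upgrade your Teradata connector from version 1.0, see the Upgrade the Teradata connector section. For property details, see the corresponding sections.

Version 2.0

The Teradata linked service supports the following properties when you apply version 2.0: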

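| Property | Description | Required |
|---|---|---|
| type | The type property must be set to Teradata. | Yes |
| version | The version that you specify. The value is 2.0. | Yes |
| server | The Teradata server name. | Yes |
| authenticationType | The authentication type used to connect to Teradata. Valid values are Basic, Windows, and LDAP. | Yes |
| username | The user name used to connect to Teradata. | Yes |
| password | The password for the user account specified in username. You can also choose to reference a secret stored in Azure Key Vault. | Yes |
| connectVia | The integration runtime used to connect to the data store. Learn more in the Prerequisites section. If not specified, the default Azure Integration Runtime is used. | No |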

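You can set more connection properties in the connection string to suit your scenario:

| Property | Description | Default value |
|---|---|---|
| SslMode | The SSL mode for connections to the database. Valid values are Disable, Allow, Prefer, Require, Verify-CA, and Verify-Full. | Verify-Full |
| PortNumber | The port number used to connect to the server through non-HTTPS/TLS connections. | 1025 |
| HttpsPortNumber | The port number used to connect to the server through HTTPS/TLS connections. | 443 |
| UseDataEncryption | Specifies whether to encrypt all communication with the Teradata database. Allowed values are 0 and 1. 0 (disabled): encrypts authentication information only. 1 (enabled, default): encrypts all data passed between the driver and the database. This setting is ignored for HTTPS/TLS connections. | 1 |
| CharacterSet | The character set to use for the session, for example CharacterSet=UTF16. This value can be a user-defined character set or one of the following predefined character sets: ASCII, ARABIC1256_6A0, CYRILLIC1251_2A0, HANGUL949_7R0, HEBREW1255_5A0, KANJI932_1S0, KANJISJIS_0S, LATIN1250_1A0, LATIN1252_3A0, LATIN1254_7A0, LATIN1258_8A0, SCHINESE936_6R0, TCHINESE950_8R0, THAI874_4A0, UTF8, UTF16. | ASCII |
| MaxRespSize | The maximum size of the response buffer for SQL requests, in bytes, for example MaxRespSize=10485760. Allowed values range from 4096 to 16775168. | 524288 |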
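The following Python sketch illustrates the constraints in the table above. It merges user-supplied values onto the documented defaults and rejects values outside the documented ranges. The function name resolve_v2_options is hypothetical. The sketch checks only the documented constraints and doesn't show how the service itself passes these properties to the driver.

```python
# Hypothetical helper: validates extra version 2.0 connection properties
# against the defaults and ranges documented in the table above.
# It is not part of any Azure SDK.

VALID_SSL_MODES = {"Disable", "Allow", "Prefer", "Require", "Verify-CA", "Verify-Full"}

DEFAULTS = {
    "SslMode": "Verify-Full",
    "PortNumber": 1025,
    "HttpsPortNumber": 443,
    "UseDataEncryption": 1,
    "CharacterSet": "ASCII",
    "MaxRespSize": 524288,
}


def resolve_v2_options(overrides: dict) -> dict:
    """Merge user overrides onto the documented defaults and validate them."""
    options = {**DEFAULTS, **overrides}
    if options["SslMode"] not in VALID_SSL_MODES:
        raise ValueError(f"Unsupported SslMode: {options['SslMode']}")
    if options["UseDataEncryption"] not in (0, 1):
        raise ValueError("UseDataEncryption must be 0 or 1")
    # For version 2.0, MaxRespSize is in bytes and must fall in 4096..16775168.
    if not 4096 <= options["MaxRespSize"] <= 16775168:
        raise ValueError("MaxRespSize must be between 4096 and 16775168 bytes")
    return options
```

For example, resolve_v2_options({"MaxRespSize": 10485760}) keeps every other property at its default and accepts the documented example value.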

Example

```json
{
    "name": "TeradataLinkedService",
    "properties": {
        "type": "Teradata",
        "version": "2.0",
        "typeProperties": {
            "server": "<server name>",
            "username": "<user name>",
            "password": "<password>",
            "authenticationType": "<authentication type>"
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```
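If you generate linked service definitions from scripts, the following Python sketch builds the same version 2.0 payload as a dictionary. The helper name teradata_linked_service_v2 is hypothetical. It omits connectVia when no integration runtime is given, which follows the table's note that the default Azure Integration Runtime is used in that case. In practice, reference a secret in Azure Key Vault instead of embedding a literal password.

```python
import json
from typing import Optional

# Hypothetical helper that mirrors the version 2.0 example payload above.
VALID_AUTH_TYPES = {"Basic", "Windows", "LDAP"}


def teradata_linked_service_v2(
    name: str,
    server: str,
    username: str,
    password: str,
    authentication_type: str,
    integration_runtime: Optional[str] = None,
) -> dict:
    """Build a version 2.0 Teradata linked service definition as a dict."""
    if authentication_type not in VALID_AUTH_TYPES:
        raise ValueError(f"authenticationType must be one of {sorted(VALID_AUTH_TYPES)}")
    properties = {
        "type": "Teradata",
        "version": "2.0",
        "typeProperties": {
            "server": server,
            "username": username,
            "password": password,
            "authenticationType": authentication_type,
        },
    }
    # connectVia is optional; without it, the default Azure Integration Runtime is used.
    if integration_runtime is not None:
        properties["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return {"name": name, "properties": properties}


if __name__ == "__main__":
    ls = teradata_linked_service_v2(
        "TeradataLinkedService", "<server name>", "<user name>", "<password>", "Basic"
    )
    print(json.dumps(ls, indent=2))
```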

Version 1.0

The Teradata linked service supports the following properties when you apply version 1.0:

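| Property | Description | Required |
|---|---|---|
| type | The type property must be set to Teradata. | Yes |
| connectionString | Specifies the information needed to connect to the Teradata instance. Refer to the following samples. You can also put the password in Azure Key Vault and pull the password configuration out of the connection string. For more details, see Store credentials in Azure Key Vault. | Yes |
| username | The user name used to connect to Teradata. Applies when you use Windows authentication. | No |
| password | The password for the user account specified in username. You can also choose to reference a secret stored in Azure Key Vault. Applies when you use Windows authentication, or when you reference a password in Key Vault for basic authentication. | No |
| connectVia | The integration runtime used to connect to the data store. Learn more in the Prerequisites section. If not specified, the default Azure Integration Runtime is used. | No |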

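You can set more connection properties in the connection string to suit your scenario:

| Property | Description | Default value |
|---|---|---|
| TdmstPortNumber | The port number used to access the Teradata database. Don't change this value unless Technical Support instructs you to. | 1025 |
| UseDataEncryption | Specifies whether to encrypt all communication with the Teradata database. Allowed values are 0 and 1. 0 (disabled, default): encrypts authentication information only. 1 (enabled): encrypts all data passed between the driver and the database. | 0 |
| CharacterSet | The character set to use for the session, for example CharacterSet=UTF16. This value can be a user-defined character set or one of the following predefined character sets: ASCII, UTF8, UTF16, LATIN1252_0A, LATIN9_0A, LATIN1_0A, Shift-JIS (Windows, DOS compatible, KANJISJIS_0S), EUC (Unix compatible, KANJIEC_0U), IBM Mainframe (KANJIEBCDIC5035_0I), KANJI932_1S0, BIG5 (TCHBIG5_1R0), GB (SCHGB2312_1T0), SCHINESE936_6R0, TCHINESE950_8R0, NetworkKorean (HANGULKSC5601_2R4), HANGUL949_7R0, ARABIC1256_6A0, CYRILLIC1251_2A0, HEBREW1255_5A0, LATIN1250_1A0, LATIN1254_7A0, LATIN1258_8A0, THAI874_4A0. | ASCII |
| MaxRespSize | The maximum size of the response buffer for SQL requests, in kilobytes (KB), for example MaxRespSize=10485760. For Teradata Database version 16.00 or later, the maximum value is 7361536. For connections that use earlier versions, the maximum value is 1048576. | 65536 |
| MechanismName | To use the LDAP protocol to authenticate the connection, specify MechanismName=LDAP. | N/A |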

Example using basic authentication

```json
{
    "name": "TeradataLinkedService",
    "properties": {
        "type": "Teradata",
        "typeProperties": {
            "connectionString": "DBCName=<server>;Uid=<username>;Pwd=<password>"
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```

Example using Windows authentication

```json
{
    "name": "TeradataLinkedService",
    "properties": {
        "type": "Teradata",
        "typeProperties": {
            "connectionString": "DBCName=<server>",
            "username": "<username>",
            "password": "<password>"
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```

Example using LDAP authentication

```json
{
    "name": "TeradataLinkedService",
    "properties": {
        "type": "Teradata",
        "typeProperties": {
            "connectionString": "DBCName=<server>;MechanismName=LDAP;Uid=<username>;Pwd=<password>"
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```
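The three version 1.0 connection strings above differ only in which key-value pairs they include. The following hypothetical Python helper reproduces that shape for illustration. It assumes that no value contains a semicolon, because this article doesn't cover quoting rules for such values.

```python
from typing import Optional


def build_v1_connection_string(
    server: str,
    uid: Optional[str] = None,
    pwd: Optional[str] = None,
    ldap: bool = False,
) -> str:
    """Hypothetical helper: assemble a version 1.0 connectionString.

    Basic:   DBCName=<server>;Uid=<username>;Pwd=<password>
    LDAP:    DBCName=<server>;MechanismName=LDAP;Uid=<username>;Pwd=<password>
    Windows: DBCName=<server>  (username and password go in typeProperties)
    """
    for value in (server, uid, pwd):
        # Assumption: quoting of ';' inside values is not handled by this sketch.
        if value is not None and ";" in value:
            raise ValueError("values containing ';' are not supported by this sketch")
    parts = [f"DBCName={server}"]
    if ldap:
        parts.append("MechanismName=LDAP")
    if uid is not None:
        parts.append(f"Uid={uid}")
    if pwd is not None:
        parts.append(f"Pwd={pwd}")
    return ";".join(parts)
```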

Note

The following payload is still supported. Going forward, however, you should use the new one.

Previous payload:

```json
{
    "name": "TeradataLinkedService",
    "properties": {
        "type": "Teradata",
        "typeProperties": {
            "server": "<server>",
            "authenticationType": "<Basic/Windows>",
            "username": "<username>",
            "password": {
                "type": "SecureString",
                "value": "<password>"
            }
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```

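Dataset properties

This section provides a list of properties supported by the Teradata dataset. For a full list of sections and properties available for defining datasets, see Datasets.

To copy data from Teradata, the following properties are supported: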

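| Property | Description | Required |
|---|---|---|
| type | The type property of the dataset must be set to TeradataTable. | Yes |
| database | The name of the Teradata instance. | No (if "query" in the activity source is specified) |
| table | The name of the table in the Teradata instance. | No (if "query" in the activity source is specified) |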

Example:

```json
{
    "name": "TeradataDataset",
    "properties": {
        "type": "TeradataTable",
        "typeProperties": {},
        "schema": [],
        "linkedServiceName": {
            "referenceName": "<Teradata linked service name>",
            "type": "LinkedServiceReference"
        }
    }
}
```

Note

The RelationalTable type dataset is still supported. However, we recommend that you use the new dataset.

Previous payload:

```json
{
    "name": "TeradataDataset",
    "properties": {
        "type": "RelationalTable",
        "linkedServiceName": {
            "referenceName": "<Teradata linked service name>",
            "type": "LinkedServiceReference"
        },
        "typeProperties": {}
    }
}
```

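Copy activity properties

This section provides a list of properties supported by the Teradata source. For a full list of sections and properties available for defining activities, see Pipelines.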

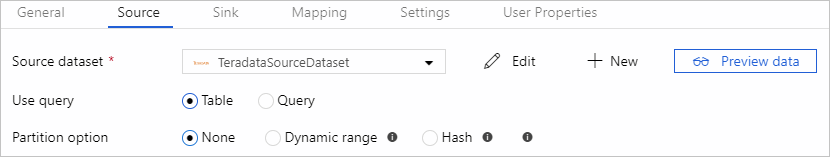

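Teradata as source

Tip

To learn more about how to load data from Teradata efficiently by using data partitioning, see the Parallel copy from Teradata section.

To copy data from Teradata, the following properties are supported in the copy activity source section: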

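| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity source must be set to TeradataSource. | Yes |
| query | Use the custom SQL query to read data, for example "SELECT * FROM MyTable". When you enable partitioned load, you need to hook the corresponding built-in partition parameters into your query. For examples, see the Parallel copy from Teradata section. | No (if table in the dataset is specified) |
| partitionOption | Specifies the data partitioning option used to load data from Teradata. Allowed values are None (default), Hash, and DynamicRange. When a partition option is enabled (that is, not None), the parallelCopies setting on the copy activity controls the degree of parallelism for loading data from Teradata. | No |
| partitionSettings | Specifies the group of settings for data partitioning. Applies when the partition option isn't None. | No |
| partitionColumnName | Specifies the name of the source column used by range partitioning or hash partitioning for parallel copy. If not specified, the primary index of the table is autodetected and used as the partition column. Applies when the partition option is Hash or DynamicRange. If you use a query to retrieve the source data, hook ?AdfHashPartitionCondition or ?AdfRangePartitionColumnName into the WHERE clause. See the example in the Parallel copy from Teradata section. | No |
| partitionUpperBound | The maximum value of the partition column to copy data out. Applies when the partition option is DynamicRange. If you use a query to retrieve the source data, hook ?AdfRangePartitionUpbound into the WHERE clause. For an example, see the Parallel copy from Teradata section. | No |
| partitionLowerBound | The minimum value of the partition column to copy data out. Applies when the partition option is DynamicRange. If you use a query to retrieve the source data, hook ?AdfRangePartitionLowbound into the WHERE clause. For an example, see the Parallel copy from Teradata section. | No |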
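When partitionOption isn't None, a custom query must reference the matching built-in partition parameters. The following Python sketch reports which parameters a query is missing. The function name is hypothetical. For DynamicRange, the sketch treats all three range parameters as required, matching the sample query in the Parallel copy from Teradata section.

```python
# Hypothetical pre-flight check for custom Teradata source queries.
# Assumption: DynamicRange queries reference all three range parameters,
# as the sample query in this article does.
REQUIRED_PLACEHOLDERS = {
    "None": [],
    "Hash": ["?AdfHashPartitionCondition"],
    "DynamicRange": [
        "?AdfRangePartitionColumnName",
        "?AdfRangePartitionUpbound",
        "?AdfRangePartitionLowbound",
    ],
}


def missing_partition_placeholders(query: str, partition_option: str = "None") -> list:
    """Return the built-in partition parameters that `query` doesn't reference."""
    if partition_option not in REQUIRED_PLACEHOLDERS:
        raise ValueError(f"Unknown partitionOption: {partition_option}")
    return [p for p in REQUIRED_PLACEHOLDERS[partition_option] if p not in query]
```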

Note

The RelationalSource type copy source is still supported, but it doesn't support the new built-in parallel load from Teradata (partition options). We recommend that you use the new TeradataSource type instead.

Example: copy data by using a basic query without partition

```json
"activities":[
    {
        "name": "CopyFromTeradata",
        "type": "Copy",
        "inputs": [
            {
                "referenceName": "<Teradata input dataset name>",
                "type": "DatasetReference"
            }
        ],
        "outputs": [
            {
                "referenceName": "<output dataset name>",
                "type": "DatasetReference"
            }
        ],
        "typeProperties": {
            "source": {
                "type": "TeradataSource",
                "query": "SELECT * FROM MyTable"
            },
            "sink": {
                "type": "<sink type>"
            }
        }
    }
]
```

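Parallel copy from Teradata

The Teradata connector provides built-in data partitioning to copy data from Teradata in parallel. You can find the data partitioning options on the "Source" tab of the copy activity.

When you enable partitioned copy, the service runs parallel queries against your Teradata source to load data by partitions. The parallelCopies setting on the copy activity controls the degree of parallelism. For example, if you set parallelCopies to 4, the service generates and runs four queries in parallel, based on your specified partition option and settings. Each query retrieves a portion of the data from Teradata.

We recommend that you enable parallel copy with data partitioning, especially when you load large amounts of data from Teradata. The following are suggested configurations for different scenarios. When you copy data into a file-based data store, write to a folder as multiple files by specifying only the folder name. This performs better than writing to a single file.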

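| Scenario | Suggested settings |
|---|---|
| Full load from a large table. | Partition option: Hash. During execution, the service automatically detects the primary index column, applies a hash against it, and copies data by partitions. |
| Load a large amount of data by using a custom query. | Partition option: Hash. Query: SELECT * FROM <TABLENAME> WHERE ?AdfHashPartitionCondition AND <your_additional_where_clause>. Partition column: Specify the column used to apply the hash partition. If not specified, the service automatically detects the PK column of the table you specified in the Teradata dataset. During execution, the service replaces ?AdfHashPartitionCondition with the hash partition logic and sends the query to Teradata. |
| Load a large amount of data by using a custom query, with an integer column that has evenly distributed values for range partitioning. | Partition option: Dynamic range partition. Query: SELECT * FROM <TABLENAME> WHERE ?AdfRangePartitionColumnName <= ?AdfRangePartitionUpbound AND ?AdfRangePartitionColumnName >= ?AdfRangePartitionLowbound AND <your_additional_where_clause>. Partition column: Specify the column used to partition data. You can partition against a column of integer data type. Partition upper bound and partition lower bound: Specify these if you want to filter against the partition column to retrieve only data between the lower and upper bounds. During execution, the service replaces ?AdfRangePartitionColumnName, ?AdfRangePartitionUpbound, and ?AdfRangePartitionLowbound with the actual column name and value ranges for each partition, and sends the queries to Teradata. For example, suppose the partition column "ID" has a lower bound of 1, an upper bound of 80, and parallel copy set to 4. The service then retrieves data in four partitions, with IDs in [1, 20], [21, 40], [41, 60], and [61, 80], respectively. |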
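The following Python sketch reproduces the dynamic range example in the table above (lower bound 1, upper bound 80, parallel copy 4). It approximates the split only, and the function name is hypothetical. The article doesn't document what happens when the range doesn't divide evenly. The remainder handling here, where earlier partitions get one extra value, is an assumption.

```python
def range_partitions(lower: int, upper: int, parallel_copies: int) -> list:
    """Hypothetical sketch: split the inclusive range [lower, upper] into
    contiguous integer ranges, one per parallel copy.

    Assumption: an even-width split where earlier ranges absorb any remainder;
    the service's exact rounding behavior is not documented here.
    """
    if upper < lower or parallel_copies < 1:
        raise ValueError("need lower <= upper and parallel_copies >= 1")
    total = upper - lower + 1
    count = min(parallel_copies, total)  # never create empty partitions
    base, extra = divmod(total, count)
    ranges = []
    start = lower
    for i in range(count):
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges
```

For example, range_partitions(1, 80, 4) returns [(1, 20), (21, 40), (41, 60), (61, 80)], which matches the ranges described in the table.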

Example: query with hash partition

```json
"source": {
    "type": "TeradataSource",
    "query": "SELECT * FROM <TABLENAME> WHERE ?AdfHashPartitionCondition AND <your_additional_where_clause>",
    "partitionOption": "Hash",
    "partitionSettings": {
        "partitionColumnName": "<hash_partition_column_name>"
    }
}
```
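Conceptually, hash partitioning assigns every row to exactly one of N buckets based on a hash of the partition column. As a result, the N parallel queries together cover the table without overlap. The following Python sketch illustrates only that general idea, using SHA-256 as a deterministic hash. The helper names are hypothetical. This article doesn't document the actual condition the service substitutes for ?AdfHashPartitionCondition, so don't treat the sketch as the service's logic.

```python
import hashlib
from collections import defaultdict


def hash_bucket(value, partitions: int) -> int:
    """Hypothetical: map a partition-column value to one of `partitions` buckets.

    SHA-256 is used only for illustration; it is not Teradata's row hash.
    """
    if partitions < 1:
        raise ValueError("partitions must be >= 1")
    digest = hashlib.sha256(str(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


def split_by_hash(rows, column: str, partitions: int) -> dict:
    """Group rows by bucket so that each row lands in exactly one bucket."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[hash_bucket(row[column], partitions)].append(row)
    return dict(buckets)
```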

Example: query with dynamic range partition

```json
"source": {
    "type": "TeradataSource",
    "query": "SELECT * FROM <TABLENAME> WHERE ?AdfRangePartitionColumnName <= ?AdfRangePartitionUpbound AND ?AdfRangePartitionColumnName >= ?AdfRangePartitionLowbound AND <your_additional_where_clause>",
    "partitionOption": "DynamicRange",
    "partitionSettings": {
        "partitionColumnName": "<dynamic_range_partition_column_name>",
        "partitionUpperBound": "<upper_value_of_partition_column>",
        "partitionLowerBound": "<lower_value_of_partition_column>"
    }
}
```
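To show roughly what the service sends to Teradata for each partition, the following Python sketch substitutes the three range parameters into a query template for a given list of ranges. The function name is hypothetical, and the exact SQL text that the service generates may differ.

```python
def render_range_queries(template: str, column: str, ranges) -> list:
    """Hypothetical sketch: expand a DynamicRange query template into one
    query per (low, high) partition range.

    The three placeholder names don't prefix each other, so plain string
    replacement is safe here.
    """
    queries = []
    for low, high in ranges:
        query = (
            template.replace("?AdfRangePartitionColumnName", column)
            .replace("?AdfRangePartitionUpbound", str(high))
            .replace("?AdfRangePartitionLowbound", str(low))
        )
        queries.append(query)
    return queries
```

For example, with the ID column and the ranges (1, 20) and (21, 40), the sample query without the additional WHERE clause expands to SELECT * FROM <TABLENAME> WHERE ID <= 20 AND ID >= 1 for the first partition.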

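Data type mapping for Teradata

When you copy data from Teradata, the following mappings apply from Teradata data types to the internal data types used by the service. To learn how the copy activity maps the source schema and data types to the sink, see Schema and data type mappings.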

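| Teradata data type | Interim service data type (for version 2.0) | Interim service data type (for version 1.0) |
|---|---|---|
| BigInt | Int64 | Int64 |
| Blob | Byte[] | Byte[] |
| Byte | Byte[] | Byte[] |
| ByteInt | Int16 | Int16 |
| Char | String | String |
| Clob | String | String |
| Date | Date | DateTime |
| Decimal | Decimal | Decimal |
| Double | Double | Double |
| Graphic | String | Not supported. Apply an explicit cast in the source query. |
| Integer | Int32 | Int32 |
| Interval Day | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Day To Hour | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Day To Minute | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Day To Second | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Hour | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Hour To Minute | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Hour To Second | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Minute | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Minute To Second | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Month | String | Not supported. Apply an explicit cast in the source query. |
| Interval Second | TimeSpan | Not supported. Apply an explicit cast in the source query. |
| Interval Year | String | Not supported. Apply an explicit cast in the source query. |
| Interval Year To Month | String | Not supported. Apply an explicit cast in the source query. |
| Number | Double | Double |
| Period(Date) | String | Not supported. Apply an explicit cast in the source query. |
| Period(Time) | String | Not supported. Apply an explicit cast in the source query. |
| Period(Time With Time Zone) | String | Not supported. Apply an explicit cast in the source query. |
| Period(Timestamp) | String | Not supported. Apply an explicit cast in the source query. |
| Period(Timestamp With Time Zone) | String | Not supported. Apply an explicit cast in the source query. |
| SmallInt | Int16 | Int16 |
| Time | Time | TimeSpan |
| Time With Time Zone | String | TimeSpan |
| Timestamp | DateTime | DateTime |
| Timestamp With Time Zone | DateTimeOffset | DateTime |
| VarByte | Byte[] | Byte[] |
| VarChar | String | String |
| VarGraphic | String | Not supported. Apply an explicit cast in the source query. |
| Xml | String | Not supported. Apply an explicit cast in the source query. |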
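When planning an upgrade, you can use a lookup table to flag columns whose interim type changes between versions, or columns that need an explicit cast under version 1.0. The following Python sketch transcribes the table above. The helper names are hypothetical.

```python
# Hypothetical transcription of the data type mapping table above.
# Each value is (interim type for version 2.0, interim type for version 1.0);
# None means version 1.0 doesn't support the type and needs an explicit cast
# in the source query.
TYPE_MAP = {
    "BigInt": ("Int64", "Int64"),
    "Blob": ("Byte[]", "Byte[]"),
    "Byte": ("Byte[]", "Byte[]"),
    "ByteInt": ("Int16", "Int16"),
    "Char": ("String", "String"),
    "Clob": ("String", "String"),
    "Date": ("Date", "DateTime"),
    "Decimal": ("Decimal", "Decimal"),
    "Double": ("Double", "Double"),
    "Graphic": ("String", None),
    "Integer": ("Int32", "Int32"),
    "Interval Day": ("TimeSpan", None),
    "Interval Day To Hour": ("TimeSpan", None),
    "Interval Day To Minute": ("TimeSpan", None),
    "Interval Day To Second": ("TimeSpan", None),
    "Interval Hour": ("TimeSpan", None),
    "Interval Hour To Minute": ("TimeSpan", None),
    "Interval Hour To Second": ("TimeSpan", None),
    "Interval Minute": ("TimeSpan", None),
    "Interval Minute To Second": ("TimeSpan", None),
    "Interval Month": ("String", None),
    "Interval Second": ("TimeSpan", None),
    "Interval Year": ("String", None),
    "Interval Year To Month": ("String", None),
    "Number": ("Double", "Double"),
    "Period(Date)": ("String", None),
    "Period(Time)": ("String", None),
    "Period(Time With Time Zone)": ("String", None),
    "Period(Timestamp)": ("String", None),
    "Period(Timestamp With Time Zone)": ("String", None),
    "SmallInt": ("Int16", "Int16"),
    "Time": ("Time", "TimeSpan"),
    "Time With Time Zone": ("String", "TimeSpan"),
    "Timestamp": ("DateTime", "DateTime"),
    "Timestamp With Time Zone": ("DateTimeOffset", "DateTime"),
    "VarByte": ("Byte[]", "Byte[]"),
    "VarChar": ("String", "String"),
    "VarGraphic": ("String", None),
    "Xml": ("String", None),
}


def interim_type(teradata_type: str, version: str = "2.0"):
    """Return the interim type, or None if that connector version doesn't support it."""
    v2, v1 = TYPE_MAP[teradata_type]
    if version == "2.0":
        return v2
    if version == "1.0":
        return v1
    raise ValueError(f"Unknown connector version: {version}")


def needs_cast_on_v1(column_types) -> list:
    """Types that require an explicit cast in the source query under version 1.0."""
    return [t for t in column_types if TYPE_MAP[t][1] is None]


def changed_between_versions() -> list:
    """Types supported by both versions whose interim type differs."""
    return [t for t, (v2, v1) in TYPE_MAP.items() if v1 is not None and v1 != v2]
```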

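Lookup activity properties

To learn details about the properties, check Lookup activity.

Upgrade the Teradata connector

Here are the steps to help you upgrade the Teradata connector:

1. On the "Edit linked service" page, select version 2.0 and configure the linked service by referring to the linked service version 2.0 properties.

2. The data type mapping for Teradata linked service version 2.0 differs from that for version 1.0. To learn the latest data type mapping, see Data type mapping for Teradata.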

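Differences between Teradata connector version 2.0 and version 1.0

Teradata connector version 2.0 offers new functionality and is compatible with most features of version 1.0. The following table shows the feature differences between version 2.0 and version 1.0.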

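| Version 2.0 | Version 1.0 |
|---|---|
| The following mappings are used from Teradata data types to interim service data types: Date -> Date; Time With Time Zone -> String; Timestamp With Time Zone -> DateTimeOffset; Graphic -> String; Interval Day -> TimeSpan; Interval Day To Hour -> TimeSpan; Interval Day To Minute -> TimeSpan; Interval Day To Second -> TimeSpan; Interval Hour -> TimeSpan; Interval Hour To Minute -> TimeSpan; Interval Hour To Second -> TimeSpan; Interval Minute -> TimeSpan; Interval Minute To Second -> TimeSpan; Interval Month -> String; Interval Second -> TimeSpan; Interval Year -> String; Interval Year To Month -> String; Number -> Double; Period(Date) -> String; Period(Time) -> String; Period(Time With Time Zone) -> String; Period(Timestamp) -> String; Period(Timestamp With Time Zone) -> String; VarGraphic -> String; Xml -> String | The following mappings are used from Teradata data types to interim service data types: Date -> DateTime; Time With Time Zone -> TimeSpan; Timestamp With Time Zone -> DateTime. Version 1.0 doesn't support some of the other types listed for version 2.0. For those types, apply an explicit cast in the source query. |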

Related content

For a list of data stores supported as sources and sinks by the copy activity, see Supported data stores.