Applies to: Azure Data Factory, Azure Synapse Analytics

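This article outlines how to use the Copy activity in Azure Data Factory and Azure Synapse to copy data from an HTTP endpoint. It builds on the Copy activity article, which presents a general overview of the Copy activity.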

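The differences among this HTTP connector, the REST connector, and the Web table connector are:

- The REST connector specifically supports copying data from RESTful APIs.

- The HTTP connector is generic and retrieves data from any HTTP endpoint, for example to download a file. Before the REST connector became available, you might have used the HTTP connector to copy data from a RESTful API. That is still supported, but it offers less functionality than the REST connector.

- The Web table connector extracts table content from an HTML web page.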

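Supported capabilities

This HTTP connector is supported for the following capabilities:

| Supported capabilities | IR |
|---|---|
| Copy activity (source/-) | ① ② |
| Lookup activity | ① ② |

① Azure integration runtime ② Self-hosted integration runtime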

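For a list of data stores that are supported as sources/sinks, see Supported data stores.

You can use this HTTP connector to:

- Retrieve data from an HTTP/S endpoint by using the HTTP GET or POST method.

- Retrieve data by using one of the following authentication types: Anonymous, Basic, Digest, Windows, or ClientCertificate.

- Copy the HTTP response as-is or parse it by using supported file formats and compression codecs.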

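Tip

To test an HTTP request for data retrieval before you configure the HTTP connector, learn about the API specification's requirements for headers and body. You can use tools such as Visual Studio, PowerShell's Invoke-RestMethod, or a web browser to validate the request.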
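As a scripted alternative to those tools, the following minimal Python sketch uses only the standard library's `urllib.request` to build the GET or POST request you plan to configure, so you can inspect its method, headers, and body before sending it. The helper names `build_probe_request` and `send_probe`, the endpoint URL, and the header values are hypothetical placeholders, not part of any Azure SDK.

```python
import urllib.request
from typing import Mapping, Optional


def build_probe_request(
    url: str,
    method: str = "GET",
    headers: Optional[Mapping[str, str]] = None,
    body: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a request mirroring the connector settings.

    Hypothetical helper for illustration only.
    """
    method = method.upper()
    if method not in ("GET", "POST"):
        # The HTTP connector issues only GET or POST requests.
        raise ValueError("method must be GET or POST")
    data = body.encode("utf-8") if body is not None else None
    return urllib.request.Request(url, data=data, headers=dict(headers or {}), method=method)


def send_probe(request: urllib.request.Request, timeout: float = 100.0) -> bytes:
    """Send the request and return the raw body (requires network access).

    100 seconds mirrors the connector's default httpRequestTimeout of 00:01:40.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


if __name__ == "__main__":
    req = build_probe_request(
        "https://example.com/api/data",  # hypothetical endpoint
        method="POST",
        headers={"x-api-key": "<API key>", "Content-Type": "application/json"},
        body='{"query": "all"}',
    )
    # Inspect the request locally; call send_probe(req) only when ready.
    print(req.get_method(), req.full_url, req.header_items())
```

Note that `urllib.request` stores header names with only the first letter capitalized (for example `X-api-key`), which does not matter to HTTP servers because header names are case-insensitive.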

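Prerequisites

If your data store is located inside an on-premises network, an Azure virtual network, or Amazon Virtual Private Cloud, you need to configure a self-hosted integration runtime to connect to it.

If your data store is a managed cloud data service, you can use the Azure Integration Runtime. If access is restricted to IPs that are approved in the firewall rules, you can add the Azure Integration Runtime IPs to the allow list.

You can also use the managed virtual network integration runtime feature in Azure Data Factory to access the on-premises network without installing and configuring a self-hosted integration runtime.

For more information about the network security mechanisms and options supported by Data Factory, see Data access strategies.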

Get started

To perform the Copy activity with a pipeline, you can use one of the following tools or SDKs:

使用 UI 创建指向 HTTP 源的链接服务

使用以下步骤在 Azure 门户 UI 中创建指向 HTTP 源的链接服务。

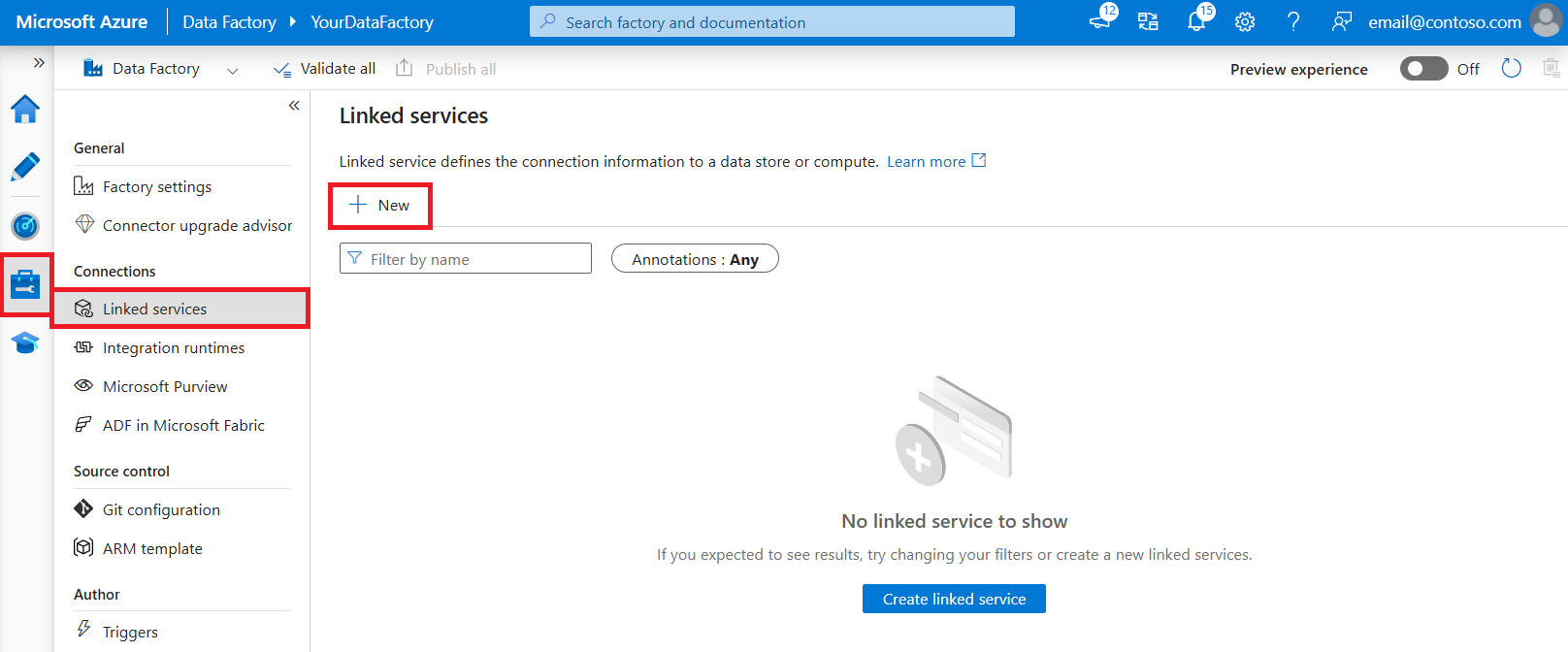

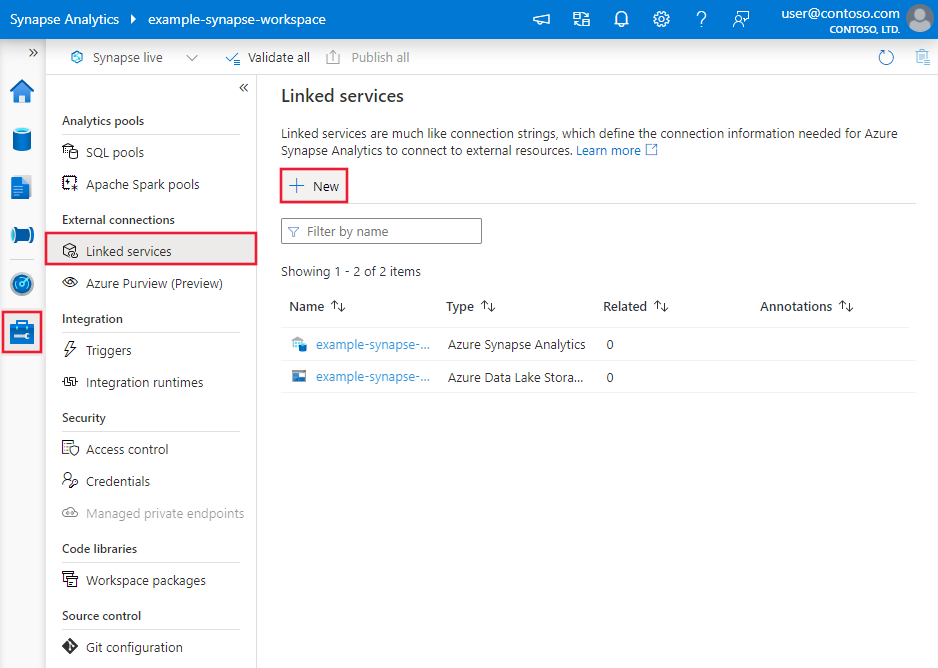

浏览到 Azure 数据工厂或 Synapse 工作区中的“管理”选项卡并选择“链接服务”,然后单击“新建”:

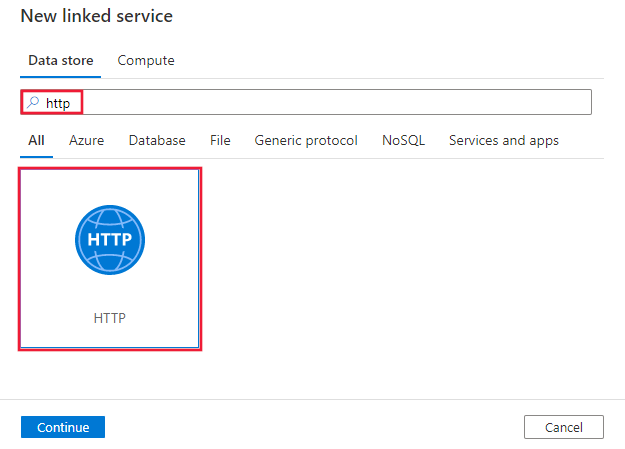

搜索 HTTP 并选择 HTTP 连接器。

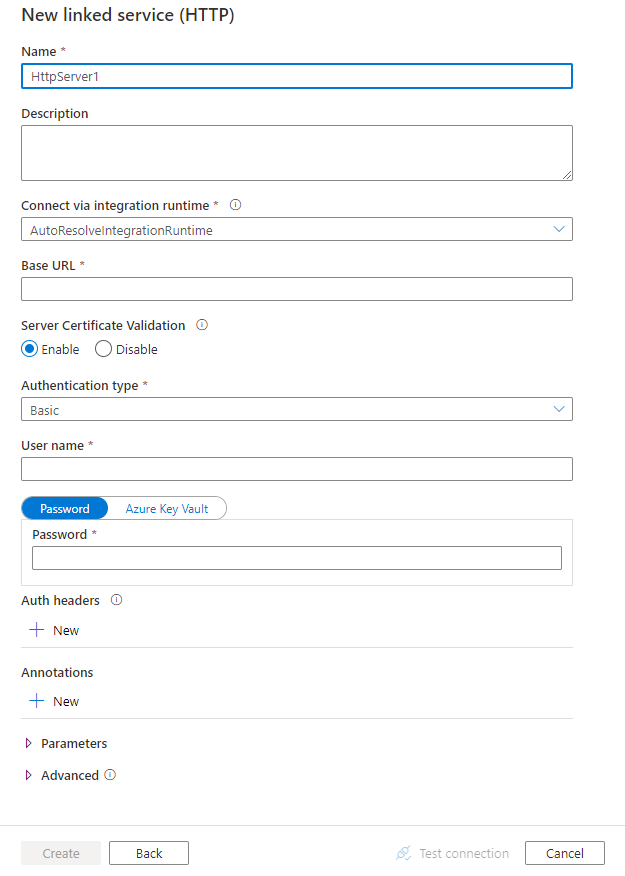

配置服务详细信息、测试连接并创建新的链接服务。

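Connector configuration details

The following sections provide details about properties you can use to define entities that are specific to the HTTP connector.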

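Linked service properties

The following properties are supported for the HTTP linked service:

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to HttpServer. | Yes |
| url | The base URL to the web server. | Yes |
| enableServerCertificateValidation | Specifies whether to enable server TLS/SSL certificate validation when you connect to an HTTP endpoint. If your HTTPS server uses a self-signed certificate, set this property to false. | No (default is true) |
| authenticationType | Specifies the authentication type. Allowed values are Anonymous, Basic, Digest, Windows, and ClientCertificate. You can additionally configure authentication headers in the authHeaders property. See the sections that follow this table for more properties and JSON samples for these authentication types. | Yes |
| authHeaders | Additional HTTP request headers for authentication. For example, to use API key authentication, you can select Anonymous as the authentication type and then specify the API key in a header. | No |
| connectVia | The integration runtime to use to connect to the data store. Learn more in the Prerequisites section. If not specified, the default Azure Integration Runtime is used. | No |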
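The rules in the table above can be expressed in code. The following Python sketch assembles an `HttpServer` linked-service definition as a dictionary that `json.dumps` can serialize. The helper name `http_linked_service` is hypothetical, the checks cover only the rules listed in the table (not the service's full validation), and authentication-specific properties such as userName or certThumbprint, described in the sections below, are left out.

```python
import json
from typing import Dict, Optional

# Allowed authenticationType values, as listed in the table.
ALLOWED_AUTH_TYPES = {"Anonymous", "Basic", "Digest", "Windows", "ClientCertificate"}


def secure_string(value: str) -> Dict[str, str]:
    """Wrap a secret in the SecureString shape used by the JSON examples."""
    return {"type": "SecureString", "value": value}


def http_linked_service(
    name: str,
    url: str,
    authentication_type: str,
    enable_server_certificate_validation: bool = True,
    auth_headers: Optional[Dict[str, str]] = None,
    integration_runtime: Optional[str] = None,
) -> Dict:
    """Build an HttpServer linked-service definition (hypothetical helper)."""
    if not url:
        raise ValueError("url is required")
    if authentication_type not in ALLOWED_AUTH_TYPES:
        raise ValueError(f"unsupported authenticationType: {authentication_type}")
    type_properties: Dict = {"url": url, "authenticationType": authentication_type}
    if not enable_server_certificate_validation:
        # Emit the property only when overriding its default of true.
        type_properties["enableServerCertificateValidation"] = False
    if auth_headers:
        type_properties["authHeaders"] = {k: secure_string(v) for k, v in auth_headers.items()}
    properties: Dict = {"type": "HttpServer", "typeProperties": type_properties}
    if integration_runtime:
        # Omitting connectVia falls back to the default Azure Integration Runtime.
        properties["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return {"name": name, "properties": properties}


if __name__ == "__main__":
    ls = http_linked_service(
        "HttpLinkedService",
        "https://example.com/",  # hypothetical endpoint
        "Anonymous",
        auth_headers={"x-api-key": "<API key>"},
    )
    print(json.dumps(ls, indent=4))
```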

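Using Basic, Digest, or Windows authentication

Set the authenticationType property to Basic, Digest, or Windows. In addition to the generic properties that are described in the preceding section, specify the following properties:

| Property | Description | Required |
|---|---|---|
| userName | The user name to use to access the HTTP endpoint. | Yes |
| password | The password for the user (the userName value). Mark this field as a SecureString type to store it securely. You can also reference a secret stored in Azure Key Vault. | Yes |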

Example

```json
{
    "name": "HttpLinkedService",
    "properties": {
        "type": "HttpServer",
        "typeProperties": {
            "authenticationType": "Basic",
            "url": "<HTTP endpoint>",
            "userName": "<user name>",
            "password": {
                "type": "SecureString",
                "value": "<password>"
            }
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```
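For intuition about what the Basic option sends, the sketch below builds the standard `Authorization: Basic` header value defined by RFC 7617, which is the Base64 encoding of `user:password`. It illustrates the HTTP scheme itself, not the connector's internal code; Digest and Windows authentication use challenge-response exchanges and are not shown. The function name `basic_auth_header` is hypothetical.

```python
import base64


def basic_auth_header(user_name: str, password: str) -> str:
    """Return an RFC 7617 Basic Authorization header value (hypothetical helper)."""
    token = base64.b64encode(f"{user_name}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


if __name__ == "__main__":
    # Credentials from the RFC's own example.
    print(basic_auth_header("Aladdin", "open sesame"))  # → Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==
```

Because Base64 is an encoding rather than encryption, Basic credentials are only protected when the endpoint uses HTTPS.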

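Using ClientCertificate authentication

To use ClientCertificate authentication, set the authenticationType property to ClientCertificate. In addition to the generic properties that are described in the preceding section, specify the following properties:

| Property | Description | Required |
|---|---|---|
| embeddedCertData | Base64-encoded certificate data. | Specify either embeddedCertData or certThumbprint. |
| certThumbprint | The thumbprint of the certificate that's installed in the certificate store of your self-hosted integration runtime machine. Applies only when the self-hosted type of integration runtime is specified in the connectVia property. | Specify either embeddedCertData or certThumbprint. |
| password | The password that's associated with the certificate. Mark this field as a SecureString type to store it securely. You can also reference a secret stored in Azure Key Vault. | No |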
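The rule that exactly one of embeddedCertData or certThumbprint is specified, and that certThumbprint applies only with a self-hosted integration runtime, can be checked before deployment. A minimal sketch, assuming the certificate is available as a PFX (PKCS #12) file whose raw bytes you Base64-encode for embeddedCertData; the helper name `client_cert_type_properties` is hypothetical.

```python
import base64
from typing import Dict, Optional


def client_cert_type_properties(
    url: str,
    pfx_bytes: Optional[bytes] = None,
    cert_thumbprint: Optional[str] = None,
    password: Optional[str] = None,
    uses_self_hosted_ir: bool = False,
) -> Dict:
    """Build typeProperties for ClientCertificate authentication (hypothetical helper)."""
    if (pfx_bytes is None) == (cert_thumbprint is None):
        raise ValueError("specify exactly one of embeddedCertData or certThumbprint")
    props: Dict = {"authenticationType": "ClientCertificate", "url": url}
    if pfx_bytes is not None:
        # Assumption: embeddedCertData holds the PFX file bytes, Base64-encoded.
        props["embeddedCertData"] = base64.b64encode(pfx_bytes).decode("ascii")
    else:
        if not uses_self_hosted_ir:
            raise ValueError("certThumbprint requires a self-hosted integration runtime in connectVia")
        props["certThumbprint"] = cert_thumbprint
    if password is not None:
        # Store the certificate password as a SecureString, as in the examples.
        props["password"] = {"type": "SecureString", "value": password}
    return props
```

For example, for a hypothetical `client.pfx` file you might call `client_cert_type_properties(url, pfx_bytes=pathlib.Path("client.pfx").read_bytes(), password="<password of cert>")`.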

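If you use certThumbprint for authentication and the certificate is installed in the personal store of the local computer, grant read permissions to the self-hosted integration runtime:

- Open the Microsoft Management Console (MMC). Add the Certificates snap-in that targets Local Computer.

- Expand Certificates > Personal, and then select Certificates.

- Right-click the certificate from the personal store, and then select All Tasks > Manage Private Keys.

- On the Security tab, add the user account under which the Integration Runtime Host Service (DIAHostService) is running, with read access to the certificate.

- The HTTP connector loads only trusted certificates. If you're using a self-signed certificate or one issued by a non-integrated CA, then to enable trust the certificate must also be installed in one of the following stores:

  - Trusted People

  - Third-Party Root Certification Authorities

  - Trusted Root Certification Authorities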
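The certThumbprint value is the certificate thumbprint that Windows displays, which is the SHA-1 hash of the DER-encoded certificate written in hexadecimal. A value copied from the MMC Details tab can carry spaces or an invisible left-to-right mark (U+200E), so a small normalization step may help. A sketch with hypothetical function names:

```python
import hashlib
import string


def normalize_thumbprint(raw: str) -> str:
    """Keep only hex digits and upper-case them (drops spaces and invisible marks)."""
    return "".join(ch for ch in raw if ch in string.hexdigits).upper()


def thumbprint_from_der(der_bytes: bytes) -> str:
    """Compute the SHA-1 thumbprint of a DER-encoded certificate."""
    return hashlib.sha1(der_bytes).hexdigest().upper()
```

If you have the certificate as PEM text, the standard library's `ssl.PEM_cert_to_DER_cert` converts it to the DER bytes that `thumbprint_from_der` expects.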

Example 1: Using certThumbprint

```json
{
    "name": "HttpLinkedService",
    "properties": {
        "type": "HttpServer",
        "typeProperties": {
            "authenticationType": "ClientCertificate",
            "url": "<HTTP endpoint>",
            "certThumbprint": "<thumbprint of certificate>"
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```

Example 2: Using embeddedCertData

```json
{
    "name": "HttpLinkedService",
    "properties": {
        "type": "HttpServer",
        "typeProperties": {
            "authenticationType": "ClientCertificate",
            "url": "<HTTP endpoint>",
            "embeddedCertData": "<Base64-encoded cert data>",
            "password": {
                "type": "SecureString",
                "value": "<password of cert>"
            }
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```

Using authentication headers

You can also configure request headers for authentication in addition to the built-in authentication types.

Example: Using API key authentication

```json
{
    "name": "HttpLinkedService",
    "properties": {
        "type": "HttpServer",
        "typeProperties": {
            "url": "<HTTP endpoint>",
            "authenticationType": "Anonymous",
            "authHeaders": {
                "x-api-key": {
                    "type": "SecureString",
                    "value": "<API key>"
                }
            }
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```

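Dataset properties

For a full list of sections and properties that are available for defining datasets, see the Datasets article.

Azure Data Factory supports the following file formats. Refer to each article for format-based settings.

The following properties are supported for HTTP under location settings in the format-based dataset:

| Property | Description | Required |
|---|---|---|
| type | The type property under location in the dataset must be set to HttpServerLocation. | Yes |
| relativeUrl | A relative URL to the resource that contains the data. The HTTP connector copies data from the combined URL: [URL specified in linked service][relative URL specified in dataset]. | No |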
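Read literally, the bracket notation above describes plain string concatenation of the two values. The sketch below follows that literal reading only; whether the service also normalizes slashes is not stated here, so the safe convention is to end the base URL with `/` and start relativeUrl without one. The function name `combined_url` is hypothetical.

```python
from typing import Optional


def combined_url(linked_service_url: str, relative_url: Optional[str] = None) -> str:
    """Join the linked-service URL and the dataset relativeUrl (hypothetical helper).

    Assumes plain concatenation, matching the documented notation
    [URL specified in linked service][relative URL specified in dataset].
    """
    if not relative_url:
        # Without relativeUrl, only the linked-service URL is used.
        return linked_service_url
    return linked_service_url + relative_url
```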

Note

The supported HTTP request payload size is around 500 KB. If the payload you want to pass to your web endpoint is larger than 500 KB, consider batching it in smaller chunks.
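One way to follow that advice is to split newline-delimited records into groups whose UTF-8 size stays under a limit, and then send one request per group. A sketch, assuming that "500 KB" means 500 × 1024 bytes and that records can be sent independently; the constant `MAX_PAYLOAD_BYTES` and the helper `batch_records` are hypothetical, because the service states only an approximate size.

```python
from typing import Iterable, List

MAX_PAYLOAD_BYTES = 500 * 1024  # assumption: "around 500 KB" read as 500 KiB


def batch_records(records: Iterable[str], limit: int = MAX_PAYLOAD_BYTES) -> List[str]:
    """Group records into newline-joined payloads no larger than `limit` bytes."""
    batches: List[str] = []
    current: List[str] = []
    size = 0
    for record in records:
        record_size = len(record.encode("utf-8")) + 1  # +1 reserves room for a newline
        if record_size > limit:
            raise ValueError("a single record exceeds the payload limit")
        if current and size + record_size > limit:
            # Adding this record would exceed the limit, so close the current batch.
            batches.append("\n".join(current))
            current, size = [], 0
        current.append(record)
        size += record_size
    if current:
        batches.append("\n".join(current))
    return batches
```

Counting one separator byte per record slightly overestimates each batch, which keeps every joined payload within the limit.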

Example:

```json
{
    "name": "DelimitedTextDataset",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "<HTTP linked service name>",
            "type": "LinkedServiceReference"
        },
        "schema": [ < physical schema, optional, auto retrieved during authoring > ],
        "typeProperties": {
            "location": {
                "type": "HttpServerLocation",
                "relativeUrl": "<relative url>"
            },
            "columnDelimiter": ",",
            "quoteChar": "\"",
            "firstRowAsHeader": true,
            "compressionCodec": "gzip"
        }
    }
}
```
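To see what the dataset settings above ask the service to do, the sketch below decodes a gzip-compressed, comma-delimited response whose first row is a header, using the same columnDelimiter, quoteChar, and firstRowAsHeader values. It relies only on Python's standard `gzip` and `csv` modules, assumes UTF-8 text, and illustrates the format rather than the service's own parser; the function name and sample data are made up.

```python
import csv
import gzip
import io
from typing import Dict, List


def parse_delimited_gzip(
    body: bytes, column_delimiter: str = ",", quote_char: str = '"'
) -> List[Dict[str, str]]:
    """Decompress a gzip body and read it as delimited text with a header row."""
    text = gzip.decompress(body).decode("utf-8")  # assumption: UTF-8 content
    # DictReader treats the first row as the header, like firstRowAsHeader: true.
    reader = csv.DictReader(io.StringIO(text), delimiter=column_delimiter, quotechar=quote_char)
    return list(reader)


if __name__ == "__main__":
    sample = gzip.compress(b'id,name\n1,"Contoso, Ltd."\n2,Fabrikam\n')
    for row in parse_delimited_gzip(sample):
        print(row)
```

The quoted value `"Contoso, Ltd."` shows why quoteChar matters: without it, the embedded comma would split the field.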

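Copy activity properties

This section provides a list of properties that the HTTP source supports.

For a full list of sections and properties that are available for defining activities, see Pipelines.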

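HTTP as source

Azure Data Factory supports the following file formats. Refer to each article for format-based settings.

The following properties are supported for HTTP under storeSettings in the format-based copy source:

| Property | Description | Required |
|---|---|---|
| type | The type property under storeSettings must be set to HttpReadSettings. | Yes |
| requestMethod | The HTTP method. Allowed values are Get (default) and Post. | No |
| additionalHeaders | Additional HTTP request headers. | No |
| requestBody | The body for the HTTP request. | No |
| httpRequestTimeout | The timeout (a TimeSpan value) for the HTTP request to get a response. This is the timeout to get a response, not the timeout to read response data. The default value is 00:01:40. | No |
| maxConcurrentConnections | The upper limit of concurrent connections established to the data store during the activity run. Specify a value only when you want to limit concurrent connections. | No |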
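httpRequestTimeout uses the .NET TimeSpan notation `[d.]hh:mm:ss[.fffffff]`, so the default `00:01:40` means 100 seconds. A small converter can help when you compare this value with client-side timeouts. The sketch handles only non-negative values, and the function name `parse_timespan` is hypothetical.

```python
import re
from datetime import timedelta

# Optional "d." day prefix, hh:mm:ss, optional fraction of up to 7 digits (100-ns ticks).
_TIMESPAN = re.compile(r"^(?:(\d+)\.)?(\d{1,2}):(\d{2}):(\d{2})(?:\.(\d{1,7}))?$")


def parse_timespan(value: str) -> timedelta:
    """Convert a non-negative TimeSpan string such as '00:01:40' to a timedelta."""
    match = _TIMESPAN.match(value)
    if match is None:
        raise ValueError(f"not a TimeSpan value: {value!r}")
    days, hours, minutes, seconds, fraction = match.groups()
    return timedelta(
        days=int(days or 0),
        hours=int(hours),
        minutes=int(minutes),
        seconds=int(seconds),
        # Pad the fraction to 7 digits (ticks), then convert ticks to microseconds.
        microseconds=int((fraction or "0").ljust(7, "0")) / 10,
    )


if __name__ == "__main__":
    print(parse_timespan("00:01:40").total_seconds())  # → 100.0
```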

Example:

```json
"activities":[
    {
        "name": "CopyFromHTTP",
        "type": "Copy",
        "inputs": [
            {
                "referenceName": "<Delimited text input dataset name>",
                "type": "DatasetReference"
            }
        ],
        "outputs": [
            {
                "referenceName": "<output dataset name>",
                "type": "DatasetReference"
            }
        ],
        "typeProperties": {
            "source": {
                "type": "DelimitedTextSource",
                "formatSettings": {
                    "type": "DelimitedTextReadSettings",
                    "skipLineCount": 10
                },
                "storeSettings": {
                    "type": "HttpReadSettings",
                    "requestMethod": "Post",
                    "additionalHeaders": "<header key: header value>\n<header key: header value>\n",
                    "requestBody": "<body for POST HTTP request>"
                }
            },
            "sink": {
                "type": "<sink type>"
            }
        }
    }
]
```
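In this example, additionalHeaders is a single string with one `key: value` pair per line, each terminated by `\n`. The sketch below builds that string from a dictionary and parses it back, which can be handy when headers come from pipeline parameters. The function names are hypothetical, and names or values containing line breaks are rejected because they would break the line-based format.

```python
from typing import Dict


def format_additional_headers(headers: Dict[str, str]) -> str:
    """Render headers as 'key: value' lines, each ending in a newline."""
    lines = []
    for key, value in headers.items():
        if any(ch in f"{key}{value}" for ch in "\r\n"):
            raise ValueError("header names and values must not contain line breaks")
        lines.append(f"{key}: {value}\n")
    return "".join(lines)


def parse_additional_headers(text: str) -> Dict[str, str]:
    """Inverse of format_additional_headers; blank lines are ignored."""
    headers: Dict[str, str] = {}
    for line in text.splitlines():
        if line.strip():
            # Split on the first colon only, so values may contain colons.
            key, _, value = line.partition(":")
            headers[key.strip()] = value.strip()
    return headers


if __name__ == "__main__":
    print(repr(format_additional_headers({"Connection": "keep-alive", "User-Agent": "Mozilla/5.0"})))
```

When the resulting string is embedded in pipeline JSON, each newline appears as the `\n` escape seen in the example.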

Lookup activity properties

To learn details about the properties, check Lookup activity.

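Legacy models

Note

The following models are still supported as-is for backward compatibility. We recommend that you use the new model described in the preceding sections; the authoring UI now generates the new model.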

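Legacy dataset model

| Property | Description | Required |
|---|---|---|
| type | The type property of the dataset must be set to HttpFile. | Yes |
| relativeUrl | A relative URL to the resource that contains the data. When this property isn't specified, only the URL that's specified in the linked service definition is used. | No |
| requestMethod | The HTTP method. Allowed values are Get (default) and Post. | No |
| additionalHeaders | Additional HTTP request headers. | No |
| requestBody | The body for the HTTP request. | No |
| format | If you want to retrieve data from the HTTP endpoint as-is without parsing it, and then copy the data to a file-based store, skip the format section in both the input and output dataset definitions. If you want to parse the HTTP response content during copy, the following file format types are supported: TextFormat, JsonFormat, AvroFormat, OrcFormat, and ParquetFormat. Under format, set the type property to one of these values. For more information, see JSON format, Text format, Avro format, Orc format, and Parquet format. | No |
| compression | Specify the type and level of compression for the data. For more information, see Supported file formats and compression codecs. Supported types: GZip, Deflate, BZip2, and ZipDeflate. Supported levels: Optimal and Fastest. | No |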

Note

The supported HTTP request payload size is around 500 KB. If the payload you want to pass to your web endpoint is larger than 500 KB, consider batching it in smaller chunks.

Example 1: Using the Get method (default)

```json
{
    "name": "HttpSourceDataInput",
    "properties": {
        "type": "HttpFile",
        "linkedServiceName": {
            "referenceName": "<HTTP linked service name>",
            "type": "LinkedServiceReference"
        },
        "typeProperties": {
            "relativeUrl": "<relative url>",
            "additionalHeaders": "Connection: keep-alive\nUser-Agent: Mozilla/5.0\n"
        }
    }
}
```

Example 2: Using the Post method

```json
{
    "name": "HttpSourceDataInput",
    "properties": {
        "type": "HttpFile",
        "linkedServiceName": {
            "referenceName": "<HTTP linked service name>",
            "type": "LinkedServiceReference"
        },
        "typeProperties": {
            "relativeUrl": "<relative url>",
            "requestMethod": "Post",
            "requestBody": "<body for POST HTTP request>"
        }
    }
}
```

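Legacy copy activity source model

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity source must be set to HttpSource. | Yes |
| httpRequestTimeout | The timeout (a TimeSpan value) for the HTTP request to get a response. This is the timeout to get a response, not the timeout to read response data. The default value is 00:01:40. | No |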

Example

```json
"activities":[
    {
        "name": "CopyFromHTTP",
        "type": "Copy",
        "inputs": [
            {
                "referenceName": "<HTTP input dataset name>",
                "type": "DatasetReference"
            }
        ],
        "outputs": [
            {
                "referenceName": "<output dataset name>",
                "type": "DatasetReference"
            }
        ],
        "typeProperties": {
            "source": {
                "type": "HttpSource",
                "httpRequestTimeout": "00:01:00"
            },
            "sink": {
                "type": "<sink type>"
            }
        }
    }
]
```
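Because the new model is recommended, the sketch below maps a legacy HttpFile dataset plus HttpSource settings onto the newer shape, based only on the property tables in this article: relativeUrl moves to the dataset's HttpServerLocation, while requestMethod, additionalHeaders, requestBody, and httpRequestTimeout move to the source's HttpReadSettings. The target format (DelimitedText by default) and the function name `migrate_http_legacy` are assumptions, and legacy format and compression settings are not translated.

```python
from typing import Dict, Tuple

# Legacy properties that live under storeSettings (HttpReadSettings) in the new model.
_READ_SETTING_KEYS = ("requestMethod", "additionalHeaders", "requestBody", "httpRequestTimeout")


def migrate_http_legacy(
    legacy_dataset: Dict, legacy_source: Dict, format_type: str = "DelimitedText"
) -> Tuple[Dict, Dict]:
    """Return (dataset, source) in the format-based model (hypothetical helper)."""
    old_props = legacy_dataset["properties"]
    old_type_props = old_props.get("typeProperties", {})

    location: Dict = {"type": "HttpServerLocation"}
    if "relativeUrl" in old_type_props:
        location["relativeUrl"] = old_type_props["relativeUrl"]

    dataset = {
        "name": legacy_dataset["name"],
        "properties": {
            "type": format_type,
            "linkedServiceName": old_props["linkedServiceName"],
            "typeProperties": {"location": location},
        },
    }

    # Source-level settings (such as httpRequestTimeout) override dataset-level ones.
    merged = {**old_type_props, **legacy_source}
    store_settings: Dict = {"type": "HttpReadSettings"}
    for key in _READ_SETTING_KEYS:
        if key in merged:
            store_settings[key] = merged[key]

    source = {"type": f"{format_type}Source", "storeSettings": store_settings}
    return dataset, source
```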

Related content

For a list of data stores that the Copy activity supports as sources and sinks, see Supported data stores and formats.